Abstract

Aspect extraction is a critical step in constructing knowledge graphs and involves extracting aspect information from unstructured text. Current methods typically employ attention-based techniques such as global or local attention mechanisms, each with significant limitations. Global mechanisms are prone to introducing noise, while local mechanisms face challenges in determining the optimal window size. To address these issues, we propose a novel aspect extraction approach utilizing a multi-scale local attention mechanism (MLA). This method leverages a pre-trained model to convert text into vector representations. Feature extraction is then performed with gated recurrent units, followed by representation learning at various window sizes through the MLA. Features are selected using max pooling and decoded by a fully connected neural network combined with a conditional random field to generate precise aspect labels. Experimental validation on the Zhejiang Cup e-commerce review mining dataset demonstrates that our proposed method outperforms existing models in aspect extraction performance.

Introduction

Aspect extraction1, alternatively termed aspect term extraction (ATE), is pivotal for constructing knowledge graphs as it involves isolating entity-related aspect terms from relevant texts. This technique is particularly instrumental in e-commerce knowledge graphs2 where it applies to the analysis of user reviews and social media data on e-commerce platforms, extracting aspect information to expand the graph. For illustration, Table 1 provides two examples of Chinese review texts from the beauty industry and the computer industry. In both examples, terms underlined represent the aspect terms targeted for extraction.

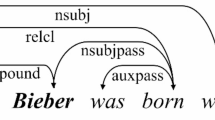

Aspect extraction plays a pivotal role in converting unstructured data into structured data, simplifying information, and highlighting key terms, with extensive research and applications in academia. The development of aspect extraction methods encompasses three main stages: rule-based, traditional machine learning, and deep learning-based approaches. Rule-based methods represent the earliest approach, relying on manually constructed rules and templates to extract aspects from text. For example, Poria et al.3 employed commonsense knowledge and sentence dependency trees to identify explicit and implicit aspects within product reviews. Traditional machine learning-based methods treat aspect extraction as a sequence labeling task. These methods build models by manually selecting and optimizing features, leveraging machine learning principles. Jakob et al.4 first applied the Conditional Random Field (CRF) model to extract product aspects from review texts, achieving notable results. Hamdan et al.5 enhanced the CRF model by incorporating syntactic, lexical, semantic, and sentiment features, proposing a BIO-tagged CRF model that significantly improved extraction performance. However, rule-based methods suffer from dependency on manually constructed templates and poor transferability, while traditional machine learning-based methods necessitate substantial manual feature engineering, which is both labor-intensive and dependent on expert knowledge. Recently, deep learning technology has made significant advances across various fields. Its powerful data representation capabilities have shown great potential and advantages in specific tasks, such as aspect extraction. For example, Ma et al.6 used the Bidirectional Long Short-Term Memory (BiLSTM) and CRF to extract various aspects from encyclopedia entries, allowing for more accurate identification of key information in text data. 
Zhang et al.7 utilized BERT (Bidirectional Encoder Representations from Transformers) and combined it with BiLSTM-CRF, significantly improving cross-domain aspect extraction performance.

The attention mechanism has been extensively incorporated into neural networks for aspect extraction research, resulting in notable performance enhancements. Attention modeling typically employs global or local mechanisms. For instance, Avinash et al.8 used a hierarchical self-attention network to capture the importance of words and the internal dependencies within sentences, assisting in the recognition of aspect terms. Similarly, Hannach et al.9 applied LSTM with an attention mechanism for implicit aspect identification. However, current aspect extraction methods leveraging attention mechanisms exhibit two primary limitations. Firstly, the majority of attention mechanisms operate globally. Global attention mechanisms consider the entire input sequence when processing each target character, assigning weights to evaluate the importance of each character relative to the target character. The absence of explicit constraints in this process may cause characters that are distant from and weakly related to the target character to receive attention weights, thereby introducing noise into the attention distribution vector and impairing aspect extraction performance. Secondly, while CNNs can capture local features by sliding convolutional kernels over the input, they are less effective than attention mechanisms at capturing contextual information and identifying associations of characters near the target character. Although local attention mechanisms can handle local features through the introduction of a window, the optimal window size varies across different contexts, adding uncertainty.

To address the aforementioned limitations, we propose an aspect extraction method based on a multi-scale local attention mechanism. This method leverages local attention to accurately capture the contextual information and adjacent associations of target words. Drawing inspiration from the multi-kernel convolution and feature pooling strategies in convolutional neural networks, we introduce attention windows of different sizes to process features at various ranges. Feature fusion is then performed via pooling layers to obtain the final attention feature vector.

The main contributions of this paper are as follows:

- We propose and design a deep learning framework for aspect extraction tasks, fully utilizing the deep semantic representation capabilities of pre-trained language models, and incorporating attention mechanisms to perform aspect extraction from e-commerce review texts.

- To address the issues of noise introduced by global attention mechanisms and the uncertainty of the optimal window size in traditional local attention mechanisms, we innovatively propose a multi-scale local attention mechanism. This mechanism can model the context and adjacent associations of target words across different window ranges, significantly reducing noise and enhancing the capture of key features.

- Extensive experimental results on the Zhejiang Cup e-commerce review mining dataset show that, compared to existing mainstream methods, the proposed model achieves superior performance in aspect extraction tasks.

Related work

Current aspect extraction methods are primarily based on deep learning. Traditional aspect extraction techniques have been further improved by incorporating pre-trained models and attention mechanisms.

In recent years, pre-trained language models such as ELMo10, BERT11, ALBERT12, and RoBERTa13 have demonstrated significant effectiveness in aspect extraction tasks, owing to their powerful contextual representation capabilities. Unlike traditional word embedding models such as Word2Vec14 and GloVe15, which represent each word as a static vector and are incapable of capturing contextual differences, pre-trained language models learn deep language representations through self-supervised learning on large-scale corpora, enabling them to effectively capture semantic features of context and the meanings of polysemous words16,17. BERT and its variants have been widely applied in aspect extraction tasks, resulting in a range of architectural enhancements. For example, Song et al.18 proposed the AEN-BERT model, which utilizes two independent BERT encoders to model the context and aspect terms separately, achieving strong performance with a lightweight structure. Fadel et al.19 combined contextual string embeddings with BERT and stacked BiLSTM and CRF layers on top to form the BF-BiLSTM-CRF model, enhancing word-level aspect representation and label prediction performance. Karabila et al.20 retrained the BERT model on a customer review corpus and fine-tuned it for aspect-based sentiment analysis tasks, enabling the model to better capture domain-specific features and thereby achieve improved performance. He et al.21 proposed the CABiLSTM-BERT model, which uses a frozen BERT to extract word embeddings and incorporates BiLSTM to retain implicit feature information across layers, enhancing classification performance in aspect-based sentiment analysis.

Although pre-trained models have significantly improved task performance, researchers have identified several persistent challenges in aspect extraction. These challenges include weak semantic associations between aspect terms and their surrounding context, the tendency of multi-word aspect phrases to introduce redundant information or cause imbalanced term weighting, and the difficulty of accurately distinguishing aspects when multiple aspects co-occur. To address these problems, subsequent studies have introduced increasingly sophisticated attention mechanisms to enhance semantic interaction. For example, Lin et al.22 proposed a model that integrates multi-head attention with convolutional neural networks (CNNs). In this approach, CNNs are employed to capture local structural features, while the attention mechanism is used for global modeling. This design improves the model’s ability to extract aspect terms from unstructured data. Similarly, Su et al.23 constructed a multi-layer interactive attention mechanism that emphasizes the deep semantic connections between text structure and content, thereby enhancing the model’s capability to represent hierarchical semantics in context. In response to the issue that global attention mechanisms often introduce noise during feature processing, which makes it difficult to identify the boundaries of aspect terms, Ma et al.24 proposed a position-aware attention mechanism. This method explicitly incorporates positional parameters into the attention computation in order to reduce the influence of distant words. In another study, Wei et al.25 proposed a convolution-like interactive attention mechanism. Drawing on the concept of sliding windows in CNNs, this approach controls the contextual width for each word and performs interactive attention between the convolution-like attention distribution vectors and all words. As a result, it effectively captures important global information and improves aspect tagging performance. 
However, most of the above methods focus primarily on attention modeling at a single scale, making it difficult to capture semantic information at different levels such as word-level and phrase-level. This limitation affects the model’s ability to identify fine-grained aspect boundaries and to adapt to semantic variation.

Proposed model

Sequence labeling

The model described in this paper is specifically developed for analyzing Chinese review texts. Due to the infrequency of single-character aspects in Chinese, we employ the BIO tagging scheme for sequence labeling. Within this method, B indicates the beginning of an aspect, I marks the intermediate or ending part of an aspect, and O represents non-aspect characters. Table 2 provides an illustration of how this labeling is applied.
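The character-level BIO scheme described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' preprocessing code: the function name `bio_tags` and the span format (start and end character offsets, end exclusive) are assumptions introduced here.

```python
def bio_tags(text, aspects):
    """Label each character of `text` with B, I, or O.

    `aspects` is a list of (start, end) character spans, end exclusive.
    The span format is an assumption made for this sketch.
    """
    tags = ["O"] * len(text)
    for start, end in aspects:
        tags[start] = "B"               # first character of the aspect
        for i in range(start + 1, end):
            tags[i] = "I"               # remaining characters of the aspect
    return tags


# The aspect term "味道" (scent) covers characters 0 and 1 of this fragment.
text = "味道清新"
print(list(zip(text, bio_tags(text, [(0, 2)]))))
# [('味', 'B'), ('道', 'I'), ('清', 'O'), ('新', 'O')]
```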

Model architecture

In this paper, we propose an aspect extraction model named Bert-BiGRU-MLA, which utilizes a multi-scale local attention mechanism. The structure of the model is illustrated in Fig. 1. It comprises a pre-trained embedding layer, a Bidirectional Gated Recurrent Unit (BiGRU)26 layer, a multi-scale local attention layer, a feature fusion layer, and a CRF layer. Given a sentence \(S=\{{{s}_{1}},{{s}_{2}},\cdots ,{{s}_{n}}\}\) for extraction, where each \({s}_{i}\) denotes a single Chinese character and n is the total number of characters in the input sentence, the sentence is fed into the BERT layer for encoding, resulting in the distributed representation \(X=\{{{x}_{1}},{{x}_{2}},\cdots ,{{x}_{n}}\}\). X is then input into the BiGRU layer to capture the contextual information of each character, yielding hidden vectors \(H=\{{{h}_{1}},{{h}_{2}},\cdots ,{{h}_{n}}\}\). Next, the multi-scale local attention mechanism is employed to process the hidden vectors H. This mechanism assigns attention weights to each character relative to its neighboring characters, resulting in a set of attention matrices \(\{{{H}^{{{N}_{1}}}},{{H}^{{{N}_{2}}}},\cdots ,{{H}^{{{N}_{k}}}}\}\) for different attention window sizes. The attention matrices are then processed through max-pooling for feature selection and concatenated with the original hidden vectors H to produce the fused feature matrix \(M=\{{{m}_{1}},{{m}_{2}},\cdots ,{{m}_{n}}\}\). Finally, M is input into a fully connected layer for dimensionality reduction and decoded using a CRF model to obtain the predicted labels \(L=\{{{y}_{1}},{{y}_{2}},\cdots ,{{y}_{n}}\}\) for each character, where \({{y}_{i}}\in \{B,I,O\}\).

Encoder layer

BERT is widely used in natural language processing (NLP) for pre-training semantic representations, offering effective representations of text for specific downstream tasks. We employ the pre-trained BERT model to map each character \({{s}_{t}}\) (\(t=1,2,\cdots ,n\)) in the sentence \(S=\{{{s}_{1}},{{s}_{2}},\cdots ,{{s}_{n}}\}\) into a fixed-dimensional vector representation, resulting in the feature matrix \(X=\{{{x}_{1}},{{x}_{2}},\cdots ,{{x}_{n}}\}\) for the sentence S. To enhance the interaction of text information and better capture contextual dependencies, an additional BiGRU is introduced to process the feature matrix X. By combining the forward GRU and backward GRU, we obtain the features associated with each character and its context. The GRU includes an update gate and a reset gate, which control the flow of information within the network. Specifically, the input \({{x}_{t}}\in X\) (i.e., the t-th character) at the current time step t and the hidden state \({{h}_{t-1}}\) from the previous time step are used as inputs to the GRU. The calculation process is as follows:

where \({{U}_{r}}\), \({{U}_{u}}\), \({{U}_{{\tilde{h}}}}\), \({{V}_{r}}\), \({{V}_{u}}\), \({{V}_{{\tilde{h}}}}\) denote weight matrices, tanh is the hyperbolic tangent function, \(\sigma\) is the sigmoid function, and \(\odot\) is element-wise multiplication. \({{r}_{t}}\) denotes the reset gate, which controls the influence of past information on the current candidate hidden state. \({{u}_{t}}\) denotes the update gate, which determines the extent to which the current state is updated. \({{h}_{t}}\) denotes the final output of the GRU, primarily controlled by the update gate \({{u}_{t}}\), which combines the hidden state \({{h}_{t-1}}\) from the previous time step with the current candidate hidden state \({{\tilde{h}}_{t}}\).
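For reference, a standard GRU formulation that is consistent with the symbols defined above can be written as follows. This is a reconstruction under stated assumptions rather than the paper's exact equations: bias terms are omitted, the \(U\) matrices are assumed to act on the input \({{x}_{t}}\) and the \(V\) matrices on the previous hidden state \({{h}_{t-1}}\), and the update gate is assumed to weight the candidate state (some formulations swap \({{u}_{t}}\) and \(1-{{u}_{t}}\)).

\[
\begin{aligned}
{{r}_{t}} &= \sigma ({{U}_{r}}{{x}_{t}}+{{V}_{r}}{{h}_{t-1}}) \\
{{u}_{t}} &= \sigma ({{U}_{u}}{{x}_{t}}+{{V}_{u}}{{h}_{t-1}}) \\
{{\tilde{h}}_{t}} &= \tanh ({{U}_{{\tilde{h}}}}{{x}_{t}}+{{V}_{{\tilde{h}}}}({{r}_{t}}\odot {{h}_{t-1}})) \\
{{h}_{t}} &= (1-{{u}_{t}})\odot {{h}_{t-1}}+{{u}_{t}}\odot {{\tilde{h}}_{t}}
\end{aligned}
\]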

A unidirectional GRU captures only forward semantic information and neglects the impact of reverse semantic information. Consequently, this study employs a BiGRU model, in which the forward GRU captures contextual information preceding the current character, while the backward GRU captures subsequent contextual information, yielding forward and backward hidden states, \({{\vec {h}}_{t}}\) and \({{\overset{\scriptscriptstyle \leftarrow }{h}}_{t}}\), respectively. These states are concatenated to form the final hidden layer representation of the current character, denoted as \({{h}_{t}}=[{{\vec {h}}_{t}},{{\overset{\scriptscriptstyle \leftarrow }{h}}_{t}}]\), thereby generating the output \(H=\{{{h}_{1}},{{h}_{2}},\cdots ,{{h}_{n}}\}\) for the entire input text.

Multi-scale local attention mechanism layer

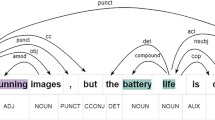

We propose a novel multi-scale local attention mechanism for aspect extraction, specifically designed to focus on the boundary information of aspect terms within the text. This attention mechanism is inspired by the concept of multi-scale convolutional kernels. By integrating local attention networks with different window sizes, the model effectively captures features of aspect terms with varying lengths. This approach avoids the noise interference associated with traditional global attention mechanisms and addresses the issue of determining the optimal window size in local attention mechanisms. The local attention mechanism is illustrated in Fig. 2.

The hidden state \(H=\{{{h}_{1}},{{h}_{2}},\cdots ,{{h}_{n}}\}\) of the original input text is obtained through the encoding layer, where \({{h}_{t}}\) is the hidden state at the current time step t (i.e., the t-th character). A local context window of size \(2N+1\) is defined around \({{h}_{t}}\), with N representing the offset from the current time step. This window includes the hidden states from \(t-N\) to \(t+N\). Within this window, the attention score \(a_{i}^{t}\) for each hidden state \({{h}_{i}}\) (\(i\in [t-N,t+N]\)) for \({{h}_{t}}\) is calculated as follows:

where W, \({{W}_{1}}\), \({{W}_{2}}\) are weight matrices, and \(a_{i}^{t}\) indicates the importance of the i-th character within the window to the current character. Through local attention calculation, the contextual attention weights \(A=\{a_{t-N}^{t},a_{t-N+1}^{t},\cdots ,a_{t+N}^{t}\}\) for the current character are obtained. Further, A is normalized to obtain the normalized attention weights \(\mathsf {{V}}=\{\nu _{t-N}^{t},\nu _{t-N+1}^{t},\cdots ,\nu _{t+N}^{t}\}\), where \(\nu _{i}^{t}\) is calculated as follows:

The normalized attention weights \(\mathsf {{V}}=\{\nu _{t-N}^{t},\nu _{t-N+1}^{t},\cdots ,\nu _{t+N}^{t}\}\) are used to weigh and sum the corresponding hidden states \({{h}_{t-N}},{{h}_{t-N+1}},\cdots ,{{h}_{t+N}}\) within the window, yielding the attention vector \({{h}_{t}}^{N}\) at the current time step t (i.e., the t-th character), as follows:

Thus, the attention features \({{H}^{N}}=\{h_{1}^{N},h_{2}^{N},\cdots ,h_{n}^{N}\}\) are derived after applying the multi-scale local attention mechanism to \(H=\{{{h}_{1}},{{h}_{2}},\cdots ,{{h}_{n}}\}\). By setting local attention windows of different sizes, we can obtain a set of features \(\{{{H}^{{{N}_{1}}}},{{H}^{{{N}_{2}}}},\cdots ,{{H}^{{{N}_{k}}}}\}\) under multi-scale local attention, where \({{N}_{1}},{{N}_{2}},\cdots ,{{N}_{k}}\) represent different offsets and k denotes the number of different local attention windows.
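The windowed scoring, normalization, and weighted sum described above can be sketched in plain Python. Several details are assumptions made for illustration, since the scoring equation itself is not reproduced in this text: an additive score \(w\cdot \tanh ({{W}_{1}}{{h}_{i}}+{{W}_{2}}{{h}_{t}})\) with W treated as a vector `w`, softmax normalization, windows clipped at the sentence edges rather than padded, and parameters shared across window sizes. All function names are hypothetical.

```python
import math
import random


def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in W]


def local_attention(H, N, w, W1, W2):
    """Return H^N: one attention vector per position, using window [t-N, t+N]."""
    n, d = len(H), len(H[0])
    out = []
    for t in range(n):
        lo, hi = max(0, t - N), min(n - 1, t + N)  # clip the window at the edges
        q = matvec(W2, H[t])
        scores = []
        for i in range(lo, hi + 1):
            k = matvec(W1, H[i])
            # Assumed additive score: w . tanh(W1 h_i + W2 h_t)
            scores.append(sum(wj * math.tanh(a + b) for wj, a, b in zip(w, k, q)))
        m = max(scores)                              # stabilise the softmax
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        nu = [e / z for e in exps]                   # normalized weights in the window
        out.append([sum(nu[j] * H[lo + j][c] for j in range(len(nu)))
                    for c in range(d)])
    return out


def multi_scale_local_attention(H, offsets, params):
    """Apply local attention once per offset N, giving k feature matrices."""
    return [local_attention(H, N, *params) for N in offsets]


random.seed(0)
n, d, d_a = 6, 4, 3  # toy sizes: sentence length, hidden size, attention size
H = [[random.uniform(-1, 1) for _ in range(d)] for _ in range(n)]
w = [random.uniform(-1, 1) for _ in range(d_a)]
W1 = [[random.uniform(-1, 1) for _ in range(d)] for _ in range(d_a)]
W2 = [[random.uniform(-1, 1) for _ in range(d)] for _ in range(d_a)]

features = multi_scale_local_attention(H, [1, 2, 3], (w, W1, W2))
print(len(features), len(features[0]), len(features[0][0]))  # 3 6 4
```

Because each output vector is a convex combination of the hidden states inside its window, a larger N lets more distant characters contribute, which is the trade-off the multi-scale design is meant to balance.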

Feature fusion layer

We obtain a set of attention features \(\{{{H}^{{{N}_{1}}}},{{H}^{{{N}_{2}}}},\cdots ,{{H}^{{{N}_{k}}}}\}\) corresponding to different attention window sizes from the multi-scale local attention layer. Inspired by the pooling operations and residual connections in convolutional neural networks, these attention features are further fused. The feature fusion layer is illustrated in Fig. 3.

We use max-pooling, as shown in Eq. (8), to extract key features from the k attention feature matrices.

To address the training degradation problem caused by deepening the network, we use a residual connection to fuse the pooled attention feature matrix with the input of the multi-scale local attention network (i.e., H), resulting in the fused feature \(M=\{{{m}_{1}},{{m}_{2}},\cdots ,{{m}_{n}}\}\), as follows:

where \(\sigma\) is the sigmoid function.
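The fusion step can be illustrated with a short Python sketch. The pooling is taken element-wise across the k attention feature matrices, and the result is concatenated with H, as the model overview describes. Where exactly the sigmoid is applied is not recoverable from this text, so applying it to the pooled features before concatenation is an assumption, as are the function names.

```python
import math


def max_pool(features):
    """Element-wise max over k attention feature matrices, each of shape n x d."""
    n, d = len(features[0]), len(features[0][0])
    return [[max(F[t][c] for F in features) for c in range(d)] for t in range(n)]


def fuse(H, features):
    """Concatenate each h_t with the sigmoid of its pooled attention features.

    The placement of the sigmoid is an assumption made for this sketch.
    """
    pooled = max_pool(features)
    sigmoid = lambda x: 1.0 / (1.0 + math.exp(-x))
    return [h_t + [sigmoid(x) for x in p_t] for h_t, p_t in zip(H, pooled)]


# Toy input: one character, hidden size 2, two window sizes (k = 2).
H = [[0.0, 1.0]]
features = [[[1.0, -2.0]], [[0.5, 0.0]]]
print(max_pool(features))      # [[1.0, 0.0]]
print(len(fuse(H, features)[0]))  # 4
```

Under this reading, each fused vector \({{m}_{t}}\) has twice the dimension of \({{h}_{t}}\), which is why a fully connected layer is needed afterwards for dimensionality reduction.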

CRF layer

CRF is used to enforce constraints between labels to avoid generating invalid label sequences. The fused feature \(M=\{{{m}_{1}},{{m}_{2}},\cdots ,{{m}_{n}}\}\) is passed through a fully connected neural network for dimensionality reduction, yielding the emission scores \(E=\{{{e}_{1}},{{e}_{2}},\cdots ,{{e}_{n}}\}\) required by the CRF. These scores are then input into the CRF for decoding to predict the corresponding labels \(L=\{{{y}_{1}},{{y}_{2}},\cdots ,{{y}_{n}}\}\), where \({{y}_{i}}\in \{B,I,O\}\). The aspect extraction model is trained by optimizing the log-likelihood loss function of the CRF, which is expressed as follows:

where \(y_i\) denotes the ground truth label of the i-th character, \(e_i\) is the corresponding emission score produced by the neural network, and \(W_T\) represents the transition matrix of the CRF layer. During the prediction phase, the Viterbi algorithm is used to compute the predicted label sequence by combining the emission scores E with the transition matrix \({{W}_{T}}\). The detailed procedure of the proposed model is shown in Algorithm 1.
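Viterbi decoding over emission and transition scores can be sketched as follows. This is a minimal illustration that assumes the usual linear-chain path score, namely the sum of the emission scores of the chosen labels plus the transition scores between consecutive labels; start and end transitions are omitted, and the toy scores are hypothetical.

```python
def viterbi(emissions, transitions, labels=("B", "I", "O")):
    """Return the highest-scoring label sequence.

    emissions: n x L list of scores; transitions[i][j]: score of label i -> label j.
    """
    L = len(labels)
    score = list(emissions[0])
    back = []
    for e in emissions[1:]:
        prev, score, ptr = score, [], []
        for j in range(L):
            # Best previous label for arriving at label j.
            best = max(range(L), key=lambda i: prev[i] + transitions[i][j])
            ptr.append(best)
            score.append(prev[best] + transitions[best][j] + e[j])
        back.append(ptr)
    # Trace back from the best final label.
    path = [max(range(L), key=lambda j: score[j])]
    for ptr in reversed(back):
        path.append(ptr[path[-1]])
    return [labels[j] for j in reversed(path)]


# Hypothetical scores: the O -> I transition is heavily penalised because
# an aspect cannot continue without having begun.
transitions = [[0.0, 0.0, 0.0],
               [0.0, 0.0, 0.0],
               [0.0, -100.0, 0.0]]
emissions = [[0.1, 0.0, 1.0],
             [0.2, 1.0, 0.0],
             [0.0, 0.0, 1.0]]
print(viterbi(emissions, transitions))  # ['O', 'B', 'O']
```

Picking the top emission at each position independently would give O, I, O, which is an invalid sequence; the transition penalty steers the decoder to O, B, O instead.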

Experiments

Experimental settings

Dataset

The performance of the Bert-BiGRU-MLA model is evaluated using the Zhejiang Cup e-commerce review mining dataset (Footnote 1), which is divided into two sub-datasets: Makeup and Laptop. Before conducting the experiments, we exclude reviews that lack aspect terms and allocate 80% of the dataset for training and 20% for testing. The details of the refined dataset are presented in Table 3.
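The filtering and 80/20 split can be sketched as below. The record format (a text paired with a list of aspect spans), the shuffling, the fixed seed, and filtering before splitting are all assumptions made for illustration; the paper does not specify these details.

```python
import random


def split_reviews(reviews, train_ratio=0.8, seed=0):
    """Drop reviews without aspect terms, then split into train and test sets.

    reviews: list of (text, aspect_spans) pairs (hypothetical format).
    """
    kept = [r for r in reviews if r[1]]          # keep reviews with >= 1 aspect
    random.Random(seed).shuffle(kept)            # seeded shuffle is an assumption
    cut = int(len(kept) * train_ratio)
    return kept[:cut], kept[cut:]


# Hypothetical toy data: 10 reviews with one aspect each, 2 without any.
reviews = [(f"review {i}", [(0, 2)]) for i in range(10)] + [("a", []), ("b", [])]
train, test = split_reviews(reviews)
print(len(train), len(test))  # 8 2
```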

Experiment setup

The implementation of our proposed method utilizes Python 3.10 and PyTorch 1.12, with training conducted on a server equipped with an Intel Core i7-11800H CPU @ 2.3 GHz and an NVIDIA GeForce RTX 3060 GPU. The BiGRU hidden size is set to 128, the attention vector dimension is set to 256, and the batch size is set to 1. The Adam optimizer is used, with the learning rate set to 0.001. The number of epochs is set to 30.

Baselines

To validate the effectiveness of the proposed Bert-BiGRU-MLA model, we conducted comparative experiments with six current mainstream aspect extraction models. The baseline models for comparison are introduced as follows:

(1) BiLSTM-CRF27: Chai et al. proposed using static word vectors for encoding and implemented aspect extraction using the classic BiLSTM-CRF model.

(2) BiLSTM-ATT28: Zhang et al. introduced a global attention mechanism on top of the BiLSTM-CRF model.

(3) Seq2Seq-PAA24: This model employed an encoder-decoder structure and introduced position-based weights within the attention mechanism.

(4) RoBerta-BiLSTM29: Zhang et al. modified the BiLSTM-CRF model by substituting the traditional word vector model with the RoBERTa pre-trained model for encoding.

(5) Bert-BiLSTM-ATT30: Based on the BiLSTM-ATT model, the BERT pre-trained model was used for encoding.

(6) Bert-BiLSTM-CIA25: Wei et al. employed the BERT pre-trained model for encoding and enhanced the BiLSTM-CRF framework by integrating a convolutional interactive attention mechanism, which allocates attention weights to each character’s context.

Evaluation metrics

During evaluation, a prediction is considered correct if it exactly matches the label. We evaluate our method and baselines using precision (P), recall (R), and F1-score.
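Exact-match evaluation can be sketched as follows. This assumes that "exactly matches" means a predicted aspect span counts as correct only when both of its boundaries agree with a gold span, and that scores are micro-averaged over all sentences; the function names are hypothetical.

```python
def spans(tags):
    """Extract (start, end) aspect spans from a BIO sequence, end exclusive."""
    out, start = [], None
    for i, tag in enumerate(list(tags) + ["O"]):   # sentinel closes a trailing span
        if start is not None and tag != "I":
            out.append((start, i))
            start = None
        if tag == "B":
            start = i
    return set(out)


def prf(gold_seqs, pred_seqs):
    """Micro-averaged precision, recall, and F1 over exactly matching spans."""
    tp = n_pred = n_gold = 0
    for gold, pred in zip(gold_seqs, pred_seqs):
        g, p = spans(gold), spans(pred)
        tp += len(g & p)
        n_pred += len(p)
        n_gold += len(g)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = [["B", "I", "O", "B"]]
pred = [["B", "O", "O", "B"]]   # first aspect truncated, second one correct
print(prf(gold, pred))          # (0.5, 0.5, 0.5)
```

In the toy example the truncated first span earns no partial credit, which shows why boundary errors are costly under this metric.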

Experimental results and analysis

Overall experimental results comparison

We conducted experiments on the Zhejiang Cup e-commerce review mining Makeup and Laptop datasets with our proposed model and the comparison models. The comparative results are presented in Table 4. The results for the comparison models are obtained by replicating the models from the original papers and testing them on the dataset used in this study. The table shows that our model consistently achieves the best performance on both datasets.

Analysis of the results in Table 4 indicates that the BiLSTM-ATT model, which incorporates a global attention mechanism, shows an improvement in F1-score and other metrics compared to the BiLSTM-CRF aspect extraction model. However, because the global attention mechanism allocates attention weights to every character in the context of the target character, weakly related characters can introduce noise into the final attention vector, so the improvement is modest. The Seq2Seq-PAA model, addressing the shortcomings of the global attention mechanism in aspect extraction, integrates position-based weights to diminish the noise from distant features, leading to significant improvements in metrics. Compared to BiLSTM-ATT, the F1-score on the Makeup and Laptop datasets increased by 1.65% and 1.3%, respectively.

The introduction of pre-trained models significantly enhances the performance of aspect extraction models. Integrating RoBERTa with BiLSTM-CRF leads to F1-score increases of 2.51% and 2.98% on the Makeup and Laptop datasets, respectively. Similarly, incorporating BERT into BiLSTM-ATT results in F1-score improvements of 2.18% and 3.53%, primarily due to the pre-trained models’ superior capability to extract semantic features, which strengthens the ability to extract aspect terms from texts. Additionally, the Bert-BiLSTM-CIA model uses BERT and a convolutional interactive attention mechanism to mitigate noise from global attention and enhance interaction capabilities, achieving F1-score increases of 0.84% and 1.2%, respectively, compared to Bert-BiLSTM-ATT. Unlike the Bert-BiLSTM-CIA model, our proposed Bert-BiGRU-MLA model leverages the strengths of BERT and BiGRU and employs a multi-scale local attention mechanism to capture the contextual information and local relevance of target characters. It incorporates diverse window sizes to merge attention features across multiple scales, which both diminishes the noise introduced by the global attention mechanism and alleviates the uncertainty involved in selecting optimal window sizes for existing local attention mechanisms. Compared to the baseline models, our model achieves the best P, R, and F1-scores on both datasets, demonstrating its superiority in aspect extraction tasks.

Ablation study

To evaluate the contribution of the BiGRU encoder layer and the multi-scale local attention (MLA) mechanism to the overall performance of the model, we conducted the following ablation experiments:

- To analyze the role of BiGRU in enhancing text information interaction and modeling contextual dependencies, we constructed a variant without the BiGRU layer, referred to as w/o BiGRU, while keeping all other components unchanged.

- The key to aspect extraction lies in improving the model’s ability to capture local features around aspect terms and their contextual boundaries. CNNs have been widely used in previous aspect extraction studies31,32 for modeling local features. To verify the effectiveness of the proposed MLA mechanism in local feature modeling, we designed two comparative settings by replacing MLA with single-kernel CNN (denoted as w/ CNN) and multi-kernel CNN (denoted as w/ Multi-CNN), respectively, while maintaining the rest of the architecture unchanged.

All experiments were conducted on the Makeup dataset, and the results are shown in Table 5. The results indicate that removing the BiGRU layer leads to a decrease in P, R, and F1-score, suggesting that BiGRU plays an important role in capturing long-range bidirectional semantic dependencies and contributes to better global semantic modeling. Furthermore, when comparing different local feature modeling methods, the proposed MLA mechanism consistently outperforms both CNN and Multi-CNN across all metrics. This demonstrates that MLA is more effective in focusing on multi-scale contextual information and extracting critical local boundary features and semantic cues, which helps achieve more accurate identification of aspect term boundaries.

Impact of number of windows on model performance

We set different numbers of local attention windows to explore variations in model performance. The attention window size is 2N+1, with N indicating the offsets to the left and right of the current timestep. Five scenarios are considered, with k values of 1, 2, 3, 4, and 5. The specific window sizes for each scenario are as follows: 1) For k=1, N is set to 1, which means the window size is 3; 2) For k=2, N is set to 1 and 2, which means the window size is 3 and 5; 3) For k=3, N is set to 1, 2, and 3, which means the window size is 3, 5, and 7; 4) For k=4, N is set to 1, 2, 3, and 4, which means the window size is 3, 5, 7, and 9; 5) For k=5, N is set to 1, 2, 3, 4, and 5, which means the window size is 3, 5, 7, 9, and 11. Experiments are conducted on the Makeup dataset for each scenario mentioned above. The results are presented in Fig. 4.
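The five scenarios follow a simple rule: for a given k, the offsets are N = 1, ..., k and each window has size 2N + 1. A short Python sketch (the helper name `window_sizes` is hypothetical) makes the mapping explicit:

```python
def window_sizes(k):
    """Window sizes 2N + 1 for offsets N = 1..k."""
    return [2 * N + 1 for N in range(1, k + 1)]


for k in range(1, 6):
    print(k, window_sizes(k))
# 1 [3]
# 2 [3, 5]
# 3 [3, 5, 7]
# 4 [3, 5, 7, 9]
# 5 [3, 5, 7, 9, 11]
```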

For the aspect extraction task, only predictions that exactly match the labels are considered correct during evaluation, so the balance between precision and recall must be taken into account. We therefore focus on the impact of the number of windows on the F1-score. As shown in Fig. 4, the F1-score (blue line) initially increases with the number of windows, reaches its maximum at 3 windows, and then declines. The decline occurs because most aspect terms range from 2 to 5 characters, so the larger windows introduce noise.

Analysis of attention weight visualization

To demonstrate the effect of the multi-scale local attention mechanism, two review texts, “速度快,购物体验感好,我很喜欢 (The speed is fast, the shopping experience is great, I really like it)” and “使用了下,味道清新,还不错,也不油腻,会再来!(Used it, the scent is refreshing, it’s pretty good, and not greasy. I’ll definitely come back!)”, are randomly selected. The aspect words in these texts are visualized after processing through a multi-scale local attention layer with window sizes of 3, 5, and 7, respectively, as shown in Fig. 5. The effectiveness of the multi-scale local attention mechanism is elucidated by analyzing the following three scenarios.

(1) As Fig. 6 illustrates, with an observation window size of 3, local attention mechanisms allocate substantial weight to the focal character, and neighboring characters receive minimal weight. Moreover, for boundary characters of aspect terms such as “购(shopping)” and “味(scent)”, the surrounding non-aspect characters are assigned significantly lower weights than the aspect characters. This demonstrates that local attention with a window size of 3 not only preserves the inherent features of aspect characters but also effectively differentiates between non-aspect and aspect characters at the boundaries of aspect terms.

(2) Figure 7 illustrates the distribution of weights at the boundaries of the longer aspect term “购物体验感 (shopping experience)” for window sizes of 5 and 7. With a window size of 5, the total weight assigned to non-aspect characters for “购 (shopping)” is approximately 0.294; when the window size increases to 7, this total reduces to about 0.257. Generally, connections among aspect characters are more cohesive. When calculating weights for aspect characters, particularly at the boundaries of aspect terms, excessive weighting of non-aspect characters may introduce noise. Hence, larger window sizes prove more effective in handling longer aspect terms.

(3) Figure 8 displays the weight distribution at the boundaries of the shorter aspect term “味道 (scent)” for window sizes of 5 and 7. With a window size of 5, the total weight assigned to non-aspect characters for “味 (scent)” is approximately 0.4278, while with a window size of 7, it is about 0.875. For shorter aspect terms, smaller windows introduce less noise. Therefore, smaller window sizes have an advantage in processing shorter aspect terms.

In summary, local attention windows of varying sizes exhibit distinct advantages and limitations. The multi-scale local attention mechanism introduced in this study offers enhanced flexibility to accommodate aspect terms of differing lengths.

Conclusion

In this paper, we propose an aspect extraction model that leverages a multi-scale local attention mechanism. This mechanism facilitates representation learning from input texts across different attention window sizes and enhances aspect extraction by integrating multiple local attention features. This strategy effectively mitigates the issues of noise typically associated with global attention mechanisms and addresses the difficulties in determining optimal local attention window sizes due to the diverse lengths of aspect terms. Experimental results demonstrate that our proposed model outperforms existing models on the Zhejiang Cup e-commerce review mining dataset, achieving superior extraction performance.

Data availability

The dataset that supports the findings of this study is available from the corresponding author upon reasonable request.

References

Pontiki, M. et al. Semeval-2014 task 4: Aspect based sentiment analysis. In Nakov, P. & Zesch, T. (eds.) Proceedings of the 8th International Workshop on Semantic Evaluation, SemEval@COLING 2014, Dublin, Ireland, August 23-24, 2014, 27–35 (The Association for Computer Linguistics, Dublin, 2014).

Pujara, J., Miao, H., Getoor, L. & Cohen, W. W. Knowledge graph identification. In Alani, H. et al. (eds.) The Semantic Web - ISWC 2013 - 12th International Semantic Web Conference, Sydney, NSW, Australia, October 21-25, 2013, Proceedings, Part I, vol. 8218, 542–557 (Springer, Heidelberg, 2013).

Poria, S., Cambria, E., Ku, L., Gui, C. & Gelbukh, A. F. A rule-based approach to aspect extraction from product reviews. In Lin, S., Ku, L., Cambria, E. & Kuo, T. (eds.) Proceedings of the Second Workshop on Natural Language Processing for Social Media, SocialNLP@COLING 2014, Dublin, Ireland, August 24, 2014, 28–37 (Association for Computational Linguistics and Dublin City University, Dublin, 2014).

Jakob, N. & Gurevych, I. Extracting opinion targets in a single and cross-domain setting with conditional random fields. In Proceedings of the 2010 Conference on Empirical Methods in Natural Language Processing, EMNLP 2010, 9-11 October 2010, MIT Stata Center, Massachusetts, USA, A meeting of SIGDAT, a Special Interest Group of the ACL, 1035–1045 (ACL, Massachusetts, 2010).

Hamdan, H., Bellot, P. & Béchet, F. Lsislif: CRF and logistic regression for opinion target extraction and sentiment polarity analysis. In Cer, D. M., Jurgens, D., Nakov, P. & Zesch, T. (eds.) Proceedings of the 9th International Workshop on Semantic Evaluation, SemEval@NAACL-HLT 2015, Denver, Colorado, USA, June 4-5, 2015, 753–758 (The Association for Computer Linguistics, Colorado, 2015).

Ma, J., Yang, Y. & Chen, W. Distant supervision for person attribute recognition. J. Chin. Inform. Process. 34, 64–72 (2020).

Zhang, S. et al. Pre-trained language models for product attribute extraction. J. Chin. Inform. Process. 36, 56–64 (2022).

Kumar, A. et al. Aspect term extraction for opinion mining using a hierarchical self-attention network. Neurocomputing 465, 195–204 (2021).

Hannach, H. E. & Benkhalifa, M. A new semantic relations-based hybrid approach for implicit aspect identification in sentiment analysis. J. Inf. Knowl. Manag. 19, 2050019:1–2050019:37 (2020).

Peters, M. E. et al. Deep contextualized word representations. In Walker, M. A., Ji, H. & Stent, A. (eds.) Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2018, New Orleans, Louisiana, USA, June 1-6, 2018, Volume 1 (Long Papers), 2227–2237 (Association for Computational Linguistics, 2018).

Devlin, J., Chang, M., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In Burstein, J., Doran, C. & Solorio, T. (eds.) Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, June 2-7, 2019, Volume 1 (Long and Short Papers), 4171–4186 (Association for Computational Linguistics, Minneapolis, 2019).

Lan, Z. et al. ALBERT: A lite BERT for self-supervised learning of language representations. In 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020 (OpenReview.net, New Orleans, 2020).

Liu, Y. et al. Roberta: A robustly optimized BERT pretraining approach. CoRR abs/1907.11692 (2019).

Mikolov, T., Sutskever, I., Chen, K., Corrado, G. S. & Dean, J. Distributed representations of words and phrases and their compositionality. In Burges, C. J. C., Bottou, L., Ghahramani, Z. & Weinberger, K. Q. (eds.) Advances in Neural Information Processing Systems 26: 27th Annual Conference on Neural Information Processing Systems 2013. Proceedings of a meeting held December 5-8, 2013, Lake Tahoe, Nevada, United States, 3111–3119 (2013).

Pennington, J., Socher, R. & Manning, C. D. Glove: Global vectors for word representation. In Moschitti, A., Pang, B. & Daelemans, W. (eds.) Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, EMNLP 2014, October 25-29, 2014, Doha, Qatar, A meeting of SIGDAT, a Special Interest Group of the ACL, 1532–1543 (ACL, Doha, 2014).

Zhen, Y., Li, Y., Zhang, P., Yang, Z. & Zhao, R. Frequent words and syntactic context integrated biomedical discontinuous named entity recognition method. J. Supercomput. 79, 13670–13695 (2023).

Su, J., Yu, S. & Luo, D. Enhancing aspect-based sentiment analysis with capsule network. IEEE Access 8, 100551–100561 (2020).

Song, Y., Wang, J., Jiang, T., Liu, Z. & Rao, Y. Attentional encoder network for targeted sentiment classification. arXiv preprint arXiv:1902.09314 (2019).

Fadel, A. S., Saleh, M. E. & Abulnaja, O. A. Arabic aspect extraction based on stacked contextualized embedding with deep learning. IEEE Access 10, 30526–30535 (2022).

Karabila, I., Darraz, N., El-Ansari, A., Alami, N. & Mallahi, M. E. Bert-enhanced sentiment analysis for personalized e-commerce recommendations. Multim. Tools Appl. 83, 56463–56488 (2024).

He, B., Zhao, R. & Tang, D. Cabilstm-bert: Aspect-based sentiment analysis model based on deep implicit feature extraction. Knowl. Based Syst. 309, 112782 (2025).

Lin, J. & Liu, E. Research on named entity recognition method of metro on-board equipment based on multiheaded self-attention mechanism and cnn-bilstm-crf. Comput. Intell. Neurosci. 2022, 6374988 (2022).

Su, M., Wu, H., Li, J., Huang, J. & Zhang, S. Aemia: Extracting commodity attributes based on multi-level interactive attention mechanism. Data Analy. Knowl. Discovery 7, 108–118 (2023).

Ma, D., Li, S., Wu, F., Xie, X. & Wang, H. Exploring sequence-to-sequence learning in aspect term extraction. In Korhonen, A., Traum, D. R. & Màrquez, L. (eds.) Proceedings of the 57th Conference of the Association for Computational Linguistics, ACL 2019, Florence, Italy, July 28- August 2, 2019, Volume 1: Long Papers, 3538–3547 (Association for Computational Linguistics, Florence, 2019).

Wei, Z. et al. Convolutional interactive attention mechanism for aspect extraction. J. Comput. Res. Dev. 57, 2456–2466 (2020).

Chung, J., Gulcehre, C., Cho, K. & Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. In NIPS 2014 Workshop on Deep Learning, December 2014 (2014).

Chai, J., Shang, W. & Cao, J. Aspect term extraction based on bilstm-crf model. In Yao, Z., Xu, S., Ma, J., Du, W. & Lu, W. (eds.) 22nd IEEE/ACIS International Conference on Computer and Information Science, ICIS 2022, Zhuhai, China, June 26-28, 2022, 205–211 (IEEE, 2022).

Zhang, S., Zhu, H. Y., Xu, H., Zhu, G. & Li, K. A named entity recognition method towards product reviews based on bilstm-attention-crf. Int. J. Comput. Sci. Eng. 25, 479–489 (2022).

Liu, P. & Lv, S. Chinese roberta distillation for emotion classification. Comput. J. 66, 3107–3118 (2023).

Li, D., Yan, L., Yang, J. & Ma, Z. Dependency syntax guided bert-bilstm-gam-crf for chinese NER. Expert Syst. Appl. 196, 116682 (2022).

Xu, H., Liu, B., Shu, L. & Yu, P. S. Double embeddings and cnn-based sequence labeling for aspect extraction. In Gurevych, I. & Miyao, Y. (eds.) Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, ACL 2018, Melbourne, Australia, July 15-20, 2018, Volume 2: Short Papers, 592–598 (2018).

He, J. et al. A local and global context focus multilingual learning model for aspect-based sentiment analysis. IEEE Access 10, 84135–84146 (2022).

Acknowledgements

This work was funded by the National Key R&D Program of China (2023YFB2904000, 2023YFB2904004), the Jiangsu Key Development Planning Project (BE2023004-2), and the Future Network Scientific Research Fund Project (No. FNSRFP-2021-YB-15).

Author information

Contributions

Conceptualization, QY, JZ and HD; validation, QY, JZ, WW and HX; writing—original draft preparation, QY and JZ; writing—review and editing, QY, SS and CY; supervision, YJ.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yang, Q., Zhu, J., Du, H. et al. A multi-scale local attention mechanism for aspect extraction. Sci Rep 15, 23420 (2025). https://doi.org/10.1038/s41598-025-06234-z
