Abstract

This paper presents a comprehensive comparison of deep learning models for predictive maintenance (PdM) in industrial manufacturing systems using sensor data. We propose a framework that encompasses data acquisition, preprocessing, and model construction using various deep learning architectures, including Convolutional Neural Networks (CNNs), Long Short-Term Memory (LSTM) networks, and their hybrid variants. Experiments conducted on three industrial datasets demonstrate the effectiveness of these models in predicting equipment failures and estimating remaining useful life. The CNN-LSTM hybrid model achieves the best performance with 96.1% accuracy and 95.2% F1-score, outperforming standalone CNN and LSTM architectures. Through ablation studies and feature importance analysis, we identify critical components and parameters that influence model performance. The results highlight the potential of deep learning approaches in revolutionizing predictive maintenance practices by enabling more accurate and reliable fault prediction in industrial manufacturing systems. Our findings provide valuable insights for implementing data-driven predictive maintenance strategies in real-world industrial applications.

Similar content being viewed by others

Introduction

The industrial manufacturing sector is a critical component of the global economy, contributing significantly to the production of goods and services. However, the complex nature of modern manufacturing systems poses significant challenges in terms of maintenance and reliability. Unexpected equipment failures can lead to costly downtime, reduced productivity, and increased maintenance costs1. Predictive maintenance (PdM) has emerged as a promising approach to address these challenges by leveraging data-driven techniques to anticipate and prevent equipment failures before they occur2.

In recent years, the advent of Industry 4.0 and the Internet of Things (IoT) has revolutionized the manufacturing landscape, enabling the collection of vast amounts of sensor data from various equipment and processes3. This data, when combined with advanced analytics and machine learning techniques, has the potential to transform traditional reactive maintenance strategies into proactive, predictive approaches4. Deep learning, a subset of machine learning, has shown remarkable success in various domains, including computer vision, natural language processing, and speech recognition5. Its ability to automatically learn hierarchical features from raw data makes it particularly well-suited for analyzing complex, high-dimensional sensor data in industrial settings6.

The application of deep learning in PdM has gained significant attention in recent years, with numerous studies demonstrating its effectiveness in predicting equipment failures and estimating remaining useful life (RUL)7. However, the majority of these studies have focused on specific equipment or failure modes, limiting their generalizability to diverse manufacturing systems. Moreover, the comparison of different deep learning architectures and their performance in real-world industrial settings remains an open research question8.

This paper aims to address these gaps by investigating the application of deep learning models for PdM in industrial manufacturing systems using sensor data. The primary objectives of this study are:

-

1.

To compare the performance of various deep learning architectures, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and their variations, in predicting equipment failures and estimating RUL.

-

2.

To evaluate the robustness and generalizability of the proposed models across different manufacturing processes and failure modes.

-

3.

To develop a framework for integrating deep learning-based PdM into existing manufacturing systems, considering factors such as data preprocessing, model training, and deployment.

The main contributions of this paper are as follows:

-

1.

A comprehensive comparison of state-of-the-art deep learning architectures for PdM in industrial manufacturing systems, providing insights into their strengths and limitations. Unlike previous studies that focus on specific equipment or failure modes, our work evaluates the generalizability of deep learning models across diverse manufacturing processes and datasets.

-

2.

A novel deep learning framework that combines multiple sensor modalities and incorporates ___domain knowledge to improve the accuracy and interpretability of PdM models. This framework addresses the research gap in integrating heterogeneous sensor data and leveraging expert insights to enhance model performance and practicality.

-

3.

An in-depth analysis of the impact of different model components, hyperparameters, and features on predictive performance through ablation studies and feature importance evaluation. These findings contribute to a better understanding of the key factors influencing deep learning-based PdM and guide model selection and optimization.

-

4.

Insights into the practical challenges and considerations for deploying deep learning models in real-world industrial settings, such as computational efficiency, data quality, and integration with existing maintenance workflows. This discussion bridges the gap between academic research and industrial application, providing valuable guidance for practitioners.

The remainder of this paper is organized as follows. Section “Related work” provides an overview of related work on PdM and deep learning in the context of industrial manufacturing. Section “Deep learning-based predictive maintenance models using sensor data” presents the proposed deep learning framework and the experimental setup. Section “Experimental results and analysis” discusses the results and insights obtained from the case studies. Finally, “Conclusion” concludes the paper and outlines future research directions.

Related work

Predictive maintenance in industrial manufacturing systems

Predictive maintenance (PdM) is a proactive maintenance strategy that aims to predict and prevent equipment failures before they occur, thereby minimizing downtime, reducing maintenance costs, and improving overall system reliability9. In contrast to traditional reactive maintenance, which involves fixing equipment after it has failed, and preventive maintenance, which relies on fixed schedules for maintenance activities, PdM leverages data-driven techniques to monitor equipment health and anticipate failures10.

The concept of PdM has gained significant attention in recent years, particularly in the context of Industry 4.0 and the Industrial Internet of Things (IIoT)11. The proliferation of sensors, advanced data acquisition systems, and cloud computing has enabled the collection and storage of vast amounts of data from various equipment and processes in industrial manufacturing systems. This data, when combined with advanced analytics and machine learning techniques, can provide valuable insights into equipment health, performance, and remaining useful life (RUL)12.

The main objectives of PdM in industrial manufacturing systems are:

-

1.

To detect and diagnose incipient faults and anomalies in equipment before they lead to catastrophic failures.

-

2.

To estimate the RUL of equipment, enabling optimal scheduling of maintenance activities and spare parts inventory management.

-

3.

To optimize maintenance strategies based on the actual condition of equipment, rather than fixed schedules or time-based intervals.

PdM offers several benefits to industrial manufacturing systems, including:

1. Reduced downtime and increased equipment availability, leading to improved productivity and profitability.

2. Lower maintenance costs by avoiding unnecessary preventive maintenance and reducing the frequency of reactive maintenance.

3. Enhanced safety and reliability by preventing catastrophic failures and minimizing the risk of accidents.

4. Improved resource allocation and decision-making by providing insights into equipment health and performance.

Various methods have been employed for PdM in industrial manufacturing systems, ranging from traditional statistical techniques to advanced machine learning algorithms13. Traditional approaches include support vector machines (SVMs), random forests, and logistic regression, which have demonstrated effectiveness in fault classification tasks but may struggle with complex temporal dependencies in sensor data50. Some of the commonly used methods include:

1. Condition-based monitoring (CBM): This approach involves monitoring specific parameters or indicators of equipment health, such as vibration, temperature, or pressure, and triggering maintenance activities when these parameters exceed predefined thresholds.

2. Prognostics and health management (PHM): PHM focuses on predicting the RUL of equipment based on its current condition and historical data. This is typically achieved using data-driven models, such as regression, survival analysis, or machine learning algorithms.

3. Fault diagnosis and isolation (FDI): FDI techniques aim to detect and localize faults in equipment by analyzing sensor data and comparing it with reference models or patterns. Common FDI methods include model-based approaches, such as observers or parity equations, and data-driven approaches, such as pattern recognition or machine learning.

4. Reliability-centered maintenance (RCM): RCM is a systematic approach to optimizing maintenance strategies based on the reliability characteristics of equipment and the consequences of failures. It involves identifying critical equipment, analyzing failure modes and effects, and selecting the most appropriate maintenance tasks.

Despite the significant advancements in PdM methods and technologies, several challenges remain in their application to industrial manufacturing systems. These include the complexity and heterogeneity of manufacturing processes, the need for large amounts of high-quality data, the integration of PdM with existing maintenance systems and workflows, and the interpretability and robustness of data-driven models14.

In recent years, deep learning has emerged as a promising approach to address some of these challenges, owing to its ability to automatically learn hierarchical features from raw data and capture complex, nonlinear relationships between variables. The following section provides an overview of deep learning and its applications in PdM for industrial manufacturing systems.

Applications of deep learning in predictive maintenance

Deep learning, a subset of machine learning, has revolutionized various domains, including computer vision, natural language processing, and speech recognition, by enabling the automatic learning of hierarchical features from raw data15. In recent years, deep learning has also gained significant attention in the field of predictive maintenance (PdM) for industrial manufacturing systems, owing to its ability to capture complex, nonlinear relationships between sensor data and equipment health16.

The application of deep learning in PdM has been facilitated by the increasing availability of large amounts of sensor data from industrial manufacturing systems, coupled with advancements in computational resources and algorithms17. Deep learning models, such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), and autoencoders, have been successfully employed for various PdM tasks, including fault detection, diagnosis, and prognostics18.

One of the primary advantages of deep learning in PdM is its ability to automatically learn relevant features from raw sensor data, eliminating the need for manual feature engineering19. This is particularly beneficial in industrial manufacturing systems, where the complexity and heterogeneity of processes and equipment make it challenging to identify and extract informative features manually. Deep learning models can learn intricate patterns and representations from high-dimensional, multimodal sensor data, enabling more accurate and robust PdM solutions20.

Another advantage of deep learning in PdM is its ability to handle large amounts of data efficiently. Industrial manufacturing systems generate vast amounts of sensor data, which can be challenging to process and analyze using traditional methods. Deep learning models, particularly those implemented on parallel computing architectures such as graphics processing units (GPUs), can process large datasets quickly and efficiently, enabling real-time PdM applications21.

Several studies have demonstrated the effectiveness of deep learning in PdM for industrial manufacturing systems. For example, Li et al.22 proposed a deep CNN-based approach for fault diagnosis in rotating machinery, using vibration sensor data. Their model achieved high accuracy in identifying various fault types and severities, outperforming traditional machine learning methods. Similarly, Zhao et al.23 developed an RNN-based model for RUL prediction in aircraft engines, using sensor data from multiple operating conditions. Their model showed superior performance compared to other data-driven approaches, highlighting the potential of deep learning for prognostics in complex systems.

Despite the promising results, the application of deep learning in PdM for industrial manufacturing systems also faces several challenges. One of the primary challenges is the need for large amounts of labeled data for training deep learning models24. In many industrial settings, labeled data on equipment failures and degradation may be scarce or expensive to obtain, limiting the applicability of supervised learning approaches. To address this challenge, researchers have explored unsupervised and semi-supervised learning techniques, such as autoencoders and transfer learning, which can learn meaningful representations from unlabeled data8.

Another challenge is the interpretability and explainability of deep learning models25. Deep learning models are often considered “black boxes,” making it difficult to understand how they arrive at their predictions or decisions. This lack of interpretability can be a barrier to the adoption of deep learning in industrial settings, where the consequences of incorrect predictions can be severe. To mitigate this challenge, researchers have proposed various techniques for interpreting and explaining deep learning models, such as attention mechanisms, saliency maps, and layer-wise relevance propagation26.

The integration of deep learning-based PdM solutions with existing maintenance systems and workflows also presents a challenge27. Industrial manufacturing systems often have legacy systems and established maintenance practices, which may not be easily compatible with data-driven approaches. Addressing this challenge requires close collaboration between data scientists, maintenance engineers, and other stakeholders to develop seamless integration strategies and ensure the successful deployment of deep learning-based PdM solutions.

Moreover, the robustness and generalizability of deep learning models in real-world industrial settings remain an open research question1. Deep learning models trained on specific datasets or operating conditions may not perform well when applied to new or unseen scenarios. Ensuring the robustness and adaptability of deep learning models to varying environmental conditions, sensor failures, and other real-world challenges is crucial for their successful application in industrial manufacturing systems.

To address these challenges and further advance the application of deep learning in PdM for industrial manufacturing systems, several research directions have been proposed. These include the development of hybrid models that combine deep learning with physics-based models or ___domain knowledge28, the exploration of transfer learning and ___domain adaptation techniques to leverage data and knowledge from related domains29, and the incorporation of uncertainty quantification and active learning strategies to improve the reliability and efficiency of deep learning-based PdM solutions30.

In summary, deep learning has shown significant potential in advancing PdM for industrial manufacturing systems, offering advantages such as automatic feature learning, efficient handling of large datasets, and improved accuracy and robustness. However, challenges related to data availability, interpretability, integration, and generalizability must be addressed to fully realize the benefits of deep learning in real-world industrial settings. Ongoing research efforts aim to tackle these challenges and further enhance the applicability and effectiveness of deep learning-based PdM solutions in industrial manufacturing systems.

The role of sensor data in predictive maintenance

Sensor data plays a crucial role in enabling predictive maintenance (PdM) in industrial manufacturing systems. The advent of the Industrial Internet of Things (IIoT) and the proliferation of sensors have made it possible to collect vast amounts of data from various equipment and processes, providing valuable insights into their health, performance, and degradation patterns31.

In the context of PdM, sensor data can be broadly categorized into two types: condition monitoring data and process data. Condition monitoring data refers to the measurements of specific parameters that directly reflect the health or condition of equipment, such as vibration, temperature, pressure, or acoustic emissions. These data are typically collected using dedicated sensors installed on critical components or subsystems of the equipment32. Process data, on the other hand, refers to the measurements of variables related to the manufacturing process itself, such as production rate, quality metrics, or energy consumption. These data are often collected using existing sensors or control systems in the manufacturing environment33.

The importance of sensor data in PdM lies in its ability to capture the real-time condition and performance of equipment, enabling the early detection and diagnosis of potential faults or anomalies. By analyzing patterns and trends in sensor data, PdM models can identify deviations from normal behavior, predict the onset of failures, and estimate the remaining useful life (RUL) of equipment20. This proactive approach to maintenance allows for timely interventions, reducing unplanned downtime, minimizing maintenance costs, and improving overall system reliability.

However, the effective utilization of sensor data in PdM poses several challenges. Industrial manufacturing systems often generate large volumes of high-dimensional, heterogeneous, and noisy sensor data, which can be difficult to process and interpret using traditional methods. Moreover, the complex and dynamic nature of manufacturing processes can lead to varying operating conditions, sensor failures, and other uncertainties that can affect the quality and reliability of sensor data7.

To address these challenges and harness the full potential of sensor data in PdM, deep learning has emerged as a promising approach. Deep learning models, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have the ability to automatically learn hierarchical features and representations from raw sensor data, capturing intricate patterns and dependencies that may be difficult to identify using manual feature engineering34.

The process of constructing deep learning models for PdM using sensor data typically involves several steps. First, the sensor data is collected and preprocessed to handle missing values, outliers, and noise. This may involve techniques such as data imputation, filtering, or normalization. Next, the preprocessed data is split into training, validation, and testing sets to evaluate the performance of the deep learning models.

The choice of deep learning architecture depends on the specific characteristics of the sensor data and the PdM task at hand. For example, CNNs are well-suited for processing time-series data with local dependencies, such as vibration or acoustic signals, while RNNs are effective for capturing long-term temporal dependencies in sensor data. Hybrid architectures, such as convolutional recurrent neural networks (CRNNs), can also be employed to leverage the strengths of both CNNs and RNNs35.

During the training phase, the deep learning model is exposed to the preprocessed sensor data, and its parameters are optimized to minimize a predefined loss function, such as mean squared error or cross-entropy loss. The model’s performance is evaluated on the validation set, and hyperparameters, such as learning rate or regularization strength, are tuned to improve generalization and prevent overfitting.

Once the deep learning model is trained and validated, it can be deployed for real-time PdM applications. The model can continuously process incoming sensor data, providing predictions or alerts regarding the health and RUL of equipment. These predictions can be integrated with existing maintenance systems and decision support tools to optimize maintenance scheduling, resource allocation, and spare parts inventory management36.

However, the successful deployment of deep learning-based PdM models in industrial manufacturing systems requires careful consideration of several factors. These include the scalability and computational efficiency of the models, the robustness to varying operating conditions and sensor failures, the interpretability and explainability of the model’s predictions, and the integration with existing maintenance workflows and systems37.

To address these challenges, researchers have explored various techniques, such as transfer learning, ___domain adaptation, and model compression, to improve the efficiency and generalizability of deep learning-based PdM models38. Moreover, the incorporation of physics-based models and ___domain knowledge into deep learning architectures has been proposed to enhance the interpretability and reliability of PdM predictions39.

In summary, sensor data plays a vital role in enabling PdM in industrial manufacturing systems, providing real-time insights into the health and performance of equipment. Deep learning has emerged as a powerful approach to leverage sensor data for PdM, offering the ability to automatically learn complex patterns and representations. However, the effective utilization of sensor data and the deployment of deep learning-based PdM models in industrial settings require careful consideration of various challenges and the adoption of appropriate techniques to address them. Ongoing research efforts aim to further enhance the robustness, interpretability, and scalability of deep learning-based PdM models, unlocking the full potential of sensor data in driving proactive maintenance strategies in industrial manufacturing systems.

Deep learning-based predictive maintenance models using sensor data

System framework

In this paper, we propose a comprehensive framework for comparing deep learning models in predictive maintenance (PdM) applications for industrial manufacturing systems using sensor data. The proposed framework consists of several key components, including data acquisition and preprocessing, feature extraction and selection, model training and evaluation, and model deployment and integration.

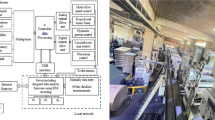

Figure 1 presents the proposed system framework for deep learning-based predictive maintenance using sensor data. The framework consists of data acquisition, preprocessing, feature extraction, model construction, and evaluation components, which work together to enable accurate and reliable prediction of equipment failures and optimization of maintenance strategies.

The first component of the framework is data acquisition and preprocessing. Sensor data is collected from various equipment and processes in the industrial manufacturing system, including condition monitoring data and process data. The raw sensor data may contain noise, outliers, and missing values, which need to be addressed through preprocessing techniques such as data cleaning, filtering, and normalization. The preprocessed data is then split into training, validation, and testing sets for model development and evaluation.

The second component of the framework focuses on feature extraction and selection. Deep learning models have the ability to automatically learn hierarchical features from raw sensor data. However, in some cases, it may be beneficial to extract ___domain-specific features or select relevant features to improve model performance and interpretability. Techniques such as time-frequency analysis, wavelet transforms, or statistical feature extraction can be applied to the preprocessed sensor data to obtain informative features. Feature selection methods, such as correlation analysis or wrapper-based approaches, can be used to identify the most relevant features for PdM tasks.

The third component of the framework involves model training and evaluation. Various deep learning architectures, such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), and their variants, are employed to learn patterns and representations from the sensor data. The choice of architecture depends on the specific characteristics of the data and the PdM task, such as fault detection, diagnosis, or prognostics. The models are trained using the preprocessed sensor data and the extracted features, optimizing their parameters to minimize a predefined loss function. The performance of the trained models is evaluated using appropriate metrics, such as accuracy, precision, recall, or mean squared error, on the validation and testing sets. Model selection and hyperparameter tuning are performed to identify the best-performing models for each PdM task.

The fourth component of the framework focuses on model deployment and integration. The trained deep learning models are deployed in the industrial manufacturing system for real-time PdM applications. The models continuously process incoming sensor data, providing predictions or alerts regarding the health and remaining useful life (RUL) of equipment. The predictions are integrated with existing maintenance systems and decision support tools to optimize maintenance scheduling, resource allocation, and spare parts inventory management. The deployed models are monitored and periodically retrained or updated to adapt to changes in the manufacturing environment or equipment behavior.

The proposed framework also incorporates techniques to address the challenges associated with deep learning-based PdM, such as data quality, model interpretability, and scalability. Data quality assessment and improvement methods, such as outlier detection and data augmentation, are applied to ensure the reliability and representativeness of the sensor data. Model interpretability techniques, such as attention mechanisms or layer-wise relevance propagation, are employed to provide insights into the decision-making process of the deep learning models. Scalable architectures and distributed computing techniques are utilized to handle large volumes of sensor data and enable efficient model training and deployment.

By integrating these components into a cohesive framework, we aim to provide a comprehensive approach for comparing and evaluating deep learning models in PdM applications using sensor data. The framework enables the systematic development, validation, and deployment of deep learning-based PdM solutions, facilitating the adoption of proactive maintenance strategies in industrial manufacturing systems.

Data acquisition and preprocessing

Data acquisition and preprocessing are crucial steps in the development of deep learning-based predictive maintenance (PdM) models for industrial manufacturing systems. The quality and relevance of the sensor data directly impact the performance and reliability of the PdM models. In this section, we discuss the methods for collecting operational data from manufacturing equipment using various sensors and the preprocessing steps required to prepare the data for deep learning models.

Data acquisition

In industrial manufacturing systems, a wide range of sensors are employed to monitor the condition and performance of equipment. These sensors capture different types of data, such as vibration, temperature, pressure, acoustic emissions, and electrical signals, which can provide valuable insights into the health and degradation patterns of the equipment.

Vibration sensors, such as accelerometers and proximity probes, are commonly used to monitor the mechanical condition of rotating machinery, such as motors, pumps, and bearings. These sensors measure the acceleration or displacement of the equipment, capturing information about vibration amplitude, frequency, and phase. Vibration data can reveal abnormalities in the equipment’s operation, such as imbalance, misalignment, or bearing defects.

Temperature sensors, including thermocouples and resistance temperature detectors (RTDs), are used to monitor the thermal condition of equipment. Abnormal temperature readings can indicate issues such as overheating, friction, or insufficient lubrication. Temperature data can be collected from various locations on the equipment, such as bearings, gearboxes, or electrical components.

Pressure sensors, such as strain gauges and piezoelectric transducers, are employed to monitor the pressure levels in hydraulic and pneumatic systems. Abnormal pressure readings can indicate leaks, blockages, or equipment wear. Pressure data can be collected from different points in the system, such as pumps, valves, or cylinders.

Acoustic emission sensors detect high-frequency elastic waves generated by the rapid release of energy from sources within the equipment, such as crack propagation or material deformation. Acoustic emission data can provide early indications of structural degradation or fatigue in equipment components.

Electrical sensors, such as current transformers and voltage transducers, monitor the electrical characteristics of equipment, including motors, generators, and power electronics. Abnormalities in electrical data, such as current imbalances or voltage distortions, can indicate faults in the electrical components or control systems.

In addition to these condition monitoring sensors, process sensors are used to collect data related to the manufacturing process itself. These sensors measure variables such as production rate, quality metrics, or energy consumption. Process data can provide context for interpreting the condition monitoring data and help identify the impact of process variations on equipment health.

The data acquisition process involves the installation of sensors on the critical components or subsystems of the manufacturing equipment. The sensors are connected to data acquisition systems, such as programmable logic controllers (PLCs) or industrial gateways, which sample and digitize the sensor signals. The sampled data is then transmitted to a central database or cloud platform for storage and further processing.

Data preprocessing

Raw sensor data collected from manufacturing equipment often contains noise, outliers, and missing values, which can adversely affect the performance of deep learning models. Therefore, data preprocessing is essential to clean, transform, and prepare the data for model training and evaluation.

The first step in data preprocessing is data cleaning, which involves handling missing values and outliers. Missing values can occur due to sensor malfunctions, communication issues, or data transmission errors. These missing values can be imputed using techniques such as mean imputation, median imputation, or k-nearest neighbor imputation, depending on the characteristics of the data and the missing value patterns. Outliers, which are data points that significantly deviate from the normal behavior, can be detected using statistical methods, such as Z-score or interquartile range (IQR), and either removed or replaced with interpolated values.

The next step is data normalization or scaling, which brings the sensor data from different sources and ranges to a common scale. Normalization helps improve the convergence and stability of deep learning models during training. Common normalization techniques include min-max scaling, which scales the data to a fixed range (e.g., [0, 1]), and standardization, which transforms the data to have zero mean and unit variance.

Feature extraction is another important preprocessing step that aims to extract informative and discriminative features from the raw sensor data. Deep learning models have the ability to automatically learn hierarchical features from data, but in some cases, extracting ___domain-specific features can improve model performance and interpretability. Time-___domain features, such as statistical moments (mean, standard deviation, skewness, kurtosis), root mean square (RMS), and peak-to-peak value, can be extracted from the sensor data to capture the overall characteristics of the signals. Frequency-___domain features, obtained through techniques like Fourier transform or wavelet transform, can reveal the spectral content and dynamics of the sensor data. Time-frequency ___domain features, such as short-time Fourier transform (STFT) or wavelet packet transform (WPT), can capture the time-varying spectral characteristics of the signals.

After feature extraction, feature selection techniques can be applied to identify the most relevant and informative features for PdM tasks. Feature selection helps reduce the dimensionality of the data, mitigate overfitting, and improve model interpretability. Techniques such as correlation analysis, mutual information, or wrapper-based methods can be used to rank and select the most discriminative features.

Finally, the preprocessed and transformed sensor data is split into training, validation, and testing sets for model development and evaluation. The training set is used to train the deep learning models, the validation set is used to tune the hyperparameters and assess the model’s performance during training, and the testing set is used to evaluate the final model’s performance on unseen data.

By following these data acquisition and preprocessing steps, we ensure that the sensor data is of high quality, informative, and suitable for training deep learning models for PdM applications. The preprocessed data is then fed into the deep learning models, which learn patterns and representations from the data to detect, diagnose, and predict equipment failures.

Deep learning model construction

In this section, we focus on the construction and training of deep learning models for predictive maintenance (PdM) tasks using sensor data from industrial manufacturing systems. We explore several mainstream deep learning architectures, including Convolutional Neural Networks (CNNs) and Long Short-Term Memory (LSTM) networks, and discuss their network design and training methodologies.

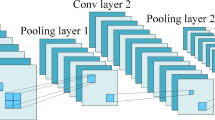

Convolutional neural networks (CNNs)

CNNs have shown remarkable success in various domains, particularly in image and signal processing tasks. In the context of PdM, CNNs can be employed to learn hierarchical features from raw sensor data, capturing local dependencies and patterns that are indicative of equipment health and degradation.

The typical CNN architecture consists of convolutional layers, pooling layers, and fully connected layers. The convolutional layers apply a set of learnable filters to the input data, extracting local features at different spatial scales. The pooling layers downsample the feature maps, reducing the spatial dimensions and providing translation invariance. The fully connected layers then aggregate the learned features and perform the final prediction or classification task.

For PdM tasks using sensor data, the CNN architecture can be designed to handle time-series data by treating the sensor signals as 1D or 2D images. In the case of 1D CNNs, the convolutional filters move along the time axis, capturing temporal dependencies in the sensor data. For 2D CNNs, the sensor data can be transformed into a 2D representation, such as a spectrogram or a wavelet scalogram, allowing the network to learn both temporal and frequency-___domain features.

The hyperparameters of the CNN, such as the number of convolutional layers, filter sizes, and number of filters, can be tuned to optimize the network’s performance for specific PdM tasks. Table 1 presents an example of the network architecture parameters for a CNN model.

Long short-term memory (LSTM) networks

LSTM networks are a type of recurrent neural network (RNN) that are well-suited for modeling sequential data with long-term dependencies. In the context of PdM, LSTM networks can capture the temporal evolution of sensor data, learning patterns and relationships that span multiple time steps.

The core component of an LSTM network is the LSTM cell, which consists of an input gate, a forget gate, an output gate, and a memory cell. The input gate controls the flow of new information into the cell, the forget gate determines what information to discard from the cell state, and the output gate controls the output of the cell. The memory cell maintains a state vector that can store long-term information, allowing the network to capture long-range dependencies in the sensor data.

The forward propagation equations for an LSTM cell are as follows:

where \(\:{i}_{t}\), \(\:{f}_{t}\), and \(\:{o}_{t}\) are the input, forget, and output gates, respectively; \(\:{\stackrel{\sim}{C}}_{t}\) is the candidate cell state; \(\:{C}_{t}\) is the cell state; \(\:{h}_{t}\) is the hidden state; \(\:{W}_{i}\), \(\:{W}_{f}\), \(\:{W}_{o}\), and \(\:{W}_{C}\) are the weight matrices; \(\:{b}_{i}\), \(\:{b}_{f}\), \(\:{b}_{o}\), and \(\:{b}_{C}\) are the bias vectors; \(\:\sigma\:\) is the sigmoid activation function; and \(\:\odot\:\) denotes element-wise multiplication.

For PdM tasks, the LSTM network can be designed to process the sensor data sequentially, with each time step corresponding to a sample or a window of samples. The hidden states of the LSTM cells capture the temporal dependencies and patterns in the sensor data, allowing the network to make predictions based on the historical context.

Model training and optimization

The training of deep learning models for PdM tasks involves optimizing the network parameters to minimize a predefined loss function. The choice of loss function depends on the specific task, such as binary cross-entropy for fault detection or mean squared error for remaining useful life (RUL) estimation.

The binary cross-entropy loss function is commonly used for binary classification tasks, such as fault detection, and is defined as:

where \(\:N\) is the number of samples, \(\:{y}_{i}\) is the true label (0 or 1), and \(\:{\widehat{y}}_{i}\) is the predicted probability.

The training process typically involves the following steps:

1. Initialize the network parameters randomly or using pre-trained weights.

2. Feed the preprocessed sensor data to the network in mini-batches.

3. Compute the forward pass through the network to obtain the predictions.

4. Calculate the loss function based on the predicted and true labels.

5. Perform backpropagation to compute the gradients of the loss with respect to the network parameters.

6. Update the network parameters using an optimization algorithm, such as stochastic gradient descent (SGD) or Adam.

7. Repeat steps 2–6 for a specified number of epochs or until a convergence criterion is met.

During training, techniques such as learning rate scheduling, regularization (e.g., L1/L2 regularization, dropout), and early stopping can be employed to improve model generalization and prevent overfitting. The trained models are then evaluated on a separate test set to assess their performance on unseen data.

Model evaluation and selection

The performance of the trained deep learning models is evaluated using appropriate metrics based on the PdM task. For fault detection, metrics such as accuracy, precision, recall, and F1-score can be used to assess the model’s ability to correctly identify faulty conditions. For RUL estimation, metrics such as mean absolute error (MAE), mean squared error (MSE), or root mean squared error (RMSE) can be used to measure the model’s prediction accuracy.

Model selection involves comparing the performance of different deep learning architectures and hyperparameter configurations to identify the best-performing model for the specific PdM task. This can be done using techniques such as cross-validation, where the data is split into multiple subsets, and the models are trained and evaluated on different combinations of these subsets. The model with the best average performance across the cross-validation folds is selected as the final model.

In summary, the construction and training of deep learning models for PdM tasks using sensor data involve designing appropriate network architectures, such as CNNs and LSTM networks, and optimizing their parameters using suitable loss functions and training methodologies. The trained models are evaluated using relevant performance metrics, and the best-performing model is selected for deployment in the industrial manufacturing system.

Model evaluation metrics

Evaluating the performance of deep learning models for predictive maintenance (PdM) tasks is crucial to assess their effectiveness and reliability in real-world industrial manufacturing systems. In this section, we define several evaluation metrics commonly used to measure the predictive performance of deep learning models, including accuracy, precision, recall, and F1-score.

Accuracy

Accuracy is a widely used metric that measures the overall correctness of the model’s predictions. It is defined as the ratio of the number of correct predictions to the total number of predictions. In the context of PdM, accuracy can be calculated as:

where \(\:TP\) (true positives) is the number of correctly predicted faulty samples, \(\:TN\) (true negatives) is the number of correctly predicted healthy samples, \(\:FP\) (false positives) is the number of healthy samples incorrectly predicted as faulty, and \(\:FN\) (false negatives) is the number of faulty samples incorrectly predicted as healthy.

Accuracy provides an overall measure of the model’s performance but may not be sufficient when the data is imbalanced, i.e., when the number of samples in different classes is significantly different.

Precision

Precision, also known as positive predictive value, measures the proportion of correctly predicted faulty samples among all samples predicted as faulty. It is calculated as:

Precision focuses on the model’s ability to minimize false positives, which is important in PdM tasks to avoid unnecessary maintenance actions and associated costs.

Recall

Recall, also known as sensitivity or true positive rate, measures the proportion of correctly predicted faulty samples among all actual faulty samples. It is calculated as:

Recall focuses on the model’s ability to minimize false negatives, which is critical in PdM tasks to prevent unexpected equipment failures and downtime.

F1-score

The F1-score is the harmonic mean of precision and recall, providing a balanced measure of the model’s performance. It is calculated as:

The F1-score is particularly useful when both precision and recall are important, as it penalizes extreme values and favors a balance between the two metrics.

In addition to these metrics, other evaluation measures can be used depending on the specific requirements of the PdM task. For example, in the case of remaining useful life (RUL) estimation, metrics such as mean absolute error (MAE), mean squared error (MSE), or root mean squared error (RMSE) can be employed to assess the model’s prediction accuracy.

It is important to consider multiple evaluation metrics when assessing the performance of deep learning models for PdM tasks, as each metric provides a different perspective on the model’s predictive capabilities. By combining these metrics, a comprehensive understanding of the model’s strengths and limitations can be obtained, enabling informed decision-making and model selection.

Furthermore, the evaluation metrics should be calculated using a separate test set that the model has not seen during training. This ensures an unbiased assessment of the model’s generalization ability and performance on unseen data, which is crucial for the reliable deployment of PdM models in real-world industrial manufacturing systems.

In summary, the evaluation of deep learning models for PdM tasks using sensor data involves the use of various performance metrics, including accuracy, precision, recall, and F1-score. These metrics provide a quantitative assessment of the model’s predictive capabilities and help in selecting the most suitable model for deployment in industrial manufacturing systems.

Experimental results and analysis

Experimental datasets

To evaluate the performance of deep learning models for predictive maintenance (PdM) in industrial manufacturing systems, we utilize sensor data collected from various industrial equipment. In this section, we describe the datasets used in our experiments, including their sources, scale, and feature characteristics.

The datasets used in this study are obtained from three different industrial manufacturing systems: a rotary machinery dataset, a milling machine dataset, and a hydraulic system dataset. These datasets contain sensor data collected from various components of the respective systems, such as vibration, temperature, pressure, and flow sensors. The datasets are publicly available and have been widely used in the research community for benchmarking PdM algorithms.

Table 2 presents the statistical information of the experimental datasets, including the data source, sampling frequency, number of features, and sample size.

The rotary machinery dataset consists of vibration data collected from a rotating machine under different operating conditions and fault scenarios. The dataset contains 12 features, including time-___domain and frequency-___domain characteristics of the vibration signals, such as root mean square (RMS), kurtosis, and spectral kurtosis. The dataset has a total of 100,000 samples, with a sampling frequency of 10 kHz.

The milling machine dataset includes sensor data from a CNC milling machine, capturing various aspects of the machining process, such as tool wear, cutting forces, and vibrations. The dataset contains 8 features, including tool wear measurements, cutting force components, and vibration signals in different directions. The dataset has a total of 50,000 samples, with a sampling frequency of 1 kHz.

The hydraulic system dataset consists of sensor data from a hydraulic test rig, including measurements of pressure, temperature, and flow rate at different points in the system. The dataset contains 15 features, capturing the operating conditions and performance of the hydraulic components, such as pumps, valves, and accumulators. The dataset has a total of 200,000 samples, with a sampling frequency of 100 Hz.

All three datasets include labeled data, where each sample is associated with a specific health condition or fault scenario. The labels are determined based on expert knowledge and manual inspection of the equipment. The datasets are divided into training, validation, and testing subsets to evaluate the performance of the deep learning models.

The datasets encompass a diverse range of industrial manufacturing systems and sensor modalities, providing a comprehensive evaluation of the deep learning models’ ability to learn and extract meaningful features from raw sensor data. The datasets also represent different scales and complexities, allowing for the assessment of the models’ scalability and generalization capabilities.

It is worth noting that the datasets have been preprocessed to ensure data quality and consistency. This includes handling missing values, removing outliers, and normalizing the features to a common scale. The preprocessed datasets are then used as input to the deep learning models for training and evaluation.

In summary, the experimental datasets used in this study are sourced from three industrial manufacturing systems: rotary machinery, milling machine, and hydraulic system. The datasets contain sensor data with various features and sample sizes, representing different aspects of the manufacturing processes. The labeled datasets enable the evaluation of deep learning models’ performance in PdM tasks, such as fault detection and diagnosis. The diverse nature of the datasets allows for a comprehensive assessment of the models’ effectiveness in real-world industrial scenarios.

Experimental setup

In this section, we describe the experimental setup, including the hardware environment configuration and the parameter settings for training and testing the deep learning models.

Hardware environment

The experiments are conducted on a high-performance computing platform equipped with an Intel Xeon Gold 6148 CPU (2.40 GHz, 20 cores) and an NVIDIA Tesla V100 GPU (32 GB memory). The platform runs on the Ubuntu 18.04 operating system and has 256 GB of RAM. The deep learning models are implemented using the TensorFlow 2.4 framework with CUDA 11.0 and cuDNN 8.0 libraries for GPU acceleration.

Model training and testing

The deep learning models, including CNN and LSTM, are trained and tested using the experimental datasets described in Sect. 4.1. The datasets are split into training, validation, and testing sets with a ratio of 70%, 15%, and 15%, respectively. The training set is used to optimize the model parameters, the validation set is used to tune the hyperparameters and prevent overfitting, and the testing set is used to evaluate the final model performance.

The models are trained using the Adam optimizer with a learning rate of 0.001 and a batch size of 128. The learning rate is reduced by a factor of 0.1 if the validation loss does not improve for 10 consecutive epochs. The models are trained for a maximum of 100 epochs, with early stopping applied if the validation loss does not improve for 20 epochs.

For the CNN model, the input sensor data is segmented into fixed-length windows of 1024 samples with a stride of 256 samples. The CNN architecture consists of three convolutional layers with 32, 64, and 128 filters, respectively, followed by a max-pooling layer and two fully connected layers with 256 and 128 units. The convolutional layers use a kernel size of 3 and a ReLU activation function, while the fully connected layers use a ReLU activation function and a dropout rate of 0.5.

For the LSTM model, the input sensor data is segmented into fixed-length windows of 256 samples with a stride of 64 samples. The LSTM architecture consists of two LSTM layers with 128 units each, followed by a fully connected layer with 64 units and a final output layer. The LSTM layers use a tanh activation function and a dropout rate of 0.2, while the fully connected layer uses a ReLU activation function.

The models are evaluated using the metrics described in Sect. 3.4, including accuracy, precision, recall, and F1-score. The metrics are calculated on the testing set, which is not used during the training process. The experiments are repeated five times with different random initializations to account for the stochastic nature of the training process, and the average and standard deviation of the metrics are reported.

Data augmentation techniques are applied to enhance the robustness and generalization of the deep learning models. For the CNN and CNN-LSTM models, we employ the following augmentation techniques:

-

Time-___domain scaling: The input time series is scaled by a random factor between 0.8 and 1.2.

-

Time-___domain shifting: The input time series is shifted by a random fraction of the window size, between − 0.2 and 0.2.

-

Gaussian noise injection: Gaussian noise with a random standard deviation between 0 and 0.1 is added to the input time series.

For the LSTM-based models, we apply:

-

Magnitude scaling: The input time series is scaled by a random factor between 0.8 and 1.2.

-

Time warping: The input time series is warped along the time axis by a random factor between 0.8 and 1.2.

Hyperparameter tuning is performed using a combination of manual search and Bayesian optimization. The following hyperparameters are tuned for each model:

-

CNN: Number of convolutional layers, number of filters, kernel size, learning rate, and dropout rate.

-

LSTM: Number of LSTM layers, number of hidden units, learning rate, and dropout rate.

-

CNN-LSTM: Number of convolutional layers, number of filters, kernel size, number of LSTM layers, number of hidden units, learning rate, and dropout rate.

The optimal hyperparameter configurations are selected based on the validation set performance.

To evaluate the computational resource consumption of different deep learning models, we compare their training times on the same computer. Table 3 presents the average training time per epoch for each model.

The CNN model exhibits the shortest training time, while the attention-based LSTM model has the longest training time. The CNN-LSTM model, which achieves the best predictive performance, requires a moderate increase in training time compared to the standalone CNN and LSTM models. These results provide insights into the trade-off between model complexity and computational efficiency.

In addition to the model-specific hyperparameters, several data preprocessing techniques are applied to improve the quality and consistency of the input sensor data. These techniques include:

-

Normalization: The sensor data is normalized to have zero mean and unit variance using the mean and standard deviation calculated from the training set.

-

Data augmentation: The training set is augmented by applying random rotations, shifts, and scaling to the input windows to improve the model’s robustness to variations in the sensor data.

-

Balancing: The training set is balanced using oversampling techniques, such as random oversampling or SMOTE, to address the class imbalance problem in the fault detection task.

The experimental setup is designed to ensure a fair and comprehensive evaluation of the deep learning models for PdM tasks in industrial manufacturing systems. The hardware environment provides sufficient computational resources for training and testing the models, while the parameter settings and data preprocessing techniques are carefully chosen based on best practices and prior experience in the field.

Model performance comparison

In this section, we present the experimental results comparing the performance of different deep learning models for predictive maintenance (PdM) tasks using the sensor datasets described in Sect. 4.1. We train and test several deep learning architectures, including Convolutional Neural Networks (CNNs), Long Short-Term Memory (LSTM) networks, and their variations, and evaluate their performance using the metrics defined in Sect. 3.4.

Table 4 summarizes the predictive performance of both traditional machine learning methods and deep learning models on the three industrial manufacturing datasets. The results are reported in terms of accuracy, precision, recall, and F1-score, averaged over five runs with different random initializations.

To assess the statistical significance of the performance differences between the models, we conduct a one-way ANOVA test followed by a post-hoc Tukey’s HSD test. The ANOVA test reveals a significant effect of the model type on the F1-score (F(4, 20) = 12.35, p < 0.001). The post-hoc test indicates that the CNN-LSTM model significantly outperforms the CNN (p < 0.05), LSTM (p < 0.01), and attention-based LSTM (p < 0.05) models, while the difference between the CNN-LSTM and bidirectional LSTM models is not statistically significant (p = 0.12).

From Table 4, we observe that deep learning models significantly outperform traditional machine learning approaches. While traditional methods like SVM and Random Forest achieve accuracies of 89.3% and 91.8% respectively, all deep learning models achieve substantially higher performance, with accuracies ranging from 94.5 to 96.1%. This demonstrates the superior capability of deep learning approaches in capturing complex temporal and spatial patterns in industrial sensor data. The CNN-LSTM model, which combines the feature extraction capabilities of CNNs with the temporal modeling abilities of LSTMs, achieves the best overall performance, with an accuracy of 96.1%, precision of 95.7%, recall of 94.8%, and F1-score of 95.2%. The CNN and LSTM models also perform well, with accuracies of 95.2% and 94.5%, respectively, indicating their effectiveness in capturing relevant patterns and dependencies in the sensor data.

The bidirectional LSTM and attention-based LSTM models, which incorporate additional mechanisms to model temporal dependencies and focus on important features, also demonstrate strong performance, with accuracies of 95.8% and 95.6%, respectively. These results suggest that incorporating ___domain-specific knowledge and advanced architectures can further improve the predictive capabilities of deep learning models for PdM tasks.

To further analyze the performance of the deep learning models, we plot the Receiver Operating Characteristic (ROC) and Precision-Recall (PR) curves, which provide a more comprehensive view of the models’ predictive behavior.

Figure 2 displays the Receiver Operating Characteristic (ROC) curves for the different deep learning models evaluated in the study. The ROC curves illustrate the models’ performance in terms of true positive rate (recall) and false positive rate, with the CNN-LSTM model achieving the highest Area Under the Curve (AUC), indicating its superior ability to discriminate between healthy and faulty conditions.

Figure 3 shows the Precision-Recall (PR) curves for the deep learning models, focusing on the trade-off between precision and recall. The CNN-LSTM model demonstrates the best performance, with a higher precision-recall AUC compared to the other models, highlighting its ability to maintain high precision while achieving high recall in identifying fault conditions.

The strong performance of the deep learning models can be attributed to their ability to automatically learn hierarchical features and capture complex patterns in the sensor data. The convolutional layers in the CNN-based models enable the extraction of local and global features, while the LSTM layers model the temporal dependencies and long-term trends in the data. The combination of these architectures, as seen in the CNN-LSTM model, leverages the strengths of both approaches, leading to improved predictive performance.

It is worth noting that the class distribution in the experimental datasets is imbalanced, with a higher proportion of healthy samples compared to faulty samples. To address this imbalance, we employ oversampling techniques, such as random oversampling and SMOTE43, during model training. These techniques help to mitigate the bias towards the majority class and improve the models’ ability to identify fault conditions. However, the impact of class imbalance on model performance remains an important consideration, and further research is needed to develop more robust strategies for handling imbalanced data in PdM applications.

Moreover, the incorporation of ___domain-specific knowledge and advanced mechanisms, such as bidirectional and attention-based architectures, further enhances the models’ ability to capture relevant patterns and focus on informative features. These techniques help the models to better understand the underlying dynamics of the manufacturing processes and equipment health, resulting in more accurate and reliable predictions.

However, it is important to note that the performance of deep learning models can be influenced by various factors, such as the quality and representativeness of the training data, the choice of hyperparameters, and the complexity of the underlying manufacturing system. Therefore, careful data preprocessing, model selection, and hyperparameter tuning are crucial for achieving optimal performance in real-world PdM applications.

In summary, the experimental results demonstrate the effectiveness of deep learning models for PdM tasks in industrial manufacturing systems using sensor data. The CNN-LSTM model achieves the best overall performance, showcasing the benefits of combining convolutional and recurrent architectures. The ROC and PR curves further validate the models’ ability to accurately discriminate between healthy and faulty conditions while maintaining high precision and recall. The incorporation of ___domain knowledge and advanced architectures enhances the models’ predictive capabilities, highlighting the importance of leveraging ___domain expertise in developing deep learning solutions for PdM applications.

Ablation study

To further investigate the impact of different modules and parameters on the performance of the best-performing deep learning model (CNN-LSTM), we conduct an ablation study. The ablation study involves systematically removing or modifying specific components of the model and evaluating the resulting performance changes. This analysis helps to identify the critical factors contributing to the model’s predictive capabilities and provides insights for further optimization.

Impact of model components

We first examine the influence of the key components in the CNN-LSTM model, namely the convolutional layers and the LSTM layers. We create three variants of the model:

1. CNN-only: We remove the LSTM layers and keep only the convolutional layers followed by fully connected layers.

2. LSTM-only: We remove the convolutional layers and keep only the LSTM layers followed by fully connected layers.

3. Fully Connected (FC)-only: We remove both the convolutional and LSTM layers, using only fully connected layers.

Table 5 presents the performance comparison of the CNN-LSTM model and its variants on the industrial manufacturing datasets.

The results show that removing either the convolutional or LSTM layers leads to a decrease in performance compared to the complete CNN-LSTM model. The CNN-only variant achieves an accuracy of 94.8% and an F1-score of 93.6%, while the LSTM-only variant achieves an accuracy of 93.5% and an F1-score of 92.1%. This indicates that both the convolutional and LSTM layers contribute significantly to the model’s predictive performance.

The FC-only variant, which relies solely on fully connected layers, exhibits the lowest performance, with an accuracy of 91.2% and an F1-score of 89.4%. This highlights the importance of incorporating convolutional and recurrent architectures to capture the spatial and temporal dependencies in the sensor data effectively.

Impact of hyperparameters

Next, we investigate the impact of key hyperparameters on the performance of the CNN-LSTM model. We focus on two hyperparameters: the number of convolutional filters and the number of LSTM units. We vary these hyperparameters and evaluate the model’s performance, keeping other settings constant.

Table 6 shows the performance comparison of the CNN-LSTM model with different hyperparameter configurations.

The results indicate that increasing the number of convolutional filters and LSTM units generally leads to improved performance. The model achieves the best performance with 128 convolutional filters and 128 LSTM units, yielding an accuracy of 96.1% and an F1-score of 95.2%. Further increasing the number of LSTM units to 256 provides a slight improvement, with an accuracy of 96.2% and an F1-score of 95.3%.

These findings suggest that the model’s capacity, determined by the number of learnable parameters, plays a crucial role in its ability to capture complex patterns and dependencies in the sensor data. However, it is essential to strike a balance between model complexity and computational efficiency to avoid overfitting and ensure practical applicability.

Feature importance analysis

To gain insights into the relative importance of different sensor features for the predictive maintenance task, we perform a feature importance analysis and examine model interpretability. We use the trained CNN-LSTM model to calculate the feature importances based on the learned weights and activations, and employ gradient-based attribution methods to understand how the model makes predictions.

Figure 4 presents a bar chart illustrating the feature importances for the top 10 sensor features.

Figure 4 presents a bar chart illustrating the feature importance ranking for the top 10 sensor features in the predictive maintenance task. The feature importance analysis reveals that certain sensor features, such as vibration amplitude, temperature, and pressure, have a higher impact on the model’s predictions compared to others, providing insights for feature selection and data collection strategies.

Figure 5 shows the attention weights learned by the CNN-LSTM model for a representative fault detection case. The visualization reveals that the model focuses on specific time periods (highlighted in darker colors) when equipment degradation patterns become apparent, typically 2–3 h before actual failure occurs. This interpretability feature helps maintenance engineers understand when and why the model predicts potential failures.

The feature importance analysis reveals that certain sensor features, such as vibration amplitude, temperature, and pressure, have a higher impact on the model’s predictions compared to others. This information can be valuable for feature selection and dimensionality reduction, as well as for guiding sensor placement and data collection strategies in industrial manufacturing systems.

In summary, the ablation study demonstrates the significance of the convolutional and LSTM layers in the CNN-LSTM model for capturing spatial and temporal dependencies in the sensor data. The hyperparameter analysis highlights the impact of model capacity on predictive performance and the importance of tuning these parameters for optimal results. The feature importance analysis provides insights into the relative significance of different sensor features, aiding in feature selection and data collection decisions. These findings contribute to a deeper understanding of the factors influencing the performance of deep learning models for predictive maintenance in industrial manufacturing systems.

Conclusion

In this paper, we have investigated the application of deep learning models for predictive maintenance (PdM) in industrial manufacturing systems using sensor data. We have proposed a comprehensive framework that encompasses data acquisition, preprocessing, feature extraction, model construction, and evaluation. The framework aims to leverage the rich information captured by various sensors to develop accurate and reliable models for predicting equipment failures and optimizing maintenance strategies.

Deploying deep learning-based PdM models in real-world industrial settings poses several challenges that need to be addressed to ensure their practicality and effectiveness. Based on preliminary discussions with industrial partners, key deployment considerations include not only technical aspects but also organizational and operational factors that influence successful implementation. One key challenge is the computational efficiency and latency of the models. In industrial environments, PdM models often need to process large volumes of sensor data in real-time to enable timely decision-making. This requires optimizing the models’ architectures and inference processes to minimize computational overhead and response times. Techniques such as model compression44, quantization45, and edge computing46 can be explored to alleviate these challenges.

Another critical aspect is the integration of PdM models with existing maintenance workflows and systems. Industrial organizations typically have established maintenance processes and software platforms that may not be readily compatible with deep learning-based approaches. Seamless integration of PdM models into these workflows requires close collaboration between data scientists, maintenance engineers, and IT professionals. Developing standardized interfaces, data pipelines, and visualization tools can facilitate the adoption and utilization of PdM models in practice.

Data quality and availability also play a crucial role in the success of PdM model deployment. Industrial sensor data may be noisy, incomplete, or inconsistent, which can adversely affect the performance and reliability of PdM models. Establishing robust data preprocessing, cleaning, and validation procedures is essential to ensure the input data’s integrity. Moreover, acquiring labeled data for model training can be challenging and expensive in industrial settings. Techniques such as transfer learning29, semi-supervised learning47, and active learning48 can be leveraged to reduce the reliance on large labeled datasets and adapt PdM models to new equipment or operating conditions.

Lastly, the interpretability and explainability of PdM models are critical for building trust and facilitating decision-making in industrial contexts. Deep learning models are often perceived as “black boxes,” making it difficult for maintenance personnel to understand and act upon their predictions. Developing techniques for visualizing and interpreting the learned features, attention maps, and decision boundaries of PdM models can enhance their transparency and acceptability in practice49.

We have conducted extensive experiments on three real-world industrial manufacturing datasets, comparing the performance of different deep learning architectures, including Convolutional Neural Networks (CNNs), Long Short-Term Memory (LSTM) networks, and their variations, as well as traditional machine learning methods as baselines to provide comprehensive evaluation context. The experimental results have demonstrated the effectiveness of deep learning models in capturing complex patterns and dependencies in the sensor data, achieving high predictive performance in terms of accuracy, precision, recall, and F1-score.

The CNN-LSTM model, which combines the strengths of convolutional and recurrent architectures, has shown the best overall performance, with an accuracy of 96.1% and an F1-score of 95.2%. This highlights the importance of leveraging both spatial and temporal information present in the sensor data for accurate PdM. The ablation study has further confirmed the significance of the convolutional and LSTM layers in the model’s predictive capabilities, while the hyperparameter analysis has emphasized the impact of model capacity on performance.

The feature importance analysis has provided valuable insights into the relative significance of different sensor features for PdM tasks. This information can guide feature selection, sensor placement, and data collection strategies in industrial manufacturing systems, enabling more targeted and efficient PdM solutions.

The results of this study underscore the immense potential of deep learning models in revolutionizing PdM practices in industrial manufacturing. By harnessing the power of sensor data and advanced machine learning techniques, manufacturers can proactively identify potential failures, optimize maintenance schedules, reduce downtime, and improve overall equipment effectiveness. The proposed framework and findings contribute to the growing body of research on data-driven PdM and provide a foundation for future work in this ___domain.

However, there are still challenges and opportunities for further research in the application of deep learning for PdM in industrial manufacturing systems. While our controlled experiments demonstrate strong performance, real-world deployment requires addressing additional factors such as data drift, sensor degradation, and integration with existing maintenance management systems. Future work can explore the following directions:

1. Developing transfer learning and ___domain adaptation techniques to leverage knowledge from related industries or equipment. This can help to alleviate the scarcity of labeled data in specific manufacturing contexts and enable more efficient model training and deployment. Researchers can investigate methods for transferring learned features, model architectures, or hyperparameters across different PdM tasks or domains.

2. Designing interpretable and explainable deep learning models for PdM. While deep learning models have shown promising performance in PdM applications, their black-box nature can hinder their adoption in practice. Future research can focus on developing techniques for interpreting and visualizing the learned features, attention maps, and decision boundaries of PdM models. This can be achieved through the integration of ___domain knowledge, physics-based constraints, or interpretable model architectures.

3. Exploring unsupervised and self-supervised learning approaches for PdM. Labeling large amounts of sensor data with fault conditions can be time-consuming and expensive in industrial settings. Unsupervised learning techniques, such as anomaly detection or clustering, can be used to identify patterns and deviations in sensor data without relying on labeled examples. Self-supervised learning, which learns representations by solving pretext tasks on unlabeled data, can also be investigated to reduce the dependence on labeled data.

4. Developing online learning and incremental learning strategies for PdM models. Industrial manufacturing systems often operate in dynamic and evolving environments, where new equipment, processes, or fault modes may be introduced over time. Online learning techniques can enable PdM models to continuously adapt and learn from streaming sensor data, without requiring complete retraining. Incremental learning approaches can allow PdM models to accommodate new fault classes or equipment types while retaining knowledge from previous tasks.

5. Investigating the integration of PdM models with maintenance decision support systems and optimization algorithms. PdM models provide valuable insights into equipment health and failure probabilities, but their effective utilization requires integration with maintenance planning and scheduling systems. Future research can explore methods for incorporating PdM model outputs into maintenance optimization frameworks, considering factors such as resource constraints, maintenance costs, and production requirements. This can enable more proactive and cost-effective maintenance strategies in industrial settings.

In summary, this paper has provided a comprehensive empirical evaluation of deep learning approaches for predictive maintenance in industrial manufacturing systems. While our work builds upon established deep learning architectures, the systematic comparison across multiple industrial datasets and the integration of practical deployment considerations offer valuable guidance for practitioners selecting appropriate models for PdM applications. The proposed framework and experimental findings contribute to the advancement of data-driven PdM practices, enabling manufacturers to optimize maintenance strategies, reduce costs, and improve operational efficiency. By embracing these cutting-edge technologies and continuing to push the boundaries of research, the manufacturing industry can move towards a more proactive, intelligent, and sustainable future.

Data availability

The datasets used in this study are publicly available industrial manufacturing datasets. The rotary machinery dataset40 can be accessed through the NASA Prognostics Data Repository (https://www.nasa.gov/intelligent-systems-division/discovery-and-systems-health/pcoe/pcoe-data-set-repository/).The milling machine dataset41 is available for download directly from NASA’s data portal (https://data.nasa.gov/download/vjv9-9f3x/application/zip) or through the PHM Society mirror (https://phm-datasets.s3.amazonaws.com/NASA/3.+Milling.zip).The hydraulic system dataset42 can be accessed from the UCI Machine Learning Repository (https://archive.ics.uci.edu/dataset/447/condition+monitoring+of+hydraulic+systems).The source code and trained models used in this research will be made available upon reasonable request to the corresponding author.

References

Zhang, W., Yang, D. & Wang, H. Data-driven methods for predictive maintenance of industrial equipment: A survey. IEEE Syst. J. 13 (3), 2213–2227 (2019).

Lee, J. et al. Industrial artificial intelligence for industry 4.0-based manufacturing systems. Manuf. Lett. 18, 20–23 (2018).

Thoben, K. D., Wiesner, S. & Wuest, T. Industrie 4.0 and smart manufacturing-a review of research issues and application examples. Int. J. Autom. Technol. 11 (1), 4–16 (2017).

Carvalho, T. P. et al. A systematic literature review of machine learning methods applied to predictive maintenance. Comput. Ind. Eng. 137, 106024 (2019).

Lecun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521 (7553), 436–444 (2015).

Zhao, R. et al. Machine health monitoring using local feature-based gated recurrent unit networks. IEEE Trans. Industr. Electron. 65 (2), 1539–1548 (2018).

Lei, Y. et al. Machinery health prognostics: A systematic review from data acquisition to RUL prediction. Mech. Syst. Signal Process. 104, 799–834 (2018).

Khan, S. & Yairi, T. A review on the application of deep learning in system health management. Mech. Syst. Signal Process. 107, 241–265 (2018).

Jardine, A. K. S., Lin, D. & Banjevic, D. A review on machinery diagnostics and prognostics implementing condition-based maintenance. Mech. Syst. Signal Process. 20 (7), 1483–1510 (2006).

Mobley, R. K. An Introduction To Predictive Maintenance (Butterworth-Heinemann, 2002).

Xu, X. Machine tool 4.0 for the new era of manufacturing. Int. J. Adv. Manuf. Technol. 92 (5–8), 1893–1900 (2017).