Abstract

Attention mechanisms have been introduced to exploit deep-level information for image restoration by capturing feature dependencies. However, existing attention mechanisms often have limited perceptual capabilities and are ill-suited to low-power devices because of their computational cost. We therefore propose a feature enhanced cascading attention network (FECAN) built on a novel feature enhanced cascading attention (FECA) mechanism, which consists of enhanced shuffle attention (ESA) and multi-scale large separable kernel attention (MLSKA). Specifically, ESA enhances high-frequency texture features in the feature maps, and MLSKA then extracts them further. Rich, fine-grained high-frequency information is extracted and fused from multiple perceptual layers, thus improving super-resolution (SR) performance. To validate FECAN’s effectiveness, we evaluate it at different complexities by stacking different numbers of high-frequency enhancement modules (HFEM), each of which contains FECA. Extensive experiments on benchmark datasets demonstrate that FECAN outperforms state-of-the-art lightweight SR networks in terms of objective evaluation metrics and subjective visual quality. Specifically, at a × 4 scale with a 121 K model size, FECAN achieves a 0.07 dB improvement in average peak signal-to-noise ratio (PSNR) over the second-ranked MAN-tiny, while reducing network parameters by approximately 19% and FLOPs by 20%. This demonstrates a better trade-off between SR performance and model complexity.


Introduction

Image super-resolution (SR) is a low-level computer vision task that aims to recover high-resolution (HR) images from low-resolution (LR) counterparts by reconstructing missing high-frequency information1,2. It can compensate for the limitations of imaging devices by making effective use of low-resolution image data to meet the demands of high-definition displays, thereby enhancing the usability of low-quality images. SR is an ill-posed problem, since a single LR input can correspond to multiple HR outputs. With the increasing demand for high-resolution images, researchers have carried out extensive research on SR models. In pursuit of better image quality, the complexity of SR models has steadily grown, which makes them difficult to deploy on devices with limited computational resources. To facilitate practical application, it is crucial to design resource-friendly SR algorithms.

With advances in hardware capabilities, large amounts of data have been used to train networks for image SR. As a result, numerous deep learning-based SR methods with impressive reconstruction performance have been proposed. For instance, many SR models based on Convolutional Neural Networks (CNN)1,2,3,4,5 have been developed, in which a large number of convolutional layers or residual blocks are stacked to leverage priors and intra-image information for improved reconstruction quality. However, the fixed-size receptive fields of convolutional kernels limit the network’s ability to flexibly learn relationships between pixels, so very deep and complex architectures are needed to recover finer details, which significantly increases computational cost. Moreover, recent advances in Vision Transformers (ViT)6,7,8 have sparked interest in applying them to low-level vision tasks9,10,11 because of the strong modeling capability of self-attention mechanisms5. For example, Liang et al.10 proposed SwinIR, which employs the Swin Transformer12 for image restoration and achieves superior quantitative performance compared to previous CNN-based SR methods. However, the computational cost of self-attention5 grows quadratically with the input feature size, which makes it unfavorable for efficient SR design.

Recently, lightweight SR models have received significant attention. Ahn et al.13 proposed the cascading residual network (CARN), which effectively captures complex image details through group convolutions and cascading residual blocks. Sun et al.14 introduced ShuffleMixer, which incorporates large convolutions and channel-shuffling operations to increase the receptive field for spatial information aggregation in lightweight SR designs. Gao et al.15 developed the lightweight bimodal network (LBNet) using symmetric CNN and recursive Transformers, maintaining the model’s ability to capture long-range dependencies while reducing complexity. To focus on recovering high-frequency image details, many lightweight SR models16,17,18 have introduced attention mechanisms. Liu et al.16 designed an attention-based multi-scale residual network (AMSRN) that employs a spatial and channel-wise attention residual (SCAR) block to extract valuable features in a lightweight manner. Similarly, Li et al.17 proposed an SR network called multi-scale channel attention network (MCSN), which combines channel attention and multi-scale feature extraction mechanisms to provide rich detail information for image reconstruction. Behjati et al.18 introduced the directional variance attention network (DiVANet), which captures long-range dependencies through the directional variance attention (DiVA) mechanism. Although these methods have achieved certain effectiveness, there is still room for further optimization in terms of image quality and computational complexity.

The aforementioned methods have made significant progress in the field of lightweight super-resolution. However, the feature extraction capability of lightweight models is often limited, so fine and complex texture features are frequently overlooked during reconstruction. This makes it challenging to capture the critical details that are beneficial for image restoration. To address these challenges, this paper proposes a lightweight image SR architecture that effectively extracts more high-frequency features and pixel relationships, enabling reconstruction with relatively few computations. Specifically, Enhanced Shuffle Attention (ESA) and Multi-scale Large Separable Kernel Attention (MLSKA) are combined in a cascading manner to build the Feature Enhanced Cascading Attention (FECA), the core module of the network. First, ESA enhances valuable high-frequency detail information, and then MLSKA processes these features to explore the reconstruction features in depth. The local attribution map (LAM)19 is applied to compare the performance of various methods. As shown in Fig. 1, our approach activates more pixels around the target region and has a higher diffusion index (DI)19, which demonstrates its high-frequency feature enhancement capability.

The main contributions of this paper can be summarized as follows:

1. We propose a lightweight image SR network called Feature Enhanced Cascading Attention Network (FECAN), which combines Transformer structures with Feature Connect Block (FCB) to efficiently reconstruct images through effective feature propagation.

2. We introduce Feature Enhanced Cascading Attention (FECA), a module that enhances important information in the feature maps using Enhanced Shuffle Attention (ESA) and further extracts and merges high-frequency features at different perceptual levels using Multi-scale Large Separable Kernel Attention (MLSKA). This improves the utilization of important texture information in the image, resulting in the recovery of more high-frequency details.

3. To adapt to the requirements of different SR tasks, we propose models of varying complexities and evaluate them quantitatively and qualitatively on benchmark datasets. As shown in Fig. 2, the results demonstrate that FECAN achieves better peak signal-to-noise ratio (PSNR) measurements with fewer parameters compared to previous image SR methods.

Related work

In recent years, deep learning-based SR methods for images have made significant progress. In this section, lightweight image SR methods and attention-based image SR methods are reviewed briefly.

Lightweight image SR methods

Since the introduction of the super-resolution convolutional network (SRCNN) by Dong et al.1, lightweight architectures have been explored for the task of SR in deep convolutional networks. Subsequently, Tai et al.20 proposed the deep recursive residual network (DRRN) for single-image SR, which not only incorporates global residual learning (GRL) from input to output but also introduces local residual learning (LRL) to reduce the loss of image details after passing through deep networks. They also designed recursive blocks with a multi-path structure to increase network depth without adding additional parameters. Li et al.21 proposed the linearly-assembled pixel-adaptive regression network (LAPAR), which simplifies the SR task into a linear regression task with multiple base filters, and the final result is obtained by linearly combining the output of each filter. Gao et al.15 combined lightweight CNN and lightweight Transformer in the SR task and introduced the Lightweight Bimodal Network (LBNet). This method maximizes the utilization of feed-forward features to restore texture details and reduces model complexity through symmetric CNN and recursive Transformers. Sun et al.14 introduced ShuffleMixer, which incorporates large convolutions and channel split-shuffle operations to build lightweight SR models. Based on these two operations, an efficient shuffle mixer layer is constructed to effectively extract non-local feature representations. The authors of SAFMN22 proposed a multi-scale representation-based feature modulation mechanism to dynamically select representative features, achieving a good balance between SR performance and model complexity. Based on spatial multi-order context aggregation and adaptive channel-wise reallocation with the aid of the multi-layer perceptron (MLP), Gendy et al.23 proposed the MogaSRN model. 
They utilized the MLP multi-order gated aggregation block (MMogaB) to facilitate the interaction of context information with diverse complexities, aiding in the extraction of deep high-frequency features from images. Wu et al.24 introduced a fully 1 × 1 convolutional network, named Shift-Conv-based Network (SCNet), which incorporates a parameter-free spatial-shift operation. This network facilitates the aggregation of local features in the channel dimension without increasing computational overhead. Ahmad et al.25 employed the concept of a multi-path learning network to extract features at different scales and used a progressive upscaling network to prevent artifacts in the generated HR images. Yan et al.26 and Fu et al.27 integrated an efficient brightness enhancement module with generative adversarial networks to address the problem of low-light image enhancement.

Attention-based image SR methods

In recent years, many SR models have opted to incorporate attention mechanisms to focus on recovering information components that have a significant impact on image quality4,5,10,16,17,18,28,29. Zhang et al.4 were the first to introduce the channel attention in the SR tasks. By considering the interdependencies among channels to recalibrate features adaptively, they constructed an RCAN (residual channel attention network) model with over 400 layers, incorporating channel attention and dense connections, to achieve precise SR results. Dai et al.5 proposed the second-order attention network (SAN), which incorporates a channel attention mechanism based on second-order statistics, resulting in improved representational capacity. Furthermore, the model optimized the non-local mechanism, enabling it to capture long-range spatial contextual information and expand the receptive field. Niu et al.28 designed the holistic attention network (HAN), which utilizes layer attention modules (LAM) to learn the weights of different layer features. Additionally, they introduced channel-spatial attention modules (CSAM) to investigate the channel and pixel correlations of features across layers. Mei et al.29 proposed the non-local sparse attention network (NLSA) for deep single image SR. NLSA combines the advantages of sparse representation and non-local operations, achieving significant performance gains. Inspired by Vision Transformer12,30,31, self-attention5 has been utilized in SR to capture long-range correlations. Built upon the Swin Transformer block, Liang et al.10 proposed the SwinIR for image restoration tasks. This model leverages window-based attention mechanisms, and achieves excellent reconstruction results. To fully exploit the hierarchical information features that contribute to the final image restoration, Behjati et al.18 proposed the directional variance attention network (DiVANet). 
They introduced a novel directional variance attention (DiVA) mechanism to capture long-range spatial dependencies and leverage inter-channel dependencies to enhance the relevance of features. This approach improves the final output and prevents information loss during the subsequent operations within the network.

In this study, we synergistically combine two attention mechanisms, namely ESA and MLSKA. Firstly, ESA enhances the high-frequency feature relationships in the image that are beneficial for reconstruction. Secondly, MLSKA extracts and fuses high-frequency features from different hierarchical levels using varying perceptual fields, thereby improving the extraction capability of high-frequency features and achieving better reconstruction performance.

Proposed method

In this section, we present the overall structure of our proposed network in detail. The section is divided into two subsections: the network architecture and the High-Frequency Enhancement Module (HFEM).

Network architecture

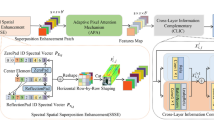

As shown in Fig. 3, the proposed Feature Enhanced Cascading Attention Network (FECAN) consists of three modules: the shallow feature extraction module (SFEM), the deep feature extraction module (DFEM) and the image reconstruction module (IRM).

SFEM

The SFEM first extracts shallow feature information from the input low-resolution (LR) image and filters out some of the low-frequency information with a single 3 × 3 convolution layer. The process is expressed as follows:

\[ {M}_{0}={H}_{SFEM}\left({I}_{LR}\right) \]

where \({I}_{LR}\) denotes the LR image, \({H}_{SFEM}\left(\cdot \right)\) denotes the shallow feature extraction module, and \({M}_{0}\) denotes the shallow feature maps.

DFEM

To extract rich high-frequency information, \({M}_{0}\) is then sent to the DFEM, which consists of multiple cascaded High-Frequency Enhancement Modules (HFEMs) and a Large Separable Kernel Attention Tail (LSKAT). The process is expressed as follows:

\[ {M}_{D}={H}_{DFEM}\left({M}_{0}\right) \]

where \({H}_{DFEM}(\cdot )\) denotes the deep feature extraction module and \({M}_{D}\) denotes the deep feature maps for final restoration. More specifically, intermediate features \({M}_{1}\),\({M}_{2}\), …, \({M}_{n}\) are extracted group by group as:

where \({H}_{{HFEM}_{i}}(\cdot )\) denotes the i-th HFEM, \({H}_{LSKAT}(\cdot )\) denotes a LSKAT layer, and n denotes the number of HFEM.
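Read from the symbol definitions above, the group-by-group extraction can be sketched as follows. The exact form, in particular the absence of any extra skip connection around the LSKAT layer, is an assumption reconstructed from those definitions rather than a formula taken from the source:

\[ {M}_{i}={H}_{{HFEM}_{i}}\left({M}_{i-1}\right),\quad i=1,2,\ldots ,n,\qquad {M}_{D}={H}_{LSKAT}\left({M}_{n}\right) \]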

IRM

In this module, the shallow feature maps, deep feature maps, and image dimensional feature maps are fused to complete the super-resolution (SR) reconstruction of the image. The IRM is designed as a lightweight upsampling layer for rapid reconstruction. The process can be described as:

where \({I}_{SR}\) denotes the output images; \({H}_{Upsample}\left(\cdot \right)\) denotes the upsampling module, which consists of a single 3 × 3 convolution and a pixel shuffle operation32; \(Bicubic\left(\cdot \right)\) denotes the bicubic interpolation upsampling operation. By adding the result of \({I}_{LR}\) after bicubic operation to the final SR result, the network can focus on recovering high-frequency information, and the convergence speed of the network is accelerated.
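One way to write the reconstruction step that is consistent with these definitions is sketched below. How the shallow and deep feature maps are fused before upsampling (element-wise addition here) is an assumption; the text only states that they are fused and that the bicubic result is added to the final output:

\[ {I}_{SR}={H}_{Upsample}\left({M}_{0}+{M}_{D}\right)+Bicubic\left({I}_{LR}\right) \]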

High-frequency enhancement module (HFEM)

As shown in Fig. 3, the HFEM consists of three components: the Feature Enhanced Cascading Attention (FECA), the Spatial Gated Feed-Forward module (SGFF), and the Feature Connect Block (FCB). Specifically, for an input feature \({X}_{0}\in {R}^{C\times H\times W}\), where \({R}^{C\times H\times W}\) denotes a feature with shape \(C\times H\times W\), the whole process of HFEM is formulated as:

where \(LN\left(\cdot \right)\) denotes the Layer Normalization (LN)33; \({X}_{1}\) and \({X}_{2}\) are the intermediate feature groups generated during this process; \({H}_{FECA}\left(\cdot \right)\), \({H}_{SGFF}\left(\cdot \right)\), and \({H}_{FCB}\left(\cdot \right)\) represent the proposed FECA, SGFF, and FCB modules (introduced in the following sections), respectively; \(Y\) denotes the output feature of this module.
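A plausible form of the HFEM computation, assuming the usual pre-normalization Transformer layout with a residual connection around each of FECA and SGFF, is sketched below. The placement of the residual connections is an assumption, and \({X}_{0}\) also enters the FCB through its long skip connection:

\[ {X}_{1}={H}_{FECA}\left(LN\left({X}_{0}\right)\right)+{X}_{0},\qquad {X}_{2}={H}_{SGFF}\left(LN\left({X}_{1}\right)\right)+{X}_{1},\qquad Y={H}_{FCB}\left({X}_{2}\right) \]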

Feature enhanced cascading attention (FECA)

In deep learning, attention mechanisms have been widely used to improve models’ ability to recognize crucial information, enabling networks to focus on task-relevant details4,5,28,29. We propose a lightweight attention mechanism called FECA to better extract and enhance high-frequency feature information required for HR image reconstruction. As shown in Fig. 3, FECA consists of two cascaded attention mechanisms: Enhanced Shuffle Attention (ESA) is employed to enhance key information in the feature maps, while Multi-scale Large Separable Kernel Attention (MLSKA) extracts and fuses high-frequency features at different perceptual levels from the information enhanced by ESA. Given an input feature \(X\), the whole process of FECA can be described as:

where \({H}_{ESA}\left(\cdot \right)\) denotes the ESA and \({H}_{MLSKA}\left(\cdot \right)\) denotes the MLSKA. The cascaded use of these two attention mechanisms enables the model to acquire more precise and rich feature representations. \({H}_{DWConv}\left(X\right)\) denotes a 3 × 3 depth-wise convolution used in the Residual Convolution Connection (RCC) structure within FECA. Y denotes the output feature of this module. This structure combines input and output features, ensuring the effective propagation of feature information.
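Under these definitions, the cascade and the RCC branch can be sketched as a single expression. That the depth-wise convolution branch is added to the cascaded attention output is an assumption based on the description of RCC as a residual connection:

\[ Y={H}_{MLSKA}\left({H}_{ESA}\left(X\right)\right)+{H}_{DWConv}\left(X\right) \]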

Enhanced shuffle attention (ESA)

SA-Net34 introduced a lightweight and effective attention module, Shuffle Attention, for deep Convolutional Neural Network (CNN), which efficiently combines spatial attention and channel attention mechanisms using shuffle units. This network has achieved impressive performances in high-level computer vision tasks such as image classification, object detection, and instance segmentation. Inspired by SA-Net, we propose ESA, which primarily consists of two 1 × 1 convolutions, Shuffle Attention, and two residual connections. This design promotes the fusion of input and output features, enhancing the network’s ability to represent feature dependencies in both spatial and channel dimensions.
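The shuffle unit that Shuffle Attention relies on can be illustrated on a flat list of channel indices. The sketch below shows only the standard channel-shuffle permutation (view the channels as a groups × channels-per-group grid, transpose, flatten). The function name `channel_shuffle` is hypothetical, and this is not the ESA implementation itself.

```python
def channel_shuffle(channels, groups):
    """Permute channels so each output group mixes channels from every input group."""
    n = len(channels)
    if n % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = n // groups
    # View the list as a (groups x per_group) grid and read it column by column.
    return [channels[g * per_group + k] for k in range(per_group) for g in range(groups)]


if __name__ == "__main__":
    print(channel_shuffle(list(range(6)), groups=2))  # [0, 3, 1, 4, 2, 5]
```

With two groups, channels 0 to 2 and 3 to 5 end up interleaved, which is what lets information flow between the groups after they have been processed separately.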

Specifically, we first utilize a 1 × 1 convolution to process the input features, enabling cross-channel information interaction and fusion. Then, we integrate spatial and channel dimensions using Shuffle Attention to compute attention weights for the features. Subsequently, the input feature information processed by the previous 1 × 1 convolution is adjusted and fused through multiplication and addition operations. We apply another 1 × 1 convolution to extract the enhanced information. To enhance the network’s non-linear expressive power, we employ GeLU35 for non-linear mapping of the features in the final stage of the module, allowing the network to capture more complex features. Given an input feature X, the whole process of ESA can be expressed as follows:

where \({H}_{Conv1\times 1}\left(\cdot \right)\) denotes 1 × 1 convolution, \({H}_{SA}\left(\cdot \right)\) denotes shuffle attention modules, \({H}_{GeLU}\left(\cdot \right)\) denotes GeLU35 activation function, and \(\odot\) denotes element-wise product.
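Following the step-by-step description above, the ESA computation can be sketched as below. The intermediate symbols \({X}_{1}\) and \({X}_{2}\) are introduced here for illustration, and the exact placement of the multiplication and addition is an assumption:

\[ {X}_{1}={H}_{Conv1\times 1}\left(X\right),\qquad {X}_{2}={H}_{SA}\left({X}_{1}\right)\odot {X}_{1}+{X}_{1},\qquad Y={H}_{GeLU}\left({H}_{Conv1\times 1}\left({X}_{2}\right)\right) \]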

Multi-scale large separable Kernel attention (MLSKA)

The literature36 has shown that VAN36 with large kernel attention (LKA) outperforms state-of-the-art ViT6 and Convolutional Neural Network (CNN) models in image classification, object detection, and semantic segmentation tasks. However, the depth-wise convolutional layers in LKA exhibit quadratic increases in computation and memory consumption as the kernel size increases37. To reduce this computational cost while extracting high-frequency information at different levels, we adopt the concept of large separable kernel attention (LSKA)37 and combine it with a multi-scale mechanism to propose MLSKA.

Given the input feature \({X}_{in}\in {R}^{C\times H\times W}\) , where \({R}^{C\times H\times W}\) denotes a feature with shape \(C\times H\times W\), we employ a channel splitting operation to divide the feature into two parts. One part serves as the input X for the multi-scale attention mechanism, while the other part, denoted as the original feature Y, is preserved for subsequent computations:

where \({H}_{Split}\left(\cdot \right)\) denotes channel-splitting operation.

For the input of the multi-scale attention mechanism, we partition X into three groups and calculate their respective attention maps, as:

LSKA achieves an equivalent representation of LKA36 by splitting the 2D weight convolution kernels of depth-wise convolution and depth-wise dilation convolution into two cascaded 1D separable weight convolution kernels. This decomposition captures long-range dependencies using five convolutions for a K × K convolution: a 1 × (2d-1) depth-wise convolution \(H_{DW - 1} \left( \cdot \right)\), a (2d-1) × 1 depth-wise convolution \(H_{DW - 2} \left( \cdot \right)\), a \(1 \times \frac{K}{d}\) depth-wise d-dilation convolution \(H_{DWD - 1} \left( \cdot \right)\), a \(\frac{K}{d} \times 1\) depth-wise d-dilation convolution \(H_{DWD - 2} \left( \cdot \right)\), and a 1 × 1 convolution \(H_{Conv1 \times 1} \left( \cdot \right)\), where d denotes the dilation of the convolutional kernel. To overcome the issue of dilation convolutions disrupting the extraction of local structural information, we aggregate the original features through a gating mechanism, and such LSKA is then referred to as gated large separable kernel attention (GLSKA). For the \(i\)-th group feature \({X}_{i}\), the process of GLSKA-\(i\) can be expressed as follows:

where \({H}_{DWConv}\left(\cdot \right)\) denotes a (2d-1) × (2d-1) depth-wise convolution, \({H}_{Conv1\times 1}\left(\cdot \right)\) denotes 1 × 1 convolution, \(\odot\) denotes element-wise product, and \({Z}_{i}\) \((i=1,2,3)\) are the intermediate feature groups generated during this process.
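Using the five convolutions and the gate defined above, GLSKA-\(i\) can be sketched as follows. This assumes the gate multiplies the output of the LSKA convolution chain by the depth-wise-convolved input, which is one reading of "aggregating the original features through a gating mechanism" and not a confirmed formula:

\[ {Z}_{i}={H}_{Conv1\times 1}\left({H}_{DWD-2}\left({H}_{DWD-1}\left({H}_{DW-2}\left({H}_{DW-1}\left({X}_{i}\right)\right)\right)\right)\right)\odot {H}_{DWConv}\left({X}_{i}\right),\quad i=1,2,3 \]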

Subsequently, the attention features obtained from different receptive fields are concatenated, and a 1 × 1 convolution is applied to aggregate these features, thereby constructing a comprehensive and accurate texture structure, as:

where \({H}_{Concat}(\cdot )\) denotes the concatenation operation along the channel dimension, \({H}_{Conv1\times 1}\) denotes a 1 × 1 convolution, and \(\widehat{Z}\) denotes the refined mixed feature.

After obtaining the refined mixed feature \(\widehat{Z}\), we apply the GeLU35 non-linear mapping to estimate the attention map. Through a multiplication operation, the original feature Y is adaptively adjusted, reducing the dimensionality differences between features. To ensure a smoother fusion process, an output feature is computed using a 1 × 1 convolution:

where \({H}_{GeLU}\left(\cdot \right)\) denotes GeLU activation function.
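Putting the MLSKA steps together, the data flow around the GLSKA outputs \({Z}_{i}\) can be sketched as below. The output symbol \({X}_{out}\) is introduced here for illustration, and the order of operations in the last line is an assumption drawn from the prose description:

\[ \left[X,Y\right]={H}_{Split}\left({X}_{in}\right),\qquad \left[{X}_{1},{X}_{2},{X}_{3}\right]={H}_{Split}\left(X\right) \]

\[ \widehat{Z}={H}_{Conv1\times 1}\left({H}_{Concat}\left({Z}_{1},{Z}_{2},{Z}_{3}\right)\right) \]

\[ {X}_{out}={H}_{Conv1\times 1}\left({H}_{GeLU}\left(\widehat{Z}\right)\odot Y\right) \]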

Spatial gated feed-forward module (SGFF)

In the Transformer block5, the presence of the feed-forward network (FFN) aids in learning more complex feature mapping relationships. However, the multi-layer perceptron (MLP)6 consisting of two convolutional layers and a non-linear activation layer, used as the FFN, is limited in its spatial information processing capability. Additionally, due to channel redundancy, the MLP incurs a higher computational cost.

Inspired by DAT38, we introduce the Spatial Gate (SG) into the FFN, utilizing two 1 × 1 convolutions to transform the features in the channel dimension for local contextual information aggregation. We refer to this modified FFN as the SGFF, as illustrated in Fig. 3. The SGFF consists of two 1 × 1 convolutions, the GeLU35 activation function, and the SG module (composed of a depth-wise convolution and element-wise multiplication). First, the initial 1 × 1 convolution encodes the spatial local context by doubling the number of channels in the input features, and GeLU35 applies a non-linear mapping. Next, the SG module divides the feature maps into a convolution branch and a multiplication branch. In the convolution branch, a single depth-wise convolution layer weights the feature maps, enhancing the local feature representation. In the multiplication branch, the resulting convolutional features are multiplied with the initial features, enabling dynamic interaction between different channel information. Finally, a 1 × 1 convolution reduces the number of channels back to the original input dimension. The whole process of SGFF can be expressed as follows:

where \(X\) denotes the input feature of SGFF, \(Y\) denotes the output feature of SGFF, \({H}_{Conv1\times 1}\left(\cdot \right)\) denotes the 1 × 1 convolution, \({H}_{DWConv}\left(\cdot \right)\) denotes a 3 × 3 depth-wise convolution, \({H}_{GeLU}\left(\cdot \right)\) denotes the GeLU35 activation function, \({H}_{Split}\left(\cdot \right)\) denotes the channel-splitting operation, and \(\odot\) denotes element-wise product.
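With these operators, the SGFF can be sketched as below. The intermediate symbols \(\widehat{X}\), \({X}_{1}\) and \({X}_{2}\) are introduced here for illustration, and which half of the split passes through the depth-wise convolution is an assumption:

\[ \widehat{X}={H}_{GeLU}\left({H}_{Conv1\times 1}\left(X\right)\right),\qquad \left[{X}_{1},{X}_{2}\right]={H}_{Split}\left(\widehat{X}\right),\qquad Y={H}_{Conv1\times 1}\left({X}_{1}\odot {H}_{DWConv}\left({X}_{2}\right)\right) \]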

Compared to MLP5, our SGFF reduces channel redundancy and is capable of extracting non-linear spatial information. Furthermore, the SG module strengthens the relationships between features from different channels within the module, thereby preserving more essential information.

Feature connect block (FCB)

To further enhance the performance of super-resolution (SR) reconstruction, we designed the FCB to integrate low-level detail information with high-level semantic information. This approach improves the network’s sensitivity to important visual information, enabling it to provide richer feature representations. The FCB consists of a depth-wise convolutional layer and a long skip connection. By effectively organizing the flow of features, the FCB enhances model performance while keeping the structure as lightweight as possible. The process can be described as:

where \(X\) denotes the input feature of FCB, \(Y\) denotes the output of FCB, \({X}_{0}\) denotes the initial input feature of the whole HFEM, \({H}_{DWConv}\left(\cdot \right)\) denotes a 3 × 3 depth-wise convolution.
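Given these definitions, the FCB can be sketched as a depth-wise convolution followed by the long skip connection from the HFEM input. Applying the skip after the convolution, and not before it, is an assumption:

\[ Y={H}_{DWConv}\left(X\right)+{X}_{0} \]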

Experiments

This section is divided into four parts to analyze and evaluate the performance of the network. Firstly, the dataset and experimental implementation details are introduced. Secondly, ablation experiments are conducted on the key components of the network to verify its effectiveness. Thirdly, the proposed FECAN’s tiny version and lightweight version are compared with some state-of-the-art tiny and lightweight SR models to quantitatively and qualitatively evaluate their performance. Finally, the effectiveness of FECAN is further demonstrated through comparative experiments with classical version SR models, and the results of these experiments are analyzed.

Datasets and implementation

Datasets

Following previous works8,14,16, we used DIV2K39 and Flickr2K3 as the training data, containing 800 and 2650 training images, respectively. We applied bicubic interpolation (BI) downsampling to the reference HR images to generate the LR images. For testing, we employed five commonly used benchmark datasets for performance comparison: Set540, Set1441, B10042, Urban10043 and Manga10944. The peak signal-to-noise ratio (PSNR) and the structural similarity (SSIM) are used as evaluation metrics, calculated on the luminance channel (Y) after converting the reconstructed SR images from RGB to the YCbCr color space.
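The PSNR-on-Y evaluation described above can be illustrated with a small sketch. The text does not state which RGB-to-Y conversion is used, so the ITU-R BT.601 studio-range formula (the convention of MATLAB's `rgb2ycbcr`, widely used in SR evaluation) is an assumption here. The function names are hypothetical, and images are represented as flat sequences of pixel values for simplicity.

```python
import math


def rgb_to_y(r, g, b):
    """Luma (Y) of an 8-bit RGB pixel, ITU-R BT.601 studio range (assumed convention)."""
    return 16.0 + (65.481 * r + 128.553 * g + 24.966 * b) / 255.0


def psnr(reference, estimate, peak=255.0):
    """PSNR in dB between two equally sized sequences of pixel values."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, estimate)) / len(reference)
    # Identical inputs have zero error, so PSNR is unbounded.
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    hr = [rgb_to_y(*p) for p in [(255, 255, 255), (10, 20, 30)]]
    sr = [rgb_to_y(*p) for p in [(250, 252, 255), (12, 18, 33)]]
    print(f"PSNR on Y: {psnr(hr, sr):.2f} dB")
```

Evaluation protocols in SR work often also crop a border of pixels before computing the metric; that detail is not described in the text and is left out of the sketch.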

Implementation details

To comprehensively evaluate the proposed method, we trained three different versions of FECAN: tiny, light, and classical, to address SR tasks of varying complexity levels. We set the number of HFEM to 5/24/36, channel numbers to 42/54/96, and the number of feature groups for shuffle attention to 3/3/6, respectively. For FECAN-tiny and FECAN-light, we randomly cropped 32 patches of size 64 × 64 pixels from LR images as the basic training input. For the classical version, we randomly cropped 32 patches of size 48 × 48 pixels from LR images as the basic training input. In MLSKA, the multi-scale mechanism employed three different decomposition patterns: 1 × 3–3 × 1–1 × 5–5 × 1–1 × 1, 1 × 5–5 × 1–1 × 7–7 × 1–1 × 1, and 1 × 7–7 × 1–1 × 9–9 × 1–1 × 1.
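To give a feel for what the separable decomposition saves, the sketch below counts depth-wise kernel weights per channel for the three patterns listed above, comparing the cascaded 1D kernels with the full 2D kernels they replace. The kernel sizes are read off the patterns in the text. The function name is hypothetical, and biases and the shared 1 × 1 convolution are excluded, so this is a rough illustration and not the model's actual parameter count.

```python
def depthwise_kernel_weights(k_local, k_dilated, separable):
    """Weights per channel for the two depth-wise stages (1x1 conv and biases excluded)."""
    if separable:
        # One 1 x k and one k x 1 kernel for each of the two stages.
        return 2 * k_local + 2 * k_dilated
    # One full k x k kernel for each stage.
    return k_local ** 2 + k_dilated ** 2


# (local kernel size, dilated kernel size) for the three multi-scale branches.
PATTERNS = [(3, 5), (5, 7), (7, 9)]

if __name__ == "__main__":
    for k_local, k_dilated in PATTERNS:
        full = depthwise_kernel_weights(k_local, k_dilated, separable=False)
        sep = depthwise_kernel_weights(k_local, k_dilated, separable=True)
        print(f"{k_local}/{k_dilated}: 2D kernels = {full}, separable = {sep}")
```

The separable count grows linearly with kernel size while the 2D count grows quadratically, which is the motivation given for LSKA in the MLSKA section.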

During the training stage, data augmentation was performed via random horizontal flips and rotations. All models were trained using the Adam45 optimizer with \(\beta_{1} = 0.9\) and \(\beta_{2} = 0.99\). We set the initial learning rate to \(5 \times 10^{ - 4}\) and the minimum learning rate to \(1 \times 10^{ - 7}\), updated by the Cosine Annealing scheme46. The number of iterations was set to 1600 K, and the model was saved every 5 K iterations, with the current best model determined by the PSNR and SSIM metrics. Following previous works22, we trained the network using a combination of L14 pixel loss and frequency loss based on FFT14, with weight parameters set as \(\lambda_{1} = 1.0\) and \(\lambda_{2} = 0.05\). All experiments were conducted with the PyTorch framework on two NVIDIA GeForce RTX 3090 GPUs.
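The learning-rate schedule described above can be sketched in a few lines. The sketch assumes a single cosine cycle over the full 1600 K iterations with no warm restarts, which the text does not state explicitly. The constant and function names are hypothetical.

```python
import math

LR_MAX = 5e-4            # initial learning rate from the text
LR_MIN = 1e-7            # minimum learning rate from the text
TOTAL_ITERS = 1_600_000  # 1600 K iterations from the text


def cosine_lr(step, total=TOTAL_ITERS, lr_max=LR_MAX, lr_min=LR_MIN):
    """Cosine-annealed learning rate at a given iteration (single cycle, assumed)."""
    step = min(max(step, 0), total)  # clamp to the training range
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * step / total))


if __name__ == "__main__":
    for step in (0, 400_000, 800_000, 1_200_000, 1_600_000):
        print(f"iter {step:>9}: lr = {cosine_lr(step):.3e}")
```

The rate starts at the initial value, passes through the midpoint of the two bounds halfway through training, and reaches the minimum at the final iteration.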

Ablation study

In this section, we conducted extensive ablation studies on FECAN to understand and evaluate the roles of its different components. For a fair comparison, we used the × 4 FECAN-tiny as the baseline for conducting all ablation experiments, trained with the same settings, and tested the results on the Set540 and B10042 datasets.

Impact of FECA

FECA consists of three components: ESA, MLSKA, and RCC. To validate the effectiveness of FECA, we first removed the entire FECA from FECAN. As shown in Table 1, with FECA removed from the model, the PSNR values on the Set540 and B10042 datasets decrease by 0.42 dB and 0.19 dB, respectively. These results demonstrate that FECA significantly improves the network’s performance. With the cascaded attention architecture of FECA, which combines the information enhancement capability of ESA and the multi-level feature perception ability of MLSKA, the network can effectively explore and enhance the high-frequency feature information required for HR image reconstruction. It fully utilizes the correlation between important pixels in the feature maps, enabling the reconstruction of accurate high-frequency texture details. To further analyze the working mechanism of FECA, we conducted additional ablation experiments on its individual components.

ESA

As shown in Table 1, when ESA is absent, a decrease of 0.21 dB and 0.09 dB in PSNR values is observed on the Set540 and B10042 datasets, respectively. In ESA, SA34 efficiently integrates spatial and channel dimension information, and the use of 1 × 1 convolution and GeLU35 activation function effectively promotes the network’s learning of efficient information fusion and nonlinear feature mapping. Therefore, the introduction of this module leads to more active feature flow and interaction in the network34, making the texture feature dependencies more prominent and enhancing the complex information in the feature maps. The enhanced features are then fed to MLSKA, resulting in improved performance in SR reconstruction.

MLSKA

As shown in Table 1, the removal of MLSKA resulted in a decrease of 0.16 dB and 0.11 dB in PSNR values on the Set540 and B10042 datasets, respectively. This phenomenon can be attributed to the absence of this module, where the network relies solely on fixed receptive field small-kernel convolutions. The features learned from local receptive fields are limited, making it challenging to establish effective long-range feature dependencies and capture detailed texture information. In contrast, MLSKA incorporates a multi-scale perception mechanism and a large-kernel convolution structure. It integrates attention information from local to global scales and emphasizes key features while ignoring those of less importance by receiving enhanced information from ESA at different levels. This ability allows MLSKA to utilize complex and crucial high-frequency information for reconstruction, significantly improving the accuracy of reconstruction.

RCC

As shown in Table 1, the absence of RCC resulted in a decrease of 0.02 dB and 0.01 dB in PSNR values on the Set540 and B10042 datasets, respectively. The introduction of RCC allows the network to reuse features across layers with minimal additional parameters. Instead of relearning all features, the network focuses on learning the changing parts of the features, simplifying the model learning process. This mechanism aids the network in capturing features at different levels and enriches the expressive power of the network47.

As shown in Table 1, when only ESA was used, the PSNR values decreased by 0.23 dB and 0.12 dB on the Set540 and B10042 datasets, respectively. Similarly, when only MLSKA was used, the PSNR values decreased by 0.22 dB and 0.09 dB on the Set540 and B10042 datasets, respectively.

To further analyze the working mechanism of FECA, we visualized the output feature maps of all models in the ablation experiment group. As shown in Fig. 4, when ESA, MLSKA, RCC, or the entire FECA is absent, the network has difficulty learning high-frequency fine-grained texture details. This highlights the importance of FECA in extracting high-frequency information. The results of each component ablation indicate that ESA focuses on enhancing high-frequency texture structures, MLSKA extracts and refines texture features in a multi-level perception manner, and RCC compensates for the small amount of information that was not learned through the cascaded use of attention. The coordinated action of these three components allows the network to retain and explore more high-frequency feature information, providing strong support for the SR reconstruction task.

Impact of FFN

FFN plays a crucial role in Transformer5 by enhancing the network’s expressive power through nonlinear transformations, enabling the network to learn and understand complex data patterns. In Transformer5, MLP6 is commonly used as the FFN. To enhance the utilization of spatial information by HFEM, we replaced MLP6 with SGFF, which yields improvements of 0.01 dB and 0.02 dB in PSNR values on the Set540 and B10042 datasets, respectively, and a reduction of 7 K in parameters, as shown in Table 2. Furthermore, if FFN is not used, the PSNR values decrease by 0.10 dB and 0.07 dB on the Set540 and B10042 datasets, respectively. SGFF ensures that the global contextual relationships between pixels are implicitly modeled and controls the flow of features, allowing subsequent layers in the network hierarchy to focus specifically on finer image attributes, resulting in high-quality outputs38.

Impact of FCB

As shown in Table 3, the proposed method with the introduction of FCB achieved an improvement of 0.05 dB and 0.03 dB in PSNR values on the Set540 and B10042 datasets, respectively, compared to the method without FCB from Table 1. If only a depth-wise convolution is used without the residual connection, a decrease of 0.01 dB and 0.02 dB in PSNR values on the Set540 and B10042 datasets, respectively, can be observed. These observations can be attributed to the composition of FCB, which utilizes depth-wise convolutions and residual connections. Through the depth-wise convolution, FCB is able to extract high-frequency information from the images, while the residual connections contribute to learning deeper-level feature representations, facilitating the fusion of features at different levels. The joint design of these two components helps the network better capture and restore perceptual details of the images, thereby improving the visual consistency of the reconstructed images48. Furthermore, the design of FCB preserves the lightweight nature of the model, as it enhances network performance while introducing only 2 K additional parameters.

Impact of tail

Before the upsampling stage, to further enhance the network’s ability to restore details, it is common to add a Tail block4 at the end of the DFEM. LSKAT is included in the proposed model in the form of LSKA37, as shown in Fig. 3. As shown in Table 4, compared to not using a Tail block, the introduction of LSKAT results in an improvement of 0.09 dB and 0.04 dB in PSNR values on the Set540 and B10042 datasets, respectively. This is because LSKAT exhibits a large receptive field and perceptual capability in feature extraction36,37, enabling the network to better capture and restore perceptual details of the images. In existing SR methods3,5,10,28,46, a 3 × 3 convolution is widely used as the Tail block. It can be observed that when using a 3 × 3 convolution, there is a decrease of 0.03 dB and 0.02 dB in PSNR values on the Set540 and B10042 datasets, respectively. This is because the 3 × 3 convolution has a small receptive field, limiting its ability to establish long-range dependencies, thus restricting the network’s reconstruction capability.

Impact of normalization

As the network layers deepen, the internal covariate shift during training becomes increasingly severe, leading to unstable training and even collapse49. Therefore, to improve the stability of model training and enhance the model’s adaptability to different input data, we adopted LN33 as the normalization layer. As shown in Table 5, we first removed LN33 from the model, resulting in a decrease of 0.55 dB and 0.24 dB in PSNR values on the Set540 and B10042 datasets, respectively. This confirms the necessity of using normalization methods in the network. If Batch Normalization (BN)49 is used instead, the PSNR values decrease by 2.99 dB and 1.27 dB on the Set540 and B10042 datasets, respectively, which is even worse than using no normalization at all. This is because BN49 tends to disrupt the original contrast information of the images3. Therefore, LN33 is more suitable for SR tasks compared to BN49.
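One structural difference between the two normalization schemes can be shown with a small sketch: in training mode, BN pools statistics over the whole batch, so the normalized values of one image depend on the other images in the batch, whereas LN uses only the statistics of the sample itself. The code below is a simplified single-channel illustration without learnable scale and shift; the function names and the toy pixel values are illustrative assumptions, and the sketch is not meant to account for the full PSNR gap in Table 5.

```python
import math


def _standardize(values, mean, var, eps=1e-5):
    return [(v - mean) / math.sqrt(var + eps) for v in values]


def layer_norm(batch, eps=1e-5):
    """Normalize every sample with its own mean and variance."""
    out = []
    for sample in batch:
        mean = sum(sample) / len(sample)
        var = sum((v - mean) ** 2 for v in sample) / len(sample)
        out.append(_standardize(sample, mean, var, eps))
    return out


def batch_norm(batch, eps=1e-5):
    """Normalize with statistics pooled over all samples (training-mode BN, one channel)."""
    pooled = [v for sample in batch for v in sample]
    mean = sum(pooled) / len(pooled)
    var = sum((v - mean) ** 2 for v in pooled) / len(pooled)
    return [_standardize(sample, mean, var, eps) for sample in batch]


image = [0.2, 0.4, 0.6]                    # toy "image" with moderate contrast
batch_a = [image, [0.0, 0.5, 1.0]]         # paired with a high-contrast image
batch_b = [image, [0.45, 0.5, 0.55]]       # paired with a low-contrast image

print(layer_norm(batch_a)[0] == layer_norm(batch_b)[0])  # LN ignores the partner image
print(batch_norm(batch_a)[0] == batch_norm(batch_b)[0])  # BN output changes with the partner
```

In this toy setting the same image is rescaled differently by BN depending on its batch partner, which is one way in which batch-level statistics can alter per-image contrast.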

Impact of the frequency loss function

When using only pixel loss as the training objective, the model tends to overlook the high-frequency details of the images, resulting in overly smooth reconstructed images. Therefore, to enable the network to better restore the high-frequency details of the images while preserving the overall structure, we introduced a frequency loss function based on FFT14, combined with the L1 pixel loss function4, to train the network. As shown in Table 6, an improvement of 0.02 dB and 0.04 dB in PSNR values on the Set540 and B10042 datasets, respectively, can be observed. The experimental results demonstrate that training the network with a combination of pixel loss and frequency loss based on FFT14 can provide a more comprehensive assessment of image quality, thereby helping the network to better learn high-frequency details and improve the quality of the reconstructed images.
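The combined objective can be sketched as an L1 distance in the pixel domain plus a weighted L1 distance between Fourier coefficients. The following is a minimal illustration on small grayscale images stored as lists of rows, using a naive 2D DFT from the standard library. The weight `freq_weight` and the use of the complex magnitude of the coefficient difference are assumptions made for this sketch; the exact formulation and weighting used to train FECAN are not stated in this section.

```python
import cmath


def dft2(image):
    """Naive 2D discrete Fourier transform of a list-of-rows image."""
    h, w = len(image), len(image[0])
    spectrum = []
    for u in range(h):
        row = []
        for v in range(w):
            total = 0j
            for y in range(h):
                for x in range(w):
                    angle = -2.0 * cmath.pi * (u * y / h + v * x / w)
                    total += image[y][x] * cmath.exp(1j * angle)
            row.append(total)
        spectrum.append(row)
    return spectrum


def mean_abs_diff(a, b):
    """Mean absolute difference; abs() is the magnitude for complex entries."""
    count = len(a) * len(a[0])
    return sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb)) / count


def combined_loss(sr, hr, freq_weight=0.1):
    """L1 pixel loss plus freq_weight times L1 loss between DFT coefficients."""
    pixel = mean_abs_diff(sr, hr)
    frequency = mean_abs_diff(dft2(sr), dft2(hr))
    return pixel + freq_weight * frequency


hr = [[1.0, 0.0], [0.0, 0.0]]   # toy 2 x 2 target
sr = [[0.0, 0.0], [0.0, 0.0]]   # toy prediction that misses the detail
print(combined_loss(sr, hr))
```

A practical implementation would use a fast Fourier transform on tensors rather than this quadratic-time loop; the loop is used here only to keep the example self-contained.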

Impact of bicubic operation

In FECAN, we employ bicubic interpolation to upsample the LR images and add them to the SR results obtained after network processing. As shown in Table 7, after introducing this operation, an improvement of 0.05 dB and 0.02 dB in PSNR values on the Set540 and B10042 datasets, respectively, can be observed. This is because bicubic interpolation can generate smooth images with rich low-frequency information, which helps improve the visual quality of the images generated by the sub-pixel convolution layers in edge and texture regions. Additionally, it stabilizes network training, accelerates model convergence, and contributes to enhanced reconstruction performance.
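The global skip connection described above can be illustrated in one dimension: the LR signal is upsampled with a cubic convolution kernel, and the network output is added to it as a residual. The sketch assumes the common Keys kernel with a = -0.5, a half-pixel coordinate mapping, and edge clamping; these choices, the function names, and the placeholder residual are assumptions for illustration. A two-dimensional bicubic upsampler applies the same kernel along rows and columns.

```python
import math


def cubic_kernel(t, a=-0.5):
    """Keys cubic convolution kernel; a = -0.5 is a common choice."""
    t = abs(t)
    if t <= 1.0:
        return (a + 2.0) * t ** 3 - (a + 3.0) * t ** 2 + 1.0
    if t < 2.0:
        return a * t ** 3 - 5.0 * a * t ** 2 + 8.0 * a * t - 4.0 * a
    return 0.0


def cubic_upsample_1d(signal, scale):
    """Upsample a 1D signal by an integer factor using four neighbouring samples."""
    n = len(signal)
    out = []
    for i in range(n * scale):
        src = (i + 0.5) / scale - 0.5       # output centre in input coordinates
        base = math.floor(src)
        value = 0.0
        for k in range(base - 1, base + 3):
            idx = min(max(k, 0), n - 1)     # clamp indices at the borders
            value += signal[idx] * cubic_kernel(src - k)
        out.append(value)
    return out


def add_global_residual(lr_signal, network_output, scale):
    """SR result = network output (residual detail) + interpolated LR signal."""
    base = cubic_upsample_1d(lr_signal, scale)
    return [b + r for b, r in zip(base, network_output)]


lr = [0.0, 1.0, 1.0, 0.0]
residual = [0.0] * (len(lr) * 4)            # placeholder for the network output
print(add_global_residual(lr, residual, scale=4))
```

With this arrangement the network only needs to predict the detail that interpolation cannot recover, which is consistent with the faster convergence noted above.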

Comparisons with tiny and lightweight SR methods

In this section, we compared our method’s reconstruction performance against state-of-the-art tiny and lightweight SR methods on five benchmark datasets at various scales to validate our approach.

Quantitative comparisons

As shown in Table 8, we compare FECAN-tiny with Bicubic, DRRN20, LAPAR-B21, SCNet-T24, ShuffleMixer-tiny14, and MAN-tiny50. As shown in Table 9, we compare FECAN-light with Bicubic, LBNet15, SwinIR-light10, DiVANet18, MogaSRN23, NGswin51, and MAN-light50. The implementation of the comparison models is taken from the authors’ publicly available source code, and the comparison data uses the results of other networks from published papers. Specifically, considering the average results on the × 4 SR benchmark datasets Set540, Set1441, B10042, Urban10043 and Manga10944, our FECAN-tiny outperforms the second-best model by 0.07/0.0008 in terms of the PSNR/SSIM metrics, with a parameter reduction of 19% and a FLOPs reduction of 20%. Similarly, our FECAN-light outperforms the second-best model by 0.11/0.0007 in terms of the PSNR/SSIM metrics, with a parameter reduction of 11% and a FLOPs reduction of 12%. These results demonstrate that due to the efficient design of FECAN, computational redundancy is reduced, and FECAN provides rich and crucial multi-level high-frequency information for image SR reconstruction, thereby improving the fidelity of the network in the reconstruction task.

Qualitative comparisons

As shown in Fig. 5, we compare the visual results of FECAN-tiny with four representative models, namely Bicubic, LAPAR-B21, ShuffleMixer-tiny14, and MAN-tiny50. For the img011 image, our tiny model reconstructs the vertical stripe structure better than other methods. For BokuHaSitataKun, our tiny model exhibits strong restoration capability for letter images. As shown in Fig. 6, we compare the visual results of FECAN-light with six representative models, namely Bicubic, LBNet15, SwinIR-light10, DiVANet18, NGswin51, and MAN-light50. For barbara and img062, our light model can reconstruct horizontal lines and grid textures accurately. For ppt3 and TetsuSan, our light model can restore the edge information of letter images to a large extent. For the complex texture structure in the YumeiroCooking image, while the listed methods can reconstruct most of the patterns, they suffer from serious distortion, whereas FECAN-light can accurately reconstruct the main structure of the texture. By modeling the enhanced high-frequency information at multiple levels, FECAN demonstrates significant advantages in restoring clear and accurate details compared to most SR methods.

Inference time comparisons

The inference time comparisons between FECAN-tiny, FECAN-light, and several representative lightweight SR methods, such as MAN-tiny50, SwinIR-light10, and MAN-light50, were conducted on B100 (× 4). As shown in Table 10, compared to MAN-tiny50, FECAN-tiny achieved a 47% reduction in inference time, while FECAN-light reduced inference time by 46% and 13% compared to SwinIR-light10 and MAN-light50, respectively. Overall, FECAN strikes a better balance between performance, model size, and computational efficiency, making it more competitive than the compared models.

Comparisons with classical SR methods

Similar to the tiny and lightweight versions, we compared our classical SR model FECAN against state-of-the-art classical SR methods.

Quantitative comparisons

As shown in Table 11, we compare FECAN with Bicubic, RCAN4, SAN5, HAN28, NLSA29, and SAFMN22. The implementation of the comparison models is taken from the authors’ publicly available source code, and the comparison data uses the results of other networks from published papers. The quantitative results demonstrate that on the × 4 SR benchmark datasets Set540, Set1441, B10042, Urban10043 and Manga10944, FECAN achieves average PSNR/SSIM improvements of 0.49/0.0031, 0.46/0.0022 and 0.38/0.0029 compared to RCAN4, NLSA29 and SAFMN22, respectively. Additionally, FECAN reduces the parameter count by 79%, 93% and 43%, and decreases the FLOPs by 80%, 94% and 44% compared to RCAN4, NLSA29, and SAFMN22, respectively. FECAN improves the network’s understanding of comprehensive pixel semantic information, thereby enhancing the effectiveness of image reconstruction.

Qualitative comparisons

As shown in Fig. 7, we also compare the visual results of FECAN with six representative models, namely Bicubic, RCAN4, SAN5, HAN28, NLSA29, and SAFMN22. For img078, FECAN preserves higher quality of the ventilation grille compared to other methods. Similarly, for img046, FECAN reconstructs clear grid structures from blurry inputs. FECA adequately considers high-frequency texture information, addressing the issue of oversmoothing in the reconstructed images. These results demonstrate the strong image reconstruction capabilities of the proposed network.

Conclusion

In this paper, we propose a lightweight Feature Enhanced Cascading Attention Network (FECAN), with the Feature Enhanced Cascading Attention (FECA) module that integrates cascaded Enhanced Shuffle Attention (ESA) and Multi-scale Large Separable Kernel Attention (MLSKA) as its core. This module captures crucial details beneficial for image reconstruction and enhances the correlation between pixel regions at different distances. As a result, it can improve the reconstruction performance of image super-resolution (SR) across varying complexities by leveraging finer high-frequency features. Extensive experimental results demonstrate that FECAN achieves a good balance between image reconstruction performance and model complexity.

Although the proposed method outperforms various existing lightweight image super-resolution reconstruction approaches, certain limitations remain. In future work, training on more diverse datasets comprising real-world scenes could enhance the network’s generalization ability and performance. Additionally, further optimization by model pruning or quantization may ease the trade-off between SR speed and performance.

Data availability

Data will be made available on request. Requests for materials should be addressed to Y.S.

References

Dong, C., Loy, C. C., He, K. & Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 38(2), 295–307. https://doi.org/10.1109/TPAMI.2015.2439281 (2016).

Kim, J., Lee, J. K., & Lee, K. M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 1646–1654. https://doi.org/10.1109/CVPR.2016.182 (2016).

Lim, B., Son, S., Kim, H., Nah, S., & Mu Lee, K. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops 136–144. https://arxiv.org/abs/1707.02921v1 (2017).

Zhang, Y. et al. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV) 286–301. https://doi.org/10.1007/978-3-030-01234-2_18 (2018).

Dai, T., Cai, J., Zhang, Y., Xia, S. T., & Zhang, L. Second-order attention network for single image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 11065–11074 (2019).

Dosovitskiy, A. et al. An image is worth 16 x 16 words: Transformers for image recognition at scale. https://arxiv.org/abs/2010.11929 (2020).

Carion, N. et al. End-to-end object detection with transformers. In European Conference on Computer Vision (ECCV) 213–229. https://doi.org/10.1007/978-3-030-58452-8_13 (Springer, 2020).

Touvron, H. et al. Training data-efficient image transformers & distillation through attention. In International Conference on Machine Learning 10347–10357 (PMLR, 2021).

Chen, H. et al. Pre-trained image processing transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 12294–12305. https://doi.org/10.1109/CVPR46437.2021.01212 (2021).

Liang, J. et al. Swinir: Image restoration using swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCVW) 1833–1844. https://doi.org/10.1109/ICCVW54120.2021.00210 (2021).

Gendy, G., Sabor, N., Hou, J., & He, G. A simple transformer-style network for lightweight image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 1484–1494 (2023).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) 9992–10002. https://doi.org/10.1109/ICCV48922.2021.00986 (2021).

Ahn, N., Kang, B., & Sohn, K. A. Fast, accurate, and lightweight super-resolution with cascading residual network. In Proceedings of the European Conference on Computer Vision (ECCV) 256–272. https://doi.org/10.1007/978-3-030-01249-6_16 (2018).

Sun, L., Pan, J. & Tang, J. Shufflemixer: An efficient convnet for image super-resolution. Adv. Neural. Inf. Process. Syst. 35, 17314–17326 (2022).

Gao, G. et al. Lightweight bimodal network for single-image super-resolution via symmetric cnn and recursive transformer. https://arxiv.org/abs/2204.13286 (2022).

Liu, H., Cao, F., Wen, C. & Zhang, Q. Lightweight multi-scale residual networks with attention for image super-resolution. Knowl. Based Syst. 203, 106103. https://doi.org/10.1016/j.knosys.2020.106103 (2020).

Li, W., Li, J., Li, J., Huang, Z. & Zhou, D. A lightweight multi-scale channel attention network for image super-resolution. Neurocomputing 456, 327–337. https://doi.org/10.1016/j.neucom.2021.05.090 (2021).

Behjati, P. et al. Single image super-resolution based on directional variance attention network. Pattern Recogn. 133, 108997. https://doi.org/10.1016/j.patcog.2022.108997 (2023).

Gu, J., & Dong, C. Interpreting super-resolution networks with local attribution maps. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 9199–9208 (2021).

Tai, Y., Yang, J., & Liu, X. Image super-resolution via deep recursive residual network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2790–2798. https://doi.org/10.1109/CVPR.2017.298 (2017).

Li, W. et al. Lapar: Linearly-assembled pixel-adaptive regression network for single image super-resolution and beyond. Adv. Neural Inf. Process. Syst. 33, 20343–20355. https://doi.org/10.48550/arXiv.2105.10422 (2020).

Sun, L., Dong, J., Tang, J., & Pan, J. Spatially-adaptive feature modulation for efficient image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) 13190–13199 (2023).

Gendy, G., Sabor, N. & He, G. Lightweight image super-resolution based multi-order gated aggregation network. Neural Netw. 166, 286–295. https://doi.org/10.1016/j.neunet.2023.07.002 (2023).

Wu, G., Jiang, J., Jiang, K., & Liu, X. Fully 1×1 convolutional network for lightweight image super-resolution. arXiv preprint arXiv:2307.16140 (2023).

Ahmad, W. et al. A new generative adversarial network for medical images super resolution. Sci. Rep. 12, 9533. https://doi.org/10.1038/s41598-022-13658-4 (2022).

Yan, L. et al. Enhanced network optimized generative adversarial network for image enhancement. Multimed. Tools Appl. 80, 14363–14381. https://doi.org/10.1007/s11042-020-10310-z (2021).

Fu, J. et al. Low-light image enhancement base on brightness attention mechanism generative adversarial networks. Multimed. Tools Appl. 83, 10341–10365. https://doi.org/10.1007/s11042-023-15815-x (2024).

Niu, B. et al. Single image super-resolution via a holistic attention network. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XII. 191–207. https://doi.org/10.1007/978-3-030-58610-2_12 (Springer, 2020).

Mei, Y., Fan, Y., & Zhou, Y. Image super-resolution with non-local sparse attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 3516–3525. https://doi.org/10.1109/CVPR46437.2021.00352 (2021).

Li, Y., Zhang, K., Cao, J., Timofte, R., & Van Gool, L. Localvit: Bringing locality to vision transformers. https://arxiv.org/abs/2104.05707 (2021).

Tu, Z. et al. Maxvit: Multi-axis vision transformer. In European Conference on Computer Vision (ECCV) 459–479. https://doi.org/10.1007/978-3-031-20053-3_27 (Springer, 2022).

Shi, W. et al. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 1874–1883 (2016).

Ba, J. L., Kiros, J. R., & Hinton, G. E. Layer normalization. https://arxiv.org/abs/1607.06450 (2016).

Zhang, Q. L., & Yang, Y. B. Sa-net: Shuffle attention for deep convolutional neural networks. In ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2235–2239. https://doi.org/10.1109/ICASSP39728.2021.9414568 (2021).

Hendrycks, D., & Gimpel, K. Gaussian error linear units (gelus). https://arxiv.org/abs/1606.08415 (2016).

Guo, M. H., Lu, C. Z., Liu, Z. N., Cheng, M. M. & Hu, S. M. Visual attention network. Comput. Vis. Med. 9(4), 733–752. https://doi.org/10.1007/s41095-023-0364-2 (2023).

Lau, K. W., Po, L. M. & Rehman, Y. A. U. Large separable kernel attention: Rethinking the large kernel attention design in cnn. Expert Syst. Appl. 236, 121352. https://doi.org/10.1016/j.eswa.2023.121352 (2024).

Chen, Z. et al. Dual aggregation transformer for image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) 12312–12321 (2023).

Timofte, R., Agustsson, E., Van Gool, L., Yang, M. H., & Zhang, L. Ntire 2017 challenge on single image super-resolution: Methods and results. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) 114–125 (2017).

Bevilacqua, M., Roumy, A., Guillemot, C., & Alberi-Morel, M. L. Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In British Machine Vision Conference (BMVC) 135.1–135.10. https://doi.org/10.5244/C.26.135 (BMVA Press, 2012).

Zeyde, R., Elad, M., & Protter, M. On single image scale-up using sparse-representations. In International Conference on Curves and Surfaces 711–730. https://doi.org/10.1007/978-3-642-27413-8_47 (2010).

Arbelaez, P., Maire, M., Fowlkes, C. & Malik, J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 33(5), 898–916. https://doi.org/10.1109/TPAMI.2010.161 (2010).

Huang, J. B., Singh, A., & Ahuja, N. Single image super-resolution from transformed self-exemplars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 5197–5206. https://doi.org/10.1109/CVPR.2015.7299156 (2015).

Matsui, Y. et al. Sketch-based manga retrieval using manga109 dataset. Multimed. Tools Appl. 76, 21811–21838. https://doi.org/10.1007/s11042-016-4020-z (2017).

Kingma, D. P., & Ba, J. Adam: A method for stochastic optimization. https://arxiv.org/abs/1412.6980 (2014).

Loshchilov, I., & Hutter, F. Sgdr: Stochastic gradient descent with warm restarts. https://arxiv.org/abs/1608.03983 (2016).

Liu, J., Tang, J., & Wu, G. Residual feature distillation network for lightweight image super-resolution. In Computer Vision–ECCV 2020 Workshops: Glasgow, UK, August 23–28, 2020, Proceedings, Part III. 41–55. https://doi.org/10.1007/978-3-030-67070-2_2 (Springer, 2020).

Liu, J., Zhang, W., Tang, Y., Tang, J., & Wu, G. Residual feature aggregation network for image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2359–2368 (2020).

Ioffe, S., & Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International Conference on Machine Learning. 37, 448–456 (PMLR, 2015).

Wang, Y., Li, Y., Wang, G., & Liu, X. Multi-scale attention network for single image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 5950–5960 (2024).

Choi, H., Lee, J., & Yang, J. N-gram in swin transformers for efficient lightweight image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2071–2081. https://doi.org/10.1109/CVPR52729.2023.00206 (2023).

Acknowledgements

The authors thank the financial support from the Natural Science Foundation of Fujian Province, China (No.2023H0005).

Author information

Contributions

F.H. and H.L. contributed to conceptualization, methodology, software, and writing in the original draft preparation. L.C. analyzed and discussed the feasibility of the experimental design. Y.S reviewed and edited the original document, provided resources, and coordinated the entire project. M.Y. contributed by participating in research background and application demand demonstration and analysis.

Ethics declarations

Competing interests

No conflict of interest exists in the submission of this manuscript, and the manuscript is approved by all authors for publication. We would like to declare on behalf of our co-authors that the work described was original research that has not been published previously, and is not under consideration for publication elsewhere, in whole or in part. All the authors listed have approved the manuscript that is enclosed.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Huang, F., Liu, H., Chen, L. et al. Feature enhanced cascading attention network for lightweight image super-resolution. Sci Rep 15, 2051 (2025). https://doi.org/10.1038/s41598-025-85548-4
