Abstract

Large-scale sparse multi-objective optimization problems are prevalent in numerous real-world scenarios, such as neural network training, sparse regression, pattern mining and critical node detection, where Pareto optimal solutions exhibit sparse characteristics. Ordinary large-scale multi-objective optimization algorithms implement undifferentiated update operations on all decision variables, which reduces search efficiency, so the Pareto solutions obtained by the algorithms fail to meet the sparsity requirements. SparseEA is capable of generating sparse solutions and calculating scores for each decision variable, which serves as a basis for crossover and mutation in subsequent evolutionary process. However, the scores remain unchanged in iterative process, which restricts the sparse optimization ability of the algorithm. To solve the problem, this paper proposes an evolution algorithm with the adaptive genetic operator and dynamic scoring mechanism for large-scale sparse many-objective optimization (SparseEA-AGDS). Within the evolutionary algorithm for large-scale Sparse (SparseEA) framework, the proposed adaptive genetic operator and dynamic scoring mechanism adaptively adjust the probability of cross-mutation operations based on the fluctuating non-dominated layer levels of individuals, concurrently updating the scores of decision variables to encourage superior individuals to gain additional genetic opportunities. Moreover, to augment the algorithm’s capability to handle many-objective problems, a reference point-based environmental selection strategy is incorporated. Comparative experimental results demonstrate that the SparseEA-AGDS algorithm outperforms five other algorithms in terms of convergence and diversity on the SMOP benchmark problem set with many-objective and also yields superior sparse Pareto optimal solutions.

Similar content being viewed by others

Introduction

A multitude of Multi-Objective Optimization Problems (MOPs)1 exist in both real-world and industrial production. When decision variable numbers are hundreds or thousands, such problems are classified as Large-Scale Multi-Objective Optimization Problems (LSMOPs)2. Instances include the optimization of millions of weights in deep neural networks3, unsupervised learning for data clustering4, network node issues in community detection5, vehicle routing problems6, and so on. More and more practical applications of LSMOPs need to be solved. Within these applications, a subset of LSMOPs is characterized by sparse Pareto optimal solutions, i.e., most decision variables of the optimal solutions are zero7. For instance, in community network node detection, nodes within the same community must be densely interconnected, whereas nodes across different communities should be sparsely linked5. In neural network training, a significant number of weights are set to zero to minimize both training error and model complexity8. Similarly, portfolio optimization problem is to maximize return and minimize risks, only a small fraction of all possible investments is selected9. These Large-Scale Sparse Multi-Objective Optimization Problems (LSSMOPs)10 are widespread in practical applications, and their study holds substantial practical significance.

During the past decades, a main focus of evolutionary algorithms has been to solve LSMOPs11,12,13. A number of multi-objective evolutionary algorithms for solving LSMOPs have been developed14,15,16,17, which can be roughly divided into three categories. The first category uses divide-and-conquer strategies18 to divide decision variables into several groups, and then optimizes each group of decision variables alternately. The second category adopts transformation strategies19 to transform the original problems into low-dimensional sub-problems, hence reducing the dimensionality of the decision variable. The last category employs novel search strategies20 to effectively generate offspring solutions. In spite of the effectiveness of these strategies on LSMOPs, they cannot provide good optimization performance for LSMOPs, as they do not consider the sparse characteristic of Pareto optimal solutions21,22,23. In LSSMOPs, only a few key decision variables determine the quality of the solution and the values of remaining decision variables should be zero in Pareto optimal solutions7. Ordinary large-scale multi-objective optimization algorithms implement undifferentiated update operations on all decision variables, which not only wastes computing resources and reduces search efficiency, but also makes the Pareto solutions obtained by the algorithms fail to meet the sparsity requirements, so it is difficult to obtain high-quality solutions.

Recently, some algorithms are especially designed for LSSMOPs. These algorithms can be broadly categorized into two classes11. The first class consists of methods based on prior knowledge. Tian et al.7. introduced SparseEA in 2019, which employs a new initialization strategy and genetic operator to ensure solution’s sparsity base on prior knowledge of decision variables. In 2021, Tan et al.24 proposed MDRSAEA, which obtains prior knowledge of decision variables based on non-dominated sorting of feature selection and utilizes this knowledge to perform multi-stage dimension reduction on the search space. Ding et al.11. proposed MSKEA in 2022, which introduces three different types of sparsity knowledge to estimate the sparse distribution of Pareto optimal solutions, and ultimately maintains a balance between exploration and exploitation. In 2022, Wang et al.25. proposed ST-CCPSO, which uses prior knowledge of decision variables, and employs cumulative gradient values as the criterion for setting decision variables to zero. It ultimately achieves a balance between exploration and exploitation through a competitive particle swarm optimizer. Shao et al.26. proposed NUCEA in 2023, which introduces a non-uniform clustering to divide binary variables into groups of different sizes based on prior knowledge of bi-level encoding of decision variables, so the sparsity of the algorithm could be controlled by optimizing binary variables. Another class of approaches is to mine the sparse distribution of Pareto optimal solutions during the evolution. Tian et al.27. proposed MOEA/PSL in 2020, and two unsupervised neural networks are used to learn the sparse distribution and the compact representation of the decision variables. Additionally, Tian et al.28 introduced PM-MOEA in 2020, which suggests an evolutionary pattern mining approach to mine the sparse distribution of Pareto optimal solutions, and thus, the search space is considerably reduced. In 2021, Wang et al.29 presented the S-ECSO based on the CSO30, where a strongly convex operator is proposed to directly generate sparse solutions by utilizing the sparse distribution of Pareto optimal solutions, enhancing algorithm’s convergence and sparsity. In 2023, Wu et al.31. proposed SGECF, where a sparsity-guided elitism co-evolutionary framework is used to determine the distribution of sparse solutions and guide the generation of offspring.

So far, only a limited number of algorithms have been developed to solve LSSMOPs, and most of the algorithms in the first class of methods adopt the bi-level encoding strategy proposed in SparseEA as their base strategy, such as MDRSAEA, MSKE and NUCE. SparseEA adopts decision variable vector and binary mask vector to represent an individual, where the mask vector is used to control the sparsity of the individual, and the score is calculated for each decision variable. In iterative process of SparseEA, fixed crossover probability and mutation probability are used to perform crossover operation and mutation operation on the decision variable vector, and the crossover operation and mutation operation of mask vector are carried out by using the decision variable score during initialization. It can be seen that crossover probability and mutation probability will affect the update efficiency of the decision variable vector, and the decision variable score will affect the update quality of the mask vector, thus affecting the sparsity of the solution. However, in the process of evolution, individuals in the population will have differences between good and bad, and limited computing resources should be allocated to more promising individuals for crossover and variation. Therefore, the crossover probability, variation probability and decision variable score should change with the advantages and disadvantages of individuals in order to screen out more promising individuals. Otherwise, the invariable crossover probability, mutation probability and decision variable score will seriously restrict the improvement of algorithm performance.

To deal with above issue, this paper proposes an evolution algorithm with adaptive genetic operator and dynamic scoring mechanism for large-scale sparse many-objective optimization (SparseEA-AGDS) by building upon the SparseEA algorithm framework. The principal contributions are as follows:

(1) The adaptive genetic operator is proposed, where the probabilities of crossover and mutation for individuals are updated based on the changes of the non-dominated layer during each iteration. This update grants superior individuals increased chances for genetic operations, thereby aiding in the enhancement of the algorithm’s convergence and diversity.

(2) Establish a dynamic scoring mechanism. In each iteration, based on the changes of individual’s non-dominated layers, the decision variable score is recalculated using a weighted accumulation method. This method increases the chances of crossover and mutation of the superior decision variables, and improves sparsity of Pareto solutions.

It is worth mentioning that the SparseEA-AGDS algorithm has no additional parameter settings, so that the user of the algorithm does not face the difficulty of tuning parameters.

Related works

Definitions in LSSMOPs

Without loss of generality, considering minimization optimization as an example32, MOPs is defined as

where \(\:\varOmega\:\) is the search space and\(\:\:\left\{F\left({\mathbf{X}}_{i}\right)|{\mathbf{X}}_{i}\in\:\varOmega\:\right\}\:\)denotes the attainable objective space. M denotes the number of objective functions, and D denotes the number of the decision variable. In general, MOPs with\(\:\:D\ge\:100\:\)is called LSMOPs. In LSMOPs, if most decision variables of the optimal solutions are zero, then the problem is considered LSSMOPs.

SparseEA algorithm

The SparseEA algorithm is a multi-objective evolutionary algorithm specifically designed for LSSMOPs7, and the core of the SparseEA consists of the population initialization strategy and the genetic operator strategy. The method principle of SparseEA are as follows.

(1) The population initialization strategy is composed of three parts, which are sparse representation of decision variables, calculation of decision variable score and generation of initial population.

Sparse representation of the decision variables

Let \(\:{\mathbf{X}}_{i}\) denote the i-th individual, which is defined as

where\(\:{\:dec}_{d}\:\)denotes the real variable and\(\:\:{mask}_{d}\:\)denotes the binary variable of the d-th dimension of the decision variable. During the evolution,\(\:{\:dec}_{d}\:\)can record the best decision variable found so far, while\(\:{\:mask}_{d}\:\)can record the decision variable that should be set to zero, thereby controlling the sparsity of the solutions. If the decision variables are binary numbers,\(\:{\:dec}_{d}\:\)are always set to 1.

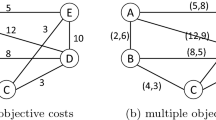

Decision variable score

The score of decision variable is determined by the non-dominated rank of decision variable and reflects the probability that the decision variable should be set to zero. A smaller non-dominated rank corresponds to a lower score, which means the lower probability that this decision variable should be set to zero. To calculate the non-dominated rank of decision variable, matrix G of size\(\:\:D\times\:D\:\)is constructed, where the first D denotes the number of individuals and the second D denotes the decision variable dimension of individuals. The G is obtained by the dot product of matrix dec and matrix mask:

Where dec is a D-dimensional random matrix of real variable, and mask is a D-dimensional identity matrix, the actual computation of G is given by (4).

As can be seen from (4), in each individual\(\:{\:\mathbf{X}}_{i}\:\)in G at this time, only one dimension of the decision variable is non-zero, while the other dimensions are zero. So the non-dominated layer of individual \(\:{\:\mathbf{X}}_{i}\:\)can be used as the non-dominated rank of the decision variable deci, i that is non-zero. Repeat the above operation, and accumulate the non-domination rank of each decision variable. The cumulative result is used as the score of each dimensional decision variable, denoted by Si, and put into the score matrix \(\:\mathbf{S}=[{S}_{1},\cdots\:,{S}_{i},\cdots\:{,S}_{D}]\).

Generation of initial population

In order to generate an initial population of size N, firstly, a random real variable matrix dec and a binary variable matrix mask of size\(\:\:N\times\:D\:\)are generated, with all values of mask being 0. Then, each individual performs binary tournament selection mechanism33 on decision variables according to the score matrix S to identify a quantity of\(\:\:c\times\:D\:\)positions, where c denotes a random number between [0,1]. At these positions, the values in the mask are set to 1. Finally, initial population P is obtained by dec×mask.

(2) In the genetic operator strategy, parent populations Q generate offspring populations O through crossover and mutation operation of dec and mask.

Crossover and mutation operations on Dec

The position index matrix K of the parent population is first generated, with the size of N×D. N row vectors in K correspond to N individuals in the population. Subsequently, a random matrix d with elements ranging from 0 to 1 is generated to select individuals for crossover and mutation operations, with the size of N×1. Finally, fixed crossover probability\(\:\:{P}_{c0}\:\)and mutation probability \(\:{P}_{m0}\:\)are separately set, and each element in d is compared with the \(\:{P}_{c0}\:\)and\(\:{\:P}_{m0}\). If d(i) is less than \(\:{P}_{c0}\:\)or\(\:{\:P}_{m0}\), then crossover or mutation operation is performed on the i-th individual of the population, and the elements in row i of K are either 1 or 0, representing whether decision variable corresponding to that position should perform crossover and mutation operation or not.

Crossover and mutation operations on mask

In the parent population Q, parent individuals who perform crossover and mutation operations are denoted as\(\:\:{Q}_{k1}\)and\(\:{\:Q}_{k2}\), and the offspring individuals are denoted as \(\:{O}_{k1}\)and\(\:{O}_{k2}\),\(\:\:{k}_{1},{k}_{2}\in\:\left[1,N\right]\:\)and\(\:\:{k}_{1}\ne\:{k}_{2}\). During the crossover operation, positions with the same ___location in\(\:{\:Q}_{k1}\:\)and\(\:\:\:{Q}_{k2}\:\)but different logical values are picked out, and two positions are randomly selected from them, which correspond to two decision variable dimensions, respectively. Based on the initialization decision variable scores of these two decision variables dimensions, the binary tournament selection strategy33 is used to select a dimension for crossover operation. During the mutation operation, the initialization decision variable scores of the parent individuals and the binary tournament selection strategy33 are again used to select a decision variable dimension to carry out logical value inversion.

Shortcomings of the SparseEA algorithm

Although the SparseEA algorithm has shown excellent experimental results in LSSMOPs, there are still three aspects that can be further optimized:

(1) In each iteration, the crossover probability and mutation probability of dec remain constant. Whether an individual performs crossover or mutation operation is primarily determined by the random matrix d, which has nothing to do with whether the individual is excellent. Thus, the potential development of outstanding individuals has been affected.

(2) In each round of crossover and mutation operation of mask, the positions for crossover or mutation are selected always based on the initialized decision variable score. However, as the updating of population, the relative merits of decision variables may change, and the score of decision variable should be updated dynamically.

(3)SparseEA employs crowding distance-based environmental selection strategy. When dealing with many-objective optimization problems, the number of non-dominated individuals grows exponentially, which means the selection pressure of Pareto dominance relations drops sharply. This situation makes it difficult to distinguish between the superior and inferior individuals, ultimately leading to a decline in algorithm performance.

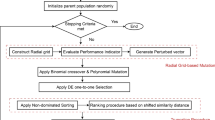

SparseEA-AGDS algorithm

To address several improvable aspects of the SparseEA algorithm, this paper proposes the SparseEA-AGDS algorithm. Adaptive genetic operators strategy and dynamic scoring mechanism are proposed, and reference point-based environmental selection strategy is introduced. The above three strategies improve the ability of the SparseEA-AGDS algorithm to handle large-scale sparse many-objective problems.

Adaptive genetic operator

The adaptive genetic operator strategy is proposed to address the issues during the crossover and mutation process of dec in the SpaeseEA. It dynamically adjusts the crossover probability and mutation probability of individual according to individual’s superiority or inferiority in each round of iteration. This strategy provides more chances for the excellent individuals and improves the convergence and diversity of the algorithm. The following are the specific implementation steps of the adaptive genetic operator strategy, and Algorithm 1 presents the pseudo-code of the strategy.

Calculation of the parent individual’s non-dominant layer

The fast non-dominated sorting approach34 was used to calculate the non-dominated layers of individuals in the parent population, and the non-dominated layer of \(\:{\mathbf{X}}_{i\:}\)is defined as \(\:{r}_{i}\). The better \(\:{\mathbf{X}}_{i}\) corresponds to a smaller \(\:{r}_{i}\).

Calculation of the probability that the parent individual to be selected

According to the \(\:{r}_{i}\), the selected probability for parent individual is calculated by Eq. (5), where \(\:{P}_{s,i}\) denotes the selected probability of \(\:{\mathbf{X}}_{i}\), and maxr denotes the largest non-dominated layer. It can be seen that the better \(\:{\mathbf{X}}_{i}\) is, the smaller \(\:{r}_{i}\) is, and the higher \(\:{P}_{s,i}\) is.

Calculation of adaptive crossover probability and adaptive mutation probability

Adaptive crossover probability \(\:{P}_{c,i}\:\)and adaptive mutation probability \(\:{P}_{m,i}\:\)of\(\:\:{\mathbf{X}}_{i\:}\)are calculated by Eqs. (6) and (7) respectively\(\:.\) The better \(\:{\mathbf{X}}_{i}\:\)corresponds to the higher \(\:\:{P}_{c,i}\:\)and\(\:\:{P}_{m,i}\:\).

Adaptive inheritance to produce offspring

In adaptive inheritance process of dec, the generation and use of logical matrix K and matrix d are the same as that in SparseEA algorithm. The difference is that instead of using fixed probabilities \(\:{P}_{c0}\:\)and\(\:{\:P}_{m0}\), adaptive probabilities \(\:{P}_{c,i}\) and\(\:\:{P}_{m,i}\) are used. So, each individual’s probability of undergoing crossover and mutation operations will be changed according to their respective levels of superiority or inferiority.

From the above, it can be indicated that the more superior the \(\:{\mathbf{X}}_{i},\:\)the higher the probability of undergoing crossover and mutation operations. Take N = 4, D = 6 as an example, Figs. 1 and 2 show the adaptive genetic operator strategy of dec, where the value of \(\:{P}_{C0}\) and \(\:{P}_{m0}\) are randomly set to 0.9 and 0.03, respectively.

Dynamic scoring mechanism

The dynamic scoring mechanism is proposed to address the issues during crossover and mutation process of mask in SpaeseEA. This mechanism updates the decision variable score based on the changes of individual’s non-dominated layers during the iteration process, so as to increase the chances of superior decision variables to perform crossover and mutation operation. The score update of the decision variable is based on two aspects, one is the non-dominated layers of the individual, and the other is which decision variables of this individual are selected. The followings are the specific implementation steps of dynamic scoring mechanism, and Algorithm 2 presents the pseudo-code of it.

Calculation of the non-dominated layer score

The non-dominated layer score\(\:{S}_{i}\_r\) of individual \(\:{\mathbf{X}}_{i\:}\) is calculated by Eq. (8), where \(\:{r}_{i}\) is the non-dominated layer of \(\:{\mathbf{X}}_{i\:}\). The non-dominated layer scores of N individuals form a \(\:N\times\:1\) matrix \(\:\mathbf{S}\_\mathbf{r}={[{S}_{1}\_r,{S}_{2}\_r,\cdots\:,{S}_{N}\_r]}^{\varvec{T}}\). In calculating the non-dominated layer of individuals, we use fast non-dominated sorting method of literature34. If an individual \(\:{\mathbf{X}}_{i\:}\) is not dominated by any other individuals in the population, it is in the first non-dominated layer, corresponding to \(\:{r}_{i}=1\). If an individual \(\:{\mathbf{X}}_{i\:}\) is not dominated by any other individuals in the population other than the individuals in the first non-dominated layer, it is in the second non-dominated layer, corresponding to \(\:{r}_{i}=2\), and so on. The better \(\:{\mathbf{X}}_{i}\:\)is, the smaller \(\:{r}_{i}\) is, and the higher \(\:{S}_{i}\_r\) is.

Calculation of the weighted score for decision variable

The transpose matrix of \(\:\:\mathbf{S}\_\mathbf{r}\)is multiplied with mask to obtain a weighted score matrix sumS with size of\(\:\:1\times\:D\:\). The calculation process is shown in Eq. (9), where\(\:\:sum{S}_{d}=\sum\:_{i=1}^{N}{S}_{i}\_r\times\:{mask}_{i,d}\:\)denotes the weighted score of the d-th dimension decision variable. It can be seen from Eq. (9) that when \(\:\:{mask}_{i,d}\:\) is 0, the result of multiplying\(\:\:{mask}_{i,d}\:\)and \(\:{S}_{i}\_r\)is 0, and only when the value of\(\:\:{mask}_{i,d}\:\)is 1, the non-dominated layer score \(\:{S}_{i}\_r\:\)could affect \(\:sum{S}_{d}\). So, the weighted score is not only related to the \(\:{S}_{i}\_r\), but also related to whether the decision variable in mask is selected or not.

Update of the decision variable score

The score of the d-th dimension decision variable, denoted as \(\:{S}_{d}\), is calculated according to Eq. (10), where maxS is the largest value in sumS. It can be observed that a higher\(\:\:sum{S}_{d}\:\)corresponds to a lower\(\:\:{S}_{d}\).

Inheritance to produce offspring

During genetic operation, unlike SparseEA algorithm, which uses fixed initialization decision variable score, SparseEA-AGDS algorithm adopts updated decision variable score \(\:{S}_{d}\). It can be observed that the better \(\:{\mathbf{X}}_{i}\:\)is, the smaller the ordinal number of non-dominated layer \(\:{r}_{i}\) is, the higher the non-dominated layer score \(\:{S}_{i}\_r\) is, the higher the weighted score \(\:sum{S}_{d}\) is, and the smaller the decision variable score \(\:{S}_{d}\) is. The binary tournament selection strategy33 is used to select a dimension with a smaller updated decision variable score\(\:\:{S}_{d}\:\)for crossover and mutation operation. Take D = 8 as an example, Figs. 3 and 4 show the crossover and mutation process of mask in dynamic scoring mechanisms, where the value of \(\:{\:r}_{i}\:\) is randomly set.

Reference point-based environmental selection strategy

The non-dominated individuals grow exponentially as the number of objective functions increases, and the selection pressure of the Pareto dominance relationship drops sharply. In response, the reference point-based environmental selection strategy35 is introduced into SparseEA-AGDS algorithm to address the issue of high-dimensional convergence difficulties. The following are the specific implementation steps of reference point-based environmental selection strategy.

Generation of reference points and normalization of population

The reference point matrix Z within the hyper-plane is firstly constructed based on the scale of the initial population N and the number of objective functions M. Subsequently, the population individuals and reference points undergo normalization.

Associate individuals with reference lines

Reference line of each reference point is calculated based on Z. Then, the distance between individual and its nearest reference line is calculated, thus each individual is associated with its nearest reference line.

Generation of parent population

Individuals in the non-criticality non-dominated layer are placed into population Q, firstly. Then, in the criticality non-dominated layer \(\:{r}_{c}\), in order of the number of individuals associated with each reference line, from least to most, the reference line is selected and its individuals are placed into population Q, until Q is full of N individuals.

SparseEA-AGDS algorithm

As previously mentioned, the SparseEA-AGDS algorithm is proposed within the SparseEA algorithm framework and consists of three strategies, which are adaptive genetic operator, dynamic scoring mechanism and reference point-based environmental selection strategy. Algorithm 3 provides the pseudo-code for the SparseEA-AGDS.

Computation complexity analysis

Time complexity analysis

Assuming the population size is N, the dimension of individual decision variables is D, the number of objectives is M, and the number of iterations is \(\:{\gamma\:}_{iter}\).

Consider the complexity of one iteration of the entire SparseEA algorithm, the basic operations and their worst-case complexities are as follows.

-

(1)

initializing decision variable scores is \(\:{\rm\:O}\left({D}^{2}\right)\);

-

(2)

fast non-dominated sorting is \(\:{\rm\:O}\left({N}^{2}M\right)\)[34];

-

(3)

population initialization is \(\:{\rm\:O}\left(ND\right)\);

-

(4)

crossover operation of dec is \(\:{\rm\:O}\left(ND\right)\);

-

(5)

mutation operation of dec is \(\:{\rm\:O}\left(ND\right)\);

-

(6)

crossover operation of mask is \(\:{\rm\:O}\left(ND\right)\);

-

(7)

mutation operation of mask is \(\:{\rm\:O}\left(ND\right)\);

-

(8)

crowding distance-based environmental selection strategy is \(\:{\rm\:O}\left({N}^{2}M\right)\)34.

The population undergoing \(\:{\gamma\:}_{iter}\) iterations, and the overall complexity of the SparseEA algorithm is \(\:max\left\{{\rm\:O}\right({D}^{2}),\:{\rm\:O}(K{N}^{2}M),\:{\rm\:O}(KND\left)\right\}\).

Consider the complexity of one iteration of the entire SparseEA-AGDS algorithm, the basic operations and their worst-case complexities are as follows.

-

(1)

initializing decision variable scores is \(\:{\rm\:O}\left({D}^{2}\right)\);

-

(2)

fast non-dominated sorting is \(\:{\rm\:O}\left({N}^{2}M\right)\);

-

(3)

population initialization is \(\:{\rm\:O}\left(ND\right)\);

-

(4)

calculation of adaptive crossover probability and mutation probability is \(\:{\rm\:O}\left(N\right)\);

-

(5)

crossover operation of dec is \(\:{\rm\:O}\left(ND\right)\);

-

(6)

mutation operation of dec is \(\:{\rm\:O}\left(ND\right)\);

-

(7)

calculation of dynamic scores of decision variables is \(\:{\rm\:O}\left(ND\right)\);

-

(8)

crossover operation of mask is \(\:{\rm\:O}\left(ND\right)\);

-

(9)

mutation operation of mask is \(\:{\rm\:O}\left(ND\right)\);

-

(10)

reference point-based environmental selection strategy is \(\:\text{m}\text{a}\text{x}\left\{{\rm\:O}\right({N}^{2}M),\:{\rm\:O}({N}^{2}{log}^{M-2}N\left)\:\right\}\)35.

The population undergoing \(\:{\gamma\:}_{iter}\) iterations, and the overall complexity of the SparseEA-AGDS algorithm is \(\:\text{m}\text{a}\text{x}\left\{{\rm\:O}\right({D}^{2}),\:{\rm\:O}(K{N}^{2}{log}^{M-2}N),\:{\rm\:O}(K{N}^{2}M),\:{\rm\:O}(KND\left)\right\}\).

Space complexity analysis

Assuming the population size is N, the dimension of individual decision variables is D, the number of objectives is M, and the number of iterations is \(\:{\gamma\:}_{iter}\).

Consider the space complexity of the entire SparseEA and SparseEA-AGDS algorithm, the basic operations and their worst-case complexities are the same, as follows.

-

(1)

population space is \(\:{\rm\:O}\left(N\right(D+M\left)\right)\);

-

(2)

space of decision variable scoring table is \(\:{\rm\:O}\left({D}^{2}\right)\).

In summary, the overall space complexity of the algorithm is \(\:\text{m}\text{a}\text{x}\left\{{\rm\:O}\right({D}^{2}),\:{\rm\:O}(N(D+M)\left)\right\}\).

Experimental results and analysis

In this section, the experimental settings are firstly introduced. Subsequently, performance verification experiments of the adaptive genetic operator, dynamic scoring mechanism and reference point-based environmental selection strategy are conducted separately. Finally, the proposed SparseEA-AGDS algorithm has been tested on SMOP test suite, comparing against representative algorithms, i.e. NSGA-III35, LMOCSO36, MOEA-PSL27, PM-MOEA28, SparseEA7, NUCEA26 and SGECF31.

Experimental settings

(1) Experimental environment: All experiments are carried out on a PC with AMD i5-8250U 1.6 GHz CPU, 64GB RAM, Windows 10. All the experiments are conducted on the evolutionary multi-objective optimization platform PlatEMO37.

(2) Benchmark problems: The SMOP test suite that proposed by Tian et al.7. was utilized, and the parameters settings of the benchmark problems are as shown in Table 1, where maxFE denotes the maximum number of function evaluations.

(3) Parameter Settings of Compared algorithms: The parameters of all compared algorithms are consistent with the optimal parameter settings in the original articles of them, as shown in Table 2. For algorithms that use simulated binary crossover and polynomial variation methods, \(\:{p}_{c0}\) and \(\:{p}_{m0}\) denote fixed crossover probability and fixed mutation probability, and \(\:\eta\:\) denotes the distribution indices of crossover and mutation.

(4) Evaluation metrics: Since the true PF of all benchmark problems are known, the inverted generational distance (IGD)40 is adopted to measure the quality of solution. IGD is calculated with 10,000 points that are uniformly sampled on the true Pareto front. For each algorithm on each problem, the mean value and standard deviation of solutions gained by 30 independent runs are as evaluation indicators, and the standard deviation is recorded in parentheses. The Wilcoxon rank-sum test41 was used to perform statistical analysis, with a significance level of 0.05. In all tables, “+”, “-” and “=” indicate that the result of compared algorithm is better than, worse than, or approximately equal to the SparseEA-AGDS, respectively. IGD is calculated by Eq. (11).

where H denotes the point set uniformly sampled on the real PF. POP denotes the Pareto solution set obtained by algorithm, and \(\:{pop}_{i}\) is a solution in the POP. The \(\:dis({pop}_{i},H)\) denotes the minimum Euclidean distance between \(\:{pop}_{i}\) and each individual in H.

Ablation study

Effectiveness of the adaptive genetic operator

To verify the effect of adaptive genetic operator on the performance of algorithm, the strategy is applied to the SparseEA algorithm to form SparseEA-AG, which is companied with SparseEA. Under the same initial population conditions, comparison experiments are conducted on SMOP benchmark problems with M = 2, 3 and D = 100. The IGD values obtained from the experiments are presented in Table 3, where the best results are highlighted. The percentage of non-zero decision variables is illustrated in Fig. 5. The following conclusions can be drawn from Table 3; Fig. 5.

(1) In Table 3, according to the IGD results, SparseEA-AG achieves the best IGD values on 11 of 16 problems, and for the statistical results of the Wilcoxon rank-sum test, SparseEA-AG outperforms the SparseEA on 10 problems and is inferior to the SparseEA on 4 problems. It is apparent that adaptive genetic operator plays a positive role in enhancing the algorithm’s convergence and diversity.

(2) From Fig. 5, the percentage of non-zero decision variables of SparseEA-AG surpass the SparseEA on SMOP2, SMOP6 and SMOP8 problems when M = 2. And SparseEA-AG outperforms the SparseEA on 6 of 8 problems when M = 3. It can be seen that the SparseEA-AG algorithm obtains Pareto optimal solution with better sparsity when dealing with many-objective problems.

In summary, adaptive genetic operator has a significant contribution on improving the convergence and diversity of algorithm, and sparsity of Pareto solutions for many-objective problems.

Effectiveness of dynamic scoring mechanism

To verify the effect of dynamic scoring mechanism on the performance of algorithm, the strategy is applied to the SparseEA algorithm to form SparseEA-DS, which is comparied with SparseEA. Under the same initial population conditions, comparison experiments are conducted on SMOP benchmark problems with M = 2, 3 and D = 100, 500, 1000. The IGD values obtained from the experiments are presented in Table 4. The percentage of non-zero decision variables is illustrated in Fig. 6 and statistics of wins on this percentage is presented in Table 5. The following conclusions can be drawn from Table 4; Fig. 6; Table 5.

(1) In Table 4, according to the IGD results, SparseEA-DS achieves the best IGD values on 26 of 48 problems, and especially surpasses the SparseEA algorithm on SMOP4, SMOP5 and SMOP6 problems which are similar to the feature selection problem. For the statistical results of Wilcoxon rank-sum test, SparseEA-DS outperforms the SparseEA on 8 problems, which are all situated on SMOP4, SMOP5 and SMOP6. And the performance of SparseEA-DS is similar to that of SparseEA on 31 problems. It can be seen that the SparseEA-DS algorithm can show better diversity and convergence in dealing with problems similar to feature selection, but has a limited effect on improving IGD.

(2) It can be seen from the results in Fig. 6; Table 5 that the SparseEA-DS wins more times on the percentage of non-zero decision variables with M = 3 when compared to that with M = 2 at any dimension D, and also wins more times than SparseEA. This indicates that the SparseEA-DS algorithm can obtain Pareto optimal solution with better sparsity in high-dimensional problems.

Based on the aforementioned discussions, dynamic scoring mechanism can show good convergence and diversity in dealing with problems similar to feature selection, and obtains better sparse Pareto optimal solutions when facing large-scale many-objective problems.

Effectiveness of reference point-based environmental selection strategy

To verify the effect of the reference point-based environmental selection strategy on the performance of the algorithm, the strategy is applied to the SparseEA algorithm to form SparseEA-RP, which is comparied with SparseEA. Under the same initial population conditions, comparison experiments are conducted on SMOP benchmark problems with M = 3, 5, 10 and D = 100, 500, 1000. The IGD values obtained from the experiments are presented in Table 6 and statistics of wins is presented in Table 7. The percentage of non-zero decision variables is illustrated in Fig. 7. The following conclusions can be drawn from Tables 6 and 7; Fig. 7.

(1) In Table 6, according to the IGD results, SparseEA-RP achieves the best IGD values on 54 of 72 problems, which is approximately 3/4 of the total problems. For the statistical results of Wilcoxon rank-sum test, SparseEA-RP surpasses the SparseEA on 50 problems. It is apparent that the SparseEA-RP can indicate good convergence and diversity.

(2) In Table 7, when D increased from 100 to 1000 under M = 3,10 conditions, the wins of SparseEA-RP are all significantly better than the SparseEA. However, as the dimension of decision variables increases under M = 5 conditions, the performance decline of SparseEA-RP is pronounced. It can be seen that the performance of the SparseEA-RP algorithm is easily influenced by the dimension of the decision variables, and an increase of dimension leads to a decrease of IGD values.

(3) From Fig. 7, the SparseEA-RP achieves better percentage of non-zero decision variables on 3 problems with M = 3. While it achieves better percentage of non-zero decision variables on all problems with M = 5, and achieves better percentage of non-zero decision variables on 7 problems with M = 10. It is apparent that the SparseEA-RP algorithm significantly enhances the sparsity of Pareto optimal solutions in large-scale many-objective sparse problems.

To conclude, although the IGD of SparseEA-RP is easily affected by the dimension of decision variables, the SparseEA-RP effectively resists the potential impact of increasing the dimension of the objective on the algorithm, making algorithm suitable for many-objective optimization problems. And the SparseEA-RP has better convergence and diversity when compared to the SparseEA on the statistical results of Wilcoxon rank-sum test. In addition, SparseEA-RP has a striking contribution to the sparsity of Pareto solutions when the dimension of M and D are large.

Computational efficiency of SparseEA-AGDS

To verify the efficiency of the proposed algorithm, SparseEA-AGDS is compared with seven representative algorithms, i.e., NSGA-III35, LMOCSO36、MOEA/PSL27, PM-MOEA28, SparseEA7, NUCEA26 and SGECF31. The comparison experiments are conducted on SMOP benchmark problems with M = 3, 5, 8, 10, 15 and D = 100, 500, 1000, respectively. The IGD values obtained from the experiments are presented in Table 8, the histogram of IGD values is illustrated in Fig. 8 and statistics of wins on IGD values is presented in Table 9. The percentage of non-zero decision variables is illustrated in Fig. 9 and statistics of wins on this percentage is presented in Table 10. Since the eight benchmark problems, SMOP1–SMOP8, have three different frontiers, i.e., SMOP1-SMOP3 are linear, SMOP4-SMOP6 are concave, and SMOP7-SMOP8 are convex, three benchmark problems are randomly selected from three different frontiers to intuitively compare algorithms’ Pareto front with the true Pareto front. Taking SMOP2, SMOP4, and SMOP8 as examples, the Pareto front of eight algorithms on them with M = 3 and D = 100, 1000 are illustrated in Fig. 10. Since neither NSGA-III and LMOCSO are specific algorithms for handling LSSMOPs, they do not participate in comparing the runtime of the algorithms. The statistics of wins on runtime for the MOEA / PSL, PM-MOEA, SparseEA, NUCEA, SGECF and SparseEA-AGDS algorithm are shown in Table 11. The following conclusions can be drawn from Table 8; Fig. 8; Table 9; Fig. 9; Table 10; Fig. 10; Table 11.

(1) In Table 8; Fig. 8, and Table 9, according to the statistical results of Wilcoxon rank-sum test, SparseEA-AGDS surpasses the LMOCSO on 83 of 120 problems, and surpasses the NUCEA on 78 problems, and surpasses the PM-MOEA on 73 problems, and surpasses the SparseEA, NSGA-III, SGECF and MOEA/PSL on 72, 71, 65, 55 problems, separately. For the histogram of IGD values and the statistics of wins on IGD values, SparseEA-AGDS achieves the best IGD values on 41 problems, and NSGA-III, LMOCSO, MOEA/PSL, PM-MOEA, SparseEA, NUCEA and SGECF achieves the best IGD values on 26, 2, 15, 6, 4, 3, 23 problems, separately. It is apparent that the SparseEA-AGDS can indicate good convergence and diversity.

(2) In Fig. 9; Table 10, under M = 3 conditions, the SparseEA-AGDS algorithm’s wins on percentage of non-zero decision variables are less than the SparseEA. But all of the wins of SparseEA-AGDS surpass other seven algorithms when M = 5, 8, 10 and 15. It can be seen that the SparseEA-AGDS can obtain Pareto optimal solutions with better sparsity, especially when dealing with many-objective large-scale sparse optimization problem.

(3) In Fig. 10, with M = 3 and D = 100, the SparseEA-AGDS algorithm can better converge to the true Pareto front and has a more uniform distribution when compared to the other seven algorithms in SMOP2 and SMOP4 problems. And with M = 3 and D = 1000, SparseEA-AGDS shows the best convergence and uniformity in three problems when compared to the other seven algorithms. It can be seen that the SparseEA-AGDS algorithm can promote the convergence and uniformity of Pareto front.

(4) In Table 11, according to the statistical results of runtime Wilcoxon rank-sum test, although SparseEA-AGDS is inferior to SparseEA, it is far better than the other four comparison algorithms. The SparseEA-AGDS algorithm surpasses MOEA/PSL on 119 of 120 problems, surpasses PM-MOEA on all 120 problems, and surpasses the NUCEA and SGECF on 106, 101 problems, respectively. Due to the calculation of adaptive genetic operator and dynamic evaluation of decision variable scores, that the runtime of SparseEA-AGDS is longer than that of SparseEA. However, in the percentage of non-zero decision variables, SparseEA-AGDS outperformed SparseEA on 86 of 120 problems, and surpasses SparseEA on 72 problems in IGD values. The SparseEA-AGDS algorithm achieved superior performance of sparse optimization by the increased runtime.

To sum up, when dealing with many-objective large-scale sparse optimization problems, the SparseEA-AGDS algorithm can promote the convergence and uniformity of the Pareto front, and also can gain Pareto solutions with better sparsity.

Conclusions

Many researchers utilize multi-objective evolutionary algorithms to solves real-world optimization problems42. However, the performance of multi-objective evolutionary algorithms is often not satisfactory when solving large-scale sparse many-objective optimization problems. Consequently, this paper proposes SparseEA-AGDS algorithm based on the SparseEA algorithm framework. The SparseEA-AGDS algorithm introduces adaptive genetic operators and dynamic scoring mechanism to dynamically updates the crossover probability, mutation probability, and decision variable scores based on the quality of individuals, thereby increasing the chances for superior individuals to undergo crossover and mutation operation. Additionally, SparseEA-AGDS introduces the reference point-based environmental selection strategy to improve the algorithm’s ability to handle many-objective optimization problems.

The results of the ablation study indicate that, the SparseEA-AG has positively contributed in enhancing the diversity and convergence of the algorithm. The SparseEA-DS also can show good convergence and diversity in dealing with problems similar to feature selection, and obtain better sparse Pareto optimal solutions. The SparseEA-RP effectively resists the potential impact of increasing the dimension of the objective on the algorithm, making algorithm suitable for many-objective optimization problems. In summary, the SparseEA-AGDS algorithm can promote the convergence and diversity of the algorithm when dealing with many-objective large-scale sparse optimization problems, and also can obtain better sparse Pareto solutions.

Data availability

The data presented in this study are available on request from corresponding author.

References

Pasha, L. et al. Exact and metaheuristic algorithms for the vehicle routing problem with a factory-in-a-box in multi-objective settings. Adv. Eng. Inform. 52, 101623 (2022).

Omidvar, M. N., Li, X., Mei, Y. & Yao, X. Cooperative co-evolution with differential grouping for large scale optimization. IEEE Trans. Evol. Comput. 18(3), 378–393 (2014).

Yao, X. A review of evolutionary artificial neural networks. Int. J. Intell. Syst. 8(4), 419–440 (1993).

Anil, K., Jain, M., Murty, N. & Flynn, P. J. Data clustering: A review. ACM Comput. Surveys. 31(3), 264–323 (1999).

Cai, Q., Gong, M., Ma, L. & Jiao, J. A survey on network community detection based on evolutionary computation. Int. J. Bio-Inspired Comput. 6(5), 279–291 (2014).

Marinakis, Y. & Marinaki, M. A particle swarm optimization algorithm with path relinking for the ___location routing problem. J. Math. Modelling Algorithms. 7(1), 59–78 (2008).

Tian, Y., Zhang, X., Wang, C. & Jin, Y. An evolutionary algorithm for large-scale sparse multi-objective optimization problems. IEEE Trans. Evol. Comput. 24(2), 380–393 (2020).

Jin, Y. & Sendhoff, B. Pareto-based multi-objective machine learning: an overview and case studies. IEEE Trans. Syst. Man. Cybernetics Part. C: Appl. Reviews. 38(3), 401–410 (2008).

Lwin, K. & Qu, R. A learning-guided multi-objective evolutionary algorithm for constrained portfolio optimization. Appl. Soft Comput. 14(12), 1155–1169 (2014).

Tian, Y., Zhang, X. & Jin, Y. An improved large-scale sparse multi-objective evolutionary algorithm using unsupervised neural network. IEEE Trans. Cybernetics. 50(1), 1–14 (2020).

Tian, Y. et al. Evolutionary large-scale multi-objective optimization: A survey. ACM Comput. Surveys. 54(8), 1–34 (2021).

Shao, S. et al. A permutation group-based evolutionary algorithm for car sequencing problems in assembly lines, International Conference on Data-driven Optimization of Complex Systems. 1–8 (2023).

Yin, F. & Cao, B. A two-space-decomposition-based evolutionary algorithm for large-scale multi-objective optimization. Swarm Evol. Comput. 101397, 101397 (2023).

Fan, W. et al. Two-stage distribution-ally robust optimization model of integrated energy system group considering energy sharing and carbon transfer. Appl. Energy. 331, 120426 (2023).

Si, L. et al. Linear subspace surrogate modeling for large-scale expensive single/multi-objective optimization. IEEE Transactions on Evolutionary Computation(2023).

Huang, C. et al. Co-evolutionary competitive swarm optimizer with three-phase for large-scale complex optimization problem. Inf. Sci. 619, 2–18 (2023).

Tian, Y. et al. Integrating conjugate gradients into evolutionary algorithms for large-scale continuous multi-objective optimization. IEEE/CAA J. Automatica Sinica. 9(10), 1801–1817 (2022).

Liu, S., Lin, Q., Tian, Y. & Tan, K. C. A variable importance-based differential evolution for large-scale multi-objective optimization. IEEE Trans. Cybernetics. 52(12), 13048–13062 (2021).

Feng, Y., Feng, L., Kwong, S. & Tan, K. C. A multi-variation multi-factorial evolutionary algorithm for large-scale multi-objective optimization. IEEE Trans. Evol. Comput. 26(2), 248–262 (2021).

Lin, Q. et al. An adaptive two-stage evolutionary algorithm for large-scale continuous multi-objective optimization. Swarm Evol. Comput. 77, 101235 (2023).

Ding, Z., Chen, L., Sun, D. & Zhang, X. A multi-stage knowledge-guided evolutionary algorithm for large-scale sparse multi-objective optimization problems. Swarm Evol. Comput. 73, 101119 (2022).

Smith, J. & Brown, A. An evolutionary algorithm based on rank-1 approximation for sparse multi-objective optimization. Springer 56(6), 1581–1586 (2023).

Wang, H., Fang, D. G. & Chow, Y. L. An evolutionary algorithm for large-scale sparse multi-objective optimization problems. Proceedings of the Genetic and Evolutionary Computation Conference Companion. 1561–1568 (2019).

Tan, Z., Wang, H. & Liu, S. Multi-stage dimension reduction for expensive sparse multi-objective optimization problems. Neurocomputing 440, 159–174 (2021).

Ye, Q. et al. A cluster-based competitive particle swarm optimizer with a sparse Truncation operator for multi-objective optimization. Swarm Evol. Comput. 71, 101083 (2022).

Shao, S., Tian, Y. & Zhang, X. A non-uniform clustering based evolutionary algorithm for solving large-scale sparse multi-objective optimization problems, Bio-Inspired Computing: Theories and Applications, Communications in Computer and Information Science. 103–116 (2024).

Tian, Y., Lu, C., Zhang, X. & Cheng, F. Solving Large-Scale Multi-Objective optimization problems with sparse optimal solutions via unsupervised neural networks. IEEE Trans. Cybernetics. 51(6), 3115–3128 (2021).

Tian, Y., Lu, C., Zhang, X., Cheng, F. & Jin, Y. A pattern mining-based evolutionary algorithm for large-scale sparse multi-objective optimization problems. IEEE Trans. Cybernetics. 52(7), 6784–6797 (2022).

Wang, X., Zhang, K., Wang, J. & Jin, Y. An enhanced competitive swarm optimizer with strongly convex sparse operator for large-scale multi-objective optimization. IEEE Trans. Evol. Comput. 26, 859–871 (2022).

Cheng, R. & Jin, Y. A competitive swarm optimizer for large scale optimization. IEEE Trans. Cybernetics. 45(2), 191–204 (2014).

Wu, C., Tian, Y., Zhang, Y., Jiang, H. & Zhang, X. A sparsity-guided elitism co-evolutionary framework for sparse large-scale multi-objective optimization. Proceedings of the IEEE Congress on Evolutionary Computation 1–8 (2023).

Li, W., Zhang, T., Wang, R., Huang, S. & Liang, J. Multi-modal Multi-objective optimization: comparative study of the State-of-the-Art. Swarm Evol. Comput. 82, 101253 (2023).

Goldberg, D. E. & Deb, K. A comparative analysis of selection schemes used in genetic algorithms. Elsevier 1, 69–93 (1991).

Deb, K., Agrawal, S., Pratap, A. & Meyarivan, T. A fast and elitist multi-objective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 6(2), 182–197 (2002).

Deb, K. & Jain, H. An evolutionary many-objective optimization algorithm using reference-point-based non-dominated sorting approach, part i: solving problems with box constraints. IEEE Trans. Evol. Comput. 18, 577–601 (2014).

Tian, Y., Zhang, X., Li, H. & Zhang, X. Efficient large-scale multi-objective optimization based on a competitive swarm optimizer. IEEE Trans. Cybernetics 50(8), 3696–3708 (2019).

Tian, Y., Cheng, R., Zhang, X. & Jin, Y. PlatEMO: A MATLAB platform for evolutionary multi-objective optimization. IEEE Comput. Intell. Mag. 124), 73–87 (2017).

Zhang, X., Zheng, X., Cheng, R., Qiu, J. & Jin, Y. A competitive mechanism based multi-objective particle swarm optimizer with fast convergence. Inf. Sci. 415, 28–44 (2018).

Khan, W. & Zhang, Q. MOEA/D-DRA with two crossover operators. UK Workshop on Computational Intelligence (UKCI). 1–6 (2010).

Ishibuchi, H., Masuyama, N. & Nojima, Y. Modified distance calculation in generational distance and inverted generational distance. Lect Notes Comput. Sci. 319, 15892–15908 (2015).

Wilson, P. Extension of the Wilcoxon rank sum test for complex sample survey data. J. R. Stat. Soc. 61(4), 653–707 (2012).

Li, F., Shang, Z., Shen, H., Liu, Y. & Huang, P. Q. Combining modified inverted generational distance indicator with reference-vector-guided selection for many-objective optimization. Appl. Intell. 53, 12149–12162 (2023).

Funding

This work was supported by the Basic research project of Science and Technology Department of Yunnan Province (Grant Number 202201AT070021), Open Subject of Yunnan Key Laboratory of Unmanned Autonomous Systems (Grant Number 202408YB07), and the National Natural Science Foundation of China (Grant Number 62161052 and 61963038).

Author information

Authors and Affiliations

Contributions

W. Z. wrote a manuscript; X. W. obtained funding sources; Z. D. is responsible for data analysis; J. T. and Y. F. are responsible for proofreading the manuscript. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, X., Zhao, W., Tang, JN. et al. Evolution algorithm with adaptive genetic operator and dynamic scoring mechanism for large-scale sparse many-objective optimization. Sci Rep 15, 9267 (2025). https://doi.org/10.1038/s41598-025-91245-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-91245-z