Abstract

The agricultural industry is undergoing revolutionary changes driven by recent advances in artificial intelligence and deep learning-based technologies. These tools are being used for a variety of tasks, including crop yield estimation, crop maturity assessment, and disease detection. The cotton crop is an essential source of revenue for many countries, which highlights the need to protect it from diseases that can drastically reduce yields. Early and accurate disease detection is crucial for preventing economic losses in the agricultural sector. Deep learning algorithms have enabled researchers to develop innovative disease detection approaches that can help safeguard the cotton crop and promote economic growth. This study evaluates several state-of-the-art deep learning models for disease recognition, including VGG16, DenseNet, EfficientNet, InceptionV3, MobileNet, NasNet, and ResNet models. For this purpose, real cotton disease data are collected from fields and preprocessed using well-known techniques before being used as input to the deep learning models. Experimental analysis reveals that the ResNet152 model outperforms all other deep learning models, making it a practical and efficient approach for cotton disease recognition. By harnessing deep learning and artificial intelligence, we can help protect the cotton crop and support a prosperous future for the agricultural sector.


Introduction

South Asia is home to about half of the world’s malnourished population, and agriculture is the primary source of livelihood in the region1. These countries are known for their agricultural exports, which make up a significant portion of their gross domestic product (GDP). The region’s fertile soil allows high crop yields, which have led to surplus production and exports for over 50 years. These exports benefit farmers and cotton-related sectors and strengthen the national economy, thereby increasing GDP2. Therefore, to achieve economic stability and maximize crop revenue, it is crucial to obtain yields of both high quality and high quantity3. However, achieving better yields is difficult when pests, weeds, and animals attack the crops, and despite current practices, the resulting crop losses remain substantial. Between 2001 and 2003, for example, losses of up to 50% were reported in 19 regions for wheat, over 80% for cotton, and 26-29% for soybeans. These numbers are expected to increase in the near future2.

Pakistan is a developing country with a total area of 796,095 km\(^2\), of which 24.44% is arable land. Agriculture is the most significant contributor to Pakistan’s economy, accounting for approximately 19% of total GDP, and the sector has the potential to increase this share4. However, climate change, pest attacks, and water shortages have hindered agricultural growth. Pakistan has two cropping seasons. The first season starts in April and continues until December; crops such as cotton, maize, green gram, mash, barley, sorghum, rice, and sugarcane are grown in this season. The second season runs from December to April and yields crops such as wheat, gram, lentil, and barley. Pakistan’s primary crops include cotton, sugarcane, rice, wheat, and maize.

Cotton is particularly important for Pakistan’s economy, accounting for 55% of the country’s total foreign exchange earnings and contributing 10% to GDP5. Pakistan is the fifth-largest cotton producer in the world, after China, India, the United States, and Brazil6. Cotton is primarily grown in the provinces of Sindh and Punjab, which together produce approximately 75% of Pakistan’s annual cotton crop. The crop is sown in March and April in Sindh and from May to August in Punjab7. There are 59 cotton varieties, each suited to different environmental conditions, planting times, recommended areas, and yield potential6. However, all of these varieties face problems such as disease attacks, which require diligent care and timely spraying to prevent significant crop losses. Figure 1 shows cotton production in recent years6.

An overview of cotton production for 2016 to 2021 in Pakistan6.

Cotton is among the most vulnerable crops and is attacked by numerous pests and diseases that significantly affect its yield and quality. Diseases and pests that affect cotton crops include wilt, rust, anthracnose, sore shin, minor boll rots, mealybug, and thrips8. Early detection of these problems is crucial for obtaining a high-quality yield, and effective management practices are essential to control their spread. The cotton mealybug is a notorious sucking pest that feeds on the cell sap of cotton leaves, fruit, and twigs. This results in stunted growth and delayed opening of bolls, which can severely affect the yield. Mealybugs also excrete a sweet substance (honeydew) that attracts ant colonies and encourages the growth of black sooty mold9. Thrips are small insects, barely visible to the naked eye, that can destroy cotton seedlings on a large scale. With their pointed mouthparts, they pierce tender leaves and terminal buds and feed on the plant juices. As a result, leaves become twisted and curl upward, develop a silvery tint, or turn brown at the edges. Light thrips infestations slow the growth and maturation of plants, whereas heavy infestations can kill terminal buds or even whole plants. Damaged terminal buds can also lead to abnormal branching patterns. Season and ___location have a significant impact on the duration and severity of thrips infestations9.

Anthracnose is another common cotton disease that affects both leaves and fruits. It is caused by fungi of the plant-pathogenic genus Colletotrichum, which produce dark, water-soaked lesions on both fruits and leaves10. Blight is another disease that damages various fruits and crops, including cotton. Symptoms of blight infection include yellowing and drying of leaves; the disease is caused by the fungus Helminthosporium turcicum Pass., which can severely damage cotton crops and render them useless11.

Deep learning (DL) has achieved remarkable success in numerous fields, such as disease diagnosis in healthcare12,13, self-driving vehicles14, network security15, natural language processing16, and agriculture17. In the agricultural ___domain in particular, DL has been applied to crop yield prediction18, classification of aerial imagery19, agricultural field monitoring20, stress identification in plants21, and infected leaf detection22,23. These advances illustrate the potential of DL and motivate its use in the present study24.

Cotton is an important cash crop, and its diseases significantly reduce yield. Detecting diseases at an early stage allows farmers to take preemptive measures that help improve cotton yields. DL-based image recognition can automate this detection, saving time and resources. These factors motivate researchers to work on cotton diseases and are the main motivation behind this study as well. This research focuses on the accurate and timely detection of diseases in cotton crops. Its main contributions are as follows.

- Collecting real-world data on four diseases found in cotton crops: ‘mealybug’, ‘anthracnose’, ‘thrips’, and ‘blight’.

- Evaluating deep learning models, particularly convolutional neural network (CNN) variants, for their ability to detect diseases from images.

- Preprocessing the image data through steps such as color conversion and noise removal to improve model training.

- Comparing the results of the models with respect to accuracy, sensitivity, and specificity for detecting cotton crop diseases, so that a robust and efficient approach with the best detection accuracy can be identified.

The rest of this paper is organized as follows. Section "Literature review" provides an overview of AI and DL models and of related work. Section "Methodology" describes the experimental methodology applied in this paper. Section "Experimental results" presents the details of the experiments and their results. Finally, Section "Conclusions" concludes the paper.

Literature review

As technology advances, experiments lead to new inventions, and these inventions are applied in new fields. One such technology is artificial intelligence (AI), which has significantly impacted various domains, including agriculture25. Through machine learning and DL, AI has enabled a shift from hard-coded (non-evolving) solutions to automated mechanisms for a range of tasks. For example, data mining is an effective technique for identifying unique patterns across large volumes of resources, pages, links, and more26. During the COVID-19 pandemic and beyond, researchers have used software engineering techniques and DL to enable remote learning, detect COVID-19 from X-ray images, and analyze the sentiment of COVID-19 tweets27,28,29. The problem addressed in30 is the detection of cotton plant diseases so that healthy, disease-free crops can be produced. The authors use deep transfer learning, specifically combining the advantages of ResNet pre-trained on ImageNet with the Xception component, to recognize cotton leaf diseases. In31, disease detection in cotton plants is performed using DL techniques, more specifically deep CNNs: a model pre-trained on large generic datasets is fine-tuned for the specific task using the authors’ own data. According to the experimental findings, ResNet-50 achieved training and validation accuracies of 0.95 and 0.98, respectively, with a training loss of 0.33 and a validation loss of 0.5.

In agriculture, AI combined with advanced computer vision and DL algorithms is highly beneficial for tasks such as detecting fruit and crop maturity and detecting and classifying diseases, based on feature extraction32 and other techniques33. Disease detection has been a major focus of agricultural research, and many methods have been employed to achieve the most accurate results possible. Using photographs of cotton leaf spots infected with various diseases, researchers have built sophisticated computing systems and applied pattern recognition techniques to improve disease detection accuracy33,34.

DL has revolutionized how plant diseases are detected and classified using computer-based techniques35. Owing to its practical applications, it has been widely adopted across a variety of industries and continues to grow in popularity36. DL learns a mapping from inputs to outputs directly from data, which makes it well suited to automated disease detection and classification. In recent years, DL has been used effectively for rice disease recognition37, wheat disease detection and classification38, maize disease detection34,39, tomato disease detection40, soybean disease detection41, and oilseed rape pest detection42, as well as in other domains such as brain tumor detection and classification43, medical image steganalysis44, and transportation management45. CNNs are the most commonly used DL models46. In a CNN, as in any neural network, each node is a mathematical function that takes numerical inputs from its incoming edges, processes them, and outputs a numerical result. Training adjusts these functions so that the network’s predictions come as close as possible to the actual labels; the fraction of correct predictions is the model’s accuracy. DL has also been used for tasks such as traffic prediction47, object recognition48, natural language processing49, and autonomous weed management with unmanned aerial vehicles50.
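To make the idea of a node as a mathematical function concrete, the sketch below shows a single artificial neuron: it forms a weighted sum of the numbers arriving on its incoming edges, adds a bias, and applies a ReLU activation. The weights and bias are illustrative values chosen for this example, not learned parameters from any model in this study.

```python
def relu(z: float) -> float:
    """Rectified linear unit: returns max(0, z)."""
    return max(0.0, z)


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Combine the inputs from incoming edges into one output number."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias  # weighted sum + bias
    return relu(z)                                          # non-linear activation


# Illustrative (not learned) weights: 0.5*0.4 - 1.0*0.3 + 2.0*0.1 + 0.05 = 0.15
print(neuron([0.5, -1.0, 2.0], [0.4, 0.3, 0.1], bias=0.05))
```

During training, an optimizer would adjust `weights` and `bias` so that the network output moves closer to the true label.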

In11, the researchers collected a dataset of affected leaves from cotton crops at various locations in Sindh, Pakistan. They used DL and ML algorithms for crop disease identification, employing the Inception v4 architecture as a CNN to recognize diseased plant leaves, and achieved an accuracy of 98.26%.


Plant diseases cause substantial losses in food production for the agricultural industry and can even lead to the extinction of species. Early detection of plant leaf diseases is therefore essential to minimize economic losses and enhance food production quality51. The article52 used CNN-based pre-trained models, such as DenseNet-121, ResNet-50, VGG-16, and Inception V4, for efficient plant disease identification. The authors focused on fine-tuning the hyperparameters of popular pre-trained models and evaluated performance in terms of precision, recall, accuracy, and F1 score. DenseNet-121 achieved a classification accuracy of 99.81%, outperforming the other state-of-the-art models. Future work is to address issues with real-time data collection and to build a multi-object DL model that can identify diseases from a group of leaves, helping farmers and the agricultural industry recognize leaf diseases in real time53.

The study54 presents a hybrid DL method for classifying tomato leaf diseases. The method combines image features extracted by pre-trained CNNs with environmental metadata (e.g., temperature and humidity) to improve accuracy, and uses Bayesian optimization to tune the model’s hyperparameters. The proposed approach outperformed traditional methods in accuracy, precision, and recall, demonstrating the value of integrating multimodal data. This framework provides a robust solution for precise and efficient disease classification in tomato plants.

The authors55 address the challenge of wheat leaf disease classification with limited datasets by evaluating data augmentation strategies and CNN-based models. Augmentation techniques like flipping, rotation, and cropping were applied to enhance dataset diversity. Pre-trained CNN architectures, including ResNet and VGG, were fine-tuned on the augmented data. Results showed significant performance improvements, with ResNet achieving the highest accuracy. The study highlights the effectiveness of data augmentation and CNNs in improving disease classification with limited data resources.

In56, the focus is on enhancing crop productivity and sustainability by using advanced DL techniques to identify diseases in maize leaves. A large dataset is used to train a Vision Transformer (ViT) model, enabling accurate and efficient detection of various maize leaf diseases. This approach improves agricultural diagnostics and also contributes to sustainable farming by reducing crop loss and enabling early intervention. The study highlights the potential of state-of-the-art AI models for addressing critical challenges in agriculture. Similarly, excellent results are reported in57 for sugarcane classification using a ViT model.

Along the same lines, the study in58 explores advances in DL techniques for the precise classification of grape leaves and the effective diagnosis of grape diseases. By using state-of-the-art models, the research aims to improve disease identification accuracy, contributing to better vineyard management and higher agricultural productivity. This approach highlights the role of modern AI in addressing challenges in viticulture and promoting sustainable farming practices.

The study59 presents a customized CNN approach for accurately identifying weeds in cotton crops. By tailoring the CNN architecture to the specific needs of agricultural environments, the model enhances weed detection and classification, enabling targeted weed management. This advancement supports precision farming, reduces the overuse of herbicides, and promotes sustainable cotton cultivation practices.

Convolutional neural network

In image processing, the CNN is the most commonly employed DL model. In a CNN, input data are represented as multidimensional arrays35, and the model performs well when large amounts of labeled data are available. Instead of connecting each neuron to the entire input image, a CNN connects each neuron to a small local region of the input, referred to as its receptive field, and the same weights are shared across all positions60. The three main types of layers in a CNN are the convolution layer, the pooling layer, and the fully connected layer. This research aims to develop a DL method for recognizing cotton crop diseases such as blight, anthracnose, and leaf curl virus. The following sections briefly discuss the DL models used.
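The receptive field and weight sharing described above can be illustrated with a minimal pure-Python sketch of a 3\(\times\)3 convolution followed by 2\(\times\)2 max pooling. This is an illustration only, not how DL frameworks implement these layers; the toy image and the edge-detecting kernel are hypothetical values chosen so that the output is easy to check.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation (what CNN libraries call convolution).
    Each output value depends only on a kh x kw receptive field of the input,
    and the same kernel weights are reused at every position (weight sharing)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            s = 0.0
            for u in range(kh):
                for v in range(kw):
                    s += image[i + u][j + v] * kernel[u][v]
            row.append(s)
        out.append(row)
    return out


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling with a size x size window (stride = size)."""
    return [
        [max(fmap[i + u][j + v] for u in range(size) for v in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]


# Hypothetical 6x6 grayscale image with a vertical edge, and a vertical-edge kernel.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
kernel = [[-1, 0, 1]] * 3
fmap = conv2d_valid(image, kernel)   # 4x4 feature map, strong response at the edge
pooled = max_pool2d(fmap)            # 2x2 map after pooling
print(fmap)
print(pooled)
```

The feature map responds only where the receptive field straddles the edge, and pooling keeps the strongest response in each 2\(\times\)2 window while halving the spatial size.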

VGG16

VGG is a DL model first presented at ILSVRC 2014. The ImageNet Large Scale Visual Recognition Challenge (ILSVRC) has served as the testbed for several generations of large-scale image classification systems. VGG16 accepts input images with fixed dimensions of 224\(\times\)224. The only preprocessing is subtracting the mean RGB value, computed on the training set, from each pixel; the image is then passed through a stack of convolutional layers that use 3\(\times\)3 filters. The convolutional stack is followed by three fully connected layers: the first two have 4096 channels each, and the third has 1000 channels, one for each of the 1000 ImageNet classes. The final layer is a softmax layer. The configuration of the fully connected layers is the same in all network variants. The model has 138,357,544 parameters and is 528 MB in size. Its time per inference step is 69.50 ms on CPU and 4.16 ms on GPU61.
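As a sanity check on the 138,357,544 parameters quoted for VGG16, the count can be reproduced from the layer configuration. The sketch assumes the standard 16-layer configuration ("D") from the original VGG paper, a 224\(\times\)224 RGB input, and a 1000-class output; it counts weights plus biases for every convolutional and fully connected layer.

```python
# VGG16 configuration D: integers are output channels of 3x3 conv layers,
# "M" marks a 2x2 max-pooling layer (pooling has no parameters).
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_param_count(num_classes: int = 1000, input_size: int = 224) -> int:
    params = 0
    in_ch = 3                      # RGB input
    spatial = input_size
    for layer in VGG16_CONV:
        if layer == "M":
            spatial //= 2          # pooling halves height and width
        else:
            params += 3 * 3 * in_ch * layer + layer   # 3x3 weights + biases
            in_ch = layer
    flat = spatial * spatial * in_ch                  # 7 * 7 * 512 = 25088
    for units in (4096, 4096, num_classes):           # three fully connected layers
        params += flat * units + units
        flat = units
    return params


print(vgg16_param_count())  # 138357544
```

Almost 90% of these parameters sit in the first fully connected layer (25,088 \(\times\) 4,096 weights), which is why replacing the original classifier head is common when fine-tuning VGG16.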

DenseNet

DenseNet builds on the idea of improving the flow of information between layers. It introduces a different connectivity pattern: direct connections from every layer to all subsequent layers, so that each layer receives the feature maps of all preceding layers as input.

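Formally, the \(l\)th layer receives the feature maps produced by all preceding layers, \(x_l = H_l([x_0, x_1, \ldots, x_{l-1}])\), where \([x_0, x_1, \ldots, x_{l-1}]\) denotes the concatenation of the feature maps of layers \(0, \ldots, l-1\) and \(H_l\) is a composite function of batch normalization, ReLU, and convolution. In the original paper, the networks used for the CIFAR and SVHN experiments consist of three dense blocks with an equal number of layers, and a convolution with 16 output channels is applied to the input before the first dense block; the ImageNet variants used in this study (DenseNet121, DenseNet169, and DenseNet201) instead use four dense blocks. For convolutional layers with a 3\(\times\)3 kernel, each side of the input is zero-padded by one pixel to keep the feature-map size fixed. Between two dense blocks, a transition layer consisting of a 1\(\times\)1 convolution followed by 2\(\times\)2 average pooling is used. After the last dense block, global average pooling is applied, followed by a softmax classifier. There are numerous versions with various depths62.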
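Because each layer concatenates its output onto everything before it, the number of channels grows linearly inside a dense block. The sketch below tracks this bookkeeping under assumed settings taken from the original DenseNet paper for DenseNet-121 (64 initial channels, growth rate 32, blocks of 6, 12, 24, and 16 layers, and transition layers that halve the channel count); it only counts channels and does not build a network.

```python
def densenet_channels(init_channels=64, growth_rate=32,
                      block_layers=(6, 12, 24, 16), compression=0.5):
    """Channel count at the end of each dense block.
    Inside a block, layer l sees the concatenation [x0, x1, ..., x_{l-1}],
    so every layer adds growth_rate channels to the running total."""
    channels = init_channels
    history = []
    for i, n_layers in enumerate(block_layers):
        channels += n_layers * growth_rate     # concatenation of all layer outputs
        history.append(channels)
        if i < len(block_layers) - 1:          # transition: 1x1 conv + 2x2 avg pool
            channels = int(channels * compression)
    return history


print(densenet_channels())  # [256, 512, 1024, 1024]
```

With the assumed block layouts (6, 12, 32, 32) and (6, 12, 48, 32), the same function gives 1664 and 1920 final channels, the feature sizes of DenseNet-169 and DenseNet-201.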

EfficientNet

This model is inspired by MnasNet, a DL model designed for mobile devices. The EfficientNet-B0 baseline was obtained through a multi-objective neural architecture search that uses the same search space as MnasNet63, with the following optimization goal:

\[ \mathrm{ACC}(m) \times \left[\frac{\mathrm{FLOPS}(m)}{T}\right]^{w} \]

where \(T\) is the target FLOPS, \(w = -0.07\) is a hyperparameter that controls the trade-off between accuracy and FLOPS, and \(\mathrm{ACC}(m)\) and \(\mathrm{FLOPS}(m)\) denote the accuracy and FLOPS of model \(m\).
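The search objective \(\mathrm{ACC}(m) \times [\mathrm{FLOPS}(m)/T]^{w}\) with \(w = -0.07\) can be evaluated directly to see how it trades accuracy against cost. In this sketch the accuracy value and the 400M FLOPS target are hypothetical inputs chosen for illustration.

```python
def nas_reward(accuracy: float, flops: float, target_flops: float,
               w: float = -0.07) -> float:
    """Multi-objective reward ACC(m) * (FLOPS(m) / T) ** w.
    With w < 0, candidates above the FLOPS target are penalised and
    candidates below it receive a mild bonus."""
    return accuracy * (flops / target_flops) ** w


T = 400e6  # hypothetical FLOPS target
print(nas_reward(0.77, 400e6, T))   # on target: the reward equals the accuracy
print(nas_reward(0.77, 800e6, T))   # twice the target: about 4.7% lower
```

Because the penalty is a soft power law rather than a hard constraint, a model slightly above the target can still win if it is noticeably more accurate.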

EfficientNet optimizes FLOPS rather than latency because it does not target any specific hardware device. EfficientNet-B0 is the base model. It consists of a convolution layer with 3\(\times\)3 filters, followed by 16 MBConv (mobile inverted bottleneck convolution) blocks, a 1\(\times\)1 convolution layer, and a pooling layer. All other variants are obtained by scaling the base model with different compound coefficients63.
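The compound coefficient \(\phi\) mentioned above scales depth, width, and input resolution together as \(\alpha^\phi\), \(\beta^\phi\), and \(\gamma^\phi\). The sketch assumes the constants reported in the EfficientNet paper (\(\alpha = 1.2\), \(\beta = 1.1\), \(\gamma = 1.15\), chosen so that \(\alpha \beta^2 \gamma^2 \approx 2\)); the released B1 and B2 models round these multipliers, so the printed values are approximate rather than the exact published configurations.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15   # depth, width, resolution bases (assumed from the paper)


def compound_scale(phi: float):
    """Return (depth, width, resolution) multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


def flops_multiplier(phi: float) -> float:
    # FLOPS grow linearly with depth and quadratically with width and resolution.
    d, w, r = compound_scale(phi)
    return d * w ** 2 * r ** 2


for phi in range(3):   # roughly B0, B1, B2
    d, w, r = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution x{r:.2f}, FLOPS x{flops_multiplier(phi):.2f}")
```

Each unit increase of \(\phi\) therefore roughly doubles the FLOPS, which is what makes the family easy to size against a compute budget.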

InceptionV3

InceptionV3 was first presented in 2015 as an improvement on the InceptionV2 model. It has 42 layers in total and achieves a lower error rate than its predecessors, InceptionV1 and InceptionV2. Its improvements come mainly from factorizing larger convolutions into smaller ones and from efficient grid-size reduction64.
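The benefit of factorizing convolutions can be shown with simple weight counting. The sketch compares a 5\(\times\)5 convolution with two stacked 3\(\times\)3 convolutions (same receptive field), and a 3\(\times\)3 convolution with a 1\(\times\)3 followed by a 3\(\times\)1 convolution. The channel count of 64 is an arbitrary illustrative value, and biases are ignored; the savings ratios do not depend on it when input and output channels are equal.

```python
def conv_weights(kh: int, kw: int, c_in: int, c_out: int) -> int:
    """Number of weights in a kh x kw convolution (biases ignored)."""
    return kh * kw * c_in * c_out


C = 64  # illustrative channel count, equal for input and output
five_by_five = conv_weights(5, 5, C, C)
two_three_by_three = 2 * conv_weights(3, 3, C, C)            # same 5x5 receptive field
asym = conv_weights(1, 3, C, C) + conv_weights(3, 1, C, C)   # replaces one 3x3

print(1 - two_three_by_three / five_by_five)   # ~0.28: 28% fewer weights
print(1 - asym / conv_weights(3, 3, C, C))     # ~0.333: 33% fewer weights
```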

MobileNet

MobileNet is built on depth-wise separable convolutions, a form of factorized convolution that splits a standard convolution into a depth-wise convolution and a pointwise (1\(\times\)1) convolution. The network begins with 6 pairs of convolution and depth-wise convolution layers with varying filter shapes. These are followed by a convolution layer with a 1\(\times\)1\(\times\)256\(\times\)512 filter shape and then 5 pairs of a 3\(\times\)3\(\times\)512 depth-wise convolution layer and a 1\(\times\)1\(\times\)512\(\times\)512 convolution layer. Finally, there are two more pairs of depth-wise and standard convolution layers, an average pooling layer, a flatten layer, and a softmax layer65. MobileNetV2 supports input sizes larger than 32\(\times\)32 and employs inverted residual blocks with bottlenecks66.
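The saving from depth-wise separable convolution can be quantified by counting multiply-adds. For a \(D_K \times D_K\) kernel, \(M\) input channels, \(N\) output channels, and a \(D_F \times D_F\) feature map, the separable version costs a fraction \(1/N + 1/D_K^2\) of a standard convolution. The sketch uses one of the 3\(\times\)3\(\times\)512 depth-wise plus 1\(\times\)1\(\times\)512\(\times\)512 pairs described above and assumes a 14\(\times\)14 feature map.

```python
def standard_conv_cost(dk, m, n, df):
    """Multiply-adds of a dk x dk standard convolution, M -> N channels,
    producing a df x df output feature map."""
    return dk * dk * m * n * df * df


def separable_conv_cost(dk, m, n, df):
    depthwise = dk * dk * m * df * df   # one dk x dk filter per input channel
    pointwise = m * n * df * df         # 1x1 convolution that mixes channels
    return depthwise + pointwise


# One 3x3x512 depth-wise + 1x1x512x512 pair on an assumed 14x14 feature map.
std = standard_conv_cost(3, 512, 512, 14)
sep = separable_conv_cost(3, 512, 512, 14)
print(std / sep)   # roughly 8.8x fewer multiply-adds
print(sep / std)   # equals 1/512 + 1/9
```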

NasNetMobile

This model was introduced by Google. It is available pre-trained on the popular ImageNet dataset, which contains more than a million images, and can classify images into 1000 categories. It takes input images of size 224\(\times\)224. The model has 5,326,716 parameters and is 23 MB in size. Its time per inference step is 27.04 ms on CPU and 6.70 ms on GPU.

ResNet

ResNet is inspired by the VGG DL model. The residual network is shown in Fig. 3. In traditional convolutional feed-forward networks, the output of the \(l\)th layer is fed as input to the \((l+1)\)th layer. ResNet adds a skip connection that bypasses the non-linear transformations with an identity function, so that \(x_l = H_l(x_{l-1}) + x_{l-1}\).
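The identity skip connection can be sketched on plain vectors: the block outputs \(F(x) + x\), where \(F\) stands for the stacked non-linear layers. The residual functions below are hypothetical stand-ins; in ResNet, \(F\) is a stack of convolutions and a ReLU is applied after the addition, which this sketch leaves out.

```python
def relu_vec(v):
    """Element-wise ReLU, used here as a hypothetical residual function F."""
    return [max(0.0, x) for x in v]


def residual_block(x, residual_fn):
    """y = F(x) + x: the skip connection adds the unchanged input back."""
    fx = residual_fn(x)
    if len(fx) != len(x):
        raise ValueError("F(x) must have the same shape as x for an identity shortcut")
    return [a + b for a, b in zip(fx, x)]


x = [1.0, -2.0, 3.0]
# A zero residual turns the block into the identity mapping; the ResNet paper
# argues this makes extra layers easy to "switch off" during training.
print(residual_block(x, lambda v: [0.0] * len(v)))   # [1.0, -2.0, 3.0]
print(residual_block(x, relu_vec))                    # [2.0, -2.0, 6.0]
```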

The convolution layers mostly use 3\(\times\)3 filters and follow two design rules. First, layers with the same output feature-map size have the same number of filters. Second, if the feature-map size is halved, the number of filters is doubled so as to preserve the time complexity per layer. Downsampling is performed by convolutional layers with a stride of 2. The network ends with a global average pooling layer and a 1000-way fully connected layer with softmax for ImageNet classification. Each model is named after its total number of weighted layers: ResNet-50 has 50 layers, ResNet-101 has 101 layers, and ResNet-152 has 152 layers67.
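The layer counts in the model names can be reproduced from the number of bottleneck blocks per stage. The sketch assumes the stage layouts from the original ResNet paper; each bottleneck block has three convolutions (1\(\times\)1, 3\(\times\)3, 1\(\times\)1), and the initial 7\(\times\)7 convolution and final fully connected layer add two more. Following the usual convention, projection shortcuts are not counted.

```python
# Bottleneck blocks per stage for the ImageNet ResNets (assumed from the original paper).
STAGES = {
    "ResNet50": (3, 4, 6, 3),
    "ResNet101": (3, 4, 23, 3),
    "ResNet152": (3, 8, 36, 3),
}


def weighted_layers(blocks_per_stage) -> int:
    # 3 conv layers per bottleneck block + initial 7x7 conv + final FC layer.
    return 3 * sum(blocks_per_stage) + 2


for name, stages in STAGES.items():
    print(name, weighted_layers(stages))
```

The deeper variants differ almost entirely in the third stage, which operates on the 14\(\times\)14 feature maps.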

Xception

The Xception model draws on VGG-16, Inception, ResNet, and several other deep learning models. Its feature extraction base consists of 36 convolutional layers, structured into 14 modules, all of which have linear residual connections around them except for the first and last modules7. The model has 22,910,480 parameters and is 88 MB in size. Its time per inference step is 109.42 ms on CPU and 8.06 ms on GPU. Table 1 provides the hyperparameters of all models used in the experiments.

Methodology

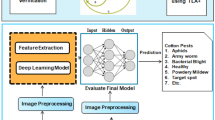

Cotton crops suffer from a plethora of diseases. In this work, we focus mainly on detecting mealybug, anthracnose, thrips, and blight in cotton crops using DL-based models. Figure 2 depicts the proposed disease detection methodology, which consists of four main phases: data collection, data preprocessing, model training, and validation and detection.

The first phase of the proposed methodology is the collection of the input data provided to the system for training the detection model. In this case, the input dataset consists of images of cotton crops, including healthy and disease-affected plants. After data collection, augmentation is used to increase the size and variety of the input dataset by applying different transformations to the same data; for images, augmentation may involve random cropping, rotation, or flipping. After augmentation, the images are labeled according to the defined disease classes. Model training is the process of using the preprocessed input data and the associated labels to train a DL algorithm, here a CNN. It involves feeding the data through the model and adjusting its parameters to reduce the difference between the predicted outputs and the true labels. The output is the final result produced by the proposed model, which can take many forms depending on the specific task. As shown in Fig. 2, the labeled images are used as input to the detection model, which is trained to accurately detect diseases in cotton crops.
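The cropping, rotation, and flipping mentioned above can be sketched on an image stored as a list of pixel rows. This is a generic illustration under the assumption that each augmented copy inherits the label of its source image; the exact transformations and parameters used in this study are those listed in Table 2, not the ones shown here.

```python
import random


def hflip(img):
    """Mirror each row (horizontal flip)."""
    return [row[::-1] for row in img]


def rot90(img):
    """Rotate 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def random_crop(img, size, rng):
    """Cut a size x size patch at a random position."""
    top = rng.randint(0, len(img) - size)
    left = rng.randint(0, len(img[0]) - size)
    return [row[left:left + size] for row in img[top:top + size]]


def augment(img, label, rng, crop_size):
    """Return extra (image, label) pairs; each copy keeps the original label."""
    return [(hflip(img), label),
            (rot90(img), label),
            (random_crop(img, crop_size, rng), label)]


rng = random.Random(0)                      # fixed seed for reproducibility
img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]     # hypothetical 3x3 single-channel image
for aug_img, lab in augment(img, "thrips", rng, crop_size=2):
    print(lab, aug_img)
```

In a real pipeline, cropped images would be resized back to the model input size (224\(\times\)224 here) before training.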

Dataset collection

In this work, real data on cotton crop diseases were collected from a cotton field located in South Punjab, Pakistan. The images were collected in August, when the crop was 7 weeks old. During this month, the sun rises around 5:35 am and sets around 6:40 pm in the selected region of South Punjab. The first collection interval was in the early morning, starting just before sunrise, from 5:30 am to 7:00 am. After a break of 2 hours, data were collected in the late morning from 9:00 am to 11:00 am. The third interval was in the early afternoon, from 12:30 pm to 2:00 pm, and the fourth was in the evening, from 5:00 pm to 7:00 pm. In August, the weather in South Punjab is very hot and dry, with daytime temperatures between \(88^\circ\)F and \(100^\circ\)F that sometimes exceed the upper limit, and humidity remains between 40% and 50%. The dataset consists of 5,600 color images of cotton plants captured with a Vivo S1 Pro smartphone, which has a 48 MP rear camera; the images have a resolution of 4000\(\times\)3000 pixels. To avoid uniformity in the data, images were captured under different illumination conditions at different times of the day, i.e., morning, afternoon, and evening.

A dataset captured under uniform conditions is prone to overfitting: a model trained on it may report high accuracy yet fail to detect diseases in real field environments. Because mealybugs and thrips do not attack only the leaves, images of plant stems were also collected. For instance, the mealybug first attacks the plant stem and then gradually moves towards the leaves. An overview of the disease-affected plants is given in Fig. 3.

The dataset contained 1400 images for each disease, i.e., mealybug, anthracnose, thrips, and blight. It was cleaned by removing noisy and blurred images before further processing, after which one thousand images were selected for each category. Augmentation was then performed to increase the dataset size, yielding a total of 8000 images for all diseases, i.e., 2,000 images per class. An equal number of images was used for every class to prevent the model from becoming biased towards a specific class by learning more of its features than those of the other classes. For training, images were resized to 224\(\times\)224 pixels. Table 2 shows the image augmentation details.

Data preprocessing

Data preprocessing is also crucial for model training, as it enhances data quality so that meaningful features can be extracted. The flow from input data to output is shown in Fig. 4. Data preprocessing refers to the techniques used to convert raw data into a form suitable for training deep learning models. The VGG16 preprocessor converts images from RGB to BGR and then zero-centers each color channel with respect to the ImageNet dataset by subtracting the per-channel ImageNet mean, without scaling.
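The VGG16-style preprocessing described above can be re-implemented per pixel in a few lines. The mean values below are the per-channel ImageNet means (in BGR order) used by Keras' `caffe`-mode preprocessing; that the study used exactly these constants is an assumption, and this pure-Python version is only for illustration (in practice the library function operates on whole arrays).

```python
# Per-channel ImageNet means in BGR order, as used by Keras' 'caffe'-mode preprocessing.
IMAGENET_MEAN_BGR = (103.939, 116.779, 123.68)


def vgg16_preprocess_pixel(rgb):
    """RGB -> BGR, then subtract the ImageNet channel mean; no scaling."""
    r, g, b = rgb
    bgr = (b, g, r)
    return tuple(c - m for c, m in zip(bgr, IMAGENET_MEAN_BGR))


def vgg16_preprocess(image):
    """Apply the per-pixel transform to an image given as rows of (R, G, B) tuples."""
    return [[vgg16_preprocess_pixel(px) for px in row] for row in image]


print(vgg16_preprocess_pixel((255, 0, 0)))   # a pure-red pixel after preprocessing
```

Note that values stay on the original 0-255 scale (shifted), so the result is zero-centred but not normalized to unit variance.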

Training of models

For experimentation, 18 base CNN models were trained on the collected dataset to determine which achieved the highest recognition and classification accuracy based on the performance metrics and hyperparameters. The models differ in their configurations of convolution, flatten, dense, and pooling layers. For instance, VGG16 has 23 layers: two sets of two convolution layers followed by a pooling layer, then three sets of three convolution layers followed by a pooling layer, and finally a global pooling layer. In each model, an input layer was added to receive the images. A flatten layer and a dense layer with 64 nodes and rectified linear unit (ReLU) activation were then added, followed by another flatten layer and an output dense layer with 2 nodes and softmax activation, as the number of classes to be detected is two. The same input, flatten, and dense layer configuration was used in all models to allow a fair comparison of their results. Each model was trained for 20 epochs, where one epoch is one complete forward and backward pass of the entire training dataset through the learning algorithm. The number of epochs was set to 20 because most of the models reached their highest accuracy within 20 epochs and did not improve afterwards.
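The softmax activation on the output dense layer turns the raw scores of its nodes into class probabilities, and the predicted class is the one with the highest probability. The sketch below shows a numerically stable softmax; the two raw scores are hypothetical values, not outputs of any trained model.

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the max score before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores from a 2-node output layer.
probs = softmax([2.0, 0.5])
predicted_class = max(range(len(probs)), key=probs.__getitem__)
print(probs, predicted_class)
```

Subtracting the maximum score does not change the result but prevents overflow in `math.exp` when scores are large.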

Experimental results

This section first briefly discusses the performance metrics and the experimental setup and then presents the results of all models. The trained models are evaluated with several well-known performance metrics, which are described below.

Performance metrics

Accuracy is calculated as

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \]

Four quantities are needed to obtain accuracy: true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). TP is the number of actual positives that the model predicted correctly, TN is the number of actual negatives that the model predicted correctly, FP is the number of actual negatives that the model incorrectly predicted as positive, and FN is the number of actual positives that the model incorrectly predicted as negative. Recall measures how well the model recognizes true positives; here, it indicates how many of the actual disease spots in the plants were correctly recognized. It is defined as follows4.

\[ \text{Recall} = \frac{TP}{TP + FN} \]

Recall gauges how well the model can locate the relevant instances and is also known as the true positive rate (TPR). Precision is the ratio of TP to all predicted positives. In this case, it indicates how many of the spots that the model recognized as diseased in the testing dataset were actually diseased. It is calculated as follows28.

\[ \text{Precision} = \frac{TP}{TP + FP} \]
We used accuracy as the main performance metric. The results were finalized after multiple successful runs of each model on the same data27.
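For a multi-class problem such as this one, the metrics can be computed per class by treating that class as positive and all others as negative (one-vs-rest). The sketch uses the standard definitions: TP and TN are correct predictions of positives and negatives, FP are negatives predicted as positive, and FN are positives predicted as negative. The label lists are hypothetical and are not results from this study.

```python
def binary_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, recall (TPR) and precision from confusion-matrix counts."""
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
    }


def one_vs_rest_counts(y_true, y_pred, positive):
    """Count TP/TN/FP/FN treating one class as positive, all others negative."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, tn, fp, fn


# Hypothetical labels for illustration only.
y_true = ["blight", "blight", "thrips", "mealybug", "anthracnose", "blight"]
y_pred = ["blight", "thrips", "thrips", "mealybug", "blight", "blight"]
print(binary_metrics(*one_vs_rest_counts(y_true, y_pred, "blight")))
```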

Experimental setup

All experiments were executed on a machine with a dedicated graphics card for model training and testing: a 10th-generation Core i5 processor clocked at 2.90 GHz and an NVIDIA GTX 970 GPU with 4 GB of memory. To evaluate how well the trained CNN models perform on unseen data, the dataset was split in a ratio of 70%, 20%, and 10% for training, testing, and prediction, respectively39. The results of all deep learning models, obtained on the same dataset with this experimental setup, are detailed below.
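A 70/20/10 split that keeps the classes balanced can be obtained by splitting each class separately (stratification). The sketch assumes 2,000 images per class across the four disease classes; the file names are hypothetical placeholders. Integer percentages are used so that the split sizes are exact.

```python
import random


def stratified_split(items_by_class, percents=(70, 20, 10), seed=42):
    """Split each class separately so the train/test/prediction sets stay balanced."""
    rng = random.Random(seed)
    train, test, pred = [], [], []
    for label, items in items_by_class.items():
        items = list(items)
        rng.shuffle(items)
        n = len(items)
        n_train = n * percents[0] // 100
        n_test = n * percents[1] // 100
        train.extend((x, label) for x in items[:n_train])
        test.extend((x, label) for x in items[n_train:n_train + n_test])
        pred.extend((x, label) for x in items[n_train + n_test:])   # remainder
    return train, test, pred


# Hypothetical file names: 2,000 images for each of the four classes.
classes = ["mealybug", "anthracnose", "thrips", "blight"]
data = {c: [f"{c}_{i}.jpg" for i in range(2000)] for c in classes}
train, test, pred = stratified_split(data)
print(len(train), len(test), len(pred))  # 5600 1600 800
```

Splitting per class guarantees that each subset contains the same share of every disease, which matches the motivation for balanced classes given earlier.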

Results using VGG16

The experiments started with the VGG16 model, which achieved an accuracy higher than 98% and a training loss of 0.0042. The training and validation accuracy and loss of the VGG16 model are shown in Fig. 5, where the x axis represents training epochs and the y axis shows the loss or accuracy values50. The accuracy of VGG16 improved over the epochs, and the model reached its maximum performance after the 17th epoch, remaining almost constant for the remaining epochs.

Results using DenseNet model

After VGG16, DenseNet was applied to detect the cotton diseases, i.e., mealybug, anthracnose, thrips, and blight. Three DenseNet variants were used: DenseNet121, DenseNet169, and DenseNet201. All three performed similarly, with only minor differences in accuracy. Training and validation accuracy and loss graphs for the DenseNet models are shown in Fig. 6, with epochs on the x axis and metrics such as accuracy and loss on the y axis. The DenseNet models show sudden fluctuations around the 10th epoch. DenseNet121, DenseNet169, and DenseNet201 each reported an accuracy above 98%, with losses of 0.217, 0.007, and 0.015, respectively.

Results using EfficientNet model

After the three DenseNet variants, the EfficientNet model was deployed for cotton disease detection. EfficientNet comes in several variants. We used the first three: EfficientNetB0, EfficientNetB1, and EfficientNetB2. For these models, the learning rate was set to 1e-2, the dropout rate to 0.2, and the batch size to 64. Training and validation graphs for the EfficientNet models are given in Fig. 7. As in the previous figures, epochs are on the x axis and the other metrics are on the y axis.

The accuracy curves fluctuate until the 10th epoch. After that, all EfficientNet models perform almost identically in terms of accuracy, which reaches up to 99%.

Results using InceptionV3 model

Google's InceptionV3 model was used to detect mealybug, anthracnose, thrips, and blight. It reported a loss of 0.0022 with an accuracy above 95%. For this model, the learning rate was set to 0.0001, the dropout rate to 0.6, and the batch size to 64. Training and validation accuracy and loss graphs for InceptionV3 are shown in Fig. 8.

InceptionV3 fluctuates somewhat in both the training and validation phases. It also approaches its maximum accuracy slowly, taking more time than the models tested before it.

Results using MobileNet

The next model tested was MobileNet, a DL model designed for mobile devices. Such models are optimized to run on low-specification hardware while retaining as much detection accuracy as possible. This makes them prime candidates for mobile-based detection applications. We used the MobileNetV1 and MobileNetV2 models. For these models, the learning rate was 0.01, the dropout 0.9, and the batch size 32. Validation accuracy and loss graphs are shown in Fig. 9. MobileNetV1 and MobileNetV2 achieved similar training and validation accuracies of about 97%.
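Much of MobileNet's efficiency comes from replacing standard convolutions with depthwise separable ones. In this design, a per-channel k × k depthwise filter is followed by a 1 × 1 pointwise convolution (Howard et al.). Xception, discussed next, is also built on depthwise separable convolutions (Chollet). The arithmetic below illustrates the saving for one layer. The layer size is illustrative and not taken from any specific MobileNet layer.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k convolution mapping c_in to c_out channels (bias ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k, c_in, c_out):
    """Depthwise k x k filter per input channel, then a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


k, c_in, c_out = 3, 128, 256  # illustrative layer size
std = standard_conv_params(k, c_in, c_out)
sep = separable_conv_params(k, c_in, c_out)
print(std, sep, round(sep / std, 4))  # 294912 33920 0.115
# The ratio equals 1/c_out + 1/k**2. For a stride-1, 'same'-padded layer the
# multiply-adds scale by the same ratio, since both costs are multiplied by H*W.
```

For 3 × 3 kernels, the ratio 1/c_out + 1/9 means roughly an 8 to 9 times reduction once c_out is large.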

Results using Xception model

Like the other models, Xception was trained for cotton crop disease detection. It performed well, achieving an accuracy above 98%. Training and validation accuracy and loss graphs are given in Fig. 10. Xception's accuracy rose sharply in the first few epochs, then fluctuated before increasing slowly over the remaining epochs.

Results using NasNet mobile

Continuing the experiments, the next model tested was the NasNet mobile architecture. NasNet performed worse than the other DL models, reaching a maximum training accuracy of 95% and a validation accuracy of up to 95%. It also took more time to train without matching the other models, so it is not the preferred option when other DL models are available. For this model, the learning rate was 0.01, the dropout 0.6, and the batch size 64. Its training and validation accuracy and loss graphs are shown in Fig. 11.

Results using ResNet model

Finally, several variants of the ResNet model were trained for cotton disease detection. Their graphs are shown in Figs. 12 to 17.

Training and validation accuracy and loss graphs for ResNet52 are shown in Fig. 12. Those for ResNet52V2 and ResNet101 are shown in Figs. 13 and 14, respectively. Similarly, the graphs for ResNet101V2, ResNet152, and ResNet152V2 are given in Figs. 15, 16, and 17, respectively. Of all the tested ResNet variants, ResNet152 outperformed the others in terms of inference time and accuracy for cotton disease detection. For the ResNet models, the learning rate was 0.01 and the dropout 0.2, while the batch size was set to either 32 or 64 across the six variants. A summary of results for all ResNet variants is provided in Table 3.
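The training hyperparameters reported in the subsections above are scattered across the text. Collecting them in one place, as in the following sketch, makes them easier to compare and reuse. The `TrainConfig` structure is purely illustrative. The text does not state whether 32 or 64 was used for each ResNet variant, so both values are kept.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class TrainConfig:
    learning_rate: float
    dropout: float
    batch_sizes: Tuple[int, ...]  # several values where the text gives a choice


# Hyperparameters as stated in the results subsections. VGG16, DenseNet and
# Xception are omitted because their settings are not reported in the text.
REPORTED_CONFIGS: Dict[str, TrainConfig] = {
    "EfficientNet": TrainConfig(learning_rate=1e-2, dropout=0.2, batch_sizes=(64,)),
    "InceptionV3": TrainConfig(learning_rate=1e-4, dropout=0.6, batch_sizes=(64,)),
    "MobileNet": TrainConfig(learning_rate=1e-2, dropout=0.9, batch_sizes=(32,)),
    "NasNetMobile": TrainConfig(learning_rate=1e-2, dropout=0.6, batch_sizes=(64,)),
    "ResNet": TrainConfig(learning_rate=1e-2, dropout=0.2, batch_sizes=(32, 64)),
}

for name, cfg in REPORTED_CONFIGS.items():
    print(f"{name:<13} lr={cfg.learning_rate:g} dropout={cfg.dropout} batch={cfg.batch_sizes}")
```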

Top performing models

After analyzing the results and performance metrics of all models, we discuss the top four models here in terms of their overall and class-wise performance.

Table 4 compares the top four models in terms of accuracy, precision, F1 score, and recall. The models generally perform well, scoring between 0.97 and 0.99 on all metrics, with some class-wise variation. For example, the DenseNet model obtains an accuracy of 0.95 for the healthy class. Similarly, the EfficientNet model has a precision of 0.95 for the Thrips class and a recall of 0.95 for the Blight class. The DenseNet model shows an F1 score of 0.96 for the Blight class. For the remaining cases, the scores for all performance metrics lie between 0.97 and 0.99, indicating the strong performance of the transfer learning models.

Discussion and analysis

The comparative analysis of state-of-the-art models for cotton disease detection shows that several architectures, such as EfficientNet, ResNet101, and DenseNet, exhibit decent performance but remain below 85% accuracy, as shown in Table 5. This limitation may be attributed to suboptimal feature extraction or to shallow learning of the complex patterns in disease images. Lightweight models such as MobileNet and NasNet Mobile trade accuracy for faster inference, making them less suitable for high-precision applications. In contrast, ResNet152 achieves an accuracy of 99%, which we attribute to its deeper architecture and residual connections. These features enable robust feature representations and mitigate the vanishing gradient problem, which improves generalization. Although ResNet152 is computationally heavier than MobileNet, its performance justifies its use in real-world scenarios that require high accuracy and reliability in disease classification. This study highlights ResNet152's potential as a strong solution for cotton disease detection and as a contribution to precision agriculture practices. Future work could explore hybrid architectures and optimized lightweight models to balance accuracy and efficiency.
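The benefit attributed to residual connections comes from the identity shortcut. A block computes y = F(x) + x, so before the final activation dy/dx = I + ∂F/∂x, and the gradient keeps a direct path even when ∂F/∂x is small. The minimal NumPy sketch below makes this concrete. It assumes a fully connected residual branch in place of the convolution, batch-normalization, and 1×1 / 3×3 / 1×1 bottleneck layers that ResNet152 actually uses.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2):
    """Dense analogue of a ResNet basic block: y = relu(F(x) + x).

    F(x) = w2 @ relu(w1 @ x) is the learned residual branch; adding x back is the
    identity shortcut, so the block only has to learn a correction to its input.
    """
    return relu(w2 @ relu(w1 @ x) + x)


rng = np.random.default_rng(0)
x = np.array([0.5, 1.0, 2.0])

# With zero weights the residual branch vanishes and the block passes x through.
zeros = np.zeros((3, 3))
print(residual_block(x, zeros, zeros))  # identical to x

# With small random weights the output stays close to the input.
w1, w2 = 0.01 * rng.standard_normal((3, 3)), 0.01 * rng.standard_normal((3, 3))
print(residual_block(x, w1, w2))
```

Because a block initialized near zero already behaves like the identity, stacking many such blocks does not by itself degrade the signal. This is the property that lets very deep networks such as ResNet152 train.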

Many DL CNN models, such as VGG16, AlexNet, DenseNet, EfficientNet, Inception, MobileNet, NasNetMobile, ResNet, and Xception, have been proposed for detecting and classifying weeds and diseases in cotton crops. More broadly, existing methods include machine learning models, deep learning models, and image processing techniques. Supervised, unsupervised, and reinforcement learning-based models have all been designed and deployed for agricultural tasks. Owing to the scarcity of data, very little work has been done on cotton crops in regions such as Rahim Yar Khan, Punjab, Pakistan. This research contributes in that direction by collecting a large dataset from actual fields in this region, covering the crop from germination to harvest. A wide variety of deep learning models was then implemented on this dataset to analyze their performance for cotton crop disease detection.

Pre-trained models differ in their convolutional layers, so their results also vary. Disease detection in crops is difficult because different diseases may look similar on crop leaves. Researchers have worked on various datasets available on GitHub and Kaggle. These datasets are generally small, however, so deep learning models trained on them may not perform sufficiently well. The large cotton dataset collected in this research is expected to help address this issue.

Timely detection of cotton diseases is an important requirement of modern agriculture, and the proposed approach has given excellent results in this regard. A dataset obtained from real fields, containing images of classes including Blight, Thrips, Mealybug, and Anthracnose, was used to train different CNN models. The models were trained on this larger dataset with layer transformations. Data augmentation, using horizontal shifts, vertical shifts, rotation, and zooming, was applied to further increase the data size. This research trained several models, such as MobileNet, NasNetMobile, ResNet, and Xception, and evaluated them using precision, F1 score, recall, and accuracy. Results indicate that VGG16 has an accuracy score of 0.943, DenseNet achieved 0.98, and the EfficientNet models reached up to 0.99.
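The augmentation step described above can be sketched with NumPy alone. This is an illustration under stated assumptions, not the authors' pipeline. The shift fraction (10%) and zoom range (up to 1.2×) are hypothetical. Rotation is restricted to multiples of 90° so that no interpolation routine is needed. Arbitrary-angle rotation, as commonly used in practice, would require interpolation from an image-processing library.

```python
import numpy as np


def shift(img, dy, dx):
    """Translate an H x W (x C) image by (dy, dx) pixels; exposed areas become 0."""
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    if abs(dy) >= h or abs(dx) >= w:
        return out  # shifted completely out of frame
    src_y = slice(max(0, -dy), h - max(0, dy))
    dst_y = slice(max(0, dy), h - max(0, -dy))
    src_x = slice(max(0, -dx), w - max(0, dx))
    dst_x = slice(max(0, dx), w - max(0, -dx))
    out[dst_y, dst_x] = img[src_y, src_x]
    return out


def zoom_in(img, factor):
    """Zoom in by `factor` >= 1: crop the centre, resize back by nearest-neighbour sampling."""
    h, w = img.shape[:2]
    ch = max(1, int(round(h / factor)))
    cw = max(1, int(round(w / factor)))
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = img[top:top + ch, left:left + cw]
    rows = np.arange(h) * ch // h  # map each output row to a crop row
    cols = np.arange(w) * cw // w  # map each output column to a crop column
    return crop[rows][:, cols]


def augment(img, rng, max_shift=0.1, max_zoom=1.2):
    """Random vertical/horizontal shift, zoom and 90-degree rotation (square images keep their shape)."""
    h, w = img.shape[:2]
    sy, sx = int(max_shift * h), int(max_shift * w)
    out = shift(img, int(rng.integers(-sy, sy + 1)), int(rng.integers(-sx, sx + 1)))
    out = zoom_in(out, rng.uniform(1.0, max_zoom))
    return np.rot90(out, k=int(rng.integers(0, 4)))


rng = np.random.default_rng(0)
leaf = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)  # stand-in for a leaf image
batch = [augment(leaf, rng) for _ in range(4)]
print([b.shape for b in batch])
```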

Inception achieved an accuracy score above 0.95, and MobileNetV1 and MobileNetV2 achieved similar training and validation accuracies of 0.97. The Xception model performed well, with an accuracy score of 0.98, while the NasNet Mobile model reached a maximum accuracy score of 0.95. Several ResNet variants were also trained for cotton disease detection, and the ResNet152 model obtained the best results for training, validation, and testing.

The quality and diversity of the dataset play a significant role: a well-annotated and balanced dataset allows CNNs to generalize better and minimizes overfitting. The choice of hyperparameters, including kernel size, number of layers, activation functions, and optimization techniques, also influences model performance. Furthermore, data augmentation techniques such as rotation, scaling, and flipping enhance model robustness by introducing variability. Lastly, the available computational resources, including high-performance GPUs and efficient training strategies, help models converge and improve overall classification performance. Together, these factors help explain why certain models outperform others on the given dataset.

Certain models, such as NasNetMobile, underperformed due to challenges inherent to their architecture and computational requirements. Although NasNetMobile achieved a maximum accuracy of 95%, it converged more slowly and demanded more computation than the other models. This underperformance may be attributed to its architectural complexity, which requires substantial computational resources and careful hyperparameter tuning. A computational analysis of the trade-offs between lightweight models such as MobileNet and complex models such as ResNet152 reveals critical differences. For instance, NasNetMobile, with a moderate number of trainable parameters, balances accuracy and resource consumption but still requires longer training times than MobileNet. MobileNet, being lightweight, offers faster training and inference, making it suitable for deployment in resource-constrained environments, albeit with slightly lower accuracy.
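Comparisons of inference speed between lightweight and complex models are most meaningful when latency is measured the same way for every model. A minimal, framework-agnostic timing sketch follows. Here `predict` stands for any hypothetical callable that runs one forward pass, and the percentile is a simple nearest-rank approximation. For GPU frameworks that execute asynchronously, the device must be synchronized before the timer is stopped. Otherwise, the measured time understates the true latency.

```python
import statistics
import time
from typing import Any, Callable, Dict


def measure_latency(predict: Callable[[Any], Any], sample: Any,
                    warmup: int = 5, runs: int = 50) -> Dict[str, float]:
    """Median and approximate 95th-percentile wall-clock latency (ms) of one call."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):  # discard first calls (lazy initialisation, caches)
        predict(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    timings.sort()
    p95 = timings[min(len(timings) - 1, int(0.95 * len(timings)))]
    return {"median_ms": statistics.median(timings), "p95_ms": p95}


def dummy_predict(pixels):
    # Hypothetical stand-in for a model's forward pass.
    return sum(p * p for p in pixels)


stats = measure_latency(dummy_predict, list(range(10_000)), warmup=3, runs=20)
print(stats)
```

Reporting the median together with a tail percentile shows both typical latency and occasional slow calls, and the tail matters for real-time field use.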

Practical implications

The findings of this study highlight the potential of the ResNet152 model as a reliable tool for cotton disease detection, with practical applications in real-time agricultural management systems. By integrating the model into mobile or web-based applications, farmers can upload images captured by smartphones or drones for instant disease analysis and actionable recommendations, enabling early detection, reduced crop losses, and optimized pesticide usage. However, practical deployment faces challenges, including the high computational demands of ResNet152, which require powerful hardware like GPUs or optimized edge devices, making it less accessible in resource-limited regions. Additionally, the costs associated with hardware, cloud integration, and model maintenance could hinder adoption among small-scale farmers, necessitating cost-effective solutions. Scalability across diverse environments and field conditions, along with the need for periodic model retraining, further complicates deployment. Lastly, in areas with limited internet access, offline capabilities or edge computing solutions will be crucial. Addressing these challenges can enable ResNet152 to become a key component in precision agriculture, empowering farmers with advanced technology for sustainable crop management. In particular, the following benefits can be obtained.

- Improved precision agriculture: By leveraging deep learning models like ResNet152, this study highlights the potential for AI-driven automated disease detection, enabling early intervention to prevent crop losses. This can enhance precision agriculture practices, leading to higher yields and optimized pesticide usage, reducing costs and environmental impact.

- Need for scalable and low-cost solutions: The study's reliance on high-computation models suggests the necessity of developing lightweight, mobile-friendly alternatives for real-time disease detection. Edge computing and cloud-based models can help farmers in resource-limited settings by providing accessible, cost-effective solutions without requiring powerful GPUs.

- Integration with IoT and smart farming technologies: Deep learning-based disease detection could be integrated with IoT-enabled smart farming systems, allowing real-time monitoring using drones, sensors, and automated spraying systems. This can help create a fully automated crop management system that alerts farmers to infections and suggests targeted treatments.

Challenges and limitations

This study on cotton crop disease detection using deep learning, particularly ResNet152, demonstrates high accuracy but has several limitations. The model's real-world applicability is restricted by its reliance on high-resolution images. Such images may be hard to obtain in real farming conditions with poor lighting or extreme weather. The model may also fail on newly emerging diseases not included in the training dataset. Additionally, the model requires high computational power, making it unsuitable for real-time applications on mobile devices or in resource-limited environments. The dataset, though diverse, includes only four disease types and may not generalize well across different regions. Moreover, overfitting remains a risk because of dataset augmentation and a uniform class distribution that may not reflect real-world disease occurrence. Finally, the study lacks real-time deployment testing and does not address cost-effective solutions, such as lightweight models or edge computing for offline detection.

One of the primary limitations of this study is the potential performance drop of the ResNet152 model under extreme real-world variations, such as severe weather conditions (e.g., excessive rain, drought, or poor lighting) and the presence of atypical or newly emerging cotton diseases not included in the training dataset. These factors could lead to reduced accuracy and misclassification due to the lack of robust generalization for such out-of-distribution data. Additionally, the model’s reliance on high-quality images might limit its effectiveness in scenarios involving blurred, noisy, or low-resolution images, which are common in real-world field conditions.
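This robustness concern could be probed before deployment by re-evaluating a trained model on deliberately degraded test images. The sketch below is hypothetical, and the study did not run such a test. It implements three simple NumPy degradations (Gaussian noise, box blur, and reduced resolution) and an accuracy helper. The noise level, kernel size, and downsampling factor are illustrative. `predict` stands for any image-to-label callable and is replaced here by a toy brightness rule rather than a trained CNN.

```python
import numpy as np


def add_gaussian_noise(img, sigma, rng):
    """Additive Gaussian sensor noise on a uint8 image, clipped back to [0, 255]."""
    noisy = img.astype(np.float64) + rng.normal(0.0, sigma, size=img.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


def box_blur(img, k=3):
    """k x k mean filter (k odd, edges replicated): a crude proxy for defocus blur."""
    pad = k // 2
    pad_width = ((pad, pad), (pad, pad)) + ((0, 0),) * (img.ndim - 2)
    padded = np.pad(img.astype(np.float64), pad_width, mode="edge")
    h, w = img.shape[:2]
    out = np.zeros(img.shape, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return np.round(out / (k * k)).astype(np.uint8)


def low_resolution(img, factor=4):
    """Drop resolution by striding, then blow it back up by pixel repetition."""
    small = img[::factor, ::factor]
    up = np.repeat(np.repeat(small, factor, axis=0), factor, axis=1)
    return up[: img.shape[0], : img.shape[1]]


def accuracy_under(degrade, predict, images, labels):
    """Accuracy of `predict` (hypothetical image -> label callable) on degraded copies."""
    correct = sum(predict(degrade(img)) == y for img, y in zip(images, labels))
    return correct / len(labels)


def predict(img):
    # Toy brightness rule standing in for a trained classifier.
    return "bright" if img.mean() > 127 else "dark"


rng = np.random.default_rng(0)
images = [np.full((32, 32, 3), 200, np.uint8), np.full((32, 32, 3), 50, np.uint8)]
labels = ["bright", "dark"]
degradations = {
    "noise": lambda im: add_gaussian_noise(im, 10.0, rng),
    "blur": box_blur,
    "low-res": low_resolution,
}
for name, degrade in degradations.items():
    print(name, accuracy_under(degrade, predict, images, labels))
```

Comparing these scores with accuracy on clean test images gives a first, rough estimate of how much performance might drop under field conditions.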

Conclusions

Recent advancements in deep learning have opened up new opportunities for deploying artificial intelligence techniques in various fields, including agriculture. In particular, deep learning models have shown excellent performance in crop management practices, especially disease detection. This work targeted four cotton diseases: mealybug, anthracnose, thrips, and blight. To explore the effectiveness of different deep learning models for cotton disease detection, eighteen deep learning models were trained and tested for performance comparison. The models were trained on a dataset collected from real fields under various illumination conditions. The dataset was preprocessed and augmented to make it suitable for deep learning models. Experiments with different configurations and hyperparameters showed that DenseNet169, EfficientNetB1, MobileNetV2, and ResNet152 outperformed the other models in terms of detection accuracy and performance. Among these four, ResNet152 achieved the highest accuracy in training, testing, and validation. These results suggest that ResNet152 can detect cotton diseases with high accuracy and could be used in real-time scenarios, given suitable hardware. This research demonstrates the potential of deep learning models to significantly improve disease detection in agriculture and other fields. Future work includes embedding these models into variable-rate spraying technologies for the precise application of agrochemicals in the field. It also includes integrating IoT devices and edge computing for real-time, lightweight disease detection, and extending the model to other crops using transfer learning. Additionally, collaboration with agronomists and expanded datasets covering diverse conditions will enhance accuracy and generalizability.

Data availability

The data can be requested from the corresponding authors.

Change history

24 June 2025

A Correction to this paper has been published: https://doi.org/10.1038/s41598-025-07853-2

References

Pandey, V. L., Dev, S. M. & Jayachandran, U. Impact of agricultural interventions on the nutritional status in South Asia: A review. Food Policy 62, 28–40 (2016).

Zhang, Q., Men, X., Hui, C., Ge, F. & Ouyang, F. Wheat yield losses from pests and pathogens in China. Agriculture, Ecosystems & Environment 326, 107821 (2022).

Iqbal, T. et al. Development of real time seed depth control system for seeders. Environmental Sciences Proceedings 23(1), 7 (2022).

Azam, A. & Shafique, M. Agriculture in Pakistan and its impact on economy: A review. Int. J. Adv. Sci. Technol. 103, 47–60 (2017).

Latif, M. R. et al. Cotton leaf diseases recognition using deep learning and genetic algorithm. Computers, Materials & Continua 69(3) (2021).

Abbas, S. & Halog, A. Analysis of Pakistani textile industry: Recommendations towards circular and sustainable production. In Circular Economy: Assessment and Case Studies, 77–111 (2021).

Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 1251–1258 (2017).

Chohan, S., Perveen, R., Abid, M., Tahir, M. N. & Sajid, M. Cotton diseases and their management. In Cotton Production and Uses: Agronomy, Crop Protection, and Postharvest Technologies, 239–270 (2020).

Manavalan, R. Towards an intelligent approaches for cotton diseases detection: A review. Computers and Electronics in Agriculture 200, 107255 (2022).

Toscano-Miranda, R. et al. Artificial-intelligence and sensing techniques for the management of insect pests and diseases in cotton: A systematic literature review. The Journal of Agricultural Science 160(1–2), 16–31 (2022).

Anwar, S., Kolachi, A. R., Baloch, S. K. & Soomro, S. R. Bacterial blight and cotton leaf curl virus detection using Inception v4 based CNN model for cotton crops. In 2022 IEEE 5th International Conference on Image Processing Applications and Systems (IPAS), 1–6 (IEEE, 2022).

Fatima, A. et al. Deep learning-based multiclass instance segmentation for dental lesion detection. Healthcare 11, 347 (2023).

Karamti, H. et al. Improving prediction of cervical cancer using KNN imputed SMOTE features and multi-model ensemble learning approach. Cancers 15(17), 4412 (2023).

Ashraf, I., Kang, M., Hur, S. & Park, Y. MINLoc: Magnetic field patterns-based indoor localization using convolutional neural networks. IEEE Access 8, 66213–66227 (2020).

Ashraf, I. et al. A deep learning-based smart framework for cyber-physical and satellite system security threats detection. Electronics 11(4), 667 (2022).

Jalal, N., Mehmood, A., Choi, G. S. & Ashraf, I. A novel improved random forest for text classification using feature ranking and optimal number of trees. Journal of King Saud University-Computer and Information Sciences 34(6), 2733–2742 (2022).

Pacal, I. et al. A systematic review of deep learning techniques for plant diseases. Artificial Intelligence Review 57(11), 304 (2024).

Hoque, M. J., Islam, M. S., Uddin, J., Samad, M. A., De Abajo, B. S., Vargas, D. L. R. & Ashraf, I. Incorporating meteorological data and pesticide information to forecast crop yields using machine learning. IEEE Access (2024).

Jamil, M., Rehman, H., SaleemUllah, I. A. & Ubaid, S. Smart techniques for LULC micro class classification using Landsat8 imagery. Comput. Mater. Contin. 74(3), 5545–5557 (2023).

Kanwal, T. et al. An intelligent dual-axis solar tracking system for remote weather monitoring in the agricultural field. Agriculture 13(8), 1600 (2023).

Ali, T. et al. Smart agriculture: utilizing machine learning and deep learning for drought stress identification in crops. Scientific Reports 14(1), 1–16 (2024).

Tanveer, M. U. et al. Novel transfer learning approach for detecting infected and healthy maize crop using leaf images. Food Science & Nutrition 13(1), 4655 (2025).

Pacal, I. & Işık, G. Utilizing convolutional neural networks and vision transformers for precise corn leaf disease identification. Neural Computing and Applications, 1–18 (2024).

Santos, L., Santos, F. N., Oliveira, P. M. & Shinde, P. Deep learning applications in agriculture: A short review. In Robot 2019: Fourth Iberian Robotics Conference: Advances in Robotics, Vol. 1, 139–151 (Springer, 2020).

Aqib, M., Mehmood, R., Alzahrani, A. & Katib, I. In-memory deep learning computations on GPUs for prediction of road traffic incidents using big data fusion. In Smart Infrastructure and Applications: Foundations for Smarter Cities and Societies, 79–114 (2020).

Mahmood, N. et al. Mining software repository for cleaning bugs using data mining technique. Computers, Materials & Continua 69(1) (2021).

Chintalapudi, N., Battineni, G. & Amenta, F. Sentimental analysis of COVID-19 tweets using deep learning models. Infectious Disease Reports 13(2), 329–339 (2021).

Ali, S., Hafeez, Y., Abbas, M. A., Aqib, M. & Nawaz, A. Enabling remote learning system for virtual personalized preferences during COVID-19 pandemic. Multimedia Tools and Applications 80, 33329–33355 (2021).

Ismael, A. M. & Şengür, A. Deep learning approaches for COVID-19 detection based on chest X-ray images. Expert Systems with Applications 164, 114054 (2021).

Rajasekar, V., Venu, K., Jena, S. R., Varthini, R. J. & Ishwarya, S. Detection of cotton plant diseases using deep transfer learning. J. Mobile Multimedia 18(2), 307–324 (2022).

Saqib, M. A., Aqib, M., Tahir, M. N. & Hafeez, Y. Towards deep learning based smart farming for intelligent weeds management in crops. Frontiers in Plant Science 14, 1211235 (2023).

Anitha, K. & Srinivasan, S. Feature extraction and classification of plant leaf diseases using deep learning techniques. Computers, Materials & Continua 73(1) (2022).

Altalak, M., Ammad uddin, M., Alajmi, A. & Rizg, A. Smart agriculture applications using deep learning technologies: A survey. Applied Sciences 12(12), 5919 (2022).

Khan, F. et al. Deep learning-based approach for weed detection in potato crops. Environmental Sciences Proceedings 23(1), 6 (2022).

Aqib, M., Mehmood, R., Alzahrani, A., Katib, I. & Albeshri, A. A deep learning model to predict vehicles occupancy on freeways for traffic management. Int. J. Comput. Sci. Netw. Secur. 18, 1–8 (2018).

Khalid, S., Oqaibi, H. M., Aqib, M. & Hafeez, Y. Small pests detection in field crops using deep learning object detection. Sustainability 15(8), 6815 (2023).

Latif, G., Abdelhamid, S. E., Mallouhy, R. E., Alghazo, J. & Kazimi, Z. A. Deep learning utilization in agriculture: Detection of rice plant diseases using an improved CNN model. Plants 11(17), 2230 (2022).

Kumar, D. & Kukreja, V. Deep learning in wheat diseases classification: A systematic review. Multimedia Tools and Applications 81(7), 10143–10187 (2022).

Haque, M. A. et al. Deep learning-based approach for identification of diseases of maize crop. Scientific Reports 12(1), 6334 (2022).

Fuentes, A., Yoon, S., Kim, S. C. & Park, D. S. A robust deep-learning-based detector for real-time tomato plant diseases and pests recognition. Sensors 17(9), 2022 (2017).

Tetila, E. C. et al. Detection and classification of soybean pests using deep learning with UAV images. Computers and Electronics in Agriculture 179, 105836 (2020).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 770–778 (2016).

Yapici, M., Karakiş, R. & Gürkahraman, K. Improving brain tumor classification with deep learning using synthetic data. Computers, Materials & Continua 74(3) (2023).

Karakis, R. Mi-steg: A medical image steganalysis framework based on ensemble deep learning. Computers, Materials & Continua 74(3) (2023).

Aqib, M. et al. Rapid transit systems: smarter urban planning using big data, in-memory computing, deep learning, and GPUs. Sustainability 11(10), 2736 (2019).

Aqib, M., Mehmood, R., Alzahrani, A. & Katib, I. A smart disaster management system for future cities using deep learning, GPUs, and in-memory computing. In Smart Infrastructure and Applications: Foundations for Smarter Cities and Societies, 159–184 (2020).

Aqib, M. et al. Smarter traffic prediction using big data, in-memory computing, deep learning and GPUs. Sensors 19(9), 2206 (2019).

Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 779–788 (2016).

Abdel-Hamid, O. et al. Convolutional neural networks for speech recognition. IEEE/ACM Transactions on Audio, Speech, and Language Processing 22(10), 1533–1545 (2014).

Dutta, A. K., Albagory, Y., Sait, A. R. W. & Keshta, I. M. Autonomous unmanned aerial vehicles based decision support system for weed management. CMC-Computers, Materials & Continua 73(1), 899–915 (2022).

Khan, F. et al. A mobile-based system for maize plant leaf disease detection and classification using deep learning. Frontiers in Plant Science 14, 1079366 (2023).

Kaleem, A., Aqib, M., Saleem, S. R. & Cheema, M. J. M. Feasibility of ultrasonic sensors in development of real-time plant canopy measurement system. Environmental Sciences Proceedings 23(1), 22 (2022).

Eunice, J., Popescu, D. E., Chowdary, M. K. & Hemanth, J. Deep learning-based leaf disease detection in crops using images for agricultural applications. Agronomy 12(10), 2395 (2022).

Khan, B. et al. Bayesian optimized multimodal deep hybrid learning approach for tomato leaf disease classification. Scientific Reports 14(1), 21525 (2024).

Ramadan, S. T. Y. et al. Improving wheat leaf disease classification: Evaluating augmentation strategies and CNN-based models with limited dataset. IEEE Access (2024).

Pacal, I. Enhancing crop productivity and sustainability through disease identification in maize leaves: Exploiting a large dataset with an advanced vision transformer model. Expert Systems with Applications 238, 122099 (2024).

Paçal, İ & Kunduracıoğlu, İ. Data-efficient vision transformer models for robust classification of sugarcane. Journal of Soft Computing and Decision Analytics 2(1), 258–271 (2024).

Kunduracioglu, I. & Pacal, I. Advancements in deep learning for accurate classification of grape leaves and diagnosis of grape diseases. Journal of Plant Diseases and Protection 131(3), 1061–1080 (2024).

Faisal, H. M. et al. A customized convolutional neural network-based approach for weeds identification in cotton crops. Frontiers in Plant Science 15, 1435301 (2025).

Tharsanee, R., Soundariya, R., Kumar, A. S., Karthiga, M. & Sountharrajan, S. Deep convolutional neural network–based image classification for COVID-19 diagnosis. In Data Science for COVID-19, 117–145 (Elsevier, 2021).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014).

Huang, G., Liu, Z., Van Der Maaten, L. & Weinberger, K. Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 4700–4708 (2017).

Tan, M. & Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In International Conference on Machine Learning, 6105–6114 (PMLR, 2019).

Chulu, F. et al. A convolutional neural network for automatic identification and classification of fall army worm moth. International Journal of Advanced Computer Science and Applications 10(7) (2019).

Howard, A. G. et al. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861 (2017).

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. & Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 4510–4520 (2018).

He, Y. et al. Application of deep learning in integrated pest management: A real-time system for detection and diagnosis of oilseed rape pests. Mobile Information Systems 2019 (2019).

Funding

This research is funded by the European University of the Atlantic.

Author information

Contributions

HMF conceived the idea, performed data analysis and wrote the original draft. MA conceived the idea, performed data curation and wrote the original draft. SUR performed data curation and formal analysis, and designed the methodology. KM dealt with software, performed visualization and carried out project administration. SAO provided resources, dealt with software and performed validation. RCI acquired the funding for the research, and performed visualization and the initial investigation. IA supervised the research, performed validation, and reviewed and edited the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: In the original version of this Article, Saif Ur Rehman was incorrectly listed as a corresponding author. The correct corresponding authors for this Article are Muhammad Aqib and Imran Ashraf. Correspondence and requests for materials should be addressed to Muhammad Aqib or Imran Ashraf.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Faisal, H.M., Aqib, M., Rehman, S.U. et al. Detection of cotton crops diseases using customized deep learning model. Sci Rep 15, 10766 (2025). https://doi.org/10.1038/s41598-025-94636-4

DOI: https://doi.org/10.1038/s41598-025-94636-4