Abstract

This paper addresses the trajectory tracking control of a Gough–Stewart platform (GS platform) with an uncertain load. The uncertain load acts as an external disturbance on the parallel robot, which affects the dynamic coupling among the six degrees of freedom (DOF) and degrades the tracking performance. Although many researchers have focused on improving system robustness and tracking accuracy, two main problems remain. First, the system’s internal uncertainties, including the modeling, manufacturing, and assembly errors of the parallel robot, affect the control accuracy. Second, the uncertain external disturbance varies over a wide range and reduces the stability and tracking accuracy of the system. We therefore propose a novel control methodology: the dynamic image-based visual servoing (IBVS) radial basis function neural network (RBFNN) real-time compensation controller. This controller uses an acceleration model of visual servoing and compensates in real time, with the RBFNN, for the large uncertain disturbance from the load. The stability of the proposed controller is analyzed with the Lyapunov method. Simulations are performed on a GS platform with an uncertain load to test the controller’s performance. The results show that the controller provides good tracking accuracy and robustness simultaneously.


Introduction

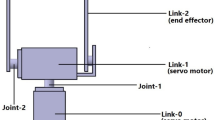

Parallel robots show better performance in high speed and acceleration, payload, stiffness, and accuracy compared with serial robots1. They are widely applied in machines, spacecraft docking mechanisms, flight simulators, motion simulators, and vibration simulators2,3,4. Generally, these parallel robots are required to follow a predefined trajectory when performing tasks, such as waveform replication. As a result, accurate trajectory tracking control is a crucial system requirement5. In addition, in most cases, parallel robots are needed to carry a load (especially when the mass of the load is much bigger than the moving platform) when operating tasks and this uncertain load always leads to a large tracking error6. Consequently, controlling parallel robots with an uncertain load is challenging but essential.

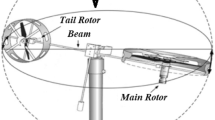

The Gough–Stewart platform (GS platform) is a six-degree-of-freedom (DOF) parallel robot with a complex architecture and highly nonlinear dynamics. Although many investigations focus on the control of the GS platform with an uncertain load7,8,9,10, two problems remain difficult to solve. The first is that the complex structure of parallel robots leads to a highly nonlinear relationship between the control input and output, which makes the forward kinematic model (FKM) difficult to obtain and solve11. Moreover, for a model-based controller with an inner sensor, high control accuracy usually relies on a detailed and accurate robot model. In reality, even detailed models suffer from inaccuracy because of internal uncertainties such as manufacturing and assembly errors. Therefore, an alternative control method is needed that bypasses the complex FKM and increases the robustness to the system’s internal uncertainties. The second problem is that when an uncertain load is attached to the moving platform of the GS platform, this load becomes the main external disturbance and significantly affects the performance of the system. It is difficult to identify the dynamic parameters of the load, and this strong external disturbance affects the robustness and stability of the system. Pi and Wang8 showed that when the load is uncertain and varies over time, it is usually difficult to guarantee the accuracy and robustness of the controller simultaneously.

For the first problem, an alternative is an operational space control scheme that uses an external sensor. External sensor-based control offers precise relative placement of a sensor and an observed object. It has a large feature convergence domain and is robust to modelling errors12. Image-based visual servoing (IBVS) is a kind of external sensor-based control that uses visual information obtained from the camera to close the control loop directly in the image plane13. Compared with the other main kind of visual servoing, position-based visual servoing (PBVS), IBVS is more robust to camera calibration errors and ensures that the target can always be observed. It has been successfully applied to control parallel robots14,15,16,17. Based on the conventional IBVS, several advanced visual servoing controllers have been developed, such as the proportional controller12, sliding mode control18, and adaptive visual servoing controllers19,20. All of these controllers are designed to generate a velocity screw to drive the robot. As a result, only the kinematic model of the robot is considered, and the velocity command only allows a proportional controller to ensure error convergence. This approach leads to overshoot and cannot guarantee the smoothness of the trajectory. In addition, noise in the camera observation and motion vibration of the robot may cause sudden variations in the velocity command. Therefore, an IBVS scheme that generates only the velocity screw is not well suited to the trajectory control of parallel robots. To improve the control performance, the dynamic IBVS controller, which links the dynamics of the robotic system to the visual feedback, has been developed21,22,23,24,25. This controller considers the second-order interaction model, which relates the image feature acceleration to the robot kinematics and dynamics, so that the computed torque control can be obtained directly from the image feature space. With this controller, a smoother response and better performance can be achieved. It should be noted that in the literature22,23,24,25 the dynamic IBVS controller is only validated on serial robots or mobile robots, and few researchers have applied it to parallel robots. In addition, the conventional dynamic IBVS has not been shown to achieve accurate trajectory tracking for parallel robots under uncertain load disturbance.

For the second problem, a GS platform with an uncertain load can be seen as a complicated, dynamically coupled, and highly time-varying nonlinear system. The uncertain load brings an unmatched external disturbance that varies over a wide range, so that the stability and tracking accuracy of a completely model-based controller are affected. In Aghili and Namvar26 and Pi and Wang8, force sensors measure the load disturbance, and the corresponding adaptive control law is designed with force-sensing feedback. Du et al.27 propose adding a variable structure compensator to generate a compensation torque for the load uncertainty. However, additional inner sensors and mechanical structures increase the design difficulty and the robot’s footprint. Other intelligent control methods have also been investigated to overcome system uncertainties. Data-driven adaptive controllers have been proposed to update the inverse kinematic model to adapt to changes in the load28,29. Nevertheless, the optimizer limits the controller’s performance, and for these model-free controllers it is difficult to ensure convergence in practice. Sliding mode control has also been proposed to reduce the impact of disturbances30,31,32,33. However, practical measurement noise can make the sliding mode manifold unreachable or the closed-loop system inhomogeneous, which makes the stability analysis of the controller challenging. More seriously, sliding mode control suffers from the chattering phenomenon. Besides, one common problem of the controllers mentioned above is that they are all designed in the actuator joint space. Consequently, the system’s internal uncertainties still affect positioning accuracy during implementation. Neural networks have been widely applied to control uncertain robot systems due to their high adaptability and learning ability34. Compared with other types of neural networks (back propagation neural network (NN)35, recurrent NN36, Markovian NN37, Hopfield NN38, etc.), the radial basis function neural network (RBFNN) is a local approximation neural network and is easier to implement and analyze due to its simple and smooth characteristics7,39,40. It has a high convergence rate and high approximation precision and can avoid local optima, which meets the requirements of real-time robot control. Moreover, the strong approximation ability of the RBFNN helps improve the robustness of the control system and handles periodic disturbances39,40. Approximating the whole robot system (GS platform with an uncertain load) directly is hard to realize, and its convergence is hard to ensure in practice. Instead, in this paper we propose an RBFNN real-time compensator in the image feature space that estimates and compensates for the unknown part of the robot system caused by the external disturbance. The image feature errors and their time derivatives, which reflect the impact of the external disturbance on the system, are the inputs of the RBFNN. The output of the RBFNN is then a compensating input in the dynamic IBVS controller. In this way, stable dynamical behaviour can be realized, and the compensator improves the tracking precision, so that accuracy and robustness to both the system uncertainties and the external disturbance are achieved simultaneously.

This paper focuses on the trajectory tracking control of a Gough–Stewart platform with an uncertain load, which can be seen as a complicated, dynamically coupled, nonlinear system with internal uncertainties and external disturbance. To this end, we develop the dynamic IBVS RBFNN real-time compensation control scheme. The main contributions and innovations are summarized as follows:

- To bypass the complex structure and improve the robustness to the modelling, manufacturing, and assembly errors of the Gough–Stewart platform, a dynamic IBVS of the GS platform is investigated. It produces the acceleration screw by considering second-order models in visual servoing, so that smoother and more reliable feature tracking responses can be achieved.

- In light of the unmatched external disturbance caused by the uncertain load, an RBFNN real-time compensator is developed in the image feature space. This compensator adaptively compensates for the visual disturbance by correcting the image feature to reject this unmatched disturbance. Moreover, since the compensator is created in the image feature space, robustness to the system uncertainties is obtained at the same time.

- Comparisons with conventional and existing methods demonstrate the superiority of the proposed dynamic IBVS RBFNN real-time compensation control scheme regarding tracking accuracy, convergence, and robustness to system uncertainties and external disturbance.

The remainder of this paper is organized as follows. A brief recall of the kinematic and dynamic models of IBVS is given in Section “IBVS kinematic and dynamic decoupling”, and the inverse dynamic model of the GS platform with an uncertain load is presented in Section “Modeling of the GS platform with an external load”. In Section “RBFNN real-time compensator in the image feature space of a Gough–Stewart platform with an uncertain load”, we develop the novel dynamic IBVS RBFNN real-time compensation controller. Its stability is analyzed in Section “Stability analysis”. Numerical simulations are performed in Section “Numerical simulations”. Finally, we draw conclusions in Section “Conclusion”.

Dynamic IBVS of the Gough–Stewart platform

IBVS kinematic and dynamic decoupling

For IBVS, the motion of the image feature \(\mathbf{s}\in \mathbb {R}^{m\times 1}\) can be related to the spatial relative camera-object kinematics screw \(\varvec{\tau }\in \mathbb {R}^{6\times 1}\) with the so-called interaction matrix. The interaction matrix \(\mathbf{L}_{s}\) is defined as follows12:

\(\varvec{\tau }\) can be linked to the active joint velocities \(\dot{\mathbf{q}}\in \mathbb {R}^{n\times 1}\) by the robot kinematic matrix \(\mathbf{J}\). Then we have:

where \(\mathbb {T}\) is the matrix that transforms the kinematic screw to the image frame.

In order to realize the dynamic sensor-based control, the acceleration of the image feature \(\ddot{\mathbf{s}}\) must be considered. The second-order interaction model of IBVS can be obtained by the differentiation of Eq. (1):

in which

where \(\mathbf{a}\) is the spatial relative camera-object acceleration screw, and v and w are the camera’s linear and angular velocities, respectively. Eq. (4) holds when there is relative rotation between the camera frame and the observed object.

After some simple manipulation, we can rewrite Eq. (3) as:

\(\mathbf{H}_{s}\) represents the time derivative of the interaction matrix and the Coriolis acceleration. We can write it in the following form:

where the expressions of \(\Omega _{x}\) and \(\Omega _{y}\) can be found in Keshmiri et al.41 (Eqs. 15 and 16 on page 3).

Assuming an eye-to-hand model13,24, then the second-order model of the visual servoing can be written as:

in which \(\varvec{\mu }_{s}\doteq \mathbf{L}_{s}\mathbb {T}\dot{\mathbf{J}}\dot{\mathbf{q}}+\mathbf{H}_{s}\).

Considering the dynamic behaviour of a general robotic system:

where \(\mathbf{q}\) is the generalized actuator coordinate vector, \(\mathbf{M}_{\mathbf{q} }(\mathbf{q} )\in \mathbb {R}^{n\times n}\) is the symmetric and positive definite inertia matrix, \(\mathbf{C}_{\mathbf{q} }(\mathbf{q}, \dot{\mathbf{q}})\dot{\mathbf{q}}\) is the term of Coriolis and centrifugal torques, \(\mathbf{G}_{\mathbf{q} }(\mathbf{q})\) represents the gravitational term, and \(\Gamma \in \mathbb {R}^{n\times 1}\) is the projection of the generalized torques in the actuator space.

Therefore, by mixing (2) and (7) into (8), we have:
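For readers following the derivation, the chain of relations described in this subsection can be restated compactly. The forms below are a reconstruction from the surrounding definitions (the interaction matrix \(\mathbf{L}_{s}\), the transformation \(\mathbb {T}\), the kinematic matrix \(\mathbf{J}\), the term \(\varvec{\mu }_{s}\), and the general robot dynamics), not a verbatim copy of the numbered equations; \((\cdot )^{+}\) is assumed to denote the pseudo-inverse.

```latex
\begin{aligned}
\dot{\mathbf{s}}  &= \mathbf{L}_{s}\mathbb{T}\mathbf{J}\dot{\mathbf{q}}, \\
\ddot{\mathbf{s}} &= \mathbf{L}_{s}\mathbb{T}\mathbf{J}\ddot{\mathbf{q}} + \varvec{\mu}_{s},
\qquad \varvec{\mu}_{s} \doteq \mathbf{L}_{s}\mathbb{T}\dot{\mathbf{J}}\dot{\mathbf{q}} + \mathbf{H}_{s}, \\
\Gamma &= \mathbf{M}_{\mathbf{q}}(\mathbf{q})\ddot{\mathbf{q}}
        + \mathbf{C}_{\mathbf{q}}(\mathbf{q},\dot{\mathbf{q}})\dot{\mathbf{q}}
        + \mathbf{G}_{\mathbf{q}}(\mathbf{q}), \\
\Rightarrow\quad
\Gamma &= \mathbf{M}_{\mathbf{q}}(\mathbf{q})\,(\mathbf{L}_{s}\mathbb{T}\mathbf{J})^{+}
          \left(\ddot{\mathbf{s}} - \varvec{\mu}_{s}\right)
        + \mathbf{C}_{\mathbf{q}}(\mathbf{q},\dot{\mathbf{q}})\dot{\mathbf{q}}
        + \mathbf{G}_{\mathbf{q}}(\mathbf{q}).
\end{aligned}
```

The last line follows by solving the second relation for \(\ddot{\mathbf{q}}\) and substituting it into the robot dynamics; it expresses the actuator torque directly in terms of the image feature acceleration.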

We suppose that the dynamic IBVS control loop is a typical linear proportional-derivative (PD) control law, as in Fusco et al.24. We then obtain the following control law in the image feature space:

where \(\ddot{\mathbf{s}}^{d}\) is the desired acceleration of the image feature in image space, \(\mathbf{e}_{s}\) is the image feature error \(\mathbf{e}_{s}=\mathbf{s}^{d}-\mathbf{s}\), and \(\dot{\mathbf{e}}_{s}\) is its time derivative. \(\mathbf{K}_{ps}\) and \(\mathbf{K}_{vs}\) are positive diagonal matrices used to tune the linear behaviour of the system error: \(\ddot{\mathbf{e}}_{s}+\mathbf{K}_{vs}\dot{\mathbf{e}}_{s}+\mathbf{K}_{ps}\mathbf{e}_{s}=0\).
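To illustrate how the diagonal gains shape the error dynamics, the following minimal sketch integrates one scalar channel of the second-order error equation. The double pole at \(-10\), the initial error, and the step size are illustrative assumptions and are not values taken from this paper.

```python
def gains_from_double_pole(p):
    """Gains placing a double real pole at -p: s^2 + 2*p*s + p^2 = 0."""
    return p * p, 2.0 * p  # (kp, kv)


def simulate_error(kp, kv, e0, de0=0.0, dt=1e-3, t_end=2.0):
    """Integrate e'' + kv*e' + kp*e = 0 with semi-implicit Euler steps.

    Returns a list of (time, error) samples.
    """
    e, de = e0, de0
    history = [(0.0, e)]
    for k in range(1, int(round(t_end / dt)) + 1):
        dde = -kv * de - kp * e   # error acceleration from the PD law
        de += dde * dt            # update the error velocity first
        e += de * dt              # then the error itself
        history.append((k * dt, e))
    return history


# Hypothetical tuning: critically damped response with both poles at -10.
kp, kv = gains_from_double_pole(10.0)
trace = simulate_error(kp, kv, e0=0.05)
final_error = abs(trace[-1][1])
print(f"kp={kp}, kv={kv}, |e(t_end)|={final_error:.3e}")
```

Because the gain matrices are diagonal, each image feature coordinate behaves like one such independent scalar channel under ideal model knowledge.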

Modeling of the GS platform with an external load

The GS platform is a 6-\(U\underline{P}S\) robot with six degrees of freedom (DOF). The moving platform is connected to the fixed base by six individual prismatic actuators \(B_{i}P_{i}~(i=1\cdots 6)\) (Fig. 1). \(B_{c}-x_{0}y_{0}z_{0}\) is the base frame fixed to the center of the base B of the GS platform. \(C-{x}_{c}y_{c}z_{c}\) is the camera frame C. \(P_{c}-x_{p}y_{p}z_{p}\) is the moving platform frame P, and \(L_{c}-x_{l}y_{l}z_{l}\) is the frame L fixed to the load, whose origin \(L_{c}\) is the center of the load.

The inverse kinematic model of the GS platform has been studied in detail in Khalil and Guegan42 and is not repeated here. The dynamic model of the GS platform is given by the following equation43:

where \(\mathbf{F}_{p}\in \mathbb {R}^{6\times 1}\) represents the dynamics of the moving platform, k is the number of the robot legs, \(\mathbf{J}_{pi}\) is the matrix which represents the mapping between the Cartesian coordinates of the end of the leg i and the Cartesian coordinates of the end effector, \(\mathbf{J}_{i}\) the Jacobian matrix of the serial kinematic structure of the leg i. \(\mathbf{E}_{i}\) is the inverse dynamic model of leg i, which is a function of joint variables \((\mathbf{q},\dot{\mathbf{q}},\ddot{\mathbf{q}})\).

We denote \(\mathbf{F}_{p}\) the dynamics of the moving platform in the Cartesian task space. It can be obtained as:

where \(\chi\) is the Cartesian coordinates of the moving platform, and \(m_{p}\) and \(\mathbf{w}\) are the mass and angular velocity of the moving platform, respectively. \(\mathbf{I}_{p}\) represents the inertia matrix of the moving platform and \(\mathbf{MS}_{p}\) is the vector of first moments of the platform around the origin of the platform frame:

\({M\tilde{\mathbf{S}}}_{p}\) denotes the skew matrix associated with \(\mathbf{MS}_{p}\). \(\mathbf{I}_{3}\) represents the \(3\times 3\) identity matrix, and the gravity acceleration is g.

Then, considering the uncertain system formed by a GS platform with a load fixed to its moving platform, we assume that the eccentric position vector of the centre of mass of the load with respect to the frame L is \(^{L}\mathbf{x}=[\Delta x, ~ \Delta y, ~ \Delta z]^{T}\), which is totally unknown.

The inertia matrix of the load with respect to the frame L can be expressed as:

Then, the elements of the inertia matrix of the load with respect to the moving platform frame P, \(^{P}\mathbf{I}_{l}\) can be obtained by:

In which \(m_{l}\) is the mass of the external load. The inertia matrix of the load with respect to the base frame can be obtained by:

In which \(\mathbf{R}\) is the rotation matrix of the moving platform.

The expression of the vector \(\mathbf{x}\) expressed in the frame B can be written as:

Therefore, considering (10), (11), and (12), and neglecting the dynamics of the legs of the GS platform (compared with the load and the moving platform, the dynamics of the electrically driven legs can be ignored)7, the resulting visual servoing control law of the Gough–Stewart platform with an uncertain load can be written as (17).

In (17), we have \(\mathbf{t}=\ddot{\mathbf{s}}^{d}+\mathbf{K}_{vs}\dot{\mathbf{e}}_{\mathbf{s}}+\mathbf{K}_{ps}\mathbf{e}_{\mathbf{s}}\) and \(\mathbf{MS}=[m_{l}l_{x},~ m_{l}l_{y},~ m_{l}l_{z}]^{T}\). \(\mathbb {A}\) is the part of the dynamics due to the moving platform and \(\mathbb {B}\) is the part due to the uncertain load. The eccentric position vector of the center of mass of the load \(\mathbf{x}\) is time-varying, and an accurate model cannot be obtained in advance. If the mass of the load is large and \(\mathbb {B}\) is not taken into account, the tracking accuracy of the system will be seriously affected.

Since the Gough–Stewart platform has six DOF, observing a minimum of three points is enough to fully control them. To avoid the ambiguity of multiple global minima that arises with only three points, we take four coplanar points as the target to be observed12. The eye-to-hand visual servoing model is applied: we fix the camera in space and attach the target to the bottom plane of the moving platform of the GS platform. The image feature \(\mathbf{s}\) is defined as \(\mathbf{s}=[x_1, y_1, x_2, y_2, x_3, y_3, x_4, y_4]^{T}\in \mathbb {R}^{8}\), where \((x_{i},y_{i})\) is the normalized coordinate of the point \(\mathbf{A}_{i}\) (see Fig. 2).
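As an illustration of how the four point features give an \(8\times 6\) interaction matrix, the sketch below stacks the classical point-feature interaction matrix from the visual servoing literature. It is written for the velocity screw expressed in the camera frame, so the eye-to-hand transformation \(\mathbb {T}\) used in this paper is not included. The point coordinates and the depth of 1 m are hypothetical values chosen only for the example.

```python
def point_interaction_matrix(x, y, Z):
    """2x6 interaction matrix of one normalized image point (x, y) at depth Z."""
    return [
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ]


def stacked_interaction_matrix(points, depths):
    """Stack the 2x6 blocks of all observed points (8x6 for four points)."""
    rows = []
    for (x, y), Z in zip(points, depths):
        rows.extend(point_interaction_matrix(x, y, Z))
    return rows


def feature_velocity(L, screw):
    """Image feature velocity s_dot = L * screw for a 6-vector screw."""
    return [sum(l * v for l, v in zip(row, screw)) for row in L]


# Hypothetical square target seen at a depth of 1 m.
points = [(-0.1, -0.1), (0.1, -0.1), (0.1, 0.1), (-0.1, 0.1)]
L = stacked_interaction_matrix(points, [1.0] * 4)

# Pure translation along the camera x axis, then pure rotation about the optical axis.
s_dot_tx = feature_velocity(L, [0.1, 0.0, 0.0, 0.0, 0.0, 0.0])
s_dot_rz = feature_velocity(L, [0.0, 0.0, 0.0, 0.0, 0.0, 1.0])
print(len(L), len(L[0]))
```

With four points the stacked matrix has more rows than columns, which is why a pseudo-inverse appears when the feature-space command is mapped back to the robot.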

Sensor-based neural network compensation control

RBFNN real-time compensator in the image feature space of a Gough–Stewart platform with an uncertain load

As presented in Section “Dynamic IBVS of the Gough–Stewart platform” and (17), with the pole placement method, the positive diagonal matrices \(\mathbf{K}_{vs}\) and \(\mathbf{K}_{ps}\) ensure that the system error tends to zero. However, this formulation is derived under the assumption of perfect model information for \(\mathbb {A}\) and \(\mathbb {B}\). In reality, the mass, centroid position, and moment of inertia of the uncertain load are completely unknown, and in some cases its centroid position is time-varying. We then obtain the following error equation:

or

where \(\hat{\mathbf{K}}_{ps}\) and \(\hat{\mathbf{K}}_{vs}\) are constant coefficients. \(\Delta \mathbf{F}=-({\mathbf{J}^{T}\begin{bmatrix} m_{p}\mathbf{I}_{3} & \varvec{0} \\ \varvec{0}& \mathbf{I}_{p} \end{bmatrix}(\mathbf{L}_{s}\mathbb {T}\mathbf{J})^{+}})^{+}\mathbb {B}\) which represents the external uncertainty of the system caused by the uncertain load.

It has been demonstrated in Li et al.44, Van Cuong and Nan45, and Li et al.46 that the Gaussian radial basis function can be applied to approximate a nonlinear system. We therefore propose to apply the adaptive RBFNN to compensate for the uncertain disturbance \(\Delta \mathbf{F}\) directly in the feature space. The structure of the RBFNN is illustrated in Fig. 3. It is composed of three layers: the input layer, the hidden layer, and the output layer. In the input layer, the inputs are \(\varvec{x}=[x_{1}, x_{2},\cdots , x_{k}]\in \mathbb {R}^{k}\), and they are passed to the hidden layer through a nonlinear transformation. In this case, we use Gaussian functions as RBFs because they are strictly positive and continuous on \(\mathbb {R}^{n}\) and suppress noise39.

in which j is the index of the node in the hidden layer, \(\varvec{c}\) and b are, respectively, the centre vector and the width of the Gaussian function, and \(\varphi _{j}\) is the Gaussian activation function of hidden node j.

The output of the RBFNN is a linear weighted combination:

in which \(\mathbf{W}\) represents the weight matrix. \(\varvec{\eta }\) is the approximation error and is bounded \(\left| \varvec{\eta } \right| <{\eta }^{*}\), \({\eta }^{*}>0\).
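The hidden and output layers described above can be sketched as follows. The Gaussian form \(\exp (-\Vert \varvec{x}-\varvec{c}_{j}\Vert ^{2}/(2b^{2}))\) is the commonly used convention and is assumed here; the 15 nodes, the centre range \([-1, 1]\), and the width of 3 are the values reported in the simulation section, while placing the centres on the diagonal of a two-dimensional input space is a hypothetical choice made only to keep the example short.

```python
import math


def gaussian_rbf(x, centers, width):
    """Hidden-layer activations phi_j = exp(-||x - c_j||^2 / (2 * width^2))."""
    phi = []
    for c in centers:
        dist2 = sum((xi - ci) ** 2 for xi, ci in zip(x, c))
        phi.append(math.exp(-dist2 / (2.0 * width ** 2)))
    return phi


def rbfnn_output(x, centers, width, W):
    """Linear output layer y = W^T phi(x); W has one row per hidden node."""
    phi = gaussian_rbf(x, centers, width)
    n_out = len(W[0])
    return [sum(W[j][k] * phi[j] for j in range(len(phi))) for k in range(n_out)]


n_hidden = 15
grid = [-1.0 + 2.0 * j / (n_hidden - 1) for j in range(n_hidden)]
centers = [(g, g) for g in grid]            # hypothetical centre placement
W0 = [[0.0, 0.0] for _ in range(n_hidden)]  # weights initialized to zero

phi = gaussian_rbf((0.0, 0.0), centers, 3.0)
y0 = rbfnn_output((0.0, 0.0), centers, 3.0, W0)
print(max(phi), y0)
```

With a width of 3 and centres confined to \([-1, 1]\), all nodes respond strongly to every input in that range, so the network behaves as a smooth, slowly varying approximator.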

Taking the RBFNN compensator into consideration, the control law (17) can be transformed to:

where \(\Delta \hat{ \mathbf{F}}\) represents the estimated output of the RBFNN.

We define \({\varvec{x}}=[{\mathbf{e}_{s}}~~{\dot{\mathbf{e}}}_{s}]^{T}\) to be the variable, then the state equation can be obtained as:

where

The approximation law of RBFNN is given as Li et al.44; Dai et al.7:

The optimal weight \(\mathbf{W}^{*}\) drives the output of the RBFNN to the desired value, and \(\hat{\mathbf{W}}\) is an estimate of this weight. The ideal weight \(\mathbf{W}^{*}\) must satisfy \(\mathbf{W}^{*}=argmin(\Delta \mathbf{F}(\varvec{x})-\Delta \hat{\mathbf{F}}(\varvec{x}, \mathbf{W}))\). We then design the corresponding adaptive law as:

where \(\gamma > 0\) is a positive gain. The definition of \(\mathbf{P}\) will be given in the following stability analysis.

The bounded optimal approximate error is defined as:

Then (23) can be rewritten as:

Training process of the RBFNN is done by the following algorithm:

- Step 0: Initialize all weights as zeros.

- Step 1: Calculate all elements of the output \(\Delta \mathbf{F}\) by equation (24).

- Step 2: Calculate the error \(\varvec{\eta }\) by equation (26).

- Step 3: Update the weights using the adaptive law (25).

- Step 4: Calculate the Euclidean norm of \(\varvec{\eta }\), the \(\left\| \varvec{\eta }\right\|\).

- Step 5: Return to Step 2 and repeat the calculations until the value of \(\left\| \varvec{\eta }\right\|\) is less than the desired error \(\varvec{\eta }_{0}\).

The diagram of the proposed control scheme based on (22) is illustrated in Fig. 4. As shown in Fig. 4, the desired acceleration of the image feature \(\ddot{\mathbf{s}}^{d}\) in (22) is predefined. \(\mathbf{e}_{s}\) and \(\dot{\mathbf{e}}_{s}\) in (22) are obtained directly from the image plane. \(\varvec{\mu }_{s}\) is computed from the visual information \(\mathbf{s}\) and the interaction matrix \(\mathbf{L}_{s}\). The uncertain disturbance \(\Delta \hat{\mathbf{F}}\) is estimated by the RBFNN real-time compensator from the network inputs \(\mathbf{e}_{s}\) and \(\dot{\mathbf{e}}_{s}\) through the training process presented above. Then, with the dynamic IBVS controller, the control input to the robot system \(\Gamma\) is obtained.
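The interplay between the PD feature-space loop and the online weight update can be illustrated on a single scalar error channel. The sketch below is a simplified illustration and not the simulation of this paper: it assumes error dynamics \(\ddot{e}+k_{v}\dot{e}+k_{p}e=d-\hat{d}\), an update of the form \(\dot{\hat{\mathbf{W}}}=\gamma \varvec{\varphi }(\varvec{x})\varvec{x}^{T}\mathbf{P}\mathbf{B}\) with \(\mathbf{B}=[0, 1]^{T}\), and hypothetical values for the gains, for \(\mathbf{Q}\), for \(\gamma\), and for the disturbance \(d(t)\).

```python
import math


def rbf(x, centers, width):
    """Gaussian activations (assumed form exp(-||x - c||^2 / (2 * width^2)))."""
    return [math.exp(-sum((a - c) ** 2 for a, c in zip(x, ctr)) / (2.0 * width ** 2))
            for ctr in centers]


def simulate(compensate, kp=100.0, kv=20.0, gamma=100.0, dt=1e-3, t_end=10.0):
    """Return the RMS error of one scalar channel with or without the compensator."""
    # Last column of P from A^T P + P A = -Q, with A = [[0, 1], [-kp, -kv]]
    # and the hypothetical choice Q = diag(100, 1).
    q1, q2 = 100.0, 1.0
    p12 = q1 / (2.0 * kp)
    p22 = (p12 + q2 / 2.0) / kv
    grid = [-1.0 + 2.0 * j / 14 for j in range(15)]
    centers = [(g, g) for g in grid]          # hypothetical centre placement
    w = [0.0] * 15                            # Step 0: zero initial weights
    e, de, sq_sum, n = 0.0, 0.0, 0.0, 0
    for k in range(int(round(t_end / dt))):
        t = k * dt
        d = 2.0 + 0.5 * math.sin(math.pi * t / 2.0)   # hypothetical disturbance
        phi = rbf((e, de), centers, 3.0)
        d_hat = sum(wj * pj for wj, pj in zip(w, phi)) if compensate else 0.0
        dde = -kv * de - kp * e + d - d_hat   # closed-loop error acceleration
        if compensate:
            s = p12 * e + p22 * de            # scalar x^T P B
            w = [wj + gamma * pj * s * dt for wj, pj in zip(w, phi)]
        de += dde * dt
        e += de * dt
        sq_sum += e * e
        n += 1
    return math.sqrt(sq_sum / n)


rms_pd = simulate(compensate=False)
rms_nn = simulate(compensate=True)
print(f"RMS error without compensation: {rms_pd:.4e}")
print(f"RMS error with RBFNN compensation: {rms_nn:.4e}")
```

In this toy setting the weight update behaves much like an adaptive integral action on the filtered error \(\varvec{x}^{T}\mathbf{P}\mathbf{B}\), which is why the compensated channel is expected to show a smaller residual error than the plain PD loop.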

Stability analysis

The Lyapunov function is designed as:

where \(\gamma > 0\) is a positive constant and tr() denotes the trace of a matrix. Since the real parts of the eigenvalues of \(\mathbf{A}\) are negative, there exist symmetric positive definite matrices \(\mathbf{P}\) and \(\mathbf{Q}\) that satisfy the following Lyapunov equation:

We denote \(\mathbf{V}_{1}=\frac{1}{2}\varvec{x}^{T}\mathbf{P}\varvec{x}\), \(\mathbf{V}_{2}=\frac{1}{2\gamma }tr(\tilde{\mathbf{W}}^{T}\tilde{\mathbf{W}})\), and \(\mathbf{M}=\mathbf{B}[\tilde{\mathbf{W}}^{T}\varvec{\varphi }(\varvec{x})+\varvec{\eta }]\).

Taking the time derivative of the Lyapunov function, we have:

In addition, since each of these terms is a scalar and \(\mathbf{P}^{T}=\mathbf{P}\), we have \(\varvec{x}^{T}\mathbf{P}\mathbf{B}\varvec{\eta }=\varvec{\eta }^{T}\mathbf{B}^{T}\mathbf{P}\varvec{x}\) and \(\varvec{x}^{T}\mathbf{P}\mathbf{B}\tilde{\mathbf{W}}^{T}\varvec{\varphi }(\varvec{x})=\varvec{\varphi }^{T} (\varvec{x})\tilde{\mathbf{W}}\mathbf{B}^{T}\mathbf{P}\varvec{x}\).

Then we have:

Since \(\varvec{\varphi }^{T}(\varvec{x})\tilde{\mathbf{W}}\mathbf{B}^{T}\mathbf{P}\varvec{x}\) is a real number, then

The time derivative of the Lyapunov function can be transformed to:

Because \(\dot{\tilde{\mathbf{W}}}=-\dot{\hat{\mathbf{W}}}\), then, with the adaptive law we gave in (25), we have:

where \(\left\| .\right\|\) is the Euclidean norm, and \(\lambda _{min}(\mathbf{Q})\) and \(\lambda _{max}(\mathbf{P})\) are the minimum eigenvalue of \(\mathbf{Q}\) and the maximum eigenvalue of \(\mathbf{P}\), respectively. Setting \(\dot{\mathbf{V}}=0\) gives \(\lambda _{min}(\mathbf{Q})=\frac{2\lambda _{max}(\mathbf{P})\left\| \varvec{\eta }\right\| }{\left\| \varvec{x}\right\| }\), so the convergence region of \(\varvec{x}\) is \(\left\| \varvec{x}\right\| < \frac{2\lambda _{max}(\mathbf{P})\left\| \varvec{\eta }\right\| }{\lambda _{min}(\mathbf{Q})}\). When \(\left\| \varvec{x}\right\| > \frac{2\lambda _{max}(\mathbf{P})\left\| \varvec{\eta }\right\| }{\lambda _{min}(\mathbf{Q})}\), \(\dot{\mathbf{V}}<0\) and \(\varvec{x}\) converges to this region. In conclusion, \(\varvec{x}\) always converges to a sufficiently small domain through the adjustment of \(\mathbf{Q}\), and the system is stable.
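The size of the convergence region can be evaluated numerically for a single scalar error channel. The sketch below assumes the Lyapunov equation in the standard form \(\mathbf{A}^{T}\mathbf{P}+\mathbf{P}\mathbf{A}=-\mathbf{Q}\) with \(\mathbf{A}=\begin{bmatrix} 0 & 1 \\ -k_{p} & -k_{v} \end{bmatrix}\) and a diagonal \(\mathbf{Q}\), for which \(\mathbf{P}\) has a closed form. The gains and the bound on \(\left\| \varvec{\eta }\right\|\) are hypothetical values.

```python
import math


def lyapunov_P(kp, kv, q1, q2):
    """Closed-form solution of A^T P + P A = -diag(q1, q2) for the 2x2 case.

    Returns (p11, p12, p22) of the symmetric matrix P.
    """
    p12 = q1 / (2.0 * kp)
    p22 = (p12 + q2 / 2.0) / kv
    p11 = kv * p12 + kp * p22
    return p11, p12, p22


def sym2_eigs(a, b, c):
    """Eigenvalues (min, max) of the symmetric matrix [[a, b], [b, c]]."""
    mean = 0.5 * (a + c)
    rad = math.hypot(0.5 * (a - c), b)
    return mean - rad, mean + rad


def ultimate_bound(kp, kv, q1, q2, eta_norm):
    """Radius 2 * lambda_max(P) * ||eta|| / lambda_min(Q) of the convergence region."""
    p11, p12, p22 = lyapunov_P(kp, kv, q1, q2)
    _, lam_max_P = sym2_eigs(p11, p12, p22)
    return 2.0 * lam_max_P * eta_norm / min(q1, q2)


# Hypothetical gains and approximation-error bounds.
kp, kv, q1, q2 = 100.0, 20.0, 1.0, 1.0
p11, p12, p22 = lyapunov_P(kp, kv, q1, q2)
lam_min_P, lam_max_P = sym2_eigs(p11, p12, p22)
b1 = ultimate_bound(kp, kv, q1, q2, eta_norm=0.01)
b2 = ultimate_bound(kp, kv, q1, q2, eta_norm=0.02)
print(f"P = [[{p11}, {p12}], [{p12}, {p22}]], bound = {b1:.4e}")
```

The bound scales linearly with \(\left\| \varvec{\eta }\right\|\), so a better RBFNN approximation directly shrinks the residual region in this simplified setting.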

Numerical simulations

Specification of the simulation environment

To test the effectiveness of the dynamic IBVS RBFNN real-time compensation controller, we perform numerical simulations in a Simulink/SimMechanics environment. A model of the Gough–Stewart platform is created in SimMechanics using the parameters given in Tab. 1. To better imitate real scenarios, we add an uncertainty of 10% to the inertia parameters of the moving platform and the load, and a 50 \(\mu\)m error to the geometric parameters.

In this simulation, the model of a single eye-to-hand camera is created in Simulink. We fix the camera along the \(z_{0}\) axis of the world frame (see Fig. 2) so that the target can be observed symmetrically. The resolution of the camera is \(1920 \times 1200\) pixels, and the focal lengths along the X axis and Y axis are 10 mm. The X-axis and Y-axis scaling factors are both 100 pixels/mm. The camera is fixed in space, and the target is attached to the bottom plane of the moving platform of the GS platform. As studied in Zhu et al.16, the camera observation error is the main cause of the positioning error of IBVS. We therefore add \(\pm 0.1\) pixel noise to the camera observation, as was done in Bellakehal et al.47 (see Fig. 2).
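To relate these camera parameters to the normalized image coordinates used as features, a minimal pinhole conversion can be sketched as follows. The focal length, scaling factor, and resolution are the values stated above; placing the principal point at the image centre and ignoring lens distortion are assumptions made for the example.

```python
FOCAL_MM = 10.0            # focal length along both axes (from the text)
SCALE_PX_PER_MM = 100.0    # pixel scaling factor along both axes (from the text)
WIDTH_PX, HEIGHT_PX = 1920, 1200


def normalized_to_pixel(x, y, u0=WIDTH_PX / 2.0, v0=HEIGHT_PX / 2.0):
    """Map normalized image coordinates to pixels (principal point assumed centred)."""
    f_px = FOCAL_MM * SCALE_PX_PER_MM   # focal length expressed in pixels
    return u0 + f_px * x, v0 + f_px * y


def pixel_noise_in_normalized_units(noise_px):
    """Convert a pixel-level observation noise to normalized coordinates."""
    return noise_px / (FOCAL_MM * SCALE_PX_PER_MM)


center_px = normalized_to_pixel(0.0, 0.0)
noise_norm = pixel_noise_in_normalized_units(0.1)
print(center_px, noise_norm)
```

Under these assumptions the focal length is 1000 pixels, so the \(\pm 0.1\) pixel observation noise corresponds to a perturbation of about \(10^{-4}\) in normalized coordinates.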

For the RBFNN real-time compensator, the parameters in (23) are given here:

Considering the requirement of the real-time control, the hidden layer of RBFNN contains 15 nodes. According to the range of the input data of RBFNN, the center vector of RBFNN nodes is evenly distributed in [-1, 1] and the width value of Gaussian function is set to be 3. The weights of RBFNN are all initialized as zeros.

To simulate an uncertain load, a mass block is fixed to the moving platform. The mass of the block is time-varying: \(m=300+50\sin (\pi t /2)\) kg. In addition, we define a time-varying position of the load centroid with respect to the moving platform frame P as follows:

The initial pose of the Gough–Stewart platform is \(\chi _{0}=[0,0,0.7836,0,0,0]^{T}\) with respect to the base frame \(B_{c}-x_{0}y_{0}z_{0}\) (the orientation is defined with ZXY Euler angles). Two complex time-varying trajectories of the moving platform are given in Table 2 and Table 3.

For comparison, four controllers are developed in this simulation to demonstrate the advantages of the proposed dynamic IBVS RBFNN real-time compensation controller.

- Controller 1 is a conventional model-based computed torque controller (CTC). No noise is added on the measurement feedback.

- Controller 2 is a conventional dynamic IBVS controller proposed in Keshmiri et al.22. The camera observation noise is \(\pm 0.1\) pixel as it was in Bellakehal et al.47.

- Controller 3 is an adaptive sliding mode controller proposed in Shao et al.48. Six force sensors are attached between the actuators and the moving platform. The observation noise of the force sensor is set to be \(\pm \frac{1}{160}\) N as it was in Bellakehal et al.47.

- Controller 4 is a dynamic IBVS RBFNN real-time compensation controller. The camera observation noise is \(\pm 0.1\) pixel.

First, no external load is attached to the Gough–Stewart platform, and it is controlled with Controller 1 and Controller 2 to track Trajectory 1 and Trajectory 2. The tracking error results and the orientation results are illustrated in Figs. 5 to 8.

From the results, we see that with the classical model-based CTC, accurate feedback, and no external load on the moving platform, the maximal tracking errors are about 0.003 m to 0.004 m, and the orientation errors are about 0.02 to 0.04 rad. The tracking error and orientation error converge after almost 1 second. With the dynamic IBVS controller, the corresponding Cartesian tracking error reduces to 0.0002 m, which is 10 to 15 times better than that of the CTC, and the corresponding orientation errors are about 0.002 rad, which is ten times better than that of the CTC. Moreover, the tracking error and orientation error converge to zero in 0.1 second. The results indicate that the classical model-based CTC is poorly suited to the control of parallel robots, since the manufacturing and assembly errors affect the tracking performance. The dynamic IBVS controller is more robust to these system errors. Although the camera observation noise has a negative influence, the controller still maintains high tracking accuracy.

Besides, to further demonstrate that the uncertain load does affect the system controller performance, we add the uncertain load defined above with time-varying mass and centroid to the moving platform of the GS platform and drive it to track Trajectory 1 and Trajectory 2 with Controller 2. The simulation results are depicted in Fig. 9 and 10.

As we see from the results, when an uncertain load is attached to the robot, this large disturbance affects the tracking performance of the dynamic IBVS controller. The tracking error and the orientation error are about four to five times worse than in the situation without an uncertain load. It takes about 0.4 s for the tracking error and orientation error to converge to zero. These results support the statement in Section “RBFNN real-time compensator in the image feature space of a Gough–Stewart platform with an uncertain load” that if the disturbance caused by the uncertain load is not compensated, the tracking performance will be greatly affected.

In the next step, we drive the Gough–Stewart platform carrying the load with time-varying mass and centroid to track Trajectory 1 and Trajectory 2 with Controller 3 and Controller 4. The results are illustrated in Figs. 11 to 14.

Controller 3 is the method proposed in Pi and Wang8, which uses force sensors to measure the load disturbances directly and applies an adaptive sliding mode controller to improve the tracking performance of the Gough–Stewart platform. From the results in Figs. 11 and 12, we see that the Cartesian tracking errors are about 0.004 m and the orientation errors are about 0.008 rad. The convergence takes about 0.5 s. The tracking accuracy is therefore worse than that of Controller 2. This is because Controller 3 is designed in the actuator space and is consequently not robust to the manufacturing and assembly errors.

The simulation results of Controller 4 are shown in Figs. 13 and 14. When the RBFNN is added to compensate for the external disturbance in the image feature space, both the Cartesian tracking precision and the orientation accuracy improve. The Cartesian tracking errors are about 0.0002 m and the orientation errors are about 0.002 rad, and both converge to zero within 0.2 s. These results differ little from those in Figs. 7 and 8, which indicates that the RBFNN real-time compensation designed in the image feature space does compensate for the impact of the external disturbance on the system.

In addition, the control signals of Controller 3 and Controller 4 are illustrated in Figs. 15 and 16. Compared with Controller 4, the sliding mode control of Controller 3 exhibits severe chattering, which increases the difficulty of practical application. A more detailed analysis of the simulation results is given in Table 4.
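One simple way to put a number on chattering is the total variation of the sampled control signal, which grows with every high-frequency switch. The sketch below is an illustration under stated assumptions and not a metric used in this paper: the helper `total_variation` and both synthetic signals are hypothetical and are not the control signals of Figs. 15 and 16.

```python
import math

def total_variation(signal):
    """Sum of absolute sample-to-sample changes of a sampled signal."""
    return sum(abs(b - a) for a, b in zip(signal, signal[1:]))

dt = 0.001
times = [k * dt for k in range(1000)]

# Smooth reference command: one period of a 10 N sine wave (synthetic).
smooth = [10.0 * math.sin(2.0 * math.pi * t) for t in times]

# Same command plus a switching term that flips sign at every sample,
# a crude stand-in for a discontinuous sliding mode term (synthetic).
switching = [u + 2.0 * (1.0 if k % 2 == 0 else -1.0)
             for k, u in enumerate(smooth)]

tv_smooth = total_variation(smooth)
tv_switching = total_variation(switching)
print(f"total variation: smooth = {tv_smooth:.1f}, switching = {tv_switching:.1f}")
```

Because total variation scales with signal amplitude and duration, it is only meaningful when the compared signals cover the same trajectory and time window.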

In conclusion, for the trajectory tracking of a Gough–Stewart platform, the tracking accuracy (both position and orientation) of the classical model-based CTC is poor. With the dynamic IBVS controller, the tracking accuracy can still be guaranteed even though uncertainties in the geometric parameters and dynamic inertia parameters are added to the model and the pixel noise is taken into account. The position and orientation accuracies are 15 times and 10 times better, respectively, than those of the CTC, and the convergence rate is improved. However, when the Gough–Stewart platform carries an uncertain load, the performance of the dynamic IBVS controller (convergence rate and tracking accuracy) is degraded by the external disturbance. When the dynamic IBVS RBFNN real-time compensation controller is applied instead, the tracking performance improves significantly. The Cartesian tracking and orientation accuracies are 3 times and 4 times better, respectively, than those of the dynamic IBVS controller under load (they are almost the same as those of the dynamic IBVS controller when no uncertain load is added), and faster convergence is achieved. In addition, compared with the adaptive sliding mode controller proposed in Pi and Wang8, the proposed controller reaches more than 10 times better tracking accuracy and a 2 times better convergence rate, and the chattering phenomenon is substantially reduced. Moreover, we do not need to add force sensors to measure the external disturbances, as was done in Pi and Wang8, which reduces the design difficulty and structural complexity. Therefore, all these simulation results indicate that, among the controllers compared, the dynamic IBVS RBFNN real-time compensation controller provides the best performance in controlling the Gough–Stewart platform with an uncertain load.
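The improvement factors in this summary follow from simple ratios of the approximate error magnitudes and convergence times quoted earlier in this section. The sketch below only redoes that arithmetic. The dictionary `reported` and the helper `improvement` are names introduced here, and where the text gives a range the lower bound is used, which is a choice made for this sketch.

```python
# Approximate figures quoted in the text above:
# (position error in m, orientation error in rad, convergence time in s).
# Where the text gives a range, the lower bound is used (an assumption).
reported = {
    "CTC, no load":          (0.003,  0.02,  1.0),
    "dynamic IBVS, no load": (0.0002, 0.002, 0.1),
    "adaptive SMC, load":    (0.004,  0.008, 0.5),
    "IBVS + RBFNN, load":    (0.0002, 0.002, 0.2),
}

def improvement(baseline, candidate):
    """Element-wise ratio baseline / candidate (larger favours the candidate)."""
    return tuple(b / c for b, c in zip(reported[baseline], reported[candidate]))

for base, cand in [("CTC, no load", "dynamic IBVS, no load"),
                   ("adaptive SMC, load", "IBVS + RBFNN, load")]:
    pos, ori, conv = improvement(base, cand)
    print(f"{cand} vs {base}: position x{pos:.1f}, "
          f"orientation x{ori:.1f}, convergence x{conv:.1f}")
```

Since the quoted values are rounded ("about 0.004 m"), these ratios are order-of-magnitude checks, and they differ between position, orientation, and convergence time.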

Conclusion

In this paper, to realize the trajectory tracking control of a Gough–Stewart platform with an uncertain load, we developed a dynamic image-based visual servoing (IBVS) radial basis function neural network (RBFNN) real-time compensation controller. This controller applies the dynamic IBVS, which produces the acceleration screw by considering second-order models in visual servoing, and develops an RBFNN real-time compensator in the image feature space to estimate the system uncertainties with visual feedback. The stability of the controller has been proven. Comparative simulations were performed on a Gough–Stewart platform with an uncertain load against a classical model-based CTC, a conventional dynamic IBVS controller, and an adaptive sliding mode controller. The results show that the proposed controller is robust to manufacturing and system errors and to external disturbance, and that it has the best tracking accuracy among the controllers compared. However, all these results were verified only through simulations and not by experiments on a robot prototype. Therefore, future work will focus on experimental validation of the proposed controller on real prototypes. In addition, this work does not consider the kinematic singularity and the visual servoing singularity in the control process. Another important research direction is therefore the avoidance of robot and controller singularities.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Merlet, J. P. Parallel Robots (Springer, 2006).

Fichter, E. F., Kerr, D. R. & Rees-Jones, J. The Gough-Stewart platform parallel manipulator: A retrospective appreciation. Proc. Inst. Mech. Eng. C J. Mech. Eng. Sci. 223(1), 243–281. https://doi.org/10.1243/09544062JMES1137 (2009).

Cirillo, A. et al. Optimal custom design of both symmetric and unsymmetrical hexapod robots for aeronautics applications. Robot. Comput. Integr. Manuf. 44, 1–16. https://doi.org/10.1016/j.rcim.2016.06.002 (2017).

Yang, J. et al. Dynamic modeling and control of a 6-DOF micro-vibration simulator. Mech. Mach. Theory 104, 350–369 (2016).

Ghobakhloo, A., Eghtesad, M. & Azadi, M. Position control of a stewart-gough platform using inverse dynamics method with full dynamics. In 9th IEEE International Workshop on Advanced Motion Control, 2006, 50–55 (2006). https://doi.org/10.1109/AMC.2006.1631631.

Wang, Z. et al. Hybrid adaptive control strategy for continuum surgical robot under external load. IEEE Robot. Autom. Lett. 6(2), 1407–1414. https://doi.org/10.1109/LRA.2021.3057558 (2021).

Dai, X., Song, S., Xu, W., Huang, Z. & Gong, D. Modal space neural network compensation control for Gough-Stewart robot with uncertain load. Neurocomputing 449, 245–257 (2021).

Pi, Y. & Wang, X. Trajectory tracking control of a 6-DOF hydraulic parallel robot manipulator with uncertain load disturbances. Control. Eng. Pract. 19(2), 185–193. https://doi.org/10.1016/j.conengprac.2010.11.006 (2011).

Yang, J. et al. Dynamic modeling and control of a 6-DOF micro-vibration simulator. Mech. Mach. Theory 104, 350–369. https://doi.org/10.1016/j.mechmachtheory.2016.06.011 (2016).

Shariatee, M. & Akbarzadeh, A. Optimum dynamic design of a Stewart platform with symmetric weight compensation system. J. Intel. Robot. Syst. 103(4), 66 (2021).

Zhu, M., Huang, C., Song, S. & Gong, D. Design of a Gough-Stewart platform based on visual servoing controller. Sensors 22(7), 2523 (2022).

Chaumette, F. & Hutchinson, S. Visual servo control. I. Basic approaches. IEEE Robot. Autom. Mag. 13(4), 82–90 (2006).

Chaumette, F. & Hutchinson, S. Visual Servoing and Visual Tracking (Springer, 2008).

Andreff, N., Dallej, T. & Martinet, P. Image-based visual servoing of a Gough-Stewart parallel manipulator using leg observations. Int. J. Robot. Res. 26(7), 677–687 (2007).

Briot, S., Rosenzveig, V., Martinet, P., Özgür, E. & Bouton, N. Minimal representation for the control of parallel robots via leg observation considering a hidden robot model. Mech. Mach. Theory 106, 115–147 (2016).

Zhu, M., Chriette, A. & Briot, S. Control-Based Design of a DELTA Robot. In ROMANSY 23 - Robot Design, Dynamics and Control, Proceedings of the 23rd CISM IFToMM Symposium, (2020).

Zhu, M., Briot, S. & Chriette, A. Sensor-based design of a delta parallel robot. Mechatronics 87, 102893 (2022).

Kim, J.-K., Kim, D.-W., Choi, S.-J. & Won, S.-C. Image-based visual servoing using sliding mode control. In: 2006 SICE-ICASE International Joint Conference, 4996–5001 https://doi.org/10.1109/SICE.2006.315092 (2006).

Wang, H., Liu, Y.-H. & Zhou, D. Adaptive visual servoing using point and line features with an uncalibrated eye-in-hand camera. IEEE Trans. Robot. 24(4), 843–857 (2008).

Fang, Y., Liu, X. & Zhang, X. Adaptive active visual servoing of nonholonomic mobile robots. IEEE Trans. Industr. Electron. 59(1), 486–497 (2011).

Piepmeier, J. A., McMurray, G. V. & Lipkin, H. Uncalibrated dynamic visual servoing. IEEE Trans. Robot. Autom. 20(1), 143–147 (2004).

Keshmiri, M., Xie, W.-F. & Mohebbi, A. Augmented image-based visual servoing of a manipulator using acceleration command. IEEE Trans. Industr. Electron. 61(10), 5444–5452. https://doi.org/10.1109/TIE.2014.2300048 (2014).

Vandernotte, S., Chriette, A., Martinet, P. & Roos, A.S. Dynamic sensor-based control. In: 2016 14th International Conference on Control, Automation, Robotics and Vision (ICARCV), pp. 1–6 (2016). https://doi.org/10.1109/ICARCV.2016.7838639.

Fusco, F., Kermorgant, O. & Martinet, P. A comparison of visual servoing from features velocity and acceleration interaction models. In: 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE 4447–4452 (2019).

Lee, S. & Chwa, D. Dynamic image-based visual servoing of monocular camera mounted omnidirectional mobile robots considering actuators and target motion via fuzzy integral sliding mode control. IEEE Trans. Fuzzy Syst. 29(7), 2068–2076. https://doi.org/10.1109/TFUZZ.2020.2985931 (2021).

Aghili, F. & Namvar, M. Adaptive control of manipulators using uncalibrated joint-torque sensing. IEEE Trans. Robot. 22(4), 854–860. https://doi.org/10.1109/TRO.2006.875489 (2006).

Du, B., Ling, Q. & Wang, S. Improvement of the conventional computed-torque control scheme with a variable structure compensator for delta robots with uncertain load. In: 2014 International Conference on Mechatronics and Control (ICMC), 99–104 https://doi.org/10.1109/ICMC.2014.7231525 (2014).

Yip, M. C. & Camarillo, D. B. Model-less hybrid position/force control: A minimalist approach for continuum manipulators in unknown, constrained environments. IEEE Robot. Autom. Lett. 1(2), 844–851. https://doi.org/10.1109/LRA.2016.2526062 (2016).

Li, M., Kang, R., Branson, D. T. & Dai, J. S. Model-free control for continuum robots based on an adaptive Kalman filter. IEEE/ASME Trans. Mechatron. 23(1), 286–297. https://doi.org/10.1109/TMECH.2017.2775663 (2018).

Guan, C. & Pan, S. Adaptive sliding mode control of electro-hydraulic system with nonlinear unknown parameters. Control Theory Appl. 16(11), 1275–1284 (2008).

Xu, J., Wang, Q. & Lin, Q. Parallel robot with fuzzy neural network sliding mode control. Adv. Mech. Eng. 10(10), 1687814018801261. https://doi.org/10.1177/1687814018801261 (2018).

Yang, C., Chen, C., He, W., Cui, R. & Li, Z. Robot learning system based on adaptive neural control and dynamic movement primitives. IEEE Trans. Neural Netw. Learn. Syst. 30(3), 777–787 (2019).

Huang, H., Zhang, T., Yang, C. & Chen, C. L. P. Motor learning and generalization using broad learning adaptive neural control. IEEE Trans. Industr. Electron. 67(10), 8608–8617. https://doi.org/10.1109/TIE.2019.2950853 (2020).

Huang, S. N., Tan, K. K. & Lee, T. H. Adaptive motion control using neural network approximations. Automatica 38(2), 227–233 (2002).

Wang, S., Zhu, H., Wu, M. & Zhang, W. Active disturbance rejection decoupling control for three-degree-of-freedom six-pole active magnetic bearing based on BP neural network. IEEE Trans. Appl. Supercond. 30(4), 1–5. https://doi.org/10.1109/TASC.2020.2990794 (2020).

Yang, J., Xie, Z., Chen, L. & Liu, M. An acceleration-level visual servoing scheme for robot manipulator with IoT and sensors using recurrent neural network. Measurement 166, 108137. https://doi.org/10.1016/j.measurement.2020.108137 (2020).

Shi, P., Li, F., Wu, L. & Lim, C.-C. Neural network-based passive filtering for delayed neutral-type semi-Markovian jump systems. IEEE Trans. Neural Netw. Learn. Syst. 28(9), 2101–2114. https://doi.org/10.1109/TNNLS.2016.2573853 (2017).

Guan, Z.-H., Chen, G. & Qin, Y. On equilibria, stability, and instability of Hopfield neural networks. IEEE Trans. Neural Netw. 11(2), 534–540. https://doi.org/10.1109/72.839023 (2000).

Poultangari, I., Shahnazi, R. & Sheikhan, M. RBF neural network based PI pitch controller for a class of 5-MW wind turbines using particle swarm optimization algorithm. ISA Trans. 51(5), 641–648 (2012).

Beyhan, S. & Alcı, M. Stable modeling based control methods using a new RBF network. ISA Trans. 49(4), 510–518. https://doi.org/10.1016/j.isatra.2010.04.005 (2010).

Keshmiri, M., Xie, W.-F. & Mohebbi, A. Augmented image-based visual servoing of a manipulator using acceleration command. IEEE Trans. Industr. Electron. 61(10), 5444–5452 (2014).

Khalil, W. & Guegan, S. Inverse and direct dynamic modeling of Gough-Stewart robots. IEEE Trans. Robot. 20(4), 754–761 (2009).

Khalil, W. & Ibrahim, O. General solution for the dynamic modeling of parallel robots. J. Intell. Robot. Syst. 49(1), 19–37 (2007).

Li, Y., Qiang, S., Zhuang, X. & Kaynak, O. Robust and adaptive backstepping control for nonlinear systems using RBF neural networks. IEEE Trans. Neural Netw. 15(3), 693–701 (2004).

Van Cuong, P. & Nan, W. Y. Adaptive trajectory tracking neural network control with robust compensator for robot manipulators. Neural Comput. Appl. 27(2), 525–536 (2016).

Li, Y., Yang, C., Yan, W., Cui, R. & Annamalai, A. Admittance-based adaptive cooperative control for multiple manipulators with output constraints. IEEE Trans. Neural Netw. Learn. Syst. 30(12), 3621–3632. https://doi.org/10.1109/TNNLS.2019.2897847 (2019).

Bellakehal, S., Andreff, N., Mezouar, Y. & Tadjine, M. Vision/force control of parallel robots. Mech. Mach. Theory 46(10), 1376–1395 (2011).

Shao, K., Tang, R., Xu, F., Wang, X. & Zheng, J. Adaptive sliding mode control for uncertain Euler-Lagrange systems with input saturation. J. Franklin Inst. 358(16), 8356–8376 (2021).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 62303095), Fundamental Research Funds for the Central Universities 2682025CX080, and the Natural Science Foundation of Sichuan (NO:2024NSFSC0141).

Author information

Contributions

All authors contributed to the study conception and design. Data simulation, manuscript revision and proofreading were performed by Minglei Zhu, Shijie Song, Yuyang Zhao, Jun Qi and Dawei Gong. The first draft of the manuscript was written by Minglei Zhu and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethical approval

The authors respect the Ethical Guidelines of the Journal.

Consent to participate

Informed consent was obtained from all individual participants included in the study.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Qi, J., Song, S., Zhao, Y. et al. NN-based visual servoing compensation control of a Gough–Stewart platform with uncertain load. Sci Rep 15, 15500 (2025). https://doi.org/10.1038/s41598-025-98798-z

DOI: https://doi.org/10.1038/s41598-025-98798-z