Abstract

Backscatter communication, which transmits information by passively reflecting radio frequency (RF) signals, has become a focal point of interest due to its potential to significantly enhance the energy efficiency of Wireless Power (WPMEC) networks and extend the operational lifespan of terminal devices. However, there is little research on the integration of user cooperation in WPMEC scenarios within volatile network environments. In this paper, we propose a dynamic task offloading algorithm for a Backscatter-assisted WPMEC system, which involves two (MDs) and a Hybrid Access Point (HAP) with user cooperation. We formulate the energy efficiency (EE) maximization problem as a stochastic programming problem, considering the randomness of task arrivals and time-varying wireless channels. By leveraging Dinkelbach’s method and stochastic network optimization technique, we transform the problem into a series of deterministic sub-problems for each time slot, and convert the non-convex sub-problem into convex ones. We propose a low-complex EE maximization algorithm to solve the convex problems efficiently. We conduct extensive simulations to validate the performance of our algorithm under various system parameter settings. Experimental results demonstrate that our algorithm not only outperforms the benchmark algorithms by approximately 23%, but also stabilize all queues within the MEC system.

Similar content being viewed by others

Introduction

In recent years, the integration of Internet of Things (IoT) technology with advanced communication systems has facilitated a wide range of mobile devices (MDs)—such as cameras, sensors, and wearable devices-to collect and exchange data seamlessly1. This proliferation has given rise to numerous application scenarios that leverage wireless devices, such as autonomous driving, face recognition, Virtual Reality (VR), and e-health2. However, these applications often demand substantial computational power and low latency, presenting challenges for MDs, which typically have limited computing capabilities and limited battery lifespan. Mobile Edge Computing (MEC) has risen as a effective solution to these challenges. By offloading computationally intensive tasks to MEC servers, MDs with constrained resources can markedly enhance their computational capacity and reduce latency.

However, wireless devices often have limited battery capacity, which cannot sustain prolonged operation. Consequently, the frequent replacement of MDs’ batteries presents a significant challenge. Wireless Power Transfer (WPT) has developed as an potential solution to this challenge3,4. WPT utilizes a Hybrid Access Point (HAP) to broadcast Radio Frequency (RF) energy that can be harvested by wireless devices. By integrating Energy Harvesting (EH) technology, these devices can convert captured RF signals into electrical energy5, which can then be utilized to process incoming computational tasks. The integration of wireless power and edge computing technologies in Wireless Powered Mobile Edge Computing (WPMEC) system significantly extends the battery lifespan of wireless devices and markedly enhances their computational capabilities.

In addition to battery limitations, the double-near-far effect can significantly impact network performance, with devices far from the HAP experiencing poor channel conditions6. To counteract this issue, a user cooperation (UC) mechanism has been implemented. In this mechanism, devices that are in close proximity to the HAP act as relays, forwarding signals for those located at a greater distance. This strategy not only mitigates the inefficiency of remote nodes offloading tasks directly to the Access Point (AP) but also optimizes the utilization of idle computational resources within the network, thereby enhancing the overall computational efficiency of the system. For example7 demonstrates how user cooperation can boost the computational efficiency of a WPMEC system under dynamic channel conditions and varying task arrivals. Additionally, other studies, such as8,9, have shown that user cooperation can effectively reduce the impact of the double-near-far effect. However, the aforementioned studies have not yet explored the potential of Backscatter technology to improve energy efficiency further.

Backscatter communication (BackCom) has attracted considerable attention in recent years for its novel approach to wireless communication10. In BackCom systems, the transmitter operates in full-duplex mode, functioning in a passive mode. It modulates and reflects the incident signal to the receiver, eliminating the need to generate a carrier frequency, while simultaneously harvesting energy to support its circuitry consumption11,12. This method differs from traditional active communication (AC), where the transmitter first harvests energy and then uses it to transmit data, following the harvest-then-transmit (HTT) protocol. While AC typically consumes more energy than BackCom, it generally offers a higher data transfer rate. The trade-offs between EH and data transfer are inherent in both BackCom and AC.

The motivation behind this study stems from the recognition that while integrating BackCom and AC paradigms has shown promise in enhancing the energy efficiency (EE) of WPMEC systems8,13, current research is predominantly confined to static, single-time slot scenarios. These studies often assume constant channel conditions and user data arrivals, which contrasts sharply with the dynamic, stochastic nature of real-world MEC networks where data arrivals and channel states are subject to continuous variation. This volatility complicates the prediction and management of network operations, thereby necessitating the development of robust algorithms capable of optimizing long-term energy utilization efficiency and maintaining system queue stability. Addressing these challenges is not only of theoretical interest but also of paramount practical importance, as it directly impacts the sustainability and reliability of MEC services in fluctuating operational environments. Our study, therefore, aims to bridge this gap by proposing an algorithmic framework that can adeptly navigate the complexities of volatile network conditions, ensuring optimal energy efficiency and queue stability in WPMEC networks.

In this paper, we tackle the long-term EE maximization for a Backscatter assisted WPMEC network with user cooperation by jointly optimizing the wireless powered time fraction, BackCom offloading time fraction, AC offloading time fraction, offloading data size and transfer power of MDs. The problem introduces considerable difficulties in two main aspects: (1) The randomness of task arrivals and fluctuating wireless channel states impose challenges to achieving optimal EE while ensuring the stability of queue system; (2) The integration of BackCom and AC brings a strong coupling of energy harvest time and task offloading. To address these challenges, we formulate a stochastic optimization problem and propose an efficient, low-complexity algorithm by leveraging techniques such as the Dinkelbach method and the Lyapunov optimization framework. We first transform the stochastic optimization problem into a series of deterministic problems for each time slot by leveraging the drift-plus-penalty technique. Then, We transform the non-convex subproblem at each time slot into a convex optimization problem by employing the variable substitution method for an efficient solution. We propose a low-complexity dynamic EE maximization algorithm that operates online without requiring prior system information.

Our primary contributions are listed as follows:

-

We introduce a novel dynamic task offloading model to optimize EE for a WPMEC network with integration of BackCom and AC communication under user cooperation, taking into account the randomness of task arrival and time-varying wireless channels. Our model effectively balances the trade-off between energy efficiency and system queues stability, while mitigating the double-near-far effect. Additionally, we explore the use of variable data weighting to motivate proximal users to relay data for distant users, enhancing overall network efficiency.

-

We have developed an online algorithm to maximize the EE metric of the WPMEC network. This is achieved by determining the allocation of time fractions, data offloading, transmission power, and backscatter reflection coefficients. To address the coupling of control decisions over time, we employ Dinkelbach’s method and the Lyapunov optimization framework. This approach decouples the stochastic fractional optimization problem into deterministic sub-problems for each time slot, transforming it into a convex problem that ensures an efficient and optimal solution. Additionally, by adjusting the control parameter V, our algorithm achieves a balance between queue length variations and optimization objectives. This capability allows for effective management of system queue length and stability.

-

We present a rigorous mathematical analysis to demonstrate the performance of our proposed algorithm, which achieves a balanced trade-off between energy efficiency and queue stability within the bounds of [O(1/V), O(V)]. . Extensive simulation experiments are conducted to verify the algorithm’s effectiveness and practical applicability. Our algorithm improves energy efficiency by 23% compared to existing benchmark algorithms, while maintaining stable system queues in dynamic environments.

The rest of this paper is organized as follows: “Related work” presents the details of model for Backscatter-assisted WPMEC system. In “System model”, we formulate a stochastic programming optimization problem aiming to maximize energy efficiency. “Problem formulation” details the application of the Dinkelbach’s method and Lyapunov optimization techniques to simplify the problem, including the algorithm design and theoretical performance analysis. “Algorithm design” presents an extensive simulation-based evaluation of the proposed algorithm’s performance. Finally, we summarize the paper and outline potential directions for future research in “Simulation results”.

Related work

Task offloading in WPMEC

The integration of WPT with MEC as been thoroughly examined in recent studies as an effective solution for enhancing the energy and computational capacities of wireless devices14,15,16,17. Ernest and Madhukumar18 introduced an energy efficiency maximization algorithm leveraging multi-agent deep reinforcement learning for a MEC-supported vehicular network, with jointly considering transmission and computation latencies outperforming existing strategies. Zhang et al.19 developed an algorithm that optimizes charging times and data offloading rates for WPMEC sensor system, aiming to enhance computational rates across various scenarios. Li et al.5 studied the system latency minimization problem for an Intelligent Reflecting Surfaces (IRS)-assisted multi-ID MEC system, and presented a hybrid multiple access scheme and optimization framework combined with Frequency Division Multiple Access (FDMA) and Non-Orthogonal Multiple Access (NOMA) technologies. Additionally, in20, the authors introduced a deep reinforcement learning-based approach for WPT-aided mobile edge computing to dynamically adapt to real-time changes, make swift decisions, and optimize both task offloading and energy resource allocation. Our previous research7 introduced an online control algorithm for dynamic task offloading in WPMEC networks under dynamic network conditions, designed to maximize long-term system energy efficiency. However, the aforementioned studies did not take into account the use of Backscatter technique to further enhance the energy utilization efficiency of wireless power transfer.

User cooperation in WPMEC

To address the double-near-far effect and optimize resource utilization, numerous researchers have employed user cooperation mechanisms7,9,21,22,23. He et al.22 presented a user cooperation scheme, aiming to maximize the network’s total throughput by jointly optimizing the local computing frequency, transmit power, task distribution, and time allocation. Wang et al.21 introduced a user collaborative mechanism for a NOMA assisted WPT-MEC network, designed an iterative-based optimal algorithm to minimize overall system energy consumption by leveraging Lagrangian method. Zhang et al.24 presented a hierarchical reinforcement learning-based algorithm for joint caching and resource allocation in a cooperative MCE system, aiming to optimize resource utilization and balance server loads through service caching and workload offloading decisions. Sun et al.25 proposed an iterative optimization algorithm for minimizing end-to-end latency in an MEC network supporting IoT applications, by jointly optimizing user association and resource allocation in a three-phase operation protocol. Su et al.9 explored optimizing the energy beamforming and resource allocation to enhance computation efficiency for WPMEC system with the integration of user cooperation and NOMA, taking into account non-linear energy harvesting model.

Backscatter communication in WPMEC

In recent years, the integration of BackCom and AC has emerged as an effective approach to enhance network energy efficiency, leveraging the unique characteristics of Backscatter technology to balance transmission rates and energy consumption, thereby significantly improving the system’s overall performance8,26,27,28,29. Lyu et al.30 proposed a hybrid HTT and BackCom framework for cognitive wireless powered IoT networks, optimizing time allocation and mode combination to maximize system throughput. Ye et al.27 introduced a bisection-based iterative algorithm for minimizing data offloading and computing delays in a WPMEC network with hybrid BackCom and AC for IoT networks. Shi et al.31 proposed a scheme for maximizing the weighted sum of computation bits in a Backscatter-assisted WPMEC network, considering a practical non-linear EH model with hybrid HTT and Backscatter communications. Wu and He26 proposed an efficient iterative algorithm for EE maximization in a multi-access WPMEC system with the help of a relay. Lin et al.32 presents an optimization framework for a BackCom NOMA system, aiming to maximize the sum uplink rate by optimizing reflection coefficients and establishing association policies between base stations and backscatter devices. Fu et al.33 addressed the energy efficiency fairness among IoT nodes in a UAV-enabled WPMEC network with integrated BackCom and AC, proposed an optimization framework that maximizes the worst-case IoT node’s energy efficiency by jointly optimizing UAV transmit power and trajectory, IoT nodes’ BackCom and AC parameters, and local computing configurations. However, the aforementioned studies primarily focus on optimizing a single time slot and do not account for the dynamic fluctuations inherent in MEC network environments.

The prior relevant works are summarized in Table 1, which evaluates the optimization objectives, decision variables, and solution methods of the models. Unlike existing research on hybrid communication modes (e.g., BackCom and active communication) and user cooperation in WPMEC networks8, our work differs in the following key aspects: (1) Dynamic Network Optimization: We address the challenge of maximizing time-averaged energy efficiency in dynamic network environments by accounting for the near-far effect through user cooperation and considering the dynamic variations in nodes’ battery levels. (2) Incentive-Driven Cooperation: Weighted incentives are introduced to motivate proximal nodes to assist distant nodes in offloading computational tasks, thereby enhancing cooperative efficiency and improving overall system performance. (3) Robust Algorithm Design: Leveraging the Lyapunov optimization framework, Dinkelbach’s method, and variable substitution for convex optimization, we develop an online offloading and resource allocation algorithm. This algorithm operates independently of statistical or future system information and ensures queue stability under dynamic conditions.

System model

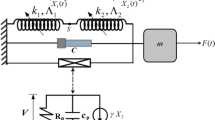

We consider a typical WPMEC network that comprises two MDs and a HAP, depicted in Fig. 1. The HAP directly connects with a MEC server to provide computation task offloading services and equipped with an RF energy transmitter to support wireless power to MDs. \(\text {MD}_{1}\), one of the MDs, is located at a significant distance from the HAP. While \(\text {MD}_{2}\), the second MD, is in a more advantageous position due to its proximity to the HAP and acts as an intermediary. Both \(\text {MD}_{1}\) and \(\text {MD}_{2}\) are equipped with both a BackCom circuit and an AC circuit, enabling them to select between backscatter and active communication modes. This basic model, which includes two mobile nodes, can be applied in scenarios such as Intelligent Transportation Systems (ITS), the Industrial Internet of Things (IIoT), and Smart Cities. By integrating backscatter communication with MEC, it enhances the data processing rate at the edge and improves overall system energy efficiency.

Time Division Multiple Access (TDMA) technique is utilized to avoid signal interference34, meaning that different MD nodes are allocated communication time in discrete time slots. The WPMEC system operates in a discrete time-slot mode over a time horizon period, with each time slot set to last T seconds. As depicted in Fig. 2, each time slot is further divided into five time fractions dedicated to energy harvesting and task processing for the different MDs. At the start of each time slot, both MDs initiate the capture of RF signals transmitted by the HAP for the purpose of energy harvesting. A partial offloading strategy is utilized for offloading computational tasks.

Due to poor channel conditions between \(\text {MD}_{1}\) and the HAP, compounded by the near-far effect, directly offloading tasks to HAP is not feasible for the \(\text {MD}_{1}\). Instead, \(\text {MD}_{1}\) offloads tasks to \(\text {MD}_{2}\), which forwards them to the HAP.

The task offloading process in the Backscatter-assisted WPMEC system proceeds through four distinct phases: (1) WPT Phase, where the HAP wirelessly charges both \(\text {MD}1\) and \(\text {MD}2\) using RF signals for a duration of \(\varepsilon _0^t\); (2) BackCom Phase, during intervals \(\varepsilon _{1}^{t}\) and \(\varepsilon _{2}^{t}\), \(\text {MD}_1\) offloads computational task data to \(\text {MD}_2\), which then offloads the data to the HAP; (3) AC Phase, where \(\text {MD}_2\) receives tasks from \(\text {MD}_1\) and offloads them to the HAP; and (4) Result Return Phase, where the computational results, typically small in size, are returned to \(\text {MD}_1\). In this phase, the time slot \(\varepsilon _{s}^{t}\) is negligible and can be approximated as \(\varepsilon {s}^{t} \approx 0\).

Note that the two-user basic model presented in this paper can be readily extended to scenarios involving multiple user nodes. This can be achieved by implementing a matching algorithm to pair distant nodes with nearby ones. By allocating orthogonal frequency bands to each pair, independent operation can be ensured, allowing our user cooperation model to be applied effectively to each pair. Here we focus on a single pair of users within a resource block not only reduces system complexity but also maintains practical relevance.

The key symbols and definitions used in this paper are listed in Table 2.

Energy harvesting model

The HAP, which has a dependable power source, is tasked with transmitting RF energy to the MDs located throughout its service area. In the initial phase, the HAP broadcasts RF signals to all MDs for a duration of \(\varepsilon _{0}^{t}\). Subsequently, \(\text {MD}_{1}\) offloads tasks to \(\text {MD}_{2}\) during the time fraction \(\varepsilon _{1}^{t}\) using BackCom, while \(\text {MD}_{2}\) can simultaneously harvest energy. In a similar fashion, \(\text {MD}_{2}\) offloads tasks to the HAP during the time fraction \(\varepsilon _{2}^{t}\) using BackCom, and \(\text {MD}_{1}\) harvests energy. Let \(E_{1,\textrm{wp}}^{t}\) and \(E_{2,\textrm{wp}}^{t}\) denote the harvested energy of \(\text {MD}_{1}\) and \(\text {MD}_{2}\) in the first phase, respectively. Thus, we have8

and

where \(0<\mu <1\) represents the energy conversion efficiency, \(P_{0}\) denotes the RF transmission power of the HAP. \(h_{1}^{t}\) and \(h_{2}^{t}\) represent the channel gains from the HAP to \(\text {MD}_{1}\) and \(\text {MD}_{2}\) respectively, which remain unchanged within the same time slot.

Dynamic queues model

To simulate the dynamic changes in user task data arrival and node battery levels, we have introduced the dynamic queuing model. Both \(\text {MD}_{1}\) and \(\text {MD}_{2}\) maintain a buffer queue for caching incoming task, which is processed on a first-in, first-out (FIFO) principle. Let \(Q_i\left( t\right) ,i\in \{1,2\}\) denote the task queue lengths of \(\text {MD}_{1}\) and \(\text {MD}_{2}\) at time slot t, respectively. The length of queue \(Q_i\left( t\right)\) evolves as follows:

where \(A_i\left( t\right)\) represents the task data arriving at the \(\text {MD}_{i}\) during time slot t. We assume that the task arrival is an arbitrary process over time, there is a upper-bound by \(A_{max}\). \(d_{i,\textrm{loc}}^{t}\), \(d_{i,\textrm{off}}^{t}\) represent the offloading task data and the local processing data at \(\text {MD}_{i}\), respectively.

Similarly, we assume that MD nodes are equipped with batteries and maintain a battery energy level queue. The energy captured through wireless charging is first stored in the batteries, and the battery power is consumed for local computing and task offloading. Concurrently, the battery energy level has an upper limit \(B_{\text {max}}\) and a lower limit \(B_{\text {min}}\). The \(B_{\text {min}}\) level is essential to sustain the basic operations of the MD IoT system34. Therefore, the battery energy level of the \(MD_i\) dynamically changes as:

where \(H_{i,loc}^{t}\) and \(H_{i,off}^{t}\) represent the energy consumption for local computing and task offloading, respectively. The total energy harvested by \(\text {MD}_{i}\) at slot t is given by \(E_i^{t} = E_{i,\textrm{wp}}^{t} + E_{i,\textrm{ba}}^{t}\), where \(E_{i,\textrm{wp}}^{t}\) is as previously defined, and \(E_{i,\textrm{ba}}^{t}\) denotes the energy harvested during BackCom data transmission.

Local computing model

Upon task arrival at a node, local processing is prioritized; task offloading is considered only when local processing is not feasible. Since each MD is equipped with a battery, the maximum duration for local computation is denoted by \(T\). Let \(f_{1}\) and \(f_{2}\) denote the local CPU frequencies of \(\text {MD}_{1}\) and \(\text {MD}_{2}\), \(\phi _{1}\) and \(\phi _{2}\) denote the CPU cycles required to process one bit of task at the \(\text {MD}_{1}\) and \(\text {MD}_{2}\), respectively. Furthermore, the maximum amount of local computation data at \(\text {MD}_{i}\) cannot exceed the current backlog of \(Q_i(t)\). Thus, the amount of locally computed task data can be expressed as

and the corresponding energy consumption is8

where \(\kappa _{i}\) denotes the computing energy efficiency parameter of \(MD_{i}\)8. The expression \(\kappa _{i} \left( f_{i}\right) ^{2}Q_i\left( t\right) \phi _{i}\) represents the power consumption used for processing all current task data in queue \(Q_i(t)\). Note that if the local processing capacity of \(\text {MD}_{i}\) exceeds the current backlog of \(Q_i(t)\), then the amount of task for local computing is \(Q_i(t)\) and the processing time is \(\frac{Q_{i}\left( t\right) \phi _{i}}{f_{i}}\), so we can derive the energy consumption for local computing as \(\kappa _{i}\left( f_{i}\right) ^{3}\frac{Q_{i}\left( t\right) \phi _{i}}{f_{i}}=\kappa _{i}\left( f_{i}\right) ^{2}Q_{i}\left( t\right) \phi _{i}\) ; otherwise, the amount of task is \({f_{i}T}/{\phi _{i}}\), with the corresponding energy consumption being \(\kappa _{i} \left( f_{i}\right) ^{3}T\) as in14.

Task offloading model

BackCom data transmission

During the time period \(\varepsilon _{1}^{t}\), \(\text {MD}_{1}\) offloads tasks to \(\text {MD}_{2}\) by BackCom. Let \(0\le \beta _{1}\le 1\) represent the reflection coefficient of \(\text {MD}_{1}\), where \(\beta _{1}\) represents the fraction of the received signal that is used as the carrier for data transfer, and the remaining fraction \((1-\beta _{1})\) is allocated to energy harvesting35. Let \(g_{12}^{t}\) represent the channel gain between \(\text {MD}_{1}\) and \(\text {MD}_{2}\). Based on Shannon’s theorem36, the amount of tasks offloaded from \(\text {MD}_{1}\) to \(\text {MD}_{2}\) satisfies8

where \(\zeta\) denotes the performance gap reflecting real modulation11, \(\sigma ^{2}\) is the noise power. The corresponding energy consumption for the circuit is

where \(P_{1}^{\textrm{ba}}\) is the circuit power consumption of \(\text {MD}_{1}\) by BackCom, which is a constant value depending on the circuit structure. By employing BackCom technology, data transmission and energy harvesting occur concurrently. Thus, the energy harvested by \(\text {MD}_{1}\) during \(\varepsilon _{1}^{t}\) is8

After receiving the tasks offloaded from \(\text {MD}_{1}\) , \(\text {MD}_{2}\) will relay a portion of them to the HAP. Note that \(\text {MD}_{2}\) will also offload its tasks to the HAP. At time \(\varepsilon _{2}^{t}\), the task data transmitted by \(\text {MD}_{2}\) through BackCom is constrained by

where \(\beta _{2}^t\) is the reflection coefficient of BackCom at \(\text {MD}_{2}\). \(h_{2}^{t}\) represents the channel gain from the HAP to the \(\text {MD}_{2}\) at time slot t, and \(g_{2a}^{t}\) denotes the channel gain from the \(\text {MD}_{2}\) to the HAP at time slot t. The corresponding energy consumption for the circuit is

where \(P_{2}^{\textrm{ba}}\) is the circuit power consumption of \(\text {MD}_{2}\) by BackCom. The energy harvested by BackCom during \(\varepsilon _{2}^{t}\) is

AC data transmission

After the BackCom offloading is completed, the AC mode offloading is initiated, which proceeds through an offloading process similar to that of BackCom. The AC mode can achieve a higher data transmission rate, but it is incapable of energy harvesting during the offloading phase. During the time fraction \(\varepsilon _{3}^{t}\), the upper limit of the amount of task from \(\text {MD}_{1}\) offloading to \(\text {MD}_{2}\) can be expressed as

where \(0\le P_{1}^{t}\le P_{\textrm{max}}\) represents the transmit power allocated to AC at \(\text {MD}_{1}\)8, \(\sigma ^{2}\) is the noise power and \(g_{12}\) represents the channel gain from \(\text {MD}_{1}\) and \(\text {MD}_{2}\). The corresponding AC offloading energy consumption is

where \(P_{1}^{ac}\) denotes the circuit power of \(\text {MD}_{1}\) through AC which is a constant value8.

The upper limit of the amount task offloading from \(\text {MD}_{2}\) to HAP by AC during \(\varepsilon _{4}^{t}\) is given by

where \(0\le P_{2}^{t}\le P_{\textrm{max}}\) denotes the transmit power of the \(\text {MD}_{2}\) through AC. Let \(P_{2}^{ac}\) denote the circuit power of \(\text {MD}_{2}\) through AC. The energy consumed for task offloading AC at \(\text {MD}_{2}\) in slot t is

Network stability and utility

In a dynamic WPMEC network system, maintaining stability is vital due to the stochastic arrival of tasks and varying channel conditions37. We first define system queue stability as follows.

Definition 1

(Queue stability): A discrete queue Q(t) is strong stable37 if

The total processed tasks \(D_{\textrm{tot}}(t)\) and the total energy consumed \(E_{\textrm{tot}}(t)\) at time slot t in a WPMEC system, can be expressed as follows, respectively

and

where \(\omega _{1}\) and \(\omega _{2}\) represent the task weights of \(\text {MD}_{1}\) and \(\text {MD}_{2}\) respectively. \(d_{i,\textrm{off}}^{t}=d_{i,\textrm{ba}}^{t}+d_{i,\textrm{ac}}^{t}\) represent the total offloading task data at \(\text {MD}_{i}\), and \(H_{i,\mathrm {{off}}}^{t}=H_{i,\mathrm {{ba}}}^{t}+H_{i,\mathrm {{ac}}}^{t}\) represent the total energy consumption of offloading task at \(\text {MD}_{i}\).

Definition 2

(Utility-energy efficiency ): The network utility \(\rho _{EE}\) is defined as the time-averaged amount of computation data achieved per unit of energy consumed38. It represents the ratio of long-term completed tasks to energy consumed, as follows:

Problem formulation

In this paper, we seek to design a dynamic offloading algorithm to maximize the \({ \rho _{EE}}\) subject to constraints of the system queue stability, by making decisions on time allocation \(\varvec{\varepsilon }^t=\left[ \varepsilon _{0}^t,\varepsilon _{1}^t,\varepsilon _{2}^t,\varepsilon _{3}^t,\varepsilon _{4}^t\right]\), power allocation \(\varvec{P}^t=\left[ P_{1}^t,P_{2}^t\right]\), reflection coefficients \(\varvec{\beta }^t=\left[ \beta _{1}^t,\beta _{2}^t\right]\) and the amount of offloaded tasks \(\varvec{d}^t=\left[ d_{1,\mathrm {{ba}}}^{t},d_{1,\mathrm {{ac}}}^{t},d_{2,\mathrm {{ba}}}^{t},d_{2,\mathrm {{ac}}}^{t}\right]\) at each time slot t. Simultaneously, our algorithm should ensure the stability of the system network when faced with randomly arriving task loads and dynamically changing wireless channel conditions. By denoting \(\varvec{\varepsilon }=\{\varvec{\varepsilon }^t\}_{t=1}^K\), \(\varvec{P}=\{\varvec{P}^t\}_{t=1}^K\), \(\varvec{\beta }=\{\varvec{\beta }^t\}_{t=1}^K\), and \(\varvec{d}=\{\varvec{d}^t\}_{t=1}^K\) the maximization of \(\rho _{EE}\) for a Backscatter-assisted WPMEC with user cooperation can be formulated as the following problem (P0):

where constraint (20b) ensures that the total offloading time in each slot does not exceed the available time. Constraint (20c) maintains the battery levels within the allowable range for both mobile devices. Constraints (20d) guarantee the stability of data queues. Constraint (20e) indicates that the amount of processed task in the current time slot must not exceed the current queue length. Constraint (20f) guarantees that tasks offloaded by \(\text {MD}{1}\) to \(\text {MD}{2}\) can be processed within the same slot. Constraints in (20g) denote the maximum offloading data depending on the channel condition. The problem is a fractional stochastic programming issue, which presents significant challenges due to the following aspects: (1) The stochastic nature of task arrivals, time-varying channel conditions, and dynamic battery level changes introduce uncertainty into the optimization challenge; (2) The temporal coupling in the time fraction and energy consumption exhibited by BackCom and AC poses a considerable challenge in determining the allocation of offloading time.

Due to the fractional nature of the objective function in problem P0, traditional optimization techniques aren’t directly applicable. Therefore, we leverage Dinkelbach’s method39, which is widely used for solving fractional programming problems in energy efficiency optimization, to transform P0 into a more tractable form. Let \(\rho _{EE}^{*}\) denote the optimal value of \(\rho _{EE}\), we obtain Theorem 1.

Theorem 1

The optimal \(\rho _{EE}^{*}\) is achieved if and only if

Proof

According to the Dinkelbach’s algorithm, the objective function of problem \(P1\) can be equivalently reformulated into the following parameterized subtractive form38:

where \(\textbf{v}=\{\varvec{\varepsilon },\varvec{P},\varvec{\beta },\varvec{d}\}\) and \(F(\textbf{v}, u)\) is a strictly monotonically non-increasing function with respect to \(u\). Here, \(u\) represents the energy efficiency value derived based on \(\textbf{v}\). The Dinkelbach algorithm alternately updates \(u\) and \(\textbf{v}\) through iterative optimization. During each iteration, the non-negative parameter \(u\) is updated until it converges to the optimal value \(\rho _{EE}^{*}\).

Let \(\textbf{v}^*\) denote the optimal strategy for the problem. At optimality, we have \(u^* =\rho _{EE}^{*}= \overline{D(\textbf{v}^*)} /\overline{E(\textbf{v}^*)}\), which satisfies the conditions of Theorem 1. Therefore, Theorem 1 is proven. \(\square\)

Similar to the Dinkelbach’s method in38, a new parameter u(t) is introduced as follows:

where \(u(0)=0\). Thus, problem P0 is transformed into:

where u(t) is a specified parameter that that needs to be updated at each time slot. It is important to note that u(t), as determined by (22), will converge to \(\rho _{EE}^{*}\) over time38. Consequently, this transformation is rjustified and yields the same optimal solution as P0. While problem P1 is more manageable than problem P0, it still faces several challenges The constraints (20c) and (20d), along with the equation (4), result in an interdependence of battery levels across various time slots throughout the period, which means that the current energy consumption affects future battery levels. Moreover, the unpredictability of the stochastic task arrivals and the fluctuating channel states add another complexity to the problem. The difficulty in accurately forecasting these elements leads to an inherent temporal coupling in the decision-making process.

Algorithm design

Lyapunov optimization formulation

To decouple the battery energy level across time slots at \(\text {MD}_{1}\) and \(\text {MD}_{2}\), we utilize two virtual queues for battery level: \(\widehat{B}_{i}(t)={B}_{i}(t)-{B}_{\textrm{max}},i\in \{1,2\}\). In order to optimize the task queue and the energy queue simultaneously, we define a combined queue vector \({\theta }\left( t\right) \triangleq \left[ Q_{i}\left( t\right) ,\widehat{B}_{i}(t)\right] ,i\in \{1,2\}\). Following Lyapunov optimization framework, we obtain the quadratic Lyapunov function and the Lyapunov drift37 as

and

Based the Lyapunov optimization framework37, one slot drift-plus-penalty of \({\theta }\left( t\right)\) can be expressed as

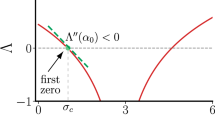

where control parameter V is positive that helps balance the trade-off between network EE and queue stability. A higher value of V leads the algorithm to prioritize network EE, which may result in an increased backlog in the task queue. An upper bound of \(\Delta _{V}\left( {\theta }\left( t\right) \right)\) are derived as Lemma 1.

Lemma 1

For any control decisions \(\left\{ \varvec{\varepsilon },\varvec{P},\varvec{\beta },\varvec{d}\right\}\) at each time slot t, the one slot Lyapunov drift-plus-penalty \(\Delta _{V}\left( {\theta }\left( t\right) \right)\) has the following upper bound:

where B is a positive constant that satisfies the following for all t:

Proof

By using the inequality \(\left( max[a-b,0]+c\right) ^{2}\le a^{2}+b^{2}+c^{2}+2a\left( c-b\right)\), \(\forall a,b,c\ge 0\) and combining the definition of task queues and battery energy queues Eqs. (3)–(4), we have

Combining the above inequalities (29) and (30) proves the result. \(\square\)

Based on the drift-plus-penalty method, we seek to minimize the upper bound given in the right-hand-size of (27) at each slot t. Notably the value of A(t), \(Q_{i}(t)\) and \(\widehat{B}_{i}(t)\) can be observed at the beginning of each time slot t. By eliminating the constant term in the RHS (right hand side) of (27), the problem P1 can be transformed into a one-time slot problem as

Due to the coupling between the parameters \(\varvec{\varepsilon }\), \(\varvec{P}\) and \(\varvec{\beta }\), the proposed problem P2.1 is still a non-convex problem. To decouple these parameters, we introduce auxiliary variables \(\varphi _{1}^t=P_{1}^{t}\varepsilon _{3}^{t}\), \(\varphi _{2}^t=P_{2}^{t}\varepsilon _{4}^{t}\), \(\varphi _{3}^t=\varepsilon _{1}^{t}\beta _{1}\), \(\varphi _{4}^t=\varepsilon _{2}^{t}\beta _{2}\). Then the problem P2.1 can be simplified as:

where \(C_{1}=Q_{1}(t)+\omega _{1}V\), \(C_{2}=Q_{2}(t)+\omega _{2}V\), \(C_{3}=Vu(t)-\widehat{B}_{1}(t)\), \(C_{4}=Vu(t)-\widehat{B}_{2}(t)\), \(C_{5}=\mu h_{1}^{t}P_{0}\widehat{B}_{1}(t)\), \(C_{6}=\mu h_{2}^{t}P_{0}\widehat{B}_{2}(t)\), \(C_{7}=C_{3}P_{1}^{\textrm{ac}}\), \(C_{8}=C_{4}P_{2}^{\textrm{ac}}\).

Lemma 2

P2.2 is a convex optimization problem that can be effectively addressed using convex optimization tools like CVX40.

Proof

In problem (P2.2), the objective function (32a) is linear with respect to all variables. Constraints (32b)–(32e) are all linear inequality constraints. What’s more, for constraint (32f), \(\log _{2}\left( 1+\dfrac{\zeta P_{0}h_{1}^{t}g_{12}^{t}\varphi _{4}^t}{\varepsilon _{1}^t\sigma ^{2}}\right)\) is the perspective of \(\log _{2}\left( 1+\dfrac{\zeta P_{0}h_{1}^{t}g_{12}^{t}\beta _{1}}{\sigma ^{2}}\right)\) which is concave with respect to \(\varphi _{4}\). Since the perspective operation preserves convexity41, \(\varepsilon _{1}^{t}\log _{2}\left( 1+\dfrac{\zeta P_{0}h_{1}^{t}g_{12}^{t}\varphi _{4}^t}{\varepsilon _{1}^t\sigma ^{2}}\right)\) is concave with \(\varphi _{4}\) and \(\varepsilon _{1}^{t}\) and (32f) is a convex constraint. For the same reason, (32g)–(32i) are all convex constraints. Thus, P2.2 is a convex optimization problem. \(\square\)

According to Lemma 2, at each time slot, we need to solve a convex problem P2.2 that involves a limited number of variables. This approach allows us to achieve optimal long-term average EE without requiring knowledge of future system information. Our proposed algorithm, the Dynamic Offloading for Backscatter-Assisted WPMEC Algorithm (DOBAM), is outlined in Algorithm 1.

Algorithm performance analysis

In each time slot, our algorithm solves a convex optimization problem (P2.2) involving 12 decision variables. The time complexity of solving this problem is \({O(n^{3.5}\log (1/\epsilon ))}\), where n denotes the number of decision variables (n = 12). Due to the small number of variables, the problem can be efficiently solved using interior-point methods or tools like CVX, ensuring rapid computation. The effectiveness of our dynamic control algorithms in achieving the optimal long-term time-average solution is demonstrated through the following theorems.

Theorem 2

The optimal long-average utility function obtained by P1 is limited by a lower bound that is independent with the time space. The following solutions can be achieved by the algorithm: (1) \(u(t)\ge u^{*}-B/(Ve^\textrm{ave}),\)

(2) All queues \(Q_i(t)\), \(\widehat{B}_{m}(t)\), \(\widehat{B}_{h}(t)\) are mean rate stable, and thus the constraints are satisfied.

Proof

For any \(\varepsilon > 0\), by integrating these results to (27) and taking the limit as \(\varepsilon \rightarrow 0\), we observe that the outcome is independent of the queue status \({\theta }(t)\). Thus, we have

Notice that u(t) is a constant and independent of the current queue status \({\theta }(t)\). By applying the iterated expectation and summing the above inequality over time \(t\in \{0,1,...,K-1\}\), we obtain:

Dividing both sides of (34) by VK and taking the limit as \(K\rightarrow \infty\), and using Jensen’s inequality, we get:

Given that \(\underset{K\rightarrow \infty }{\textrm{lim} }\frac{1}{K}\overset{K-1}{\underset{t=0}{{\displaystyle \sum }}}\mathbb {E}\left\{ u(t)\right\} \le u\), , we conclude:

Thus, we derive that:

\(\square\)

Theorem 3

Let \(E_{tot}^{upper}\) be the upper bound of \(e_{1}(t)\), the time-average sum rate of queue length is bounded by

Proof

By taking iterated expectation and using telescoping sums over \(t\in \{0,1,...,K-1\}\), we have

Dividing both sides of (38) by \(K\varepsilon\), taking \(K\rightarrow \infty\), rearranging terms yield

Rearranging the terms, we obtain:

Thus, the time-average sum rate of the queue lengths is bounded as required. \(\square\)

Theorems 2 and 3 provide a comprehensive mathematical performance analysis of our proposed algorithm. The control parameter V, which serves as a weight balancing the queue stability and optimization objective, is used to adjust the trade-off between system queue stability and the optimization goal. The above theorems show that the time-average EE \(\eta _{EE}\) scales as O(1/V), while the queue length increases at a rate of O(V). As V increases, the algorithm tends to prioritize optimizing the system’s EE, while decreasing V focuses more on stabilizing the queue length. Thus, we can tune V to achieve a trade-off characterized by [O(1/V), O(V)] between \(\eta _{EE}\) network EE and task queue length. According to Little’s Law37, latency is proportional to the time-average queue length. This indicates that our proposed algorithm can effectively balance efficiency and latency, which is crucial in many real-world applications where both performance and response time are important.

Simulation results

In this section, extensive numerical simulation are conducted to verify the performance of our proposed algorithm. Each simulation experiment lasts a total of 5000 time slots, with each time slot lasting for 1 s. We can also adjust the duration of time slots to accommodate various scenario requirements. The experiments were conducted on a high-performance computing platform, featuring a 2.10 GHz Intel(R) Xeon(R) Silver 4116 CPU, with simulations implemented in the Python language.

To simulate the impact of physical distance on the channel, we employed the free-space path loss model to simulate signal propagation, where the average channel gain \(\overline{h}\) is calculated by the following formula42:

where \(A_{d}\) is the antenna gain, \(f_{c}\) represents the carrier frequency, \(d_{e}\) denotes the path loss exponent, and \(d_{i}\) represents the distance between nodes (in meters).

The dynamic channel gains for WPT and task offloading, following the Rayleigh fading model, are represented by the vector \(\mathbf {h^{t}}=\left[ a_{1}^{t}h_{1}^{t},a_{2}^{t}h_{2}^{t},a_{3}^{t}g_{12}^{t},a_{4}^{t}g_{2a}^{t}\right]\). In the model, the channel fading factors \(\left[ a_{1}^{t}, a_{2}^{t}, a_{3}^{t}, a_{4}^{t}\right]\) all follow an exponential distribution with an expectation of 1, simulating the natural variability of wireless channels. We assume that the vector of fading factors remains constant at \(\left[ 1.0,1.0,1.0,1.0\right]\) within a time slot.

To model dynamically varying loads, we employed the most typical exponential distribution to represent task arrivals, with arrival rates \(\lambda _1\) and \(\lambda _2\) being 1.2 and 1.5, respectively.

The main source code is available online at https://github.com/Toxic-Gulu/bac-with-ac. Other simulation parameters are detailed in Table 38.

To comprehensively evaluate the performance of our algorithm, we conducted comparative simulations with three representative benchmarks as follows:

-

(1)

UC with the AC scheme (UAC)7: In the WPMEC system, task offloading is facilitated through user collaboration, where \(\text {MD}_{1}\) and \(\text {MD}_{2}\) communicate exclusively via the AC mode, without the integration of Backscatter modules. This approach only leverages the AC communication model for data transmission.

-

(2)

UC with the BackCom scheme (UBC)8: The WPMEC network employs a collaborative approach among users, with inter-user communication solely relying on the Backscatter technique.

Specifically, the HAP continuously broadcasts RF energy to the users throughout each time slot, resulting in the highest energy expenditure for the HAP due to its non-stop energy emission.

-

(3)

Without UC With Integrated BackCom and AC scheme (BC+AC)43: The WPMEC network forgoes user collaboration, with \(\text {MD}_{1}\) and \(\text {MD}_{2}\) establishing direct communication links with the HAP. In this configuration, \(\text {MD}_{1}\) and \(\text {MD}_{2}\) are autonomous, eschewing collaborative interactions. Furthermore, each device is integrated with a Backscatter module, enabling the utilization of both BackCom and AC for their communication needs.

-

(4)

Random offloading scheme (ROS): The WPMEC network employs a typical task offloading approach where \(\text {MD}_{1}\) and \(\text {MD}_{2}\) independently offload a random subset of their tasks to the HAP. There is no collaboration between the two nodes in terms of communication. Importantly, both nodes are not integrated with Backscatter modules, and they exclusively utilize AC communication.

To ensure a fair comparison, all baseline algorithms are implemented utilizing the Lyapunov optimization framework, which is designed to maintain system stability.

Figure 3 demonstrates the performance comparison of EE under different schemes, with parameters set as \(V=40\), \(\zeta =-16\) dB, and \(W=1.2\) MHz. We find that our proposed algorithm performs the best in terms of EE, followed by the UBC, then the UAC, the BC+AC ranking fourth, and the ROS method performing the worst. Compared to the other four schemes, our proposed algorithm has improved the EE by 23%, 38%, 48% and 64%, respectively. This superior performance highlights the advantage of integrating both BackCom and AC. The scheme of UBC, suffers from limited transmission capability when \(\zeta\) is small, leading to poor performance. Although the UAC can transmit sufficient data, its high circuit power consumption results in it ranking third. Due to the poor channel conditions of remote users, the BC + AC, as well as the ROS method, also have lower energy efficiency. This indicates that even with the integration of BackCom and AC, poor channel conditions between \(\text {MD}_{1}\) and HAP may still limit energy harvesting and task offloading. This further emphasizes the importance of user cooperation in enhancing the performance of remote users.

Figure 4 illustrates the impact of controlling the performance gap \(\zeta\) on EE. The EE for the other four schemes improve with an increase in \(\zeta\), contrasting with the UAC and ROS scheme’s stable EE. Our proposed scheme consistently delivers the best system performance and the ROS method performing the worst. With \(\zeta>\) − 17 dB, the UBC scheme outperforms the UAC scheme in EE, prompting the system to favor the BackCom mode. Upon reaching a higher threshold, the UAC scheme’s EE matches that of our proposed scheme, leading to its predominance use by users. Conversely, when \(\zeta<\) − 21 dB, our scheme defaults to the AC mode. The BC + AC and the ROS scheme experience the least performance gains due to suboptimal channel conditions. Overall, the proposed scheme outshines others in flexibility and adaptability, adeptly tuning to varying \(\zeta\) levels for optimal EE.

Figure 5 demonstrates the impact of network bandwidth on the performance under different schemes, with the time slot set to 2000. As shown in Fig.5, within the bandwidth range of [1.00, 1.45] \(\times\) \(10^6\) Hz, the EE of all schemes increases with the expansion of bandwidth. This is attributed to the increased bandwidth allows more tasks to be transmitted to the edge server for processing using edge computing resources. Our proposed scheme consistently outperforms other schemes across the different bandwidth scenarios, especially as the bandwidth approaches 1.45MHz. At this point, the advantage in energy efficiency becomes markedly evident, substantially outperforming other baseline algorithms. This not only showcases the high adaptability of our scheme in managing bandwidth but also underscores its effectiveness in utilizing network resources in high-bandwidth scenarios, thereby maximizing energy efficiency.

In Fig. 6, we evaluate the system performance of various algorithms under different weight configurations, where the weight \(\omega\) varies in the range [0, 3], with \(\omega _{1}=\omega /(\omega +1)\) and \(\omega _{2}=1/(\omega +1)\). As \(\omega\) increases, the EE of all schemes generally declines because a higher \(\omega\) implies that tasks offloading from \(\text {MD}_{1}\) receive more resources at the expense of overall EE. Our algorithm consistently performs the best across all weight settings and the ROS algorithm exhibits the poorest performance among the evaluated approaches. Specifically, at \(\omega =3\), our algorithm achieves an EE improvement of 23%, 38%, 46% and 55% over other algorithms. This demonstrates that our algorithm can more effectively leverage the advantages of the UC scheme, combined with BackCom and AC. Additionally, Fig. 6 indicates that excessively high weights for edge devices can rapidly degrade network performance, underscoring the importance of proper weight distribution. In Fig. 6, we observe an inversion of the black curve, which occurs due to the system’s inclination to allocate more resources to support the task offloading of \(\text {MD}_{1}\) as the weight system increases. Schemes without user cooperation, constrained by the absence of collaborative efforts and poor channel conditions, experience a sharp decline. Consequently, at a weight coefficient of 0.6, a crossover of the curves is observed, where the EE represented by the black curve falls below that of any other scheme with user cooperation.

Figure 7 illustrates the impact of the control parameter \(V\) on EE and average queue length, with the following parameter settings: \(\zeta = - 18\) dB, \(W = 1.2\) MHz, a distance of 120 m between \(\text {MD}_1\) and \(\text {MD}_2\), and a task arrival rate at \(\text {MD}_2\) of \(\lambda _2 = 1.5\) Mbps. As \(V\) increases, EE improves, but the system queue length also increases. The parameter \(V\) acts as a weight in the offset-plus-penalty expression, which balances the optimization objectives. When \(V\) increases, the algorithm places more emphasis on optimizing EE, while reducing its focus on queue stability, resulting in an increase in the queue length. When \(V\) reaches a certain threshold, the gain in EE becomes saturated, and further increases in \(V\) no longer significantly affect either the energy efficiency or the queue length. The experimental results shown in Fig. 7 are consistent with the theoretical analysis presented earlier. In practical applications, according to Little’s Law, the queue length is directly proportional to user waiting time. Thus, the value of \(V\) can be adjusted based on the required waiting time to ensure that the system optimizes its objective function while satisfying Service Level Agreements (SLA) for time constraints. Physically, decreasing \(V\) accelerates task processing rates, thereby reducing the queue length more rapidly and ensuring system stability. On the other hand, increasing \(V\) shifts the algorithm’s focus towards maximizing energy efficiency at the expense of queue stability, which leads to an increase in queue length.

Figure 8 illustrates the optimal time allocation of \(\varvec{\varepsilon }\) versus the performance gap \(\zeta\). Initially, when \(\zeta\) is low, despite the lower energy consumption of the BackCom mode, achieving a larger number of computational bits at the same energy consumption is challenging. Consequently, MDs prefer the AC mode and allocate more time \(\varepsilon ^*_0\) for energy collection to meet data processing needs. As \(\zeta\) increases, \(\varepsilon ^*_0\) gradually decreases, and when \(\zeta\) exceeds − 20 dB, \(\varepsilon ^*_0\approx {0}\). This is because, as \(\zeta\) increases, the BackCom mode can not only achieve more computational bits at the same energy consumption but also collect sufficient energy to support the circuit consumption under the AC mode, significantly improving energy efficiency. Therefore, as \(\zeta\) increases, users are more inclined to choose the BackCom mode, using its reflection capability to perform task transmission and energy collection to meet the circuit consumption44. This scheme ensures that under different \(\zeta\) conditions, the system can operate at the highest efficiency, optimizing energy usage.

Figure 9 illustrates that the EE under varying distances between \(\text {MD}_{1}\) and \(\text {MD}_{2}\), with \(V=40\). The distance between the remote MD and the helper device ranges from 80 to 180 m. It is observed that EE decreases as the distance increases. This is because an increase in distance leads to a reduction in channel gain, necessitating more time and higher power to transmit data to maintain a shorter data queue, thereby increasing energy consumption. This indicates that in practical deployment, the distance between edge node devices and helper devices should be kept within a reasonable range to avoid a rapid decline in network performance. This assessment not only quantifies the specific impact of distance on energy efficiency but also emphasizes the importance of considering the distance factor in the design of efficient networks.

In Fig. 10, we evaluate the EE and the average task queue length as the task arrival rate at \(\text {MD}_{2}\) varies. The distance between \(\text {MD}_{1}\) and \(\text {MD}_{2}\) is set to 120 m, and control parameter V is set to 40. As the computation task data arrival rate at \(\text {MD}_{2}\) increases, the EE decreases. The rise in data rate causes the local data queue to expand, necessitating a higher data transmission rate from the system. This not only entails processing a larger volume of data but also results in greater energy expenditure to maintain shorter data queues, thereby increasing overall energy consumption. Despite this trade-off in energy efficiency, our algorithm optimizes energy usage, ensuring that the system maintains efficient data processing and rapid response capabilities even as data rates increase.

In practice, our algorithm can be deployed on edge servers with substantial computing power, enabling efficient execution and effective problem solving. The algorithm functions as follows: at the beginning of each time slot, all MDs transmit their network status information to the edge server, which performs centralized computation and returns the corresponding decisions to the respective nodes. Since the amount of data transmitted is minimal, the associated time overhead is negligible.

Our experimental results validate the superior energy efficiency (EE) of our proposed algorithm across various network settings and parameters. By integrating BackCom with AC, our algorithm achieves EE improvements of 23%, 38%, 48%, and 64% over the UBC, UAC, BC + AC, and ROS methods, respectively. This enhancement underscores the critical role of user collaboration in enhancing the performance of remote users and highlights the importance of considering distance factors in network design. With the increase in bandwidth, particularly approaching 1.45 MHz, the EE of all schemes significantly improves, indicating that our algorithm can adapt to high-bandwidth environments and efficiently utilize resources. The increase in the control parameter \(V\) helps to enhance EE, but there is a saturation point, indicating that parameter adjustment requires precise control. An increase in the performance gap \(\zeta\) makes users more inclined to choose the BackCom mode, further boosting EE.

In summary, our algorithm can dynamically adjust parameters to accommodate diverse network conditions, striking a balance between EE and data processing requirements. It exhibits remarkable adaptability and robustness with respect to bandwidth, control parameter \(V\), and performance gap \(\zeta\) ensuring optimal energy efficiency in a wide range of scenarios.

Conclusions

The integration of BackCom and AC is pivotal for enhancing the performance of WPMEC systems across diverse user scenarios, as it can efficiently harness the wireless power signal. In this research, we have investigated a user-collaborative WPMEC system aided by the Backscatter technique, with the objective of maximizing EE within a fluctuating network environment. To tackle the challenges posed by dynamic task loads and time-varying channel conditions, we have proposed an online control algorithm. This algorithm utilizes fractional programming, Lyapunov optimization theory, and convex optimization techniques, which simplifies the problem into a convex optimization form. It also decouples the stochastic optimization problem into deterministic optimization sub-problems for each time slot, solving them efficiently. Our simulation results demonstrate that our scheme significantly enhances EE while ensuring system stability, outperforming existing baseline algorithms.

For future research, we plan to integrate deep reinforcement learning (DRL) and predictive technologies into the stochastic network queue decision-making process. Specifically, we aim to leverage stochastic network optimization techniques to ensure system stability and meet long-term average constraints. DRL algorithms, such as Proximal Policy Optimization (PPO) and Soft Actor-Critic (SAC), will be applied within actor-critic frameworks to optimize decision-making in dynamic network environments. Additionally, we intend to utilize Long Short-Term Memory (LSTM) networks and attention mechanisms to predict task flows and network states in large-scale IoT networks, thereby enhancing the efficiency of system decision-making. This integration is expected to improve the intelligence and adaptability of our approach. Furthermore, we will investigate dynamic task offloading strategies within larger-scale IoT user collaboration models in future work, with the goal of further improving system performance under real-world conditions.

Data availability

Data are contained within the article.

References

Zhao, R., Zhu, F., Tang, M. & He, L. Profit maximization in cache-aided intelligent computing networks. Phys. Commun. 58, 102065 (2023).

Wang, X. et al. Wireless powered mobile edge computing networks: A survey. ACM Comput. Surv. 55(13s), 1–37 (2023).

Wang, F., Xu, J. & Cui, S. Optimal energy allocation and task offloading policy for wireless powered mobile edge computing systems. IEEE Trans. Wirel. Commun. 19(4), 2443–2459 (2020).

Wei, Z., Zhang, B., Lin, S., & Wang, C. A self-oscillation WPT system with high misalignment tolerance. IEEE Trans. Power Electron.

Li, G. et al. Latency minimization for IRS-aided noma MEC systems with WPT-enabled IOT devices. IEEE Internet Things J. 10(14), 12156–12168 (2023).

Ju, H. & Zhang, R. Throughput maximization in wireless powered communication networks. IEEE Trans. Wirel. Commun. 13(1), 418–428 (2013).

H. He, C. Zhou, F. Huang, H. Shen, & S. Li, Energy-efficient task offloading in wireless-powered mec: A dynamic and cooperative approach. Mathematics. 12(15). https://doi.org/10.3390/math12152326.

He, Y., Wu, X., He, Z. & Guizani, M. Energy efficiency maximization of backscatter-assisted wireless-powered MEC with user cooperation. IEEE Trans. Mob. Comput. 23(2), 1878–1887 (2023).

Su, B., Ni, Q., Yu, W. & Pervaiz, H. Optimizing computation efficiency for noma-assisted mobile edge computing with user cooperation. IEEE Trans. Green Commun. Netw. 5(2), 858–867 (2021).

Hoang, D. T., Niyato, D., Wang, P., Kim, D. I. & Han, Z. Ambient backscatter: A new approach to improve network performance for RF-powered cognitive radio networks. IEEE Trans. Commun. 65(9), 3659–3674 (2017).

Shi, L., Ye, Y., Chu, X., Sun, S. & Lu, G. Energy-efficient resource allocation for backscatter-assisted wireless powered MEC. IEEE Trans. Veh. Technol. 72(7), 9591–9596 (2023).

Ye, Y., Shi, L., Chu, X. & Lu, G. Throughput fairness guarantee in wireless powered backscatter communications with HTT. IEEE Wirel. Commun. Lett. 10(3), 449–453 (2020).

Ye, Y., Shi, L., Chu, X., Hu, R. Q. & Lu, G. Resource allocation in backscatter-assisted wireless powered MEC networks with limited MEC computation capacity. IEEE Trans. Wirel. Commun. 21(12), 10678–10694 (2022).

Ji, L. & Guo, S. Energy-efficient cooperative resource allocation in wireless powered mobile edge computing. IEEE Internet Things J. 6(3), 4744–4754 (2018).

Sabella, D., Vaillant, A., Kuure, P., Rauschenbach, U. & Giust, F. Mobile-edge computing architecture: The role of MEC in the internet of things. IEEE Consum. Electron. Mag. 5(4), 84–91 (2016).

Huang, M., Liu, W., Wang, T., Liu, A. & Zhang, S. A cloud-MEC collaborative task offloading scheme with service orchestration. IEEE Internet Things J. 7(7), 5792–5805 (2019).

Sun, G., Wang, Z., Su, H., Yu, H., Lei, B., & Guizani, M. Profit maximization of independent task offloading in MEC-enabled 5g internet of vehicles. IEEE Trans. Intell. Transp. Syst. 1–13. https://doi.org/10.1109/TITS.2024.3416300 (2024).

Ernest, T. Z. H. & Madhukumar, A. Computation offloading in MEC-enabled IOV networks: Average energy efficiency analysis and learning-based maximization. IEEE Trans. Mob. Comput.

Zhang, S., Bao, S., Chi, K., Yu, K. & Mumtaz, S. Drl-based computation rate maximization for wireless powered multi-ap edge computing. IEEE Trans. Commun.

Wu, X., Yan, X., Yuan, S., & Li, C. Deep reinforcement learning-based adaptive offloading algorithm for wireless power transfer-aided mobile edge computing. In IEEE Wireless Communications and Networking Conference (WCNC), vol. 2024, 1–6 (IEEE, 2024).

R. Wang, J. Chen, B. He, L. Lv, Y. Zhou, & L. Yang, Energy consumption minimization for wireless powered NOMA-MEC with user cooperation. In 2021 13th International Conference on Wireless Communications and Signal Processing (WCSP) 1–5 (IEEE, 2021).

He, B., Bi, S., Xing, H., & Lin, X. Collaborative computation offloading in wireless powered mobile-edge computing systems. In IEEE Globecom Workshops (GC Wkshps),vol. 2019, 1–7 (IEEE, 2019).

Iwaki, A., Sanada, K., Hatano, H. & Mori, K. Performance analysis of wireless powered communication networks based on harvest-then-access scheduling considering double-near far problem, IEICE Technical Report. IEICE Tech. Rep. 122(145), 42–47 (2022).

Zhang, W., Zhang, G. & Mao, S. Deep-reinforcement-learning-based joint caching and resources allocation for cooperative MEC. IEEE Internet Things J. 11(7), 12203–12215. https://doi.org/10.1109/JIOT.2023.3333826 (2024).

Sun, Y., Xu, J. & Cui, S. User association and resource allocation for MEC-enabled IOT networks. IEEE Trans. Wirel. Commun. 21(10), 8051–8062 (2022).

Wu, T. & He, H. An efficient energy efficiency maximization algorithm for backscatter-assisted wp-mec network with relay. In Proceedings of the 2024 16th International Conference on Machine Learning and Computing, ICMLC ’24, Association for Computing Machinery, New York, NY, USA, 720–727. https://doi.org/10.1145/3651671.3651712 (2024).

Ye, Y., Shi, L., Chu, X., Li, D. & Lu, G. Delay minimization in wireless powered mobile edge computing with hybrid backcom and at. IEEE Wirel. Commun. Lett. 10(7), 1532–1536 (2021).

Correia, R., Carvalho, N. B. & Kawasaki, S. Continuously power delivering for passive backscatter wireless sensor networks. IEEE Trans. Microw. Theory Tech. 64(11), 3723–3731 (2016).

Han, K. & Huang, K. Wirelessly powered backscatter communication networks: Modeling, coverage, and capacity. IEEE Trans. Wirel. Commun. 16(4), 2548–2561 (2017).

Lyu, B., Guo, H., Yang, Z. & Gui, G. Throughput maximization for hybrid backscatter assisted cognitive wireless powered radio networks. IEEE Internet Things J. 5(3), 2015–2024 (2018).

Shi, L., Ye, Y., Chu, X. & Lu, G. Computation bits maximization in a backscatter assisted wirelessly powered MEC network. IEEE Commun. Lett. 25(2), 528–532 (2020).

Lin, D., Wang, K., Wang, T. & Ding, Z. Uplink data rate maximization in multi-cell backcom noma systems. IEEE Open J. Commun. Soc. 5, 526–539. https://doi.org/10.1109/OJCOMS.2023.3349277 (2024).

Fu, Z., Shi, L., Ye, Y., Zhang, Y., & Zheng, G. Computation EE fairness for a UAV-enabled wireless powered MEC network with hybrid passive and active transmissions. IEEE Internet Things J..

Bi, S. & Zhang, Y. J. Computation rate maximization for wireless powered mobile-edge computing with binary computation offloading. IEEE Trans. Wirel. Commun. 17(6), 4177–4190 (2018).

Xu, Y., Gu, B., Hu, R. Q., Li, D. & Zhang, H. Joint computation offloading and radio resource allocation in MEC-based wireless-powered backscatter communication networks. IEEE Trans. Veh. Technol. 70(6), 6200–6205 (2021).

Verdu, S. Fifty years of Shannon theory. IEEE Trans. Inf. Theory 44(6), 2057–2078 (1998).

Neely, M. Stochastic Network Optimization with Application to Communication and Queueing Systems (Springer Nature, 2022).

Sun, M. et al. Resource management for computation offloading in d2d-aided wireless powered mobile-edge computing networks. IEEE Internet Things J. 8(10), 8005–8020 (2020).

Dinkelbach, W. On nonlinear fractional programming. Manag. Sci. 13(7), 492–498 (1967).

Diamond, S. & Boyd, S. Cvxpy: A python-embedded modeling language for convex optimization. J. Mach. Learn. Res. 17(83), 1–5 (2016).

Zawawi, Z. B., Huang, Y. & Clerckx, B. Multiuser wirelessly powered backscatter communications: Nonlinearity, waveform design, and sinr-energy tradeoff. IEEE Trans. Wirel. Commun. 18(1), 241–253 (2018).

Wu, T., He, H., Shen, H. & Tian, H. Energy-efficiency maximization for relay-aided wireless-powered mobile edge computing. IEEE Internet Things J. 11(10), 18534–18548. https://doi.org/10.1109/JIOT.2024.3366982 (2024).

Shi, L., Ye, Y., Chu, X. & Lu, G. Computation bits maximization in a backscatter assisted wirelessly powered MEC network. IEEE Commun. Lett. 25(2), 528–532. https://doi.org/10.1109/LCOMM.2020.3027294 (2021).

Xu, Y. & Gui, G. Optimal resource allocation for wireless powered multi-carrier backscatter communication networks. IEEE Wirel. Commun. Lett. 9(8), 1191–1195. https://doi.org/10.1109/LWC.2020.2985010 (2020).

Author information

Authors and Affiliations

Contributions

Methodology, H.H.; Validation, Z.C. and Y.Y.; Formal analysis, H.H.; Investigation, H.H. and H.F.; Resources, H.H.; Data curation, H.H. and S.H.; Writing—original draft, H.H. and H.F.; Writing—review and editing, H.H. and X.J.; Supervision, L.S. and S.H.; All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

He, H., Zhou, C., Huang, F. et al. User-cooperative dynamic resource allocation for backscatter-aided wireless-powered MEC network. Sci Rep 15, 16822 (2025). https://doi.org/10.1038/s41598-025-99481-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-99481-z