Abstract

It remains challenging to diagnose biliary atresia (BA) in current clinical practice. This study aimed to develop a multimodal model with uncertainty estimation that integrates sonographic images and clinical information to help diagnose BA. Multiple models were trained on 384 infants and validated externally on 156 infants. The model fusing sonographic images and clinical information yielded the best performance, with an area under the curve (AUC) of 0.941 (95% CI: 0.891–0.972) on the external dataset. Moreover, the model based on sonographic videos still yielded an AUC of 0.930 (95% CI: 0.876–0.966). By excluding 39 cases with high uncertainty (normalized entropy >0.95), the accuracy of the model improved from 84.6% to 91.5%. In addition, six radiologists with different levels of experience showed improved diagnostic performance (mean AUC increase: 0.066) when aided by the model. This fusion model with uncertainty estimation could potentially help radiologists identify BA more accurately and efficiently in real clinical practice.

Introduction

Biliary atresia (BA) is a severe infantile hepatobiliary disease characterized by obstruction or absence of the bile ducts, leading to cholestasis in the liver and subsequent liver damage1,2. The Kasai procedure is the preferred treatment for BA, and its success depends on the age of the infant at the time of surgery and the extent of liver damage1,2. Treatment before 60 days of life is considered to offer the best chance of delaying the need for liver transplantation3. Therefore, early diagnosis is crucial for timely surgery and better outcomes.

Previously, we developed a deep learning model based on ultrasound (US) gallbladder images that proved useful in helping less-experienced radiologists diagnose BA4,5. However, the model's reliance on single-modality data and its lack of uncertainty estimation limited its clinical utility, indicating a need for optimization. In addition to gallbladder imaging, other US features, such as triangular cord thickness6,7,8, as well as shear wave elastography (SWE)9,10,11, have also proven useful in diagnosing BA. Moreover, serum parameters such as gamma-glutamyl transferase (GGT)9,12,13 and direct bilirubin (DB)14,15 are elevated in infants with BA. These additional indicators suggest that there remains significant potential to improve the deep learning model for diagnosing BA. However, they have not yet been incorporated into automated diagnosis systems. When the diagnosis provided by the gallbladder artificial intelligence (AI) model is inconsistent with other diagnostic features, radiologists must spend additional time and effort reconciling the discrepancy. This inconsistency may complicate the diagnostic process and increase radiologists' workload. Thus, a deep learning model that combines all these useful US features and clinical metadata might perform better in the diagnosis of BA and generalize well in clinical settings.

In addition to providing a clinical diagnosis, a model should also communicate the risk associated with its prediction. Ideally, it should quantify its predictive uncertainty, particularly when the input data do not support a confident decision: it should output high uncertainty when there is a substantial risk of misclassification, and low uncertainty when a correct classification is likely for a given patient. Radiologists can then be more cautious in their decision-making when the model reports high predictive uncertainty. Several studies have explored uncertainty estimation for natural image tasks16,17 and medical image tasks18,19,20, but studies applying uncertainty estimation to US images are lacking.

Therefore, this study aims to develop a multimodal deep learning model, constructed from different fusion combinations of conventional US images of the gallbladder and triangular cord, SWE images of the liver parenchyma and clinical information, to identify BA more accurately in jaundiced infants, and to provide uncertainty estimates for the model's predictions to better assist radiologists in clinical decision-making.

Results

Characteristics of infants

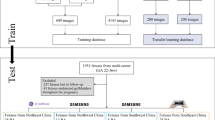

A total of 628 infants with serum hyperbilirubinemia were enrolled in this multicenter study. Infants from Hospital 1 between January 2018 and September 2022 were randomly divided into a training cohort (n = 384) and an internal testing cohort (n = 88) at a ratio of approximately 80:20. Infants from the other 5 hospitals between January 2022 and December 2023 (n = 156) formed the external testing cohort (Fig. 1). The clinical characteristics of the cohorts are listed in Table 1, and the number of infants in each cohort with the corresponding US images and videos is detailed in Supplementary Table 1. Notably, infants in the external testing cohort were significantly younger than those in the training and internal testing cohorts (P < 0.05). While the distributions of the three liver markers (ALT, AST and GGT levels) were similar between the training and internal testing cohorts, they differed significantly between the internal and external testing cohorts (P < 0.05). No significant differences were observed in the distributions of TB and DB levels across the three cohorts (P = 0.067 and P = 0.111, respectively).

Hospital 1: the First Affiliated Hospital of Sun Yat-sen University. The other 5 hospitals are: Shenzhen Children's Hospital; Fujian Provincial Maternity and Children's Hospital; Children's Hospital of Zhejiang University School of Medicine; Union Hospital, Tongji Medical College, Huazhong University of Science and Technology; and West China Hospital, Sichuan University.

Training and selecting the optimal deep-learning model

Using different combinations of the data from the 384 infants in the training cohort, two single-modality models (Gallbladder model and Triangular cord model) and four fusion models were trained using five-fold cross-validation combined with an ensemble method. The four fusion models were: a Conventional US model trained on both gallbladder and triangular cord US images; a Gallbladder-clinical model trained on gallbladder US images and clinical data; a Triangular cord-clinical model trained on triangular cord US images and clinical data; and a Conventional US-clinical model trained on gallbladder and triangular cord US images and clinical data. Among these six models, the Conventional US-clinical model performed best on the internal testing cohort, yielding an area under the receiver operating characteristic curve (AUC) of 0.968 (95% CI: 0.906–0.994) and an area under the precision-recall curve (AUPR) of 0.979 (95% CI: 0.966–0.996) for diagnosing BA (Table 2).
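For readers less familiar with the two metrics reported above, the sketch below computes the AUC as the Mann–Whitney probability that a randomly chosen BA case receives a higher score than a randomly chosen non-BA case, and the AUPR as step-wise average precision. It is an illustrative implementation of these standard definitions, not the authors' evaluation code; the function names and toy inputs are hypothetical, and the confidence intervals reported in the text are not computed here.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive (BA = 1) scores higher
    than a random negative (non-BA = 0); ties count as one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Step-wise area under the precision-recall curve: the mean of the
    precision values observed at the rank of each positive case."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("need at least one positive case")
    tp, total = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            tp += 1
            total += tp / rank  # precision at this recall step
    return total / n_pos


# Toy labels/scores for illustration only (not study data).
print(roc_auc([1, 1, 0, 0], [0.9, 0.2, 0.3, 0.1]))  # 3 of 4 pairs ranked correctly
print(average_precision([1, 0, 1], [0.9, 0.8, 0.7]))
```

The rank-based form makes explicit that the AUC depends only on how cases are ordered by the model's output, not on the probability values themselves.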

A total of 161 infants from Hospital 1 also underwent SWE examination and formed a subgroup (the SWE cohort). In the SWE cohort, a Conventional US-SWE-clinical model was additionally trained using gallbladder and triangular cord US images, 2D-SWE images and clinical data. On the internal testing cohort, the Conventional US-SWE-clinical model (AUC 0.923, 95% CI: 0.792–0.984) did not outperform a Conventional US-clinical model trained on the same training cohort (AUC 0.952, 95% CI: 0.832–0.995; P = 0.350).

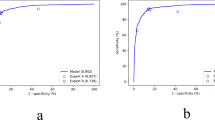

External evaluation of multimodal fusion models

To evaluate the models' performance more realistically, they were tested on the external testing cohort. The Conventional US-clinical model yielded the highest AUC among the models in diagnosing BA, with an accuracy of 84.6%, a sensitivity of 85.5% and a specificity of 83.6% (Table 3). Its ROC curve showed a numerically higher AUC than those of the Conventional US model (0.941 vs 0.936) and of the models fusing a single US feature with clinical information (0.922 for the Gallbladder-clinical model and 0.889 for the Triangular cord-clinical model) (Fig. 2a). However, none of these differences was significant after Bonferroni correction for multiple comparisons. Heatmaps generated by class activation mapping (CAM) showed that the multimodal model focused on the target areas that radiologists use for diagnosis (the gallbladder and triangular cord on US images), suggesting that its predictions were based on clinically relevant features (Fig. 3). Because SWE images were unavailable in the external cohort, the Conventional US-SWE-clinical model was not included in this comparison.

Model generalization to an external video dataset

To match actual clinical workflows, a video-based methodology was designed for real-time analysis. A pretrained US foundation model (USFM)21 and a pretrained DeepLab gallbladder segmentation model4 were added to select the optimal standard diagnostic planes with the highest confidence from each video. The Conventional US-clinical model was then tested on the corresponding data from the external cohort of 156 infants. It again performed best in diagnosing BA (AUC 0.930), with a higher AUC than the Conventional US model (AUC 0.845) and the single-modality US models (AUC 0.750 for the Gallbladder model and 0.763 for the Triangular cord model) (Table 4 and Fig. 2b). However, in the infants for whom videos were available, the model performed no better on videos than on images (AUC 0.930 vs 0.942, P = 0.562). This may be because images manually selected by radiologists are more representative than frames selected automatically by segmentation.

Uncertainty estimation

In clinical practice, an AI model should provide both a diagnosis (BA or non-BA) and an associated uncertainty estimate. If the estimated uncertainty is reliable, diagnoses of low-uncertainty cases can be trusted with confidence, while high-uncertainty cases should be referred to radiologists or physicians for secondary evaluation. To demonstrate the effectiveness of the estimated uncertainty, an empirical evaluation was designed to simulate clinical diagnosis in practice. First, the normalized entropy of each patient's prediction was calculated using Eq. (1). Infants with a normalized entropy greater than a threshold T were then filtered out, where T = 0.95 was determined on the internal dataset by maximizing the Youden index22. Initially, 24 infants in the external validation cohort were misclassified by the Conventional US-clinical model, giving an accuracy of 84.6% (132/156). Using Eq. (1) as the uncertainty scoring function, the model flagged and excluded 39 high-uncertainty cases: 14 of the originally misclassified infants (Fig. 4) and 25 correctly classified infants. This uncertainty-based screening improved the diagnostic accuracy of the Conventional US-clinical model on the remaining infants from 84.6% to 91.5% (107/117). The 39 excluded infants would require further examination, such as intraoperative cholangiography or surgical exploration, to obtain a more definitive diagnosis.
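The screening step described above can be sketched as follows. We assume (the source does not spell this out) that the Youden index was computed by treating misclassified cases as the "positives" to be flagged and the normalized entropy as the score, so that J = sensitivity + specificity − 1 is maximized over candidate thresholds. The function names are hypothetical, and the placeholder uncertainty values are chosen only to reproduce the reported case counts (156 infants, 24 misclassified, 39 flagged of which 14 were misclassified); they are not the study's data.

```python
from typing import Sequence, Tuple


def youden_threshold(uncertainty: Sequence[float], misclassified: Sequence[bool]) -> float:
    """Return the threshold T maximizing J = sensitivity + specificity - 1,
    where a case is flagged when its uncertainty exceeds T and misclassified
    cases are treated as positives (assumed formulation)."""
    n_pos = sum(misclassified)
    n_neg = len(misclassified) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need both misclassified and correctly classified cases")
    best_t, best_j = max(uncertainty), -1.0
    for t in sorted(set(uncertainty)):
        tp = sum(1 for u, m in zip(uncertainty, misclassified) if u > t and m)
        tn = sum(1 for u, m in zip(uncertainty, misclassified) if u <= t and not m)
        j = tp / n_pos + tn / n_neg - 1.0
        if j > best_j:
            best_t, best_j = t, j
    return best_t


def filter_by_uncertainty(uncertainty: Sequence[float], correct: Sequence[bool],
                          threshold: float) -> Tuple[float, float, int]:
    """Accuracy before and after excluding cases with uncertainty > threshold."""
    kept = [c for u, c in zip(uncertainty, correct) if u <= threshold]
    acc_before = sum(correct) / len(correct)
    acc_after = sum(kept) / len(kept)
    return acc_before, acc_after, len(correct) - len(kept)


# Placeholder cohort matching the reported counts (not real uncertainties):
# 14 misclassified + 25 correct cases above T, 10 misclassified + 107 correct below.
correct = [False] * 14 + [True] * 25 + [False] * 10 + [True] * 107
uncertainty = [0.99] * 39 + [0.50] * 117
acc_before, acc_after, n_flagged = filter_by_uncertainty(uncertainty, correct, 0.95)
print(f"{acc_before:.1%} -> {acc_after:.1%}, flagged {n_flagged}")  # prints: 84.6% -> 91.5%, flagged 39
```

The accuracy gain comes from the flagged set being enriched for errors: 14 of the 39 flagged cases (36%) were misclassified, compared with 24 of 156 (15%) overall.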

A sonographic gallbladder image (a) and triangular cord image (b) of a 30-day-old male infant with biliary atresia. The DB and GGT levels of this infant were 75.4 μmol/L and 93.0 U/L, respectively. The model initially classified this infant as non-biliary atresia, with an average BA probability of 0.442. Because of the high uncertainty score, this infant would be filtered out by the model and referred for further examinations to obtain a definitive diagnosis.

Analysis of model misdiagnoses

The model initially misclassified 24 cases (12 BA and 12 non-BA), all of which had poorly filled or unfilled gallbladders that appeared abnormally small. Heatmap analysis of these cases revealed four distinct attention patterns: (1) 9 cases (37.5%) showed no attention to either the gallbladder or the triangular cord region, 3 of which were excluded due to high uncertainty; (2) 5 cases (20.8%) attended to the triangular cord but overlooked gallbladder features, 3 of which were excluded; (3) 3 cases (12.5%) focused on the gallbladder while ignoring the triangular cord, all of which were excluded; and (4) 7 cases (29.2%) attended to both anatomical regions, 5 of which were nevertheless excluded due to high uncertainty. This analysis suggests that inadequate attention to key anatomical features contributes to misdiagnosis, but that attending to both regions does not guarantee a correct diagnosis; uncertainty thresholding therefore remains important for flagging unreliable predictions.

Model assists radiologists in diagnosis

In the external validation cohort using US videos, the Conventional US-clinical model showed significantly better diagnostic performance for BA than the radiologists' independent diagnoses [AUC: 0.930 (95% CI 0.876–0.966) vs. radiologists' mean AUC: 0.807 (range 0.692–0.851), all P < 0.05] (Table 5). With the assistance of the Conventional US-clinical model (prediction results, uncertainty scores and US image heatmaps), all radiologists showed improved AUCs (mean improvement: 0.066, range 0.027–0.147), with 3 of 6 reaching statistical significance (P < 0.05) (Table 5). These findings highlight two potential roles for the model: (1) as a compensatory aid for practitioners not specialized in pediatric biliary US interpretation, and (2) as an augmentative system that improves diagnostic accuracy for BA even among fellowship-trained pediatric radiologists.

Furthermore, AI assistance improved inter-radiologist agreement in the diagnosis of BA. Cohen's kappa coefficient increased from a moderate level of agreement (mean κ = 0.571, range: 0.406–0.739) to a substantial level (mean κ = 0.696, range: 0.633–1.000), representing a clinically meaningful gain in diagnostic consistency.
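The agreement statistic above can be illustrated with a short sketch. Cohen's kappa for two readers is κ = (p_o − p_e)/(1 − p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each reader's marginal rates. Because the text reports a mean and a range, we assume that κ was computed for every pair of the six readers (15 pairs) and then summarized; the function names and toy ratings below are hypothetical.

```python
from itertools import combinations
from typing import Dict, Sequence, Tuple


def cohen_kappa(a: Sequence[int], b: Sequence[int]) -> float:
    """Cohen's kappa for two readers' binary calls (1 = BA, 0 = non-BA)."""
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n   # observed agreement
    pa, pb = sum(a) / n, sum(b) / n               # each reader's BA rate
    p_e = pa * pb + (1 - pa) * (1 - pb)           # agreement expected by chance
    if p_e == 1.0:                                # both readers used a single label
        return 1.0
    return (p_o - p_e) / (1 - p_e)


def pairwise_kappa(readers: Dict[str, Sequence[int]]) -> Tuple[float, float, float, int]:
    """Mean, min and max kappa over all reader pairs, plus the number of pairs."""
    values = [cohen_kappa(readers[r1], readers[r2])
              for r1, r2 in combinations(sorted(readers), 2)]
    return sum(values) / len(values), min(values), max(values), len(values)


# Toy example: six hypothetical readers rating eight cases.
readers = {
    "R1": [1, 1, 0, 0, 1, 0, 1, 0],
    "R2": [1, 1, 0, 0, 1, 0, 0, 0],
    "R3": [1, 0, 0, 0, 1, 0, 1, 0],
    "R4": [1, 1, 0, 1, 1, 0, 1, 0],
    "R5": [1, 1, 0, 0, 1, 1, 1, 0],
    "R6": [1, 1, 0, 0, 1, 0, 1, 0],
}
mean_k, min_k, max_k, n_pairs = pairwise_kappa(readers)
print(n_pairs, round(mean_k, 3), round(min_k, 3), round(max_k, 3))
```

Kappa corrects raw agreement for chance, which matters here because two readers who both call most infants BA would agree often even when reading at random.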

Discussion

To address the challenges of early BA diagnosis, a multimodal model was developed that integrates data from multiple modalities, including conventional US, clinical data and laboratory test results. This multimodal deep learning model fused these heterogeneous modalities and achieved higher AUCs than the single-modality models in diagnosing BA. Heatmap visualization enhanced model interpretability by revealing clinically relevant decision-making patterns. External validation using independent video datasets supported the model's robustness (sensitivity: 86.3%, specificity: 81.8%), with segmentation-based preprocessing mitigating the variability of operator-dependent image selection. Uncertainty estimation with normalized entropy provided additional clinical utility by identifying cases requiring further review (high-uncertainty cases: 25%). This safety mechanism allows radiologists to focus on diagnostically challenging cases. Furthermore, model assistance improved the performance of all six participating radiologists (mean AUC improvement: 0.066), benefiting both radiologists with limited pediatric experience and fellowship-trained pediatric radiologists in BA detection.

This study developed a multimodal model that integrates data from different modalities to overcome the limitations of single-modality approaches in BA diagnosis. By synthesizing readily accessible US and clinical features, the model provides radiologists with more comprehensive diagnostic support, which is particularly valuable in resource-limited settings where advanced imaging expertise may be unavailable. Although the multimodal model's AUC improvement over single-modality approaches did not reach statistical significance and requires additional resources (increased computational complexity, greater operational demands and additional data acquisition), its clinical value may still be substantial. This is particularly true for rare, high-stakes conditions such as BA, where even marginal gains in sensitivity or specificity can meaningfully reduce diagnostic errors with potentially life-altering consequences. Furthermore, the model is particularly valuable in supporting less-experienced radiologists, enhancing both their diagnostic confidence and interpretation consistency.

Fully exploring and integrating the characteristics of different modalities can improve diagnostic accuracy and model practicality, but adding more features does not always improve performance. In this study, adding 2D-SWE images did not improve the fusion model and numerically lowered its AUC (0.923 vs 0.952, P = 0.350). Some unavoidable confounding factors in clinical practice, such as fasting time and whether the infant is quiet before examination, may affect the accuracy of 2D-SWE measurements. A previous study has also shown that adding 2D-SWE does not further improve the performance of combined gallbladder and triangular cord features in diagnosing BA11. Potential contributing factors include: (1) the limited SWE sample size, which reduces statistical power; and (2) the lower diagnostic utility of SWE images compared with gallbladder and triangular cord images. In addition, some features may be redundant or highly correlated with one another, duplicating information; including such redundant features increases model complexity without adding value.

Screening out high-uncertainty cases from the model's classification results is crucial for applying the model in real clinical scenarios, because wrong predictions can have serious consequences for infants with BA. The predictions of deep learning models are often affected by data noise, model errors or other random factors, which can make their outputs unreliable. In the error analysis, we found that even when the model attended to the target features, its diagnosis might not be reliable. Uncertainty estimation quantifies the credibility of each prediction, helping radiologists understand how reliable the model is in different scenarios, assess potential risks and avoid making high-risk decisions based solely on the model's outputs. As a clinical decision support tool, the uncertainty estimates can automatically assign cases to two management pathways: (1) high-certainty predictions proceed through the standard workflow, in which all cases predicted to be BA are referred to the pediatric surgery department for surgical evaluation; and (2) high-uncertainty cases are immediately referred to experienced pediatric radiologists for further review.
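The two management pathways described above amount to a simple routing rule, sketched below. The sketch is hypothetical: the `Prediction` class, the function name and the 0.5 probability cut-off for calling BA are our assumptions; only the 0.95 normalized-entropy threshold comes from the study. For two classes, the average BA probability of 0.442 reported for the infant in Fig. 4 corresponds to a normalized entropy of about 0.99 under Eq. (1), which is the value used in the example.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    p_ba: float         # ensemble-averaged probability of BA
    uncertainty: float  # normalized entropy in [0, 1]


def route_case(pred: Prediction, entropy_threshold: float = 0.95,
               ba_cutoff: float = 0.5) -> str:
    """Assign a case to a management pathway (hypothetical triage rule)."""
    if pred.uncertainty > entropy_threshold:
        # Pathway 2: high uncertainty -> review by an experienced radiologist
        return "experienced pediatric radiologist review"
    if pred.p_ba >= ba_cutoff:
        # Pathway 1, predicted BA -> standard workflow with surgical referral
        return "standard workflow: pediatric surgery referral"
    # Pathway 1, predicted non-BA -> standard workflow
    return "standard workflow"


# The infant in Fig. 4 (average BA probability 0.442, high uncertainty):
print(route_case(Prediction(p_ba=0.442, uncertainty=0.990)))
```

Checking uncertainty before the class label ensures that a borderline prediction is never routed on its label alone.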

In addition, we introduced US videos in this study for automated feature identification, to reduce the subjectivity of radiologists' manual image selection; the subjective interpretation of conventional US features has been shown to suffer from large inter-observer variability. We first trained the segmentation model to detect target images containing predefined features (gallbladder and triangular cord), and the selected images were then passed to the diagnostic model to obtain a diagnosis of BA or non-BA. This reduces the subjectivity introduced by human judgment and provides a more objective assessment of the diagnostic features shown in the video, so the approach could potentially be applied to diagnose BA automatically from US videos acquired by novices.

This study has several limitations. First, the generalizability of the proposed AI model may be limited by the specific population distribution (younger infants), which may introduce diagnostic bias. Untreated BA progresses with age, with deteriorating liver function tests and an increasingly thickened triangular cord, so external validation across broader age ranges is needed. Second, the proportion of infants with BA in the different datasets of this study does not match the actual prevalence of BA in the population, which may limit the generalizability of the findings. Future work will explore strategies such as threshold adjustment or transfer learning to mitigate this effect. Third, although the model was robust across imaging systems from multiple manufacturers in this study, its clinical applicability may still be constrained by its dependence on advanced imaging technology. To enhance real-world utility, future validation should specifically assess images obtained with portable or low-cost US devices in resource-limited settings. Finally, the study omitted some diagnostic features (e.g., hepatic subcapsular flow23) because of their operator-dependent nature.

In conclusion, the proposed multimodal fusion model showed potential for automated diagnosis of BA from US videos, with higher AUCs than single-modality approaches. With uncertainty estimation, the fusion model could potentially filter out cases with high diagnostic uncertainty, helping radiologists make decisions more efficiently and accurately. Notably, the model was particularly valuable in assisting less-experienced radiologists, improving their diagnostic performance for BA. However, these findings warrant further validation in larger multicenter cohorts to address limitations such as dataset diversity and clinical workflow integration.

Methods

Patient selection

This multicenter study was partly derived from a study entitled "Machine learning based on the gallbladder morphology for screening BA among infants with conjugated hyperbilirubinemia", which was registered at www.chictr.org.cn in 2018 (ChiCTR1800017428) and approved by the Ethics Committee of the First Affiliated Hospital of Sun Yat-sen University in 2019 ([2019]083). In addition, the multicenter study obtained further ethical approval from the Ethics Committee of Fujian Maternity and Child Health Hospital in 2020 (2020KY077) and from the Ethics Committee of the First Affiliated Hospital of Sun Yat-sen University in 2023 ([2023]032). Written informed consent was obtained from the parents of each participant.

Between January 2018 and September 2022, infants with serum hyperbilirubinemia at Hospital 1 were enrolled. Infants were required to have undergone conventional US examination at the participating centers and to have a final diagnosis of BA or non-BA confirmed by surgical exploration, intraoperative cholangiography or 1-year follow-up after the US examination. Infants from the other 5 hospitals between January 2022 and December 2023 who met the same criteria were enrolled as an independent external validation cohort. The flowchart of patient inclusion and exclusion is shown in Fig. 1. Finally, a total of 472 infants from Hospital 1 were included and randomly divided into a training cohort (80%) and an internal testing cohort (20%), and 156 infants from the other 5 hospitals formed the external testing cohort (Fig. 1). Of the infants from Hospital 1, 161 also underwent SWE examination and formed a subgroup (the SWE cohort).

Ultrasound and clinical data collection

Each infant underwent conventional US examination to obtain US images and videos of the gallbladder and triangular cord. US images and videos were acquired with a high-frequency probe, with no interfering objects (such as measurement calipers or arrows) in the area of the gallbladder or triangular cord. Each video was recorded for about 3–10 s, and only one gallbladder video and one triangular cord video were retained for each infant for subsequent analysis. For infants in the SWE cohort, SWE examinations were performed with the Aixplorer scanner (Supersonic, Paris, France) using an SL15-4 linear transducer (4–15 MHz). SWE images in which more than 90% of the region of interest (ROI) box was filled with color, with no interfering objects within the ROI box, were considered acceptable. Further details on US image and video acquisition criteria can be found in Supplementary Note 1.

Clinical data, including age and sex, were recorded at the time of US examination. Serological data, including alanine aminotransferase (ALT), aspartate aminotransferase (AST), GGT, total bilirubin (TB) and DB levels, were obtained within one week of the US examination as part of routine clinical care.

Data preprocessing

To reduce the influence of irrelevant information in the US images, the gallbladder and triangular cord regions were extracted as ROIs for subsequent image analysis. Adaptive Canny edge detection24 was used for ROI extraction in two contexts: (1) isolating the US scan area in triangular cord images and (2) identifying the color-mapped region in SWE images. In our preliminary use, this method achieved rapid and reliable ROI extraction. The ROIs in gallbladder US images were automatically extracted by a DeepLab V3 segmentation model trained in our previous study4. Before being input into the model, all images underwent data augmentation. Specifically, random regions were cropped from the original images to generate standardized samples of 224 × 224 pixels. A rotation transformation was then applied with a probability of 50%, rotating the sample by a random angle within ±15°. Finally, pixel values were normalized. More details on image preprocessing are provided in Supplementary Note 2.
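The augmentation steps above can be sketched in NumPy as follows. This is an illustrative version under stated assumptions, not the authors' pipeline: the rotation uses nearest-neighbour sampling with zero fill, the angle is drawn uniformly from [−15°, 15°], and "normalized" is interpreted as scaling 8-bit pixels to [0, 1] followed by per-image z-scoring, since the source does not specify the normalization. All function names are hypothetical.

```python
import numpy as np


def random_crop(img: np.ndarray, size: int, rng: np.random.Generator) -> np.ndarray:
    """Crop a random size x size window from an H x W (x C) image."""
    h, w = img.shape[:2]
    if h < size or w < size:
        raise ValueError("image smaller than crop size")
    top = int(rng.integers(0, h - size + 1))
    left = int(rng.integers(0, w - size + 1))
    return img[top:top + size, left:left + size]


def rotate_nearest(img: np.ndarray, angle_deg: float) -> np.ndarray:
    """Rotate about the image centre (nearest-neighbour sampling, zero fill)."""
    h, w = img.shape[:2]
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: locate the source pixel for every output pixel.
    src_x = np.cos(theta) * (xx - cx) + np.sin(theta) * (yy - cy) + cx
    src_y = -np.sin(theta) * (xx - cx) + np.cos(theta) * (yy - cy) + cy
    sx, sy = np.rint(src_x).astype(int), np.rint(src_y).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.zeros_like(img)
    out[valid] = img[sy[valid], sx[valid]]
    return out


def augment(img: np.ndarray, rng: np.random.Generator, size: int = 224,
            p_rotate: float = 0.5, max_angle: float = 15.0) -> np.ndarray:
    x = random_crop(img, size, rng)
    if rng.random() < p_rotate:                      # rotate with 50% probability
        x = rotate_nearest(x, rng.uniform(-max_angle, max_angle))
    x = x.astype(np.float64) / 255.0                 # scale 8-bit pixels to [0, 1]
    return (x - x.mean()) / (x.std() + 1e-8)         # per-image z-score (assumed)


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(300, 400)).astype(np.uint8)  # synthetic image
sample = augment(image, rng)
print(sample.shape)  # (224, 224)
```

In a PyTorch pipeline the same steps would more typically be expressed with torchvision transforms; the NumPy form is used here only to keep the sketch dependency-light.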

For clinical information, 7 indicators were initially evaluated: sex, age, ALT, AST, GGT, TB and DB levels. Numerical variables were normalized so that indicators measured on different scales contributed comparably, facilitating more efficient model training. These indicators were then used to build a diagnostic model, and SHapley Additive exPlanations (SHAP) values25 were calculated to estimate the contribution of each indicator at the inference stage (Fig. 5; see Supplementary Note 3 for details). The top four indicators (GGT level, DB level, age and sex), which had the largest and nearly equal contributions to the diagnostic results, were selected for further fusion analysis; restricting the model to these indicators mitigated the risk of over-fitting.
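The indicator-selection step above can be sketched as ranking indicators by their mean absolute SHAP value and keeping the top k. In practice the SHAP matrix would come from a SHAP implementation such as the `shap` package; here a small hand-made matrix stands in for it, and z-score normalization is our assumption for "normalized". The function names and numbers are hypothetical and do not reproduce Fig. 5.

```python
import numpy as np

FEATURES = ["sex", "age", "ALT", "AST", "GGT", "TB", "DB"]


def zscore(X: np.ndarray) -> np.ndarray:
    """Column-wise z-score normalization (constant columns are only centred)."""
    mu, sd = X.mean(axis=0), X.std(axis=0)
    return (X - mu) / np.where(sd > 0, sd, 1.0)


def top_k_features(shap_values: np.ndarray, names, k: int = 4):
    """Rank features by mean |SHAP| over samples and return the top k names."""
    importance = np.abs(shap_values).mean(axis=0)
    order = np.argsort(importance)[::-1][:k]
    return [names[i] for i in order], importance


# Hypothetical SHAP values for 3 infants x 7 indicators (illustration only).
shap_values = np.array([
    [0.30, -0.35, 0.02, -0.03, 0.40, 0.05, -0.38],
    [-0.32, 0.33, -0.01, 0.04, -0.36, -0.06, 0.41],
    [0.31, -0.30, 0.03, -0.02, 0.39, 0.04, -0.37],
])
selected, importance = top_k_features(shap_values, FEATURES, k=4)
print(selected)  # ['DB', 'GGT', 'age', 'sex']
```

Taking the absolute value before averaging matters: SHAP values for an influential indicator can be large and of either sign, so a plain mean could cancel out.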

Diagnostic model development

The modeling process was divided into three parts: image modality modeling, image and clinical information alignment, and ensemble training and inference. In the first part, two single-modality imaging models (Gallbladder model and Triangular cord model) were developed. For each model, a ResNet-10126 pretrained on ImageNet-1K27 was employed as the image encoder. The ResNet-101 architecture26 was used with its original layer configuration: 101 weighted layers (100 convolutional layers and one fully connected layer), with the convolutional layers after the initial stem organized into four residual blocks containing 3, 4, 23 and 3 bottleneck modules, respectively. Each residual block consisted of stacked bottleneck modules with skip connections. More details about the bottleneck modules and skip connections can be found in Supplementary Note 4.

In the second part, this study considered two types of multimodal modeling: fusion of the two kinds of US images, and fusion of image and clinical information. To fuse different image modalities, such as gallbladder and triangular cord US images, a separate ResNet image encoder was constructed to extract features from each image modality. The features of all modalities were then concatenated into joint features, enabling the model to learn from the different modality features simultaneously. For the fusion of image and clinical information, it is important to note that these data types differ in dimensionality and should not simply be merged. We therefore developed an alignment module that integrates image features with clinical information. Specifically, a multi-layer perceptron followed by a Sigmoid function transformed the input clinical information into channel weights. These weights were then multiplied element-wise with the corresponding channels of the image features in the residual blocks and of the concatenated features. Finally, the joint features were processed by a softmax classifier to obtain the final diagnostic result for the test image (Fig. 6 and Supplementary Fig. 1). More details about the model architecture can be found in Supplementary Note 4.
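A minimal NumPy sketch of the alignment idea described above follows. An MLP with a Sigmoid output turns the clinical vector into per-channel weights that rescale image feature channels; the reweighted features of the two encoders are pooled, concatenated, gated again and passed to a softmax classifier. For brevity it assumes (our simplification) gating only at the encoder outputs rather than inside every residual block, a single ReLU hidden layer, global average pooling and randomly initialized weights; the class and function names and all dimensions are hypothetical. ResNet-101 output feature maps have 2048 channels; 8 are used here.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()


class ClinicalGate:
    """MLP + Sigmoid mapping clinical indicators to channel weights in (0, 1)."""

    def __init__(self, n_clinical: int, n_hidden: int, n_channels: int,
                 rng: np.random.Generator):
        self.w1 = rng.normal(0.0, 0.1, (n_hidden, n_clinical))
        self.w2 = rng.normal(0.0, 0.1, (n_channels, n_hidden))

    def __call__(self, clinical: np.ndarray) -> np.ndarray:
        hidden = np.maximum(0.0, self.w1 @ clinical)  # ReLU hidden layer (assumed)
        return sigmoid(self.w2 @ hidden)


def fuse_and_classify(gb_feat, tc_feat, clinical, gate_gb, gate_tc, gate_joint, w_cls):
    """gb_feat, tc_feat: (C, H, W) feature maps from the two image encoders."""
    gb = gb_feat * gate_gb(clinical)[:, None, None]   # channel-wise reweighting
    tc = tc_feat * gate_tc(clinical)[:, None, None]
    joint = np.concatenate([gb.mean(axis=(1, 2)), tc.mean(axis=(1, 2))])  # GAP + concat
    joint = joint * gate_joint(clinical)              # gate the concatenated features
    return softmax(w_cls @ joint)                     # [p(non-BA), p(BA)]


rng = np.random.default_rng(0)
C, n_clin = 8, 4                                      # 4 indicators: GGT, DB, age, sex
gate_gb = ClinicalGate(n_clin, 16, C, rng)
gate_tc = ClinicalGate(n_clin, 16, C, rng)
gate_joint = ClinicalGate(n_clin, 16, 2 * C, rng)
w_cls = rng.normal(0.0, 0.1, (2, 2 * C))
probs = fuse_and_classify(rng.normal(size=(C, 7, 7)), rng.normal(size=(C, 7, 7)),
                          rng.normal(size=n_clin), gate_gb, gate_tc, gate_joint, w_cls)
print(probs)
```

Using the clinical vector to scale channels, rather than appending it to the image features, sidesteps the dimensionality mismatch noted in the text: four indicators never compete numerically with thousands of image channels.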

In the third part, following our previous work4, this study adopted five-fold cross-validation and an ensemble training strategy to develop the different intelligent diagnosis models (Fig. 7). First, the internal training cohort was partitioned into five equal subsets, each containing images from an equivalent number of infants. Each model was trained on four of these subsets, and training was halted when performance on the remaining validation subset began to decline. During validation, the optimal model was selected based on the AUC. In this way, five distinct models were trained, each acquiring different knowledge from training and validation on different subsets, which enhanced the overall generalization capacity of the ensemble. In the test phase, the predictions of the five models were aggregated through a voting mechanism to output a final prediction for each test image.
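The cross-validation split and ensemble aggregation above can be sketched as follows. The split is done at the patient level so that all images of one infant fall into the same subset, and fold sizes differ by at most one infant. For the "voting mechanism" we assume soft voting, i.e., averaging the five models' predicted probabilities, which is consistent with the ensemble probability used for uncertainty estimation. Training with early stopping and AUC-based model selection is only indicated in comments, and the function names and infant identifiers are hypothetical.

```python
import random
from typing import List, Sequence


def patient_level_folds(patient_ids: Sequence[str], n_folds: int = 5,
                        seed: int = 0) -> List[List[str]]:
    """Shuffle unique infants and deal them round-robin into n_folds subsets."""
    ids = sorted(set(patient_ids))
    random.Random(seed).shuffle(ids)
    return [ids[i::n_folds] for i in range(n_folds)]


def soft_vote(model_probs: Sequence[Sequence[float]]) -> List[float]:
    """Average class probabilities from several models for one test image."""
    n_models = len(model_probs)
    return [sum(p[k] for p in model_probs) / n_models for k in range(len(model_probs[0]))]


infants = [f"infant_{i:03d}" for i in range(384)]  # training cohort size from the text
folds = patient_level_folds(infants)
for k, val_ids in enumerate(folds):
    train_ids = [pid for j, f in enumerate(folds) if j != k for pid in f]
    # Train model k on train_ids; stop when performance on val_ids declines
    # and keep the checkpoint with the best validation AUC.
    print(k, len(train_ids), len(val_ids))

print(soft_vote([[0.2, 0.8], [0.4, 0.6], [0.3, 0.7], [0.1, 0.9], [0.5, 0.5]]))
```

Splitting by infant rather than by image prevents near-duplicate frames of the same patient from appearing in both the training and validation subsets, which would inflate the validation AUC used for model selection.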

Segmentation model development for US videos

Real-time dynamic observation of the gallbladder and triangular cord is more consistent with actual clinical practice. Thus, a video-based methodology was designed to automatically select frames that clearly display the gallbladder and triangular cord, and to localize these regions for automatic diagnosis of BA from each video (segmentation process shown in Fig. 6). Gallbladder regions in each video were localized automatically by a pretrained DeepLab gallbladder segmentation model4, while triangular cord regions were localized by a segmentation model built on a pretrained US foundation model (USFM)21. The triangular cord segmentation model was trained on 440 triangular cord US images, which were randomly selected from 86 triangular cord US videos in the training cohort and annotated by roughly outlining the triangular cord region. Standard diagnostic planes were selected objectively through quantitative analysis of the segmented anatomical area, a strategy validated in a prior ultrasound imaging study4. The automated selection pipeline consisted of three steps: (1) processing each video frame through the pretrained segmentation models for the gallbladder and triangular cord, (2) calculating the absolute pixel area of each segmented region, and (3) independently ranking the frames for each anatomical structure by segmented area. The top 5% of frames with the largest segmented regions were selected for subsequent diagnostic analysis. This selection threshold was determined empirically through preliminary experiments (Supplementary Note 5), with supporting results provided in Supplementary Table 2. Finally, the segmented ROI images were resized and normalized before being fed into the diagnostic model to maintain consistency.
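The three-step frame-selection pipeline above can be sketched as follows, given per-frame binary segmentation masks for one anatomical structure; the function would be called separately for the gallbladder and the triangular cord. The sketch assumes that the top 5% is rounded up and that at least one frame is always kept; the function name and the synthetic masks are hypothetical.

```python
import numpy as np


def select_frames(masks: np.ndarray, top_percent: int = 5) -> list:
    """masks: (T, H, W) boolean masks, one per video frame.
    Returns indices of the top_percent% of frames with the largest segmented area."""
    areas = masks.reshape(masks.shape[0], -1).sum(axis=1)  # pixel area per frame
    n = len(areas)
    k = max(1, (n * top_percent + 99) // 100)              # ceil(n * p / 100), integer-exact
    order = np.argsort(-areas, kind="stable")              # largest area first
    return sorted(order[:k].tolist())


# Synthetic 40-frame "video": frame i has a mask covering exactly i pixels.
masks = (np.arange(100)[None, :] < np.arange(40)[:, None]).reshape(40, 10, 10)
print(select_frames(masks))  # [38, 39]
```

Ranking by segmented area is a simple proxy for "the structure is fully in view", since a partially visible or foreshortened gallbladder produces a smaller mask.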

Heatmaps to interpret AI diagnosis

To earn the trust of human experts, an understandable decision-making process is desirable in clinical practice. CAM28 is a prevalent technique for visualizing and understanding the decision-making of convolutional neural networks (CNNs). In this study, each image in the internal and external test datasets was analyzed with each of the five ensemble models, generating five independent activation maps per image. To unify these perspectives into a single representation, the five activation maps were averaged, yielding a final activation map that reflects the combined insights of all models.
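The CAM computation and five-model averaging described above can be sketched as follows. CAM weights the last convolutional feature maps by the classifier weights of the target class. The ReLU, the per-model min-max scaling before averaging and the omission of upsampling to the input resolution are our assumptions, and the function names and random inputs are hypothetical.

```python
import numpy as np


def class_activation_map(features: np.ndarray, fc_weights: np.ndarray,
                         class_idx: int) -> np.ndarray:
    """features: (C, H, W) last-conv maps; fc_weights: (n_classes, C).
    Returns an (H, W) map scaled to [0, 1]."""
    cam = np.tensordot(fc_weights[class_idx], features, axes=1)  # sum_c w_c * F_c
    cam = np.maximum(cam, 0.0)
    span = cam.max() - cam.min()
    return (cam - cam.min()) / span if span > 0 else np.zeros_like(cam)


def ensemble_cam(maps) -> np.ndarray:
    """Average the per-model activation maps into one map."""
    return np.mean(np.stack(maps), axis=0)


rng = np.random.default_rng(0)
maps = [class_activation_map(rng.normal(size=(8, 7, 7)), rng.normal(size=(2, 8)), 1)
        for _ in range(5)]  # five ensemble models (random stand-ins)
final_map = ensemble_cam(maps)
print(final_map.shape)  # (7, 7)
```

Scaling each model's map to a common range before averaging keeps one model with large activations from dominating the combined heatmap.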

Uncertainty estimation

Predictive uncertainty can arise from the inherent noise of the data (data uncertainty) or from a lack of knowledge about the model parameters (model uncertainty)29. Capturing these uncertainties is crucial for flagging patients whose diagnoses are most likely incorrect, namely those with high predictive uncertainty. Several metrics can be used to estimate predictive uncertainty, e.g., maximum softmax probability (MSP)30, entropy31, evidential uncertainty32 and energy33. Empirical results have shown that entropy is the most effective metric for estimating the uncertainty of an ensemble of models34. Let \(x\), \(M\) and \(K\) denote a test sample, the number of models and the number of classes, respectively. The ensemble probability combines the probability vectors \(\mathbf{p}_i\) output by the \(i\)-th model by averaging them, that is,

\[\bar{\mathbf{p}}(x)=\frac{1}{M}\sum_{i=1}^{M}\mathbf{p}_{i}(x).\]

The entropy of the ensemble prediction is then

\[H(x)=-\sum_{k=1}^{K}\bar{p}_{k}(x)\log\bar{p}_{k}(x),\]

where \(\bar{p}_k(x)\) is the \(k\)-th component of \(\bar{\mathbf{p}}(x)\). Because the entropy of a \(K\)-class distribution is at most \(\log K\), the normalized entropy, which lies in the range \([0,\,1]\), is

\[\tilde{H}(x)=\frac{H(x)}{\log K}.\]

With the normalized entropy as the uncertainty scoring function, misclassified samples were more likely to receive high uncertainty estimates. Diverse models tend to produce divergent probability distributions for ambiguous samples, so the combined prediction is flatter and its entropy higher. The ensemble therefore assigns higher uncertainty to samples that are likely to be misclassified, in line with our objective of enabling the model to “know what it doesn’t know.”
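
The normalized-entropy score and the exclusion rule can be sketched in NumPy as follows. The sketch assumes softmax outputs stacked as an array of shape (models, samples, classes). The 0.95 cut-off is the one reported in the Abstract, while the function names and the small clipping constant used to avoid log(0) are illustrative assumptions.

```python
import numpy as np


def normalized_entropy(probs: np.ndarray) -> np.ndarray:
    """Normalized predictive entropy of a deep ensemble.

    probs: softmax outputs of shape (M, N, K) for M models, N samples, K classes.
    Returns an array of shape (N,) with values in [0, 1].
    """
    p_bar = probs.mean(axis=0)  # ensemble probability, shape (N, K)
    # Clipping makes 0 * log(0) evaluate to 0 instead of NaN.
    entropy = -np.sum(p_bar * np.log(np.clip(p_bar, 1e-12, 1.0)), axis=1)
    return entropy / np.log(probs.shape[-1])  # divide by the maximum, log K


def split_by_uncertainty(probs: np.ndarray, threshold: float = 0.95):
    """Ensemble labels plus a mask of samples kept (uncertainty <= threshold)."""
    uncertainty = normalized_entropy(probs)
    labels = probs.mean(axis=0).argmax(axis=1)
    return labels, uncertainty <= threshold
```

When two models agree on a sample, the averaged distribution stays peaked and the score is low. When they disagree, for example 0.9/0.1 versus 0.1/0.9, the average is 0.5/0.5 and the normalized entropy reaches its maximum of 1, so that sample would be excluded.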

Models assist radiologists in diagnosis

We conducted a retrospective reader study involving 3 fellowship-trained pediatric radiologists (2–3 years of experience) and 3 abdominal radiologists (3–5 years of experience). All readers first independently interpreted the videos and clinical information of the external testing set, and then re-evaluated the same cases with AI support (prediction results, uncertainty estimation scores and US image heatmaps). Both the initial and the AI-assisted diagnoses were recorded.

The test data of each infant in the external testing cohort were presented to the readers in random order. None of the six radiologists had seen any of the patients’ images or videos before the study, and all were blinded to any other patient information during diagnosis.
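
The Abstract reports a mean AUC increase across the six readers. A minimal sketch of that computation follows. It assumes each reader's call on every case is recorded as a score (binary 0/1 reads or confidence ratings) and that AUC is computed per reader with and without AI support. The reader IDs and toy data are hypothetical. For binary reads, the rank-based AUC equals (sensitivity + specificity) / 2.

```python
import numpy as np


def auc(y_true, scores) -> float:
    """Rank-based (Mann-Whitney) AUC; ties between a positive and a negative count 1/2.

    For binary reads (0 = non-BA, 1 = BA) this equals (sensitivity + specificity) / 2.
    """
    y = np.asarray(y_true).astype(bool)
    s = np.asarray(scores, dtype=float)
    diff = s[y][:, None] - s[~y][None, :]  # every positive-negative pair
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))


def mean_auc_gain(y_true, unaided: dict, aided: dict):
    """Per-reader AUC change (aided minus unaided) and its mean across readers.

    unaided, aided: map a reader ID to that reader's calls on the same ordered cases.
    """
    gains = {r: auc(y_true, aided[r]) - auc(y_true, unaided[r]) for r in unaided}
    return float(np.mean(list(gains.values()))), gains
```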

Statistical analysis

The diagnostic performance of different models was measured by the AUC and the area under the precision-recall curve (AUPR). AUCs were compared using the DeLong test, a non-parametric method chosen for its robustness with limited sample sizes. Accuracy, sensitivity and specificity were also calculated. Significance was assessed using two-sided tests with Bonferroni correction for multiple comparisons. Adjusted P values exceeding 1.0 were truncated to 1.0 (indicating no significance). The analyses were performed with MedCalc Statistical Software version 15.2.2 (MedCalc) and SPSS software package version 27 (IBM).
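
The paired AUC comparison and the Bonferroni adjustment can be sketched in NumPy as follows. This is an illustration of the structural-component formulation of the DeLong test with a normal approximation for the two-sided P value. It is not the MedCalc implementation used in the study, and the function names are hypothetical.

```python
import math

import numpy as np


def _structural_components(pos: np.ndarray, neg: np.ndarray):
    """DeLong placement values for one score set: V10 per positive, V01 per negative."""
    diff = pos[:, None] - neg[None, :]
    psi = (diff > 0) + 0.5 * (diff == 0)  # psi(X_i, Y_j) in {0, 0.5, 1}
    return psi.mean(axis=1), psi.mean(axis=0)


def delong_test(y_true, scores_a, scores_b):
    """Two-sided DeLong test for two correlated AUCs computed on the same cases.

    Returns (auc_a, auc_b, p_value).
    """
    y = np.asarray(y_true).astype(bool)
    a = np.asarray(scores_a, dtype=float)
    b = np.asarray(scores_b, dtype=float)
    v10a, v01a = _structural_components(a[y], a[~y])
    v10b, v01b = _structural_components(b[y], b[~y])
    m, n = v10a.size, v01a.size  # number of positives and negatives
    auc_a, auc_b = float(v10a.mean()), float(v10b.mean())
    # 2x2 covariance matrices of the components across the two models (ddof = 1).
    s10 = np.cov(np.vstack([v10a, v10b]))
    s01 = np.cov(np.vstack([v01a, v01b]))
    s = s10 / m + s01 / n
    var = s[0, 0] + s[1, 1] - 2.0 * s[0, 1]  # Var(AUC_a - AUC_b)
    if var <= 0:
        # Degenerate case (zero estimated variance): the z statistic is undefined.
        return auc_a, auc_b, float("nan")
    z = (auc_a - auc_b) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return auc_a, auc_b, p


def bonferroni(p_values):
    """Bonferroni adjustment: multiply by the number of tests, truncate at 1.0."""
    k = len(p_values)
    return [min(1.0, p * k) for p in p_values]
```

The truncation in `bonferroni` mirrors the rule above: an adjusted value such as 0.6 × 3 = 1.8 is reported as 1.0.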

Data availability

The ultrasound images and videos are not publicly available because of hospital regulations that protect patient privacy. Limited data access may be granted upon reasonable request to the corresponding author.

Code availability

The code associated with this study will be made publicly available on GitHub (https://github.com/Reckless0/Multimodal-Biliary-Atresia-Diagnosis.git).

References

Hartley, J. L., Davenport, M. & Kelly, D. A. Biliary atresia. Lancet 374, 1704–1713 (2009).

Tam, P. K. H. et al. Biliary atresia. Nat. Rev. Dis. Prim. 10, 47 (2024).

Shneider, B. L. et al. A multicenter study of the outcome of biliary atresia in the United States, 1997 to 2000. J. Pediatr. 148, 467–474 (2006).

Zhou, W. et al. Ensembled deep learning model outperforms human experts in diagnosing biliary atresia from sonographic gallbladder images. Nat. Commun. 12, 1259 (2021).

Zhou, W. et al. Interpretable artificial intelligence-based app assists inexperienced radiologists in diagnosing biliary atresia from sonographic gallbladder images. BMC Med. 22, 29 (2024).

Lee, H. J., Lee, S. M., Park, W. H. & Choi, S. O. Objective criteria of triangular cord sign in biliary atresia on US scans. Radiology 229, 395–400 (2003).

Zhou, L. Y. et al. Optimizing the US diagnosis of biliary atresia with a modified triangular cord thickness and gallbladder classification. Radiology 277, 181–191 (2015).

Choi, S. O., Park, W. H., Lee, H. J. & Woo, S. K. ‘Triangular cord’: a sonographic finding applicable in the diagnosis of biliary atresia. J. Pediatr. Surg. 31, 363–366 (1996).

Liu, Y. et al. The utility of shear wave elastography and serum biomarkers for diagnosing biliary atresia and predicting clinical outcomes. Eur. J. Pediatr. 181, 73–82 (2022).

Wang, X. et al. Utility of shear wave elastography for differentiating biliary atresia from infantile hepatitis syndrome. J. Ultrasound Med. 35, 1475–1479 (2016).

Zhou, L. Y. et al. Liver stiffness measurements with supersonic shear wave elastography in the diagnosis of biliary atresia: a comparative study with grey-scale US. Eur. Radiol. 27, 3474–3484 (2017).

Dong, R. et al. Development and validation of novel diagnostic models for biliary atresia in a large cohort of Chinese patients. EBioMedicine 34, 223–230 (2018).

Weng, Z. et al. Gamma-glutamyl transferase combined with conventional ultrasound features in diagnosing biliary atresia: a two-center retrospective analysis. J. Ultrasound Med. 41, 2805–2817 (2022).

Harpavat, S. et al. Diagnostic yield of newborn screening for biliary atresia using direct or conjugated bilirubin measurements. JAMA 323, 1141–1150 (2020).

Harpavat, S., Garcia-Prats, J. A. & Shneider, B. L. Newborn bilirubin screening for biliary atresia. N. Engl. J. Med. 375, 605–606 (2016).

Zhu, F., Cheng, Z., Zhang, X.-Y. & Liu, C.-L. Rethinking confidence calibration for failure prediction. In European Conference on Computer Vision 518–536 (Springer, 2022).

Zhu, F., Cheng, Z., Zhang, X.-Y. & Liu, C.-L. OpenMix: exploring outlier samples for misclassification detection. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 12074–12083 (2023).

Zou, K. et al. Reliable multimodality eye disease screening via mixture of Student’s t distributions. In International Conference on Medical Image Computing and Computer-Assisted Intervention 596–606 (Springer, 2023).

Kiyasseh, D., Cohen, A., Jiang, C. & Altieri, N. A framework for evaluating clinical artificial intelligence systems without ground-truth annotations. Nat. Commun. 15, 1808 (2024).

Xia, T., Dang, T., Han, J., Qendro, L. & Mascolo, C. Uncertainty-aware health diagnostics via class-balanced evidential deep learning. IEEE J. Biomed. Health Inform. 28, 6417–6428 (2024).

Jiao, J. et al. USFM: A universal ultrasound foundation model generalized to tasks and organs towards label efficient image analysis. Med. Image Anal. 96, 103202 (2024).

Youden, W. J. Index for rating diagnostic tests. Cancer 3, 32–35 (1950).

Lee, M. S. et al. Biliary atresia: color Doppler US findings in neonates and infants. Radiology 252, 282–289 (2009).

Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 8, 679–698 (1986).

Lundberg, S. M. & Lee, S.-I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 30 (2017).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 770–778 (2016).

Deng, J. et al. ImageNet: a large-scale hierarchical image database. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 248–255 (2009).

Zhou, B., Khosla, A., Lapedriza, A., Oliva, A. & Torralba, A. Learning deep features for discriminative localization. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 2921–2929 (2016).

Gal, Y. Uncertainty in Deep Learning. PhD thesis, Univ. Cambridge (2016).

Hendrycks, D. & Gimpel, K. A baseline for detecting misclassified and out-of-distribution examples in neural networks. In International Conference on Learning Representations (2017).

Lakshminarayanan, B., Pritzel, A. & Blundell, C. Simple and scalable predictive uncertainty estimation using deep ensembles. Adv. Neural Inf. Process. Syst. 30, 6405–6416 (2017).

Sensoy, M., Kaplan, L. & Kandemir, M. Evidential deep learning to quantify classification uncertainty. Adv. Neural Inf. Process. Syst. 31, 3183–3193 (2018).

Liu, W., Wang, X., Owens, J. & Li, Y. Energy-based out-of-distribution detection. Adv. Neural Inf. Process. Syst. 33, 21464–21475 (2020).

Ovadia, Y. et al. Can you trust your model’s uncertainty? Evaluating predictive uncertainty under dataset shift. Adv. Neural Inf. Process. Syst. 32, 14003–14014 (2019).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 82271996, 82402299, 62071502 and U1811461), the Major Research Plan of the National Science Foundation of China (No. 92059201), the Guangdong High-level Hospital Construction Fund and the China Postdoctoral Science Foundation (No. 2023M744077).

Author information

Contributions

L.Y.Z. and W.Y.Z. designed the study. W.Y.Z., R.L. and Y.H.Z. performed the experiments and statistical analysis and drafted the manuscript. L.Y.Z. and R.X.W. revised the manuscript. Z.J.W. and G.L.H. collected data and analyzed the experimental results. S.W., B.X., Z.J.T., C.Y., H.L.Y., J.X.L. and W.L. participated in the literature search, data preparation and manuscript editing. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhou, W., Lin, R., Zheng, Y. et al. Multimodal model for the diagnosis of biliary atresia based on sonographic images and clinical parameters. npj Digit. Med. 8, 371 (2025). https://doi.org/10.1038/s41746-025-01694-z
