Abstract

Sebaceous gland carcinoma (SGC) and some poorly differentiated squamous cell carcinomas (SC) of the eyelid may have overlapping clinical and histopathologic features, leading to potential misdiagnosis and delayed treatment. The authors developed a deep learning (DL)-based pathological analysis framework to classify SGC and SC automatically. In total, 282 whole slide images (WSIs) were used for training, validation, and internal testing of the DL framework, and 36 WSIs were obtained from another hospital as an external testing dataset. At the WSI level, the diagnostic accuracy for SGC and SC was 84.85% and 75.12%, respectively, in the internal testing set and 22.22% and 77.78%, respectively, in the external testing set. With the assistance of the AI framework, the accuracy of the pathologists improved from 60.0 ± 9.8% to 76.8 ± 9.6%. This AI-based automatic pathological diagnostic framework achieved the performance of an experienced pathologist and can assist pathologists, especially non-ophthalmic pathologists, in making diagnoses more accurately.

Introduction

The prevalence of eyelid malignancies is about 5.1–15.7 cases/100,000 individuals annually1, accounting for about 5–10% of all skin malignancies2. Eyelid malignancies impair the functional integrity essential for globe protection while carrying mortality risks due to metastatic dissemination to regional lymph nodes and distant organ systems1,3. Squamous cell carcinoma (SC) and sebaceous gland carcinoma (SGC) of the eyelids account for 3.4–12.6% and 0.6–10.2% of eyelid malignancies, respectively. They are the second and third most common malignant tumors of the eyelid, surpassed only by basal cell carcinoma1.

The treatment modalities and prognoses of SGC and SC differ, influencing patient management1,2. For example, SGC, owing to its pagetoid spread and multifocal nature, often requires map biopsies to define tumor margins and guide surgical excision4. This technique is not typically needed for SC, which tends to exhibit more localized growth and is usually managed by wide local excision with clear margins5. Additionally, definitive radiotherapy is generally less effective for SGC than for SC, which tends to be more radiosensitive in clinical practice6,7. Therefore, distinguishing SC from SGC at the onset of medical care is extremely important to avoid adverse outcomes due to delays in diagnosis. However, SGC typically masquerades as chalazion, chronic blepharoconjunctivitis, and blepharitis, which leads to clinical misdiagnosis7,8,9. Around 40–75% of SGC cases are initially misdiagnosed by inexperienced pathologists, and the most common histopathological misdiagnosis of SGC is SC9. Therefore, innovative means need to be developed to reduce the work intensity of pathologists and improve diagnostic efficiency.

Whole slide imaging (WSI) was developed in 1999 and has since served as a valuable tool in the field of digital pathology10. WSI allows a slide to be examined and annotated on a computer screen11. Recently, artificial intelligence (AI)-based computational pathology has been used to analyze digital pathological slides automatically and assist in the traditional pathological examination of slides11. In the past few years, deep learning (DL)-based AI techniques have advanced considerably in their application in medical fields, including ophthalmology12,13. Therefore, this study established DL models to automatically differentiate eyelid SGC and SC based on WSIs.

Results

Development of the diagnostic framework

The AI diagnostic framework consists of five modules (Fig. 1): a preprocessing module, a meaningless tile filter module, a style transfer module, a tile identification module, and a results merging module. The preprocessing module divides the WSIs (40×) into tiles (512 × 512). Then, the meaningless tile filter module filters out background and meaningless tiles, including white tiles, black tiles, black-and-white tiles, blurred tiles, tiles containing broken cells due to improper storage, tiles with a cell ratio <60%14, tiles with non-cellular structures, and contaminated tiles (shown in Supplementary Fig. 1). Three ResNet101 models were trained to filter out these useless tiles, using a dataset of all cut tiles annotated by three pathologists (Q. Zhang, H. Zhang, and Piao). Owing to differences in staining methods, storage methods, storage durations, etc., heterogeneity among WSIs from different healthcare centers could not be avoided. This heterogeneity degrades the performance of each module when the models are given data from external testing datasets. To address this problem, a cycle generative adversarial network (CycleGAN)15 model was applied to generate tiles with the appearance of the training data (training and validation datasets) and a style similar to that of unseen tiles (from the external testing dataset), to prevent performance degradation on the external testing dataset. This auxiliary module did not require additional annotation and can therefore be quickly integrated with the tile identification module. The remaining tiles were subsequently classified as normal (including stroma), SC, or SGC tiles by the tile identification module. Five candidate convolutional neural networks (CNNs) were selected for this module: ResNet10116, ResNeXt10117, Wide ResNet10117,18, DenseNet12119, and EfficientNetb520, with consistent hyperparameter settings (e.g., learning rate, epochs).
The results merging module merges the identification results for all useful tiles of a slide in one of two manners. The final diagnosis is based either on the probability or on the number of each type of tile (normal, SC, and SGC): it compares the average probabilities of SGC and SC, or it compares the numbers of tiles identified as SGC and SC. All models were initialized with weights pre-trained on the ImageNet dataset21.
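The two merging manners can be illustrated with a minimal Python sketch. It is not the released implementation: the function names, the per-tile dictionary layout, the argmax rule for labelling a tile, and the tie-breaking in favour of SC are all assumptions made for illustration, and the tile probabilities are invented.

```python
from collections import Counter

CLASSES = ("normal", "SC", "SGC")


def merge_by_probability(tile_probs):
    """Compare the mean SGC and SC probabilities over all kept tiles."""
    if not tile_probs:
        raise ValueError("no qualified tiles to merge")
    n = len(tile_probs)
    mean_sc = sum(p["SC"] for p in tile_probs) / n
    mean_sgc = sum(p["SGC"] for p in tile_probs) / n
    # Assumption: ties are resolved in favour of SC.
    return "SGC" if mean_sgc > mean_sc else "SC"


def merge_by_count(tile_probs):
    """Compare how many tiles were labelled SGC versus SC (argmax per tile)."""
    if not tile_probs:
        raise ValueError("no qualified tiles to merge")
    votes = Counter(max(CLASSES, key=lambda c: p[c]) for p in tile_probs)
    # Assumption: ties are resolved in favour of SC.
    return "SGC" if votes["SGC"] > votes["SC"] else "SC"


# Hypothetical slide with three qualified tiles (illustrative values only).
example_tiles = [
    {"normal": 0.10, "SC": 0.50, "SGC": 0.40},
    {"normal": 0.10, "SC": 0.50, "SGC": 0.40},
    {"normal": 0.05, "SC": 0.05, "SGC": 0.90},
]
by_probability = merge_by_probability(example_tiles)
by_count = merge_by_count(example_tiles)
print(by_probability, by_count)
```

In this toy slide the two manners disagree: one highly confident SGC tile dominates the average probability, whereas the tile count favours SC. This is why the accuracy is reported separately for each merging manner.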

Training phase: First, the slides from Beijing Tongren Hospital (BTH) were cut into tiles (preprocessing module) to train the three meaningless tile filter models (meaningless tile filter module), whose weights were then fixed. The slides from the Third Medical Center of Chinese PLA General Hospital (TMCPLA) were then also cut into tiles, and all tiles were sifted by the three filter models. Next, CycleGAN was used to produce tiles with an appearance similar to that of the tiles from BTH and a style similar to that of the tiles from TMCPLA (style transfer module). All tiles that passed the three filter models, together with the tiles produced by CycleGAN, were then used to train the models in the tile identification module. Finally, the identification results for all tiles were merged to obtain the result for one slide (results merging module). Testing phase: A slide was cut into tiles, and all tiles were sifted by the three filter models. All tiles that passed the three filter models were identified by the model in the tile identification module. Finally, the identification results for all tiles were merged to obtain the result for the slide.
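The testing phase can be summarized as a short Python sketch. The function `diagnose_slide` and the toy filters, classifier, and merging rule are hypothetical stand-ins chosen for illustration; in the framework itself these roles are played by the trained filter models, the selected CNN, and the results merging module.

```python
def diagnose_slide(tiles, filters, classify, merge):
    """Filter tiles, classify the survivors, and merge to one slide-level label."""
    # A tile is kept only if every filter model accepts it.
    kept = [t for t in tiles if all(keep(t) for keep in filters)]
    if not kept:
        return None  # every tile was unqualified, so the slide is discarded
    return merge([classify(t) for t in kept])


# Toy stand-ins (assumptions): tiles are dicts with precomputed flags and scores.
def not_blank(tile):
    return not tile["blank"]


def not_blurred(tile):
    return not tile["blurred"]


def toy_classify(tile):
    return tile["p_sgc"]  # pretend SGC probability for the tile


def toy_merge(scores):
    return "SGC" if sum(scores) / len(scores) > 0.5 else "SC"


slide = [
    {"blank": True, "blurred": False, "p_sgc": 0.0},
    {"blank": False, "blurred": True, "p_sgc": 0.1},
    {"blank": False, "blurred": False, "p_sgc": 0.8},
    {"blank": False, "blurred": False, "p_sgc": 0.7},
]
toy_filters = [not_blank, not_blurred]
result = diagnose_slide(slide, toy_filters, toy_classify, toy_merge)
empty_result = diagnose_slide(slide[:2], toy_filters, toy_classify, toy_merge)
print(result, empty_result)
```

Returning `None` when no tile survives filtering is one way to represent a slide that cannot be diagnosed because all of its tiles were judged unqualified.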

For the meaningless tile filter module, the models were trained and validated via fivefold cross-validation at the tile level, and the best-performing model was selected for use in the framework. For the tile identification module, however, the models were trained in a subject-independent fivefold cross-validation manner22,23; i.e., the tiles from one subject could not appear in both the training dataset and the testing dataset of the same fold. Because class imbalance was common in this study, class weights were also adopted to offset the effect of the imbalanced datasets. The hyperparameters of all models in the diagnostic framework are shown in Supplementary Table 1.
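A minimal sketch of both ideas is given below. The greedy fold assignment and the inverse-frequency ("balanced") weighting formula are assumptions, since the exact splitting procedure and weighting scheme are not specified here; the subject identifiers and label counts are invented.

```python
from collections import Counter


def subject_independent_folds(tile_subjects, k=5):
    """Return a fold index per tile so that each subject falls in exactly one fold."""
    counts = Counter(tile_subjects)
    fold_sizes = [0] * k
    fold_of = {}
    # Greedy balancing (assumption): place the largest subjects first,
    # always into the fold that currently holds the fewest tiles.
    for subject, n_tiles in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):
        fold = fold_sizes.index(min(fold_sizes))
        fold_of[subject] = fold
        fold_sizes[fold] += n_tiles
    return [fold_of[s] for s in tile_subjects]


def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * m) for c, m in counts.items()}


# Hypothetical data: six subjects contributing 15 tiles in total.
tile_subjects = ["p1"] * 4 + ["p2"] * 3 + ["p3"] * 3 + ["p4"] * 2 + ["p5"] * 2 + ["p6"]
folds = subject_independent_folds(tile_subjects, k=5)

tile_labels = ["normal"] * 6 + ["SC"] * 3 + ["SGC"] * 1
weights = balanced_class_weights(tile_labels)
print(folds)
print(weights)
```

Splitting by subject rather than by tile avoids the optimistic bias that arises when near-identical tiles from one patient appear on both sides of a split.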

Participants

This study obtained WSIs from 198 patients who underwent SGC or SC resection surgery (biopsy samples were not included) and pathological examination at Beijing Tongren Hospital and the Third Medical Center of Chinese PLA General Hospital. The baseline characteristics of patients in the training set, the internal testing set, and the external testing set are described in Supplementary Tables 2 and 3.

Dataset composition

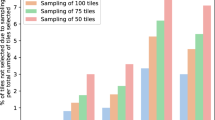

The training dataset consisted of 47 WSIs (from 31 patients), which were cut into 124,360 tiles, with 26 SGC WSIs (from 19 patients) and 21 SC WSIs (from 12 patients). The internal testing dataset consisted of 235 WSIs (from 154 patients), which were cut into 2680 tiles (after filtering), with 202 SGC WSIs (from 129 patients) and 33 SC WSIs (from 25 patients). The external testing dataset was obtained from the Third Medical Center of Chinese PLA General Hospital. The external dataset included 36 WSIs from 13 patients, comprising 27 SGC WSIs from 10 patients and nine SC WSIs from three patients.

Performance of quality control for tiles

Supplementary Tables 4 and 5 and Supplementary Fig. 2 present the performance of the meaningless tile filter module in internal cross-validation. Because the black, white, and black-and-white tiles were easy to identify, the accuracy of the first and second filter models surpassed 0.99. The mean accuracy of the third filter model surpassed 0.83 for all six classes (including others). The third filter model had to distinguish many more classes than the first and second filter models; thus, it did not reach their performance. Additionally, misclassification among the five unqualified classes (blurred tiles, tiles containing broken cells due to improper storage, tiles with a cell ratio <60%, tiles with non-cellular structures, and contaminated tiles) did not disturb the performance of the diagnosis module, since tiles in any of these classes are discarded, and the accuracy for others (qualified tiles) was almost 0.8. Notably, one SGC WSI in the internal testing dataset was discarded because all of its tiles were evaluated as unqualified by the meaningless tile filter module. Therefore, 234 WSIs (201 SGC and 33 SC) were ultimately used as the internal testing set.

Performance for differential classification at the tile level

The confusion matrices of all five types of CNNs in the tile identification module are shown in Fig. 2. The detailed performance metrics for overall performance and each class are shown in Supplementary Tables 6 and 7. The receiver operating characteristic and precision-recall curves for each class in internal cross-validation are shown in Supplementary Fig. 3. ResNeXt performed the best, with a mean accuracy of 0.9054, and the mean accuracy of all five models was >0.83. The area under the receiver operating characteristic curve (AUROC) values of all five models for all three classes were >0.97.

Performance for differential classification at the WSI level

The heat maps in the internal testing dataset at the WSI level are shown in Fig. 3, and the accuracy for the whole internal and external testing datasets is shown in Table 1. The accuracy is reported for each of the two merging manners in the results merging module. Except for DenseNet, which performed the worst, the differences among the models in the internal testing dataset were not as prominent as those in the external testing dataset. For DenseNet, the accuracy under the two merging manners was 30/33 and 30/33 for SC and 90/201 and 87/201 for SGC in the internal testing dataset, and 9/9 and 9/9 for SC and 0 and 0 for SGC in the external testing dataset. Wide ResNet performed the best at the WSI level (bold in Table 1), especially for the external testing dataset.

Comparison between pathologists without and with AI assistance

As shown in Fig. 4, the accuracy of the pathologists’ assessments improved with the assistance of the AI framework. The accuracy of each pathologist participating in the comparison was statistically analyzed. With AI assistance, the diagnostic accuracy of the five pathologists for 25 WSIs increased from 60.0 ± 9.8% to 76.8 ± 9.6% (mean ± standard deviation), a statistically significant difference (P = 0.002, t = 7.203, degrees of freedom = 4). The overall diagnostic accuracy on the 25 WSIs for resident pathologist 1, attending pathologist 2, attending pathologist 3, associate chief pathologist 4, and associate chief pathologist 5 improved by 12.0, 24.0, 12.0, 20.0, and 16.0 percentage points, respectively. For resident pathologist 1, the diagnostic accuracy for SGC and SC improved by 6.67 and 20 percentage points (from 66.7% to 73.3% and from 30% to 50%, respectively). For attending pathologist 2, the diagnostic accuracy for SGC and SC improved by 26.7 and 20 percentage points (from 60% to 86.7% and from 30% to 50%, respectively). For attending pathologist 3, the diagnostic accuracy for SGC and SC improved by 13.3 and 10 percentage points (from 80% to 93.3% and from 40% to 50%, respectively). For associate chief pathologist 4, the diagnostic accuracy for both SGC and SC improved by 20.0 percentage points (from 73.3% to 93.3% and from 50.0% to 70.0%, respectively). For associate chief pathologist 5, the diagnostic accuracy for SGC improved by 26.7 percentage points (from 66.7% to 93.3%), with no change in the diagnostic accuracy for SC (80.0%). The changes in diagnostic accuracy for the five pathologists using AI assistance are outlined in Table 2. As shown in Fig. 5, a significant reduction in average diagnostic time was observed only for associate chief pathologist 2, with a decrease of 15.6 s (from 32.24 s to 16.64 s) (P < 0.0001). The time required for diagnosis with or without AI did not differ significantly among the other pathologists.
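As a worked check, the reported t statistic can be reproduced from the five per-pathologist improvements in overall accuracy stated above. The sketch below uses only the Python standard library; the P value is not recomputed because the standard library has no Student t distribution.

```python
import math
import statistics

# Per-pathologist improvement in overall accuracy (percentage points),
# taken from the text for pathologists 1 to 5.
improvements = [12.0, 24.0, 12.0, 20.0, 16.0]

n = len(improvements)
mean_diff = statistics.mean(improvements)   # mean paired difference
sd_diff = statistics.stdev(improvements)    # sample SD of the differences
t_stat = mean_diff / (sd_diff / math.sqrt(n))
degrees_of_freedom = n - 1

print(f"mean difference = {mean_diff:.1f}")
print(f"t = {t_stat:.3f}, df = {degrees_of_freedom}")
```

The mean difference of 16.8 percentage points matches the change from 60.0% to 76.8%, and the statistic agrees with the reported t = 7.203 with 4 degrees of freedom.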

Discussion

To our knowledge, this is the first study to develop a DL-based pathological classification system for eyelid SGC and SC. The DL framework demonstrated stable capabilities in achieving rapid differential diagnosis at the tile level and the WSI level, showing sensitivity and specificity comparable to those of experienced pathologists.

Computer-aided pathological diagnosis systems have been shown to help in the subtype classification of lung cancer24 and in assessment for metastatic disease in lymph nodes25. They may be combined with genomic data to establish a multimodal recurrence-scoring prediction system for renal cell carcinoma26. These studies have shown that AI models may assist pathologists or potentially extract additional features that may provide additional prognostic information not immediately visible on standard histopathologic examination.

In 2020, Wang et al.27 first applied computer-aided pathological diagnosis technology in ophthalmology (a DL-based system for eyelid malignant melanoma detection). The methodological parallels between our approach and the previous study illustrate the potential of AI-driven techniques for pathological applications in ophthalmology.

The framework described herein showed good discriminative ability at all levels. Misdiagnosis rates ranging from 40–75% have been reported in the histopathological assessment of SGC when general pathologists initially evaluate it without subspecialty training in ophthalmic pathology7,9. However, the algorithm can automatically determine the diagnostic result for each tile and merge them for the whole slide, which can be completed in seconds. This study included more than two slides from the same patient in the training set, validation set, and external testing set to test the comprehensive ability.

This DL-based framework can serve as a reliable tool to assist pathologists in improving the efficiency and accuracy of diagnosis with WSIs of eyelid SC and SGC. The tumor heatmap generated by the proposed framework provides a spatially resolved probability landscape across gigapixel WSIs and may assist in visually revealing the location of the tumor. Our automatic diagnostic framework could repeatedly diagnose a slide within seconds, which may reduce the heavy workload of pathologists to some extent. This system may also help promote medical equity and support remote medical care in areas with inadequate medical resources.

The framework also exhibited excellent generalizability. The diversity of slide color and quality due to different slide preparation methods, different scanners, and different digital pathology slide storage formats has hindered the adoption of AI-assisted pathology diagnosis models. Paraffin sections and frozen sections from the multicenter dataset, which were scanned by two different scanners and stored in two formats, were included to train the model and validate its generalizability.

Additionally, this model has a quality control function and can automatically discard unqualified parts. Roughly obtained, poorly fixed, or improperly processed slides can interfere with the ability of pathologists to assess morphology28. Therefore, quality control, including in digital pathology, is crucial for pathological diagnosis29. Thus, the model was designed to remove unqualified images in the first step to prevent the machine from judging the nature of tumors on unqualified images.

The WSIs misclassified by the DL framework in the internal testing set were analyzed. Notably, three of these WSIs (one SGC slide and two SC slides) also confused pathologists, who could not evaluate them accurately at the time. These poorly differentiated pathological sections, which lacked classical histological features, also confused chief pathologists and were confirmed through multicenter expert consultations. An advantage of AI is that it does not require the time needed to train an experienced pathologist and is not affected by subjectivity in tissue pathology analysis21. However, the AI model for pathology is subject to certain limitations. The training of these models is limited by the quality and quantity of the training set, as well as the heterogeneity of the tumors21.

This study further examined the responses of each pathologist in the comparison of diagnoses with and without AI assistance. It was found that four out of five pathologists marked one slide as “undecidable” after AI assistance. This occurred because the model identified all regions as cancerous when they were actually normal, while the true cancerous regions were removed as low-quality tiles. This indicates a need for enhancement in both classification accuracy and quality control within our model.

Our study had several limitations. First, the sample size was small, and no publicly available dataset containing digital pathological slides of these two types of malignant eyelid tumors was found for training or validation. However, our sample size was larger than those used for previous pathological differentiation systems for eyelid tumors27,30, and it was also acceptable compared to similar published studies31,32. Second, since the study employed five preexisting CNNs, the internal process is a “black box” and not explainable21. Third, the quality control system could not achieve 100% specificity, implying that a small portion of high-quality tiles were also screened out. For example, the WSIs discarded by the meaningless tile filter module may interfere with the pathologist’s judgment to some extent. A large portion (about 30%) of the areas met our quality control requirements and were meaningful for pathological diagnosis. However, our conclusion is reliable, and this deep learning model can improve the diagnostic accuracy of pathologists. Fourth, this study prospectively collected slides from one external validation hospital; although the production methods and scanners used were different, the population was also from China. The performance of the model on slides from other populations needs to be validated. Fifth, this study only performed a three-class classification task, addressing the most difficult pathological differentiation between two types of eyelid malignant tumors. Our research group aims to expand the pathological classification to other benign and malignant eyelid tumors based on this research. In addition, biopsy specimens were not included. Nevertheless, the performance of the AI diagnostic framework on intraoperative frozen section specimens and FFPE surgical resection samples was satisfactory.

The findings of our study suggest that deep learning models have the potential to be applied in the differential diagnosis of malignant eyelid tumors that may show some overlapping histopathological features. In future studies, this methodology will be adapted to develop a multiclass system for diverse eyelid tumors to assist in AI-driven classification in ophthalmic pathology.

Materials and methods

Study population and data preparation

This study involved human participants and was approved by the Ethics Committee of Beijing Tongren Hospital, Capital Medical University (TRECKY2018-056-GZ(2022)-07), and the Third Medical Center of Chinese PLA General Hospital. Participants gave informed consent before taking part in the study. This study collected pathological slides (including formalin-fixed paraffin-embedded (FFPE) sections and intraoperative frozen sections) and medical records of patients who were diagnosed with SGC or SC and underwent surgery at Beijing Tongren Hospital (BTH) from June 2006 to November 2022. The gold standard for diagnosis in this study was histopathological confirmation by two independent subspecialty-trained ophthalmic pathologists (with >20 years of experience in ocular pathology), with the final diagnostic consensus reached through multidisciplinary consultation, including treating ophthalmologists. Immunohistochemical markers (EMA, AR, and Ki-67) were systematically applied in diagnostically challenging cases, followed by molecular testing (P53 mutation analysis) when required. In total, 228 H&E-stained sections from 148 eyelid SGC patients and 54 H&E-stained sections from 37 eyelid SC patients were included, and all selected slides were scanned by separate technicians into digital pathological slides using digital slide scanners (NanoZoomer XR, Hamamatsu, Japan and Pannoramic DESK P250, 3DHISTECH, Hungary). The schematic flowchart of data collection is shown in Supplementary Fig. 4. All WSIs were stored in the MRXS format (193 slides) or NDPI format (89 slides). The 47 WSIs (26 SGC and 21 SC) were divided into training and validation sets in a subject-independent manner (the slides from one subject were never split between the training and validation datasets).
For the training set, two pathological slide processing software programs (SlideViewer, v.2.4.0, 3DHISTECH and QuPath, v.0.3.0, https://qupath.github.io) were used for delineating and labeling the regions of different kinds of tissue, including the following three classes: normal tissue (including stroma), the SGC tumor region, and the SC tumor region. Two experienced pathologists (Q. Zhang and H. Zhang; >10 years of experience) were responsible for labeling WSIs and reviewing each other’s assessments. In case of a dispute between the two pathologists, the final decision was made by the chief physician of the department of pathology (Piao).

Patient data with complete medical records were obtained from the Third Medical Center of Chinese PLA General Hospital (TMCPLA) for the period May 2017 to May 2023 to construct an independent external testing set to test the generalization ability of our DL model. In total, 36 slides (including FFPE pathological slides and intraoperative frozen sections) were scanned (27 SGC WSIs from 10 patients and nine SC WSIs from three patients).

Statistical analysis of baseline characteristics of patients was performed using SPSS software (version 25.0, IBM Corp., Armonk, NY, USA). Categorical variables were described as frequency (percentage), and continuous variables with normal and skewed distributions were presented as mean ± standard deviation and median (interquartile range), respectively.

Training

All models were trained on a server with two NVIDIA 3090 GPUs, an Intel(R) Xeon(R) Gold 5218R CPU @ 2.10 GHz, and 256 GB of memory. SGD33 (stochastic gradient descent) was used as the optimization method.

Assisting pathologists in reading slides

To assess the clinical utility of our DL framework in aiding pathologists with diagnosis, five pathologists with varying degrees of clinical experience (two associate chief pathologists with >15 years of experience, two attending pathologists with >10 years of experience, and one resident pathologist with >5 years of experience) were recruited to diagnose 25 challenging digital pathological slides (15 SGC slides from 12 patients and 10 SC slides from nine patients) from the internal testing dataset. Patient information was anonymized, and pathologists were instructed to perform binary classifications. First, the pathologists independently classified the slides; after a 45-day washout period, they classified the slides again, using heat maps of the whole slide and classified tiles provided by the DL-based framework as clues. Specifically, in the second round, the pathologists were provided with the classification results of tiles automatically categorized by the framework.

Performance metrics

In cross-validation, for the meaningless tile filter module, row-normalized confusion matrices, accuracy, sensitivity, specificity, and AUROC were used to evaluate the performance. For the tile identification module, row-normalized confusion matrices were used for overall evaluation, and the accuracy, sensitivity, specificity, and AUROC were used to evaluate the performance for each class34,35. The corresponding confidence intervals were calculated via point estimates36. During the validation on the internal and external testing datasets, the diagnostic accuracies for SC and SGC slides were summarized. For the time cost of reading slides, the Wilcoxon signed-rank test was used to evaluate the difference between readings without AI assistance and those with AI assistance37. A paired-samples t-test was used to compare the accuracy of pathologists diagnosing with versus without AI assistance. A P value of <0.05 was considered statistically significant. All statistical analyses were performed using MATLAB R2016a (https://www.mathworks.com/), GraphPad Prism 5 (https://www.graphpad.com/), and SPSS software (version 25.0, IBM Corp., Armonk, NY, USA).
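For reference, the per-class metrics can be derived from a confusion matrix in a one-vs-rest fashion, as in the following sketch. The helper names and the 3 × 3 matrix are hypothetical and do not reproduce any result of this study; rows are assumed to be true classes and columns predicted classes.

```python
def row_normalize(cm):
    """Divide each row by its total so each row shows per-class recall fractions."""
    return [[v / sum(row) if sum(row) else 0.0 for v in row] for row in cm]


def one_vs_rest_metrics(cm, k):
    """Sensitivity, specificity, and accuracy for class k against all other classes."""
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                    # class-k tiles predicted as another class
    fp = sum(row[k] for row in cm) - tp     # other tiles predicted as class k
    tn = total - tp - fn - fp
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / total,
    }


# Hypothetical tile counts; class order: normal, SC, SGC.
toy_cm = [
    [90, 5, 5],
    [10, 80, 10],
    [4, 6, 90],
]
normalized = row_normalize(toy_cm)
sgc_metrics = one_vs_rest_metrics(toy_cm, k=2)
print(normalized[2])
print(sgc_metrics)
```

Row normalization makes classes of different sizes directly comparable, which is useful when the tile classes are imbalanced.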

Data availability

The datasets generated or analyzed during this study are available from the corresponding author upon reasonable request.

Code availability

The code for training the models in the current research is available from https://github.com/Hugo0512/AI4SGCSC.

References

Yin, V. T., Merritt, H. A., Sniegowski, M. & Esmaeli, B. Eyelid and ocular surface carcinoma: diagnosis and management. Clin. Dermatol. 33, 159–169 (2015).

Silverman, N. & Shinder, R. What’s new in eyelid tumors. Asia Pac. J. Ophthalmol. 6, 143–152 (2017).

Cook, B. E. Jr. & Bartley, G. B. Epidemiologic characteristics and clinical course of patients with malignant eyelid tumors in an incidence cohort in Olmsted County, Minnesota. Ophthalmology 106, 746–750 (1999).

Shields, C. L., Naseripour, M., Shields, J. A. & Eagle, R. C. Jr. Topical mitomycin-C for pagetoid invasion of the conjunctiva by eyelid sebaceous gland carcinoma. Ophthalmology 109, 2129–2133 (2002).

Sun, M. T. et al. Periocular squamous cell carcinoma: TNM staging and recurrence. Ophthalmology 122, 1512–1516 (2015).

Reifler, D. M. & Hornblass, A. Squamous cell carcinoma of the eyelid. Surv. Ophthalmol. 30, 349–365 (1986).

Shields, J. A., Demirci, H., Marr, B. P., Eagle, R. C. Jr. & Shields, C. L. Sebaceous carcinoma of the ocular region: a review. Surv. Ophthalmol. 50, 103–122 (2005).

Shields, J. A., Demirci, H., Marr, B. P., Eagle, R. C. Jr. & Shields, C. L. Sebaceous carcinoma of the eyelids: personal experience with 60 cases. Ophthalmology 111, 2151–2157 (2004).

Kaliki, S. et al. Sebaceous gland carcinoma of the eyelid: clinicopathological features and outcome in Asian Indians. Eye 29, 958–963 (2015).

Ho, J. et al. Use of whole slide imaging in surgical pathology quality assurance: design and pilot validation studies. Hum. Pathol. 37, 322–331 (2006).

Qiao, Y. et al. Multi-modality artificial intelligence in digital pathology. Brief Bioinform. 23, https://doi.org/10.1093/bib/bbac367 (2022).

Dong, L. et al. Artificial intelligence for screening of multiple retinal and optic nerve diseases. JAMA Netw. Open 5, e229960 (2022).

Jiang, X., Dong, L., Luo, L., Zhang, K. & Li, D. Retinal photograph-based deep learning system for detection of thyroid-associated ophthalmopathy. J. Craniofac. Surg. https://doi.org/10.1097/scs.0000000000009919 (2023).

Mobadersany, P. et al. Predicting cancer outcomes from histology and genomics using convolutional networks. Proc. Natl. Acad. Sci. USA 115, E2970–E2979 (2018).

Zhu, J.-Y., Park, T., Isola, P. & Efros, A. A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proc. IEEE International Conference on Computer Vision (ICCV) 2242–2251 (IEEE, 2017).

Kai Zhang, Y. Z. et al. Anatomical sites identification in both ordinary and capsule gastroduodenoscopy via deep learning. Biomed. Signal Process. Control 90, https://doi.org/10.1016/j.bspc.2023.105911 (2024).

Zhang, H. et al. Quickly diagnosing Bietti crystalline dystrophy with deep learning. iScience 27, 110579 (2024).

Zagoruyko, S. & Komodakis, N. Wide residual networks. arXiv:1605.07146 https://doi.org/10.48550/arXiv.1605.07146 (2016).

Zhang, Y. et al. Diagnosing chronic atrophic gastritis by gastroscopy using artificial intelligence. Dig. Liver Dis. 52, 566–572 (2020).

Tan, M. & Le, Q. V. EfficientNet: rethinking model scaling for convolutional neural networks. arXiv:1905.11946 https://doi.org/10.48550/arXiv.1905.11946 (2019).

Bera, K., Schalper, K. A., Rimm, D. L., Velcheti, V. & Madabhushi, A. Artificial intelligence in digital pathology—new tools for diagnosis and precision oncology. Nat. Rev. Clin. Oncol. 16, 703–715 (2019).

Zhang, R. et al. Automatic retinoblastoma screening and surveillance using deep learning. Br. J. Cancer 129, 466–474 (2023).

Hui, S. Q. et al. Noninvasive identification of benign and malignant eyelid tumors using clinical images via deep learning system. J. Big Data 9, 14 (2022).

Coudray, N. et al. Classification and mutation prediction from non-small cell lung cancer histopathology images using deep learning. Nat. Med. 24, 1559–1567 (2018).

Wu, S. et al. Artificial intelligence-based model for lymph node metastases detection on whole slide images in bladder cancer: a retrospective, multicentre, diagnostic study. Lancet Oncol. https://doi.org/10.1016/S1470-2045(23)00061-X (2023).

Gui, C. P. et al. Multimodal recurrence scoring system for prediction of clear cell renal cell carcinoma outcome: a discovery and validation study. Lancet Digit. Health 5, e515–e524 (2023).

Wang, L. et al. Automated identification of malignancy in whole-slide pathological images: identification of eyelid malignant melanoma in gigapixel pathological slides using deep learning. Br. J. Ophthalmol. 104, 318–323 (2020).

Wick, M. R. The hematoxylin and eosin stain in anatomic pathology—an often-neglected focus of quality assurance in the laboratory. Semin Diagn. Pathol. 36, 303–311 (2019).

Janowczyk, A., Zuo, R., Gilmore, H., Feldman, M. & Madabhushi, A. HistoQC: An open-source quality control tool for digital pathology slides. JCO Clin. Cancer Inform. 3, 1–7 (2019).

Luo, Y. et al. Deep learning-based fully automated differential diagnosis of eyelid basal cell and sebaceous carcinoma using whole slide images. Quant. Imaging Med. Surg. 12, 4166–4175 (2022).

Ehteshami Bejnordi, B. et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA 318, 2199–2210 (2017).

Pan, X. et al. The artificial intelligence-based model ANORAK improves histopathological grading of lung adenocarcinoma. Nat. Cancer 5, 347–363 (2024).

Bottou, L. Stochastic gradient descent tricks. In Neural Networks: Tricks of the Trade: Second Edition (eds Montavon, G., Orr, G. B. & Müller, K.-R.) 421–436 (Springer Berlin Heidelberg, 2012).

Zhang, Y. et al. Deep transfer learning from ordinary to capsule esophagogastroduodenoscopy for image quality controlling. Eng. Rep. 6, e12776 (2024).

Du, K. et al. Deep learning system for screening AIDS-related cytomegalovirus retinitis with ultra-wide-field fundus images. Heliyon 10, e30881 (2024).

Schmidt, H., Spieker, A. J., Luo, T., Szymczak, J. E. & Grande, D. Variability in primary care physician attitudes toward Medicaid work requirement exemption requests made by patients with depression. JAMA Health Forum 2, e212932 (2021).

Rosner, B., Glynn, R. J. & Lee, M. L. The Wilcoxon signed rank test for paired comparisons of clustered data. Biometrics 62, 185–192 (2006).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 82071005, 82220108017, 82141128, 82401283), the Sanming Project of Medicine in Shenzhen (No. SZSM202311018), the Capital Health Research and Development of Special (2020-1-2052), the Science & Technology Project of Beijing Municipal Science & Technology Commission (Z201100005520045), the priming scientific research foundation for the junior researcher in Beijing Tongren Hospital, Capital Medical University (2023-YJJ-ZZL-003) and the Scientific Research Common Program of Beijing Municipal Commission of Education (No. KM202410025011). We thank Dr. Xiaoli Zhao and Dr. Suping Cui (Department of Pathology, Beijing Tongren Hospital) for participating in the “Comparison between pathologists without and with AI assistance” part of the study, and Dr. Yan Hei (Department of Pathology, the Third Medical Center of Chinese PLA General Hospital) for her guidance and participation in collecting data for this study.

Author information

Contributions

D.M.L., Y.S.P., and X.J.Y. initiated the study. J.L.G., K.Z., L.D., Q.Z., and S.Q.H. designed and performed the experiments. K.Z. and Z.X.L. wrote the code. S.T.S., H.Z., and Y.Y.Y. collected experimental data. R.H.Z., X.J., and M.Y.W. reviewed the experimental results. D.M.L., Q.Z., L.D., R.H.Z., and X.J. critically reviewed and commented on the manuscript. All authors contributed to the preparation of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Geng, J., Zhang, K., Dong, L. et al. AI assistance enhances histopathologic distinction between sebaceous and squamous cell carcinoma of the eyelid. npj Digit. Med. 8, 406 (2025). https://doi.org/10.1038/s41746-025-01775-z

DOI: https://doi.org/10.1038/s41746-025-01775-z