Abstract

Deep learning methods are considered promising for accelerating molecular screening in drug discovery and materials design. Because labelled data are scarce, various self-supervised molecular pre-training methods have been proposed. Many existing methods borrow common pre-training tasks from computer vision and natural language processing, but they often overlook the fundamental physical principles governing molecules. In contrast, denoising-based pre-training can be interpreted as equivalent to learning molecular forces, but the limited noise distribution introduces bias into the modelled molecular distribution. To address this issue, we introduce a molecular pre-training framework called fractional denoising (Frad), which decouples noise design from the constraints imposed by the force learning equivalence. The noise thereby becomes customizable, so that chemical priors can be incorporated to substantially improve the modelling of the molecular distribution. Experiments demonstrate that our framework consistently outperforms existing methods, establishing state-of-the-art results across force prediction, quantum chemical property and binding affinity tasks. The refined noise design enhances force accuracy and sampling coverage, which together yield physically consistent molecular representations and, ultimately, superior predictive performance.
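The decoupling described in the abstract can be illustrated with a small sketch. This is a minimal illustration under stated assumptions, not the authors' implementation (that lives in the repository listed under Code availability). The function names, the atom indices and the use of a single torsion-like rotation as a stand-in for a chemically informed perturbation are all hypothetical. The symbols σ and τ are borrowed from the Extended Data captions, and reading σ as the scale of the chemically informed noise and τ as the scale of the Gaussian coordinate noise is an assumption. The point shown is only that the regression target is the Gaussian part of the noise, so the first perturbation can be designed freely.

```python
import math
import random


def rotate_about_axis(p, a, b, angle):
    """Rotate point p about the axis through a and b by `angle` radians
    (Rodrigues' rotation formula)."""
    k = [b[i] - a[i] for i in range(3)]
    norm = math.sqrt(sum(c * c for c in k))
    k = [c / norm for c in k]
    v = [p[i] - a[i] for i in range(3)]
    cross = [k[1] * v[2] - k[2] * v[1],
             k[2] * v[0] - k[0] * v[2],
             k[0] * v[1] - k[1] * v[0]]
    dot = sum(k[i] * v[i] for i in range(3))
    c, s = math.cos(angle), math.sin(angle)
    return [a[i] + v[i] * c + cross[i] * s + k[i] * dot * (1 - c)
            for i in range(3)]


def fractional_denoising_pair(coords, bond, moving, sigma, tau, rng):
    """Build one (noisy_coords, target) pair. Hypothetical helper.

    Step 1 (assumed stand-in for chemically informed noise): rotate the atoms
    listed in `moving` about the `bond` axis by an angle drawn from N(0, sigma^2).
    Step 2: add isotropic Gaussian coordinate noise of scale tau to every atom.
    The target is the standard-normal noise from step 2 only.
    """
    i, j = bond
    angle = rng.gauss(0.0, sigma)
    x_a = [rotate_about_axis(p, coords[i], coords[j], angle) if n in moving
           else list(p)
           for n, p in enumerate(coords)]
    eps = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in coords]
    noisy = [[x_a[n][d] + tau * eps[n][d] for d in range(3)]
             for n in range(len(coords))]
    return noisy, eps


if __name__ == "__main__":
    # Toy four-atom geometry; coordinates are illustrative, not real data.
    coords = [[0.0, 0.0, 0.0], [1.5, 0.0, 0.0],
              [2.0, 1.0, 0.0], [-0.5, 1.0, 0.0]]
    noisy, target = fractional_denoising_pair(
        coords, bond=(0, 1), moving={2}, sigma=0.5, tau=0.04,
        rng=random.Random(0))
    print(noisy)
    print(target)
```

Setting `sigma=0.0` in this sketch removes the first step, so the pair reduces to plain coordinate denoising, which matches the way the Extended Data captions label the Coord baseline (σ = 0).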

Data availability

The pre-training and fine-tuning data used in this work are available at the following links: PCQM4Mv2 (ref. 47), https://ogb.stanford.edu/docs/lsc/pcqm4mv2/ and https://figshare.com/articles/dataset/MOL_LMDB/24961485 (ref. 59); QM9 (refs. 42,43), https://figshare.com/collections/Quantum_chemistry_structures_and_properties_of_134_kilo_molecules/978904 (ref. 60); MD17 (ref. 35) and MD22 (ref. 37), http://www.sgdml.org/#datasets; ISO17 (ref. 36), http://quantum-machine.org/datasets/; LBA (ref. 44), https://zenodo.org/records/4914718 (ref. 61). Source data for the figures in this work are available via figshare at https://doi.org/10.6084/m9.figshare.25902679.v1 (ref. 62) and are provided with this paper.

Code availability

Source code for Frad pre-training and fine-tuning is available via GitHub at https://github.com/fengshikun/FradNMI. The pre-trained models are available via Zenodo at https://zenodo.org/records/12697467 (ref. 63).

References

Butler, K. T., Davies, D. W., Cartwright, H., Isayev, O. & Walsh, A. Machine learning for molecular and materials science. Nature 559, 547–555 (2018).

Wong, F. et al. Discovery of a structural class of antibiotics with explainable deep learning. Nature 626, 177–185 (2023).

Li, J. et al. AI applications through the whole life cycle of material discovery. Matter 3, 393–432 (2020).

Deng, J. et al. A systematic study of key elements underlying molecular property prediction. Nat. Commun. 14, 6395 (2023).

Stokes, J. M. et al. A deep learning approach to antibiotic discovery. Cell 180, 688–702 (2020).

Dowden, H. & Munro, J. Trends in clinical success rates and therapeutic focus. Nat. Rev. Drug Discov. 18, 495–496 (2019).

Galson, S. et al. The failure to fail smartly. Nat. Rev. Drug Discov. 20, 259–260 (2021).

Pyzer-Knapp, E. O. et al. Accelerating materials discovery using artificial intelligence, high performance computing and robotics. npj Comput. Mater. 8, 84 (2022).

Schneider, G. Automating drug discovery. Nat. Rev. Drug Discov. 17, 97–113 (2018).

Chen, T., Kornblith, S., Norouzi, M. & Hinton, G. A simple framework for contrastive learning of visual representations. In International Conference on Machine Learning 1597–1607 (PMLR, 2020).

He, K. et al. Masked autoencoders are scalable vision learners. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 16000–16009 (IEEE, 2022).

Dai, A. M. & Le, Q. V. Semi-supervised sequence learning. Adv. Neural Inf. Process. Syst. 28, 3079–3087 (2015).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In Proc. 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers) 4171–4186 (Association for Computational Linguistics, 2019).

Wang, Y., Wang, J., Cao, Z. & Barati Farimani, A. Molecular contrastive learning of representations via graph neural networks. Nat. Mach. Intell. 4, 279–287 (2022).

Moon, K., Im, H.-J. & Kwon, S. 3D graph contrastive learning for molecular property prediction. Bioinformatics 39, 371 (2023).

Fang, Y. et al. Knowledge graph-enhanced molecular contrastive learning with functional prompt. Nat. Mach. Intell. 5, 542–553 (2023).

Stärk, H. et al. 3D Infomax improves GNNs for molecular property prediction. In International Conference on Machine Learning 20479–20502 (PMLR, 2022).

Liu, S. et al. Pre-training molecular graph representation with 3D geometry. In International Conference on Learning Representations Workshop on Geometrical and Topological Representation Learning https://openreview.net/pdf?id=xQUe1pOKPam (ICLR, 2022).

Li, S., Zhou, J., Xu, T., Dou, D. & Xiong, H. GeomGCL: geometric graph contrastive learning for molecular property prediction. In Proc. AAAI Conference on Artificial Intelligence 4541–4549 (PKP Publishing Services, 2022).

Zeng, X. et al. Accurate prediction of molecular properties and drug targets using a self-supervised image representation learning framework. Nat. Mach. Intell. 4, 1004–1016 (2022).

Zhang, X.-C. et al. MG-BERT: leveraging unsupervised atomic representation learning for molecular property prediction. Brief. Bioinf. 22, bbab152 (2021).

Ross, J. et al. Large-scale chemical language representations capture molecular structure and properties. Nat. Mach. Intell. 4, 1256–1264 (2022).

Xia, J. et al. Mole-BERT: rethinking pre-training graph neural networks for molecules. In The Eleventh International Conference on Learning Representations https://openreview.net/pdf/21b1918178090348ffb159460ee696cfe8360dd2.pdf (ICLR, 2023).

Rong, Y. et al. Self-supervised graph transformer on large-scale molecular data. Adv. Neural Inf. Process. Syst. 33, 12559–12571 (2020).

Fang, X. et al. Geometry-enhanced molecular representation learning for property prediction. Nat. Mach. Intell. 4, 127–134 (2022).

Zhou, G. et al. Uni-Mol: a universal 3D molecular representation learning framework. In The Eleventh International Conference on Learning Representations https://openreview.net/pdf?id=IfFZr1gl0b (ICLR, 2023).

Wang, H. et al. Scientific discovery in the age of artificial intelligence. Nature 620, 47–60 (2023).

Zaidi, S. et al. Pre-training via denoising for molecular property prediction. In International Conference on Learning Representations https://openreview.net/pdf?id=tYIMtogyee (ICLR, 2023).

Luo, S. et al. One transformer can understand both 2D & 3D molecular data. In The Eleventh International Conference on Learning Representations https://openreview.net/pdf?id=vZTp1oPV3PC (ICLR, 2023).

Liu, S., Guo, H. & Tang, J. Molecular geometry pretraining with SE(3)-invariant denoising distance matching. In The Eleventh International Conference on Learning Representations https://openreview.net/pdf?id=CjTHVo1dvR (ICLR, 2023).

Jiao, R., Han, J., Huang, W., Rong, Y. & Liu, Y. Energy-motivated equivariant pretraining for 3D molecular graphs. Proc. of the AAAI Conference on Artificial Intelligence 37, 8096–8104 (2023).

Feng, R. et al. May the force be with you: unified force-centric pre-training for 3D molecular conformations. Adv. Neural Inf. Process. Syst. 36, 72750–72760 (2023).

Thölke, P. & De Fabritiis, G. Equivariant transformers for neural network based molecular potentials. In International Conference on Learning Representations https://openreview.net/pdf?id=zNHzqZ9wrRB (ICLR, 2022).

Boltzmann, L. Studien über das Gleichgewicht der lebendigen Kraft. Wissen. Abh. 1, 49–96 (1868).

Chmiela, S. et al. Machine learning of accurate energy-conserving molecular force fields. Sci. Adv. 3, 1603015 (2017).

Schütt, K. et al. SchNet: a continuous-filter convolutional neural network for modeling quantum interactions. Adv. Neural Inf. Process. Syst. 30, 992–1002 (Curran Associates, 2017).

Chmiela, S. et al. Accurate global machine learning force fields for molecules with hundreds of atoms. Sci. Adv. 9, 0873 (2023).

Chmiela, S., Sauceda, H. E., Müller, K.-R. & Tkatchenko, A. Towards exact molecular dynamics simulations with machine-learned force fields. Nat. Commun. 9, 3887 (2018).

Wang, Y., Xu, C., Li, Z. & Barati Farimani, A. Denoise pretraining on nonequilibrium molecules for accurate and transferable neural potentials. J. Chem. Theory Comput. 19, 5077–5087 (2023).

Smith, J. S., Isayev, O. & Roitberg, A. E. ANI-1: an extensible neural network potential with DFT accuracy at force field computational cost. Chem. Sci. 8, 3192–3203 (2017).

Smith, J. S. et al. The ANI-1ccx and ANI-1x data sets, coupled-cluster and density functional theory properties for molecules. Sci. Data 7, 134 (2020).

Ruddigkeit, L., Van Deursen, R., Blum, L. C. & Reymond, J.-L. Enumeration of 166 billion organic small molecules in the chemical universe database GDB-17. J. Chem. Inf. Model. 52, 2864–2875 (2012).

Ramakrishnan, R., Dral, P. O., Rupp, M. & Von Lilienfeld, O. A. Quantum chemistry structures and properties of 134 kilo molecules. Sci. Data 1, 140022 (2014).

Townshend, R. et al. ATOM3D: tasks on molecules in three dimensions. In Proc. Neural Information Processing Systems Track on Datasets and Benchmarks (2021).

Landrum, G. et al. RDKit: a software suite for cheminformatics, computational chemistry, and predictive modeling. Greg Landrum (2013).

Chmiela, S., Sauceda, H. E., Poltavsky, I., Müller, K.-R. & Tkatchenko, A. sGDML: constructing accurate and data efficient molecular force fields using machine learning. Comput. Phys. Commun. 240, 38–45 (2019).

Nakata, M. & Shimazaki, T. PubChemQC project: a large-scale first-principles electronic structure database for data-driven chemistry. J. Chem. Inf. Model. 57, 1300–1308 (2017).

Schütt, K. T., Sauceda, H. E., Kindermans, P.-J., Tkatchenko, A. & Müller, K.-R. SchNet—a deep learning architecture for molecules and materials. J. Chem. Phys. 148, 241722 (2018).

Satorras, V. G., Hoogeboom, E. & Welling, M. E(n) equivariant graph neural networks. In International Conference on Machine Learning 9323–9332 (PMLR, 2021).

Gasteiger, J., Groß, J. & Günnemann, S. Directional message passing for molecular graphs. In International Conference on Learning Representations https://openreview.net/pdf?id=B1eWbxStPH (ICLR, 2020).

Gasteiger, J., Giri, S., Margraf, J. T. & Günnemann, S. Fast and uncertainty-aware directional message passing for non-equilibrium molecules. In Machine Learning for Molecules Workshop, NeurIPS (2020).

Liu, Y. et al. Spherical message passing for 3D molecular graphs. In International Conference on Learning Representations https://openreview.net/pdf?id=givsRXsOt9r (ICLR, 2022).

Schütt, K., Unke, O. & Gastegger, M. Equivariant message passing for the prediction of tensorial properties and molecular spectra. In International Conference on Machine Learning 9377–9388 (PMLR, 2021).

Öztürk, H., Özgür, A. & Ozkirimli, E. DeepDTA: deep drug–target binding affinity prediction. Bioinformatics 34, 821–829 (2018).

Rao, R. et al. Evaluating protein transfer learning with TAPE. Adv. Neural Inf. Process. Syst. 32, 9689–9701 (2019).

Elnaggar, A. et al. ProtTrans: toward understanding the language of life through self-supervised learning. IEEE Trans. Pattern Anal. Mach. Intell. 44, 7112–7127 (2021).

Somnath, V. R., Bunne, C. & Krause, A. Multi-scale representation learning on proteins. Adv. Neural Inf. Process. Syst. 34, 25244–25255 (2021).

Wang, L., Liu, H., Liu, Y., Kurtin, J. & Ji, S. Learning hierarchical protein representations via complete 3D graph networks. In The Eleventh International Conference on Learning Representations https://openreview.net/forum?id=9X-hgLDLYkQ (ICLR, 2022).

Feng, S. MOL_LMDB. figshare https://doi.org/10.6084/m9.figshare.24961485.v1 (2024).

Ramakrishnan, R., Dral, P., Rupp, M. & Anatole von Lilienfeld, O. Quantum chemistry structures and properties of 134 kilo molecules. figshare https://doi.org/10.6084/m9.figshare.c.978904.v5 (2014).

Townshend, R. J. L. ATOM3D: ligand binding affinity (LBA) dataset. Zenodo https://doi.org/10.5281/zenodo.4914718 (2021).

Ni, Y. Source data for figures in ‘Pre-training with fractional denoising to enhance molecular property prediction’. figshare https://doi.org/10.6084/m9.figshare.25902679.v1 (2024).

Feng, S. Pre-training with fractional denoising to enhance molecular property prediction. Zenodo https://doi.org/10.5281/zenodo.12697467 (2024).

Acknowledgements

Y.L. acknowledges funding from the National Key R&D Program of China (no. 2021YFF1201600) and the Beijing Academy of Artificial Intelligence (BAAI). We acknowledge B. Qiang, Y. Huang, C. Fan and H. Tang for valuable discussions.

Author information

Contributions

Y.N. and S.F. conceived the initial idea for the projects. S.F. processed the dataset and trained the model. Y.N. developed the theoretical results and drafted the initial manuscript. X.H. and Y.N. analysed the results and created the illustrations and data visualizations. S.F. and Y.S. carried out the experiments utilizing the pre-trained model. Y.N., S.F., X.H. and Y.L. participated in the revision of the paper. The project was supervised by Y.L., Q.Y., Z.-M.M. and W.-M.M., with funding obtained by Y.L. and Q.Y.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Hosein Fooladi and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Test performance of Coord and Frad with different data accuracy on 3 tasks in QM9.

“Train from scratch” refers to the backbone model TorchMD-NET without pre-training. Both Coord and Frad use the TorchMD-NET backbone. “RDKit” and “DFT” refer to pre-training on molecular conformations generated by RDKit and DFT methods, respectively. Frad is more robust to inaccurate pre-training conformations than Coord.

Extended Data Fig. 2 Estimated force accuracy of Frad and Coord in three different sampling settings.

Force accuracy is measured by the Pearson correlation coefficient ρ between the force estimate and the ground truth. The estimation error is denoted as C_error. Within the range of small estimation errors, the force estimated by Frad (σ > 0) is consistently more accurate than that estimated by Coord (σ = 0).

Extended Data Fig. 3 Performance of Coord and Frad with different perturbation scales.

The performance (MAE) is averaged across seven energy prediction tasks in QM9. The perturbation scale refers to the mean absolute coordinate changes resulting from noise application and is determined by the noise scale. For Frad, the hyperparameter is fixed at τ = 0.04. We can see that Frad can effectively sample farther from the equilibrium, without a notable performance drop.

Extended Data Fig. 4 An illustration of model architecture.

The model primarily follows the TorchMD-NET framework, with our minor modifications highlighted in dotted orange boxes.

Supplementary information

Supplementary Information

Supplementary Notes A–E, Figs. 1 and 2 and Tables 1–15.

Source data

Source Data Fig. 2

Statistical source data.

Source Data Extended Data Fig./Table 1

Statistical source data.

Source Data Extended Data Fig./Table 2

Statistical source data.

Source Data Extended Data Fig./Table 3

Statistical source data.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ni, Y., Feng, S., Hong, X. et al. Pre-training with fractional denoising to enhance molecular property prediction. Nat Mach Intell 6, 1169–1178 (2024). https://doi.org/10.1038/s42256-024-00900-z

DOI: https://doi.org/10.1038/s42256-024-00900-z

This article is cited by

-

Advancing active compound discovery for novel drug targets: insights from AI-driven approaches

Acta Pharmacologica Sinica (2025)

-

Molecular pretraining models towards molecular property prediction

Science China Information Sciences (2025)

-

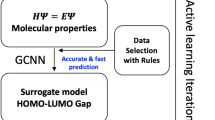

A full-process artificial intelligence framework for perovskite solar cells

Science China Materials (2025)