Abstract

Artificial intelligence (AI) systems, particularly those based on deep learning models, have increasingly achieved expert-level performance in medical applications. However, there is growing concern that such AI systems may reflect and amplify human bias, reducing the quality of their performance in historically underserved populations. The fairness issue has attracted considerable research interest in medical image classification, yet it remains understudied in the text-generation domain. In this study, we investigate fairness in medical text generation and observe substantial performance discrepancies across races, sexes and age groups, including intersectional groups; these discrepancies persist across model scales and evaluation metrics. To mitigate this bias, we propose an algorithm that selectively optimizes for underserved groups. Our evaluations across multiple backbones, datasets and modalities demonstrate that the proposed algorithm enhances fairness in text generation without compromising overall performance.
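The abstract does not spell out the algorithm, so the following is only an illustrative sketch of the two ideas it names: measuring a performance gap between demographic groups, and giving extra training weight to groups that score below average. The function names, the `boost` parameter and the below-average rule are assumptions made for illustration; they are not taken from the paper or from the GenFair code.

```python
from collections import defaultdict


def group_means(scores, groups):
    """Average a per-sample quality score (for example ROUGE) within each group."""
    totals = defaultdict(lambda: [0.0, 0])  # group -> [sum of scores, count]
    for score, group in zip(scores, groups):
        totals[group][0] += score
        totals[group][1] += 1
    return {g: total / count for g, (total, count) in totals.items()}


def fairness_gap(scores, groups):
    """Difference between the best-served and worst-served group means."""
    means = group_means(scores, groups)
    return max(means.values()) - min(means.values())


def selective_weights(scores, groups, boost=2.0):
    """Hypothetical rule: upweight samples whose group mean is below the overall mean."""
    means = group_means(scores, groups)
    overall = sum(scores) / len(scores)
    return [boost if means[g] < overall else 1.0 for g in groups]


# Toy example: group "b" scores lower than group "a", so its samples are upweighted.
scores = [0.5, 0.25, 0.75, 0.5]
groups = ["a", "b", "a", "b"]
print(group_means(scores, groups))
print(fairness_gap(scores, groups))
print(selective_weights(scores, groups))
```

In a training loop, weights like these could multiply per-sample losses so that underserved groups contribute more to each update; whether that matches the published method would need to be checked against the full article.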

Data availability

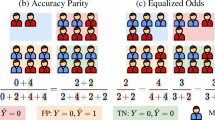

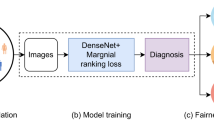

The MIMIC-CXR dataset used in this study is available in the PhysioNet database (ref. 48) at https://www.physionet.org/content/mimic-cxr-jpg/; it consists of de-identified chest X-ray images collected from the Beth Israel Deaconess Medical Center. The PubMed dataset is available at https://huggingface.co/datasets/ccdv/pubmed-summarization. It is a dataset of document-summary pairs derived from PubMed, containing biomedical research abstracts and their corresponding summaries. All source datasets are public and can be accessed through the links in this paper. Source data for Figs. 3–5 are available with this manuscript (ref. 56) under a Creative Commons license CC BY 4.0. Figures 1 and 2 do not contain associated data.

Code availability

The code supporting this study is publicly available (ref. 57) under a Creative Commons license CC BY 4.0. For development and version control, the source code is also hosted on GitHub: https://github.com/iriscxy/GenFair.

References

Jiang, F. et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc. Neurol. 2, 230–243 (2017).

Rajpurkar, P. et al. CheXNet: radiologist-level pneumonia detection on chest x-rays with deep learning. Preprint at https://arxiv.org/abs/1711.05225 (2017).

Lin, M. et al. Automated diagnosing primary open-angle glaucoma from fundus image by simulating human’s grading with deep learning. Sci. Rep. 12, 14080 (2022).

Rimmer, A. Radiologist shortage leaves patient care at risk, warns royal college. Br. Med. J. 359, j4683 (2017).

Chen, X. et al. Unveiling the power of language models in chemical research question answering. Commun. Chem. 8, 4 (2025).

Wang, T. et al. Nature of metal–support interaction for metal catalysts on oxide supports. Science 386, 915–920 (2024).

Obermeyer, Z., Powers, B., Vogeli, C. & Mullainathan, S. Dissecting racial bias in an algorithm used to manage the health of populations. Science 366, 447–453 (2019).

Seyyed-Kalantari, L., Liu, G., McDermott, M., Chen, I. Y. & Ghassemi, M. CheXclusion: fairness gaps in deep chest X-ray classifiers. In Biocomputing 2021: Proc. Pacific Symposium Vol. 26, 232–243 (World Scientific, 2020).

Zong, Y., Yang, Y. & Hospedales, T. MEDFAIR: benchmarking fairness for medical imaging. In Eleventh International Conference on Learning Representations (ICLR, 2022).

Lin, M. et al. Improving model fairness in image-based computer-aided diagnosis. Nat. Commun. 14, 6261 (2023).

Larrazabal, A. J., Nieto, N., Peterson, V., Milone, D. H. & Ferrante, E. Gender imbalance in medical imaging datasets produces biased classifiers for computer-aided diagnosis. Proc. Natl Acad. Sci. USA 117, 12592–12594 (2020).

Zhou, Y. et al. RadFusion: benchmarking performance and fairness for multimodal pulmonary embolism detection from CT and EHR. Preprint at https://arxiv.org/abs/2111.11665 (2021).

Kinyanjui, N. M. et al. Fairness of classifiers across skin tones in dermatology. In International Conference on Medical Image Computing and Computer-Assisted Intervention Vol. 12266, 320–329 (MICCAI, 2020).

Lin, M. et al. Evaluate underdiagnosis and overdiagnosis bias of deep learning model on primary open-angle glaucoma diagnosis in under-served populations. AMIA Jt Summits Transl. Sci. Proc. 2023, 370–377 (2023).

Saarni, S. I. et al. Ethical analysis to improve decision-making on health technologies. Bull. World Health Org. 86, 617–623 (2008).

Grote, T. & Berens, P. On the ethics of algorithmic decision-making in healthcare. J. Med. Ethics 46, 205–211 (2020).

Zhang, H. et al. Improving the fairness of chest X-ray classifiers. Proc. Mach. Learn. Res. 174, 204–233 (2022).

Lahoti, P. et al. Fairness without demographics through adversarially reweighted learning. Adv. Neural Inf. Process. Syst. 33, 728–740 (2020).

Narasimhan, H., Cotter, A., Gupta, M. & Wang, S. Pairwise fairness for ranking and regression. In Proceedings of the AAAI Conference on Artificial Intelligence Vol. 34, 5248–5255 (AAAI Press, 2020).

Yang, Y., Zhang, H., Gichoya, J. W., Katabi, D. & Ghassemi, M. The limits of fair medical imaging AI in real-world generalization. Nat. Med. 30, 2838–2848 (2024).

Chen, Q. et al. An extensive benchmark study on biomedical text generation and mining with ChatGPT. Bioinformatics 39, btad557 (2023).

Li, J., Dada, A., Puladi, B., Kleesiek, J. & Egger, J. ChatGPT in healthcare: a taxonomy and systematic review. Comput. Methods Programs Biomed. 245, 108013 (2024).

Tian, S. et al. Opportunities and challenges for ChatGPT and large language models in biomedicine and health. Brief. Bioinform. 25, bbad493 (2024).

Tanida, T., Müller, P., Kaissis, G. & Rueckert, D. Interactive and explainable region-guided radiology report generation. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 7433–7442 (IEEE, 2023).

Van Veen, D. et al. RadAdapt: radiology report summarization via lightweight domain adaptation of large language models. In The 22nd Workshop on Biomedical Natural Language Processing and BioNLP Shared Tasks (eds Demner-Fushman, D. et al.) 449–460 (Association for Computational Linguistics, 2023).

Karabacak, M., Ozkara, B. B., Margetis, K., Wintermark, M. & Bisdas, S. The advent of generative language models in medical education. JMIR Med. Educ. 9, e48163 (2023).

Subbiah, V. The next generation of evidence-based medicine. Nat. Med. 29, 49–58 (2023).

Weidinger, L. et al. Ethical and social risks of harm from language models. Preprint at https://arxiv.org/abs/2112.04359 (2021).

Miner, A. S. et al. Smartphone-based conversational agents and responses to questions about mental health, interpersonal violence, and physical health. JAMA Intern. Med. 176, 619–625 (2016).

Bickmore, T. W. et al. Patient and consumer safety risks when using conversational assistants for medical information: an observational study of Siri, Alexa, and Google Assistant. J. Med. Internet Res. 20, e11510 (2018).

Sloan, P., Clatworthy, P., Simpson, E. & Mirmehdi, M. Automated radiology report generation: a review of recent advances. IEEE Rev. Biomed. Eng. 4225–4232 (2024).

Pang, T., Li, P. & Zhao, L. A survey on automatic generation of medical imaging reports based on deep learning. Biomed. Eng. Online 22, 48 (2023).

Zhang, T., Kishore, V., Wu, F., Weinberger, K. Q. & Artzi, Y. BERTScore: evaluating text generation with BERT. In International Conference on Learning Representations (ICLR, 2020).

Celikyilmaz, A., Clark, E. & Gao, J. Evaluation of text generation: a survey. Preprint at https://arxiv.org/abs/2006.14799 (2020).

Fu, J., Ng, S.-K., Jiang, Z. & Liu, P. GPTScore: evaluate as you desire. In Proc. 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies Vol. 1, 6556–6576 (2024).

Lin, C.-Y. ROUGE: a package for automatic evaluation of summaries. In Text Summarization Branches Out 74–81 (Association for Computational Linguistics, 2004).

Chen, X. et al. Flexible and adaptable summarization via expertise separation. In Proc. 47th International ACM SIGIR Conference on Research and Development in Information Retrieval 2018–2027 (Association for Computing Machinery, 2024).

Liu, Y. et al. Revisiting the gold standard: grounding summarization evaluation with robust human evaluation. In Proc. 61st Annual Meeting of the Association for Computational Linguistics Vol. 1 (eds Rogers, A. et al.) 4140–4170 (Association for Computational Linguistics, 2023).

Scialom, T. et al. QuestEval: summarization asks for fact-based evaluation. In Proc. 2021 Conference on Empirical Methods in Natural Language Processing (eds Moens, M.-F. et al.) 6594–6604 (Association for Computational Linguistics, 2021).

Chen, X. et al. Rethinking scientific summarization evaluation: grounding explainable metrics on facet-aware benchmark. Preprint at https://arxiv.org/abs/2402.14359 (2024).

Irvin, J. et al. CheXpert: a large chest radiograph dataset with uncertainty labels and expert comparison. In Proc. Thirty-Third AAAI Conference on Artificial Intelligence and Thirty-First Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence 590–597 (AAAI Press, 2019).

Wang, X. et al. CXPMRG-Bench: pre-training and benchmarking for X-ray medical report generation on CheXpert Plus dataset. Preprint at https://arxiv.org/abs/2410.00379 (2024).

Endo, M., Krishnan, R., Krishna, V., Ng, A. Y. & Rajpurkar, P. Retrieval-based chest x-ray report generation using a pre-trained contrastive language-image model. Proc. Mach. Learn. Res. 158, 209–219 (2021).

Boag, W. et al. Baselines for chest x-ray report generation. Proc. Mach. Learn. Res. 116, 126–140 (2020).

Chen, Z., Song, Y., Chang, T.-H. & Wan, X. Generating radiology reports via memory-driven transformer. In Proc. 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP) (eds Jurafsky, D. et al.) 1439–1449 (2020).

Lewis, M. et al. BART: denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. In Proc. 58th Annual Meeting of the Association for Computational Linguistics 7871–7880 (Association for Computational Linguistics, 2020).

Touvron, H. et al. Llama 2: open foundation and fine-tuned chat models. Preprint at https://arxiv.org/abs/2307.09288 (2023).

Johnson, A. E. et al. MIMIC-CXR-JPG, a large publicly available database of labeled chest radiographs. Preprint at https://arxiv.org/abs/1901.07042 (2019).

Cohan, A. et al. A discourse-aware attention model for abstractive summarization of long documents. In Proc. 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies Vol. 2 (eds Walker, M. et al.) 615–621 (2018).

Johnson, A. et al. MIMIC-IV. PhysioNet https://physionet.org/content/mimiciv/1.0/ 49–55 (2020).

Mayr, F. B. et al. Do hospitals provide lower quality of care to black patients for pneumonia? Crit. Care Med. 38, 759–765 (2010).

Wolf, T. et al. Transformers: State-of-the-art natural language processing. In Proc. 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations (eds Liu, Q. & Schlangen, D.) 38–45 (Association for Computational Linguistics, 2020).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 770–778 (IEEE, 2016).

Deng, J. et al. ImageNet: a large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition 248–255 (IEEE, 2009).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. Preprint at https://arxiv.org/abs/1412.6980 (2014).

Chen, X. support data.zip. Figshare https://doi.org/10.6084/m9.figshare.28516889.v1 (2025).

Chen, X. Code for GenFair. Figshare https://doi.org/10.6084/m9.figshare.28516898.v1 (2025).

Acknowledgement

X.C. was supported by Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) through grant award 8481000078.

Author information

Contributions

X.C. and T.W. contributed to the development of the idea, experiments and manuscript writing. J.Z. and Z.S. were responsible for conducting experiments. X.G. and X.Z. provided supervision and contributed to the manuscript writing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Computational Science thanks Jiangning Song and Wenbin Zhang for their contribution to the peer review of this work. Primary Handling Editor: Ananya Rastogi, in collaboration with the Nature Computational Science team. Peer reviewer reports are available.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Figs. 1–7, Discussion and Tables 1–11.

Source data

Source Data Fig. 2

Original scores and statistical source data for Fig. 2.

Source Data Fig. 3

Original scores and statistical source data for Fig. 3.

Source Data Fig. 5

Original scores and statistical source data for Fig. 5.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, X., Wang, T., Zhou, J. et al. Evaluating and mitigating bias in AI-based medical text generation. Nat Comput Sci 5, 388–396 (2025). https://doi.org/10.1038/s43588-025-00789-7

DOI: https://doi.org/10.1038/s43588-025-00789-7
