Abstract

This paper studies fractional parameter identification of the generalized bilinear-in-parameter (GBIP) system with colored noise. A hierarchical fractional least mean square algorithm based on the key term separation principle (K-HFLMS) and a multi-innovation hierarchical fractional least mean square algorithm based on the key term separation principle (K-MHFLMS) are presented for effective parameter estimation of the GBIP system. The K-MHFLMS expands the scalar innovation into a vector innovation, making full use of the system input and output data at each recursive step. The performance of the K-MHFLMS strategy is compared with that of the K-HFLMS algorithm for the GBIP identification model based on the Fitness metric, the mean square error metric and the average predicted output error. The effectiveness and reliability of the K-HFLMS and K-MHFLMS algorithms are further verified through simulation experiments under different noise variances, fractional orders and innovation lengths; the K-MHFLMS converges faster than the K-HFLMS as the innovation length increases.

Introduction

The design of fractional adaptive algorithms based on fractional calculus has become an important and emerging research field during the last decade for investigating signal processing, control systems and chaotic systems1,2,3,4,5,6. Fractional calculus is a significant branch of mathematics that makes it possible to compute derivatives and integrals of fractional order, and it has been applied in many different fields of science, technology and engineering7,8,9,10. Researchers have focused on fractional least mean square (FLMS) algorithms and exploited them to solve diverse parameter estimation problems by applying the theory of fractional calculus, for instance in signal processing11, image denoising12, circuit analysis13, neural networks14, chaotic systems15, fractional system identification16,17, power signals18,19, robotics20, epidemic models21 and recommender systems22,23. Some new fractional adaptive algorithms have been proposed to improve the accuracy, convergence rate and complexity of fractional system identification. Chaudhary et al. provided normalized fractional adaptive algorithms for the parameter estimation of control autoregressive autoregressive systems and nonlinear control autoregressive systems24,25. Yin et al. proposed a novel orthogonalized fractional order filtered-x normalized least mean squares algorithm, using an orthogonal transform method and a fractional order switch mechanism to obtain precise parameters of the feedforward controller and to enhance the convergence speed of the algorithm26. Chaudhary et al. designed a class of novel sign fractional adaptive algorithms for nonlinear Hammerstein systems by applying the sign function to the input data corresponding to the first-order term, the fractional-order term, and both of them27. Chaudhary et al. presented an innovative fractional order least mean square algorithm for effective parameter estimation of power signals by introducing high values of the fractional order28. Liu et al. proposed a quasi fractional order gradient descent method with adaptive stepsize to solve high-dimensional function optimization problems29. Wang et al. proposed a fast fractional order gradient descent method with variable initial value to ensure convergence to the actual extremum30.

The research community has shown great interest in bilinear-in-parameter (BIP) systems and GBIP systems in the last few decades, and has proposed various approaches for parameter estimation of these complicated nonlinear systems. The distinctive feature of BIP systems is that the system output is a linear function of any one of the parameter vectors when the other parameter vectors are fixed. BIP systems can characterize many block-oriented cascaded nonlinear systems. Hammerstein systems, Wiener systems and Hammerstein–Wiener systems can be transformed into the form of generalized bilinear-in-parameter systems31,32. For example, Hammerstein impulse response systems can be conveniently described as bilinear-in-parameter systems when the nonlinearity is expressed as a linear combination of known basis functions33. BIP systems can capture the nonlinearity with relatively low computational complexity, so they are widely applied in many areas of scientific research and engineering practice34,35, and several new identification methods have been reported in this field. Ding et al. derived a filtering based recursive least squares algorithm for BIP systems by using the hierarchical identification principle36. Wang and Ding presented a filtering based multi-innovation stochastic gradient algorithm for interactively identifying each linear regressive sub-model of the bilinear-in-parameter system with ARMA noise37. Wang et al. discussed a hierarchical least squares algorithm based on the model decomposition principle for estimating the parameters of BIP systems, and analyzed the convergence of the proposed algorithm using stochastic martingale theory38. The output nonlinear system can be formulated as a GBIP system36,37,38. Hammerstein systems, Wiener systems and Hammerstein–Wiener systems can also be transformed into the GBIP system39,40,41.

Recently, the hierarchical identification principle and the multi-innovation theory have been applied to the effective fractional parameter identification of nonlinear systems. Zhu et al. formulated auxiliary model and hierarchical normalized fractional adaptive algorithms for parameter estimation of BIP systems by using the auxiliary model idea and the hierarchical identification principle42. Chaudhary et al. proposed a fractional hierarchical gradient descent algorithm by generalizing the standard hierarchical gradient descent algorithm to fractional order, for effectively estimating the parameters of nonlinear control autoregressive systems under different fractional orders and noise conditions43. Chaudhary et al. presented a multi-innovation fractional LMS algorithm for parameter estimation of input nonlinear control autoregressive systems by increasing the length of the innovation vector44. For the GBIP system, the hierarchical fractional adaptive method cannot completely separate the two parameter vectors \(a\) and \(b\) in the system. By using the key term separation principle, however, the two parameter vectors can be completely separated, and the system output can be represented as a linear combination of the unknown parameter vectors of the system. The fractional hierarchical gradient descent algorithm uses only the fractional order derivative, whereas the K-HFLMS algorithm also uses the integer order derivative, so it can give more accurate estimation results. The multi-innovation hierarchical fractional adaptive strategies make full use of the data in the dynamical data window to refresh the parameter estimates of the system, so they are more effective than the traditional hierarchical fractional adaptive approaches in parameter identification of nonlinear control systems.

Based on the work in [42,43,44], this study proposes the K-HFLMS algorithm and the K-MHFLMS algorithm for parameter estimation of the GBIP system by using the hierarchical identification principle and the multi-innovation theory. The performance of the K-MHFLMS strategy is analyzed in comparison with the K-HFLMS strategy for different noise variances, fractional orders and innovation lengths. The main contributions of this study are given below.

1. A K-HFLMS algorithm is designed by generalizing the standard hierarchical stochastic gradient algorithm to fractional order method based on the key term separation principle and the hierarchical identification principle for more accurate parameter estimates of GBIP system.

2. A K-MHFLMS algorithm is exploited based on the multi-innovation theory in order to accelerate the convergence speed of the proposed hierarchical fractional adaptive algorithm.

3. The effectiveness of the proposed two fractional algorithms is validated through simulation experimentation with different values of noise variance, fractional order and length of innovation vector.

4. The superior performance of the proposed multi-innovation hierarchical fractional adaptive strategy is compared with the hierarchical fractional adaptive approach through accurate parameter estimation based on the Fitness metrics and the average predicted output error.

The rest of this study is outlined as follows. An identification model based on the key term separation principle is briefly described for the GBIP system in Section "Generalized bilinear-in-parameter system model". Section "Hierarchical stochastic gradient identification algorithm" presents a hierarchical stochastic gradient identification algorithm for the GBIP system using the hierarchical identification principle. Section "Hierarchical fractional least mean square identification algorithm" discusses a K-HFLMS algorithm for parameter estimation of the identification model. Section "Multi-innovation hierarchical fractional least mean square identification algorithm" provides a K-MHFLMS algorithm based on the multi-innovation theory for the GBIP system. Section "Simulation experimentation and results discussion" presents the simulation results for the two algorithms along with a detailed comparative discussion based on different performance metrics. The concluding remarks, with some potential research directions in the relevant fields of engineering, science and technology, are given in Section "Conclusions".

Generalized bilinear-in-parameter system model

The block diagram of the GBIP system with ARMA noise is shown in Fig. 1. The mathematical expression for the GBIP system is given as below36,37,38,39:

where \(y(t) \in R\) is the system output, \({\varvec{F}}(t) \in R^{{n_{a} \times n_{b} }}\) is the known information matrix consisting of the input measurement data, \({{\varvec{\Phi}}}(t) \in R^{{n_{\rho } }}\) is the known information vector, \(v(t) \in R\) is the unknown white noise with zero mean and variance \(\sigma^{2}\), and \(C(z)\) and \(D(z)\) are polynomials in the unit backward shift operator \(z^{ - 1}\) [\(z^{ - 1} y(t) = y(t - 1)\)]: \(C(z) = 1 + \sum\limits_{i = 1}^{{n_{c} }} {c_{i} z^{ - i} }\), \(D(z) = 1 + \sum\limits_{i = 1}^{{n_{d} }} {d_{i} z^{ - i} }\). The vectors \(\varvec{a}= [a_{1} ,a_{2} , \cdots ,a_{{n_{a} }} ]^{T} \in R^{{n_{a} }}\), \(\varvec{b}= [b_{1} ,b_{2} , \cdots ,b_{{n_{b} }} ]^{T} \in R^{{n_{b} }}\), \(\varvec{\rho}= [\rho_{1} ,\rho_{2} , \cdots ,\rho_{{n_{\rho } }} ]^{T} \in R^{{n_{\rho } }}\), \(\varvec{c}= [c_{1} ,c_{2} , \cdots ,c_{{n_{c} }} ]^{T} \in R^{{n_{c} }}\) and \(\varvec{d}= [d_{1} ,d_{2} , \cdots ,d_{{n_{d} }} ]^{T} \in R^{{n_{d} }}\) are the unknown parameter vectors to be identified. Assume that the orders \(n_{a} ,n_{b} ,n_{\rho } ,n_{c} ,n_{d}\) are known.

Define the information matrix:

where \({\varvec{f}}(u(t - i)) = \left[f_{1} (u(t - i)),f_{2} (u(t - i)), \cdots ,f_{{n_{a} }} (u(t - i)) \right]^{T} \in R^{{n_{a} }} ,\;i = 1,2, \cdots ,n_{b}\).

Using the auxiliary model identification idea45,46, the intermediate variable can be defined as:

where the unknown colored disturbance \(w(t) \in R\) is an autoregressive moving average (ARMA) process. So Eq. (3) can be formulated as:

where \({{\mathbf{\Omega}}}(t) = [ - w(t - 1), - w(t - 2), \cdots , - w(t - n_{c} ),v(t - 1),v(t - 2), \cdots ,v(t - n_{d} )]^{T} \in R^{{n_{c} + n_{d} }}\) is the unknown noise information vector, \(\varvec{\theta} = [c_{1} ,c_{2} , \cdots ,c_{{n_{c} }} ,d_{1} ,d_{2} , \cdots ,d_{{n_{d} }} ]^{T} \in R^{{n_{c} + n_{d} }}\) is the unknown noise parameter vector, and Eq. (5) is the noise identification model. According to Fig. 1, with the polynomial \(G(z) = \sum\limits_{i = 1}^{{n_{b} }} {b_{i} z^{ - i} }\) and the unknown inner variable \(\overline{u}(t) = \sum\limits_{i = 1}^{{n_{a} }} {a_{i} f_{i} (u(t))} = {\varvec{f}}^{T} (u(t))\,{\varvec{a}}\), Eq. (1) can be rewritten as:

Using the key term separation principle47,48, \(\overline{u}(t - 1)\) can be chosen as a key term. Let \(b_{1} = 1\), so we have:

where the unknown information vector and the unknown parameter vector are respectively defined as \({{\varvec{\Psi}}}(t) = [\overline{u}(t - 2),\overline{u}(t - 3), \cdots ,\overline{u}(t - n_{b} )]^{T} \in R^{{n_{\lambda } }}\), \({{\varvec{\lambda}}} = [b_{2} , \cdots ,b_{{n_{b} }} ]^{T} = [\lambda_{1} , \cdots ,\lambda_{{n_{\lambda } }} ]^{T} \in R^{{n_{\lambda } }}\), \(n_{\lambda } = n_{b} - 1\).

Equation (10) is the identification model of the GBIP system given in Fig. 1. The objective of this paper is to propose new fractional gradient identification algorithms based on the key term separation principle for estimating parameter vectors \({\varvec{a}},{{\varvec{\lambda}}},{{\varvec{\rho}}}\) and \({{\varvec{\theta}}}\) using the measured data.
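The steps leading to this identification model can be sketched as follows. This is a reconstruction from the definitions in this section, not a quotation of the numbered equations: the column structure of \({\varvec{F}}(t)\) and the form \(w(t) = D(z)v(t)/C(z)\) are assumptions inferred from the stated dimensions and from the structure of \({{\mathbf{\Omega}}}(t)\) and \({{\varvec{\theta}}}\).

```latex
\[
\begin{aligned}
{\varvec{F}}(t) &= \left[{\varvec{f}}(u(t-1)),\,{\varvec{f}}(u(t-2)),\,\cdots,\,{\varvec{f}}(u(t-n_{b}))\right] \in R^{n_{a}\times n_{b}},\\
y(t) &= {\varvec{a}}^{T}{\varvec{F}}(t)\,{\varvec{b}} + {\varvec{\Phi}}^{T}(t)\,{\varvec{\rho}} + w(t),
\qquad w(t) = \frac{D(z)}{C(z)}\,v(t) = {\mathbf{\Omega}}^{T}(t)\,{\varvec{\theta}} + v(t),\\
{\varvec{a}}^{T}{\varvec{F}}(t)\,{\varvec{b}} &= \sum_{i=1}^{n_{b}} b_{i}\,{\varvec{f}}^{T}(u(t-i))\,{\varvec{a}}
 = \sum_{i=1}^{n_{b}} b_{i}\,\overline{u}(t-i) = G(z)\,\overline{u}(t),\\
G(z)\,\overline{u}(t) &= \overline{u}(t-1) + \sum_{i=2}^{n_{b}} b_{i}\,\overline{u}(t-i)
 = {\varvec{f}}^{T}(u(t-1))\,{\varvec{a}} + {\varvec{\Psi}}^{T}(t)\,{\varvec{\lambda}} \qquad (b_{1}=1),\\
y(t) &= {\varvec{f}}^{T}(u(t-1))\,{\varvec{a}} + {\varvec{\Psi}}^{T}(t)\,{\varvec{\lambda}}
 + {\varvec{\Phi}}^{T}(t)\,{\varvec{\rho}} + {\mathbf{\Omega}}^{T}(t)\,{\varvec{\theta}} + v(t).
\end{aligned}
\]
```

Only the key term \(\overline{u}(t-1)\) is expanded in terms of \({\varvec{a}}\); the delayed terms stay inside \({{\varvec{\Psi}}}(t)\). This is what keeps the last line linear in each of \({\varvec{a}},{{\varvec{\lambda}}},{{\varvec{\rho}}}\) and \({{\varvec{\theta}}}\) without products of unknown parameters.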

Hierarchical stochastic gradient identification algorithm

The hierarchical stochastic gradient algorithm decomposes the system into several sub-systems with smaller dimension and fewer variables, thus reducing the complexity of the system and making the hierarchical stochastic gradient algorithm more efficient than the standard counterparts. Using the hierarchical identification principle49,50, the four intermediate variables are defined as:

Then Eq. (10) can be decomposed into four subsystems as below:

Defining the quadratic cost functions according to (15–18):

Using the negative gradient search and minimizing \(J_{a} ({\varvec{a}}),J_{\lambda } ({{\varvec{\lambda}}}),J_{\rho } ({{\varvec{\rho}}})\) and \(J_{\theta } ({{\varvec{\theta}}})\), we obtain the following recursive algorithm for estimating \({\varvec{a}},{{\varvec{\lambda}}},{{\varvec{\rho}}}\) and \({{\varvec{\theta}}}\).

where \(\mu_{a} (t),\,\mu_{\lambda } (t),\,\mu_{\rho } (t)\) and \(\mu_{\theta } (t)\) are the first-order derivative learning rates, \(\varepsilon\) is the convergence index.

However, because Eqs. (23), (24), (27), (29), (30), (33), (35) contain the unmeasurable inner variables \(\overline{u}(t - i)\) in \({{\varvec{\Psi}}}(t)\) and \(w(t - i),v(t - i)\) in \({{\varvec{\Omega}}}(t)\), the recursive algorithm in (23–35) cannot be implemented directly. These unknown variables should be replaced with their corresponding estimates through the following relations:

Thus, we obtain the hierarchical stochastic gradient (HSG) identification algorithm for parameter estimation of the GBIP system using the key term separation principle as follows:
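To make the structure of these updates concrete, the update for \({\varvec{a}}\) can be sketched from the identification model (10) by negative gradient search. This is an illustrative derivation under stated assumptions, not the numbered equations of the algorithm: the symbol \(y_{a}(t)\) for the intermediate variable, the factor \(1/2\) in the cost function, and the normalized learning rate built from \(r_{a}(t)\) are hypothetical choices borrowed from the usual stochastic gradient literature.

```latex
\[
\begin{aligned}
y_{a}(t) &:= y(t) - {\varvec{\Psi}}^{T}(t)\,{\varvec{\lambda}} - {\varvec{\Phi}}^{T}(t)\,{\varvec{\rho}} - {\mathbf{\Omega}}^{T}(t)\,{\varvec{\theta}}
 = {\varvec{f}}^{T}(u(t-1))\,{\varvec{a}} + v(t),\\
J_{a}({\varvec{a}}) &= \tfrac{1}{2}\left[y_{a}(t) - {\varvec{f}}^{T}(u(t-1))\,{\varvec{a}}\right]^{2},\\
\hat{{\varvec{a}}}(t) &= \hat{{\varvec{a}}}(t-1) - \mu_{a}(t)\left.\frac{\partial J_{a}({\varvec{a}})}{\partial {\varvec{a}}}\right|_{{\varvec{a}}=\hat{{\varvec{a}}}(t-1)}
 = \hat{{\varvec{a}}}(t-1) + \mu_{a}(t)\,{\varvec{f}}(u(t-1))\left[y_{a}(t) - {\varvec{f}}^{T}(u(t-1))\,\hat{{\varvec{a}}}(t-1)\right],\\
\mu_{a}(t) &= \frac{1}{r_{a}^{\varepsilon}(t)}, \qquad r_{a}(t) = r_{a}(t-1) + \left\|{\varvec{f}}(u(t-1))\right\|^{2}.
\end{aligned}
\]
```

The updates for \({{\varvec{\lambda}}},{{\varvec{\rho}}}\) and \({{\varvec{\theta}}}\) follow the same pattern with their own information vectors. In an implementable version, the unknown quantities inside \(y_{a}(t)\) are replaced by their latest estimates, as described above.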

Hierarchical fractional least mean square identification algorithm

The better performance of hierarchical fractional gradient approaches over their conventional fractional counterparts motivates the design of a fractional variant of the identification algorithm that generalizes the hierarchical stochastic gradient algorithm to fractional order. In this section, we present hierarchical fractional adaptive algorithms for the GBIP system by minimizing the quadratic cost functions (19–22) through the first-order derivative and the fractional derivative. Before proposing the fractional gradient algorithms, the basic concepts of the fractional derivative are introduced briefly. One of the most widely used definitions of the fractional derivative is the Riemann–Liouville (RL) definition; the RL fractional derivative of order \(\delta\) with lower terminal \(c\) for the function \(f(t)\) is given as51,52:

The fractional order derivative of order \(\delta\) for the polynomial function \(f(t) = t^{n}\) is defined as53:

where known constant \(n\) is a positive integer, the symbol \(\Gamma\) is the gamma function and is given as:
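As a quick numerical check of the power rule, the standard closed form \(D^{\delta } t^{n} = \frac{\Gamma (n + 1)}{\Gamma (n - \delta + 1)}t^{n - \delta }\) can be evaluated directly. The sketch below assumes this standard form and \(t > 0\); the function name `frac_power_derivative` is hypothetical.

```python
import math


def frac_power_derivative(t, n, delta):
    """Fractional derivative of order delta of f(t) = t**n, for t > 0.

    Uses the standard power rule
        D^delta t^n = Gamma(n + 1) / Gamma(n - delta + 1) * t^(n - delta).
    """
    # math.gamma raises ValueError when n - delta + 1 is zero or a negative
    # integer, so keep delta below n + 1.
    coeff = math.gamma(n + 1) / math.gamma(n - delta + 1)
    return coeff * t ** (n - delta)


# delta = 1 recovers the ordinary derivative: d/dt t^2 = 2t.
print(frac_power_derivative(3.0, 2, 1.0))
# Half-derivative of f(t) = t evaluated at t = 1, which equals 2 / sqrt(pi).
print(frac_power_derivative(1.0, 1, 0.5))
```

For \(\delta = 1\) the ratio of gamma functions reduces to \(n\), so the integer-order rule is a special case of the fractional one.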

The quadratic cost function for the GBIP model is written as24:

The estimation error \(e(n)\) is the difference between actual response \(d(n)\) and the estimated response \(\hat{d}(n)\), and it is given as:

where \({\hat{\mathbf{w}}}(n)\) represents the estimate of the weight vector \({\mathbf{w}}(n)\), \({\mathbf{w}}(n) = \left[w_{0} (n),w_{1} (n), \cdots ,w_{M - 1} (n) \right]^{T}\), and \({\varvec{x}}(n)\) represents the input vector, \({\varvec{x}}(n) = \left[x(n),x(n - 1), \cdots ,x(n - M + 1) \right]^{T}\).

Minimizing the quadratic cost function (62) by taking the first-order derivative and the fractional derivative with respect to \(\hat{w}_{k}\), the recursive update relation of the conventional fractional least mean square (FLMS) algorithm is written as54:

where \(\mu_{1}\) and \(\mu_{\delta }\) are the learning rates for the first-order derivative terms and fractional derivative terms, \(k = 0,1, \cdots ,M - 1\).

Using (60) and (61), the fractional derivative of the cost function is given as:

Substituting (66) in (64), and assuming \(\hat{w}_{k}^{1 - \delta } (n) \cong \hat{w}_{k}^{1 - \delta } (n - 1)\), the adaptive weight updating equation of the FLMS algorithm is rewritten as below:

The vector form of the FLMS algorithm for the GBIP system is given as:

where the absolute value of the estimate of the weight vector \({\hat{\mathbf{w}}}(n)\) is taken to avoid complex entries, and the symbol \(\circ\) denotes element-by-element multiplication of the input vector \({\varvec{x}}(n)\) and \({\hat{\mathbf{w}}}(n)\).
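A minimal sketch of one FLMS step in vector form is given here for illustration. It assumes the commonly used update \({\hat{\mathbf{w}}}(n + 1) = {\hat{\mathbf{w}}}(n) + \mu_{1} e(n){\varvec{x}}(n) + \frac{\mu_{\delta }}{\Gamma (2 - \delta )}e(n){\varvec{x}}(n) \circ |{\hat{\mathbf{w}}}(n)|^{1 - \delta }\), which may differ in detail from the numbered equations; the function name `flms_step` and the sample values are hypothetical.

```python
import math


def flms_step(w, x, d, mu1, mu_delta, delta):
    """One FLMS update; returns (new_weights, error).

    w, x  : lists of equal length (weight estimate and input vector)
    d     : desired (measured) response
    delta : fractional order; Gamma(2 - delta) must be finite, so delta < 2
    """
    # Estimation error e(n) = d(n) - w^T(n) x(n)
    e = d - sum(wi * xi for wi, xi in zip(w, x))
    # Gain of the fractional term: mu_delta / Gamma(2 - delta)
    g = mu_delta / math.gamma(2.0 - delta)
    new_w = []
    for wi, xi in zip(w, x):
        first_order = mu1 * e * xi
        # |w|^(1 - delta): the absolute value avoids complex entries.
        # For delta > 1 the exponent is negative, so w must be nonzero.
        fractional = g * e * xi * abs(wi) ** (1.0 - delta)
        new_w.append(wi + first_order + fractional)
    return new_w, e


# Example call with hypothetical values.
w_new, err = flms_step([1.0, -1.0], [1.0, 1.0], 2.0, 0.1, 0.05, 0.5)
print(w_new, err)
```

Setting `mu_delta = 0` leaves only the first-order term, which is the ordinary LMS step; with `delta = 1` the fractional term has the same shape as the first-order term and simply adds to the step size.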

Based on Eq. (68), we have:

To enhance the convergence of the proposed K-HFLMS algorithm, we take \(\mu_{a} (t) = \mu_{\delta }\), and Eq. (69) can then be updated as:

By minimizing the quadratic cost functions (19–22), the K-HFLMS algorithm for the GBIP system is obtained as below:

When \(\mu_{\delta } = 0\), the K-HFLMS algorithm (69) and (72–86) reduces to the hierarchical stochastic gradient (HSG) algorithm. When \(\mu_{a} (t) = \mu_{\lambda } (t) = \mu_{\rho } (t) = \mu_{\theta } (t) = 0\), the K-HFLMS algorithm reduces to the hierarchical fractional stochastic gradient algorithm based on the key term separation principle (K-HFSG)43.

Multi-innovation hierarchical fractional least mean square identification algorithm

In order to improve the identification accuracy and convergence speed of the K-HFLMS algorithm, the multi-innovation theory was introduced for the fractional system identification55,56. The main idea consists in expanding the information vector \({\varvec{f}}(u(t - 1)) \in R^{{n_{a} }}\),\({\hat{\mathbf{\Psi }}}(t) \in R^{{n_{\lambda } }}\) to the information matrix \({{\varvec{\Gamma}}}(u(t - 1)) \in R^{{n_{a} \times p}}\), \({\hat{\mathbf{\Pi }}}(p,t) \in R^{{n_{\lambda } \times p}}\), and the scalar innovation \(e(t) \in R^{1}\) to the innovation vector \({\varvec{E}}(p,t) \in R^{p}\). Compared with the K-HFLMS algorithm, the multi-innovation algorithm in this section can make full use of not only the current time data but also the previous data, where \(p\) is the innovation length. The innovation vector is defined as:

Accordingly, the stacked output vector and the stacked information matrices are written as:

Then (88) can be rewritten as:

So the K-MHFLMS for GBIP system is given as:

The K-MHFLMS algorithm (89–103) can improve parameter estimation performance by making fuller use of the collected data. When \(p = 1\), the K-MHFLMS algorithm reduces to the K-HFLMS algorithm.
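The multi-innovation idea can be illustrated on a single linear-in-parameter sub-model. The sketch below stacks the \(p\) most recent regressors and outputs, forms the innovation vector with the previous estimate, and applies a first-order term plus a fractional term. It is a simplified illustration rather than the K-MHFLMS algorithm itself: the function name `multi_innovation_step`, the fixed learning rates and the form of the fractional term are assumptions.

```python
import math


def multi_innovation_step(theta, phis, ys, mu1, mu_delta=0.0, delta=0.5):
    """One multi-innovation update using the p most recent data pairs.

    theta : current parameter estimate (list of floats)
    phis  : p regressor vectors [phi(t), phi(t-1), ..., phi(t-p+1)]
    ys    : p outputs           [y(t),   y(t-1),   ..., y(t-p+1)]
    Returns (new_theta, innovation_vector).
    """
    # Innovation vector E(p, t): one prediction error per stacked sample,
    # all computed with the same previous estimate theta(t-1).
    E = [y - sum(th * ph for th, ph in zip(theta, phi))
         for phi, y in zip(phis, ys)]
    # Search direction: stacked information matrix times innovation vector.
    direction = [sum(phi[k] * e for phi, e in zip(phis, E))
                 for k in range(len(theta))]
    # Gain of the fractional term (assumed form, as in FLMS).
    g = mu_delta / math.gamma(2.0 - delta)
    new_theta = [th + mu1 * dk + g * dk * abs(th) ** (1.0 - delta)
                 for th, dk in zip(theta, direction)]
    return new_theta, E


# p = 2: two stacked samples contribute to one update (hypothetical data).
theta, E = multi_innovation_step([0.0, 0.0],
                                 [[1.0, 0.0], [0.0, 1.0]],
                                 [2.0, 3.0], mu1=0.5)
print(theta, E)
```

Passing a single regressor and output (\(p = 1\)) gives the ordinary single-innovation step, which mirrors the reduction of K-MHFLMS to K-HFLMS noted above.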

Simulation experimentation and results discussion

In this section, the simulation experimentation results are given for the example of GBIP system using the proposed K-MHFLMS and K-HFLMS algorithms for different noise levels, fractional orders and innovation lengths. The graphical abstract of proposed strategy is given in Fig. 2.

The example

In order to verify the performance of the proposed fractional algorithm, the following parameters are considered in GBIP system with \(n_{a} = 2\),\(n_{b} = 3\),\(n_{\lambda } = 2\),\(n_{\rho } = 2\),\(n_{c} = 1\) and \(n_{d} = 1\).

where,

Then the parameter vector of GBIP system is written as:

In order to evaluate the performance of the proposed K-MHFLMS and K-HFLMS algorithms for the GBIP system, three performance metrics based on the estimation error are used, i.e., the fitness function (Fitness), the mean square error (MSE), and the Nash–Sutcliffe efficiency (NSE). They are respectively defined as follows57:

where \({\hat{\mathbf{\Sigma }}}(t)\) is the estimated parameter vector at the \(t\)-th iteration of the proposed hierarchical fractional identification algorithms, and \({{\varvec{\Sigma}}}\) is the actual parameter vector. For a perfect model, the optimum values of MSE and NSE are zero and one, respectively.
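For orientation, the sketch below computes MSE and NSE between an actual and an estimated parameter vector using commonly used forms: the mean of the squared parameter errors, and one minus that error normalized by the spread of the actual parameters around their mean. These forms are assumptions and may differ from the definitions used in this paper; the Fitness metric is left out because its definition is not restated here. The function names and sample vectors are hypothetical.

```python
def mse(actual, estimate):
    """Mean square error between actual and estimated parameter vectors."""
    return sum((a - b) ** 2 for a, b in zip(actual, estimate)) / len(actual)


def nse(actual, estimate):
    """Nash-Sutcliffe efficiency (assumed form): 1 - SSE / spread of actual."""
    mean_actual = sum(actual) / len(actual)
    sse = sum((a - b) ** 2 for a, b in zip(actual, estimate))
    spread = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - sse / spread


# Hypothetical parameter vectors, for illustration only.
sigma_true = [1.0, 2.0, 3.0]
sigma_hat = [1.1, 1.9, 3.0]
print(mse(sigma_true, sigma_hat), nse(sigma_true, sigma_hat))
```

With these forms, a perfect estimate gives MSE equal to zero and NSE equal to one, which agrees with the optimum values stated above.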

In the simulation experiments, the input \(\left\{ {u(t)} \right\}\) is taken as a persistent excitation signal sequence with zero mean and unit variance drawn from the uniform distribution, and the uncorrelated white noise disturbance sequence \(\left\{ {v(t)} \right\}\) is taken as a normally distributed sequence with zero mean and variance \(\sigma^{2}\). The autoregressive moving average noise \(w(t)\) is generated based on Eq. (5), and the system output \(y(t)\) is then obtained according to Eq. (10).
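The signal generation described above can be sketched as follows for \(n_{c} = n_{d} = 1\), so that \(w(t) = - c_{1} w(t - 1) + d_{1} v(t - 1) + v(t)\). A uniform distribution on \([ - \sqrt 3 ,\sqrt 3 ]\) has zero mean and unit variance. The function name `generate_signals`, the zero initial conditions and the values of \(c_{1}\), \(d_{1}\) and the seed are hypothetical; they are not the parameters of the example in this paper.

```python
import math
import random


def generate_signals(n, sigma, c1, d1, seed=0):
    """Return input u, white noise v and ARMA(1,1) noise w, each of length n."""
    rng = random.Random(seed)
    half_width = math.sqrt(3.0)  # U(-sqrt(3), sqrt(3)): zero mean, unit variance
    u = [rng.uniform(-half_width, half_width) for _ in range(n)]
    v = [rng.gauss(0.0, sigma) for _ in range(n)]
    w = []
    for t in range(n):
        # Zero initial conditions: w(-1) = v(-1) = 0 (an assumption).
        w_prev = w[t - 1] if t > 0 else 0.0
        v_prev = v[t - 1] if t > 0 else 0.0
        # C(z) w(t) = D(z) v(t) with n_c = n_d = 1
        w.append(-c1 * w_prev + d1 * v_prev + v[t])
    return u, v, w


# Hypothetical noise parameters; |c1| < 1 keeps the ARMA process stable.
u, v, w = generate_signals(10800, sigma=0.5, c1=0.3, d1=-0.2, seed=1)
print(len(u), sum(u) / len(u))
```

The output \(y(t)\) would then be built from \(u(t)\) and \(w(t)\) through the identification model, using the basis functions and parameter values of the chosen example.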

The data length is \(10800\). Using the first \(L = 10000\) data and applying the proposed K-HFLMS and K-MHFLMS algorithms, we estimate the parameter vector \({{\varvec{\Sigma}}}\) of the GBIP model, and then use the remaining \(L_{r} = 800\) data from \(t = L + 1 = 10001\) to \(t = L + L_{r} = 10800\) to validate the effectiveness of the proposed multi-innovation hierarchical fractional algorithm. The fractional learning rate \(\mu_{\delta }\) is empirically selected as \(0.0001\). The convergence index is taken as \(\varepsilon = 0.65\). The robustness of the fractional adaptive algorithms is evaluated for the noise variances \(\sigma^{2} = 0.2^{2} ,0.5^{2} ,0.8^{2}\). The fractional order is examined for five different values, i.e., \(\delta = 0.4,0.7,1.0,1.2,1.4\). The effectiveness of the K-MHFLMS algorithm is verified for distinct innovation lengths, namely \(p = 1,2,3,4\). The proposed fractional algorithms are evaluated based on the Fitness and MSE criteria, and the results are shown in the Tables and Figures.

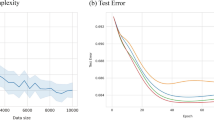

The effect of noise variances on the hierarchical fractional algorithms

The robustness of the K-HFLMS and K-MHFLMS strategies for the GBIP model is tested for three different noise variances \(\sigma^{2} = 0.2^{2} ,0.5^{2} ,0.8^{2}\), with the fractional order taken as \(\delta = 1.2\) and the innovation lengths of the K-MHFLMS algorithm taken as \(p = 2,3,4\). The results are presented in Fig. 3 and Tables 1 and 2 based on the MSE values and the Fitness values, respectively, for these noise variances. From Fig. 3 and Tables 1 and 2, it can be seen that the proposed fractional adaptive algorithms are all convergent and accurate for these noise variances, and that the accuracy of both the K-HFLMS and K-MHFLMS strategies increases with the increase in noise variance. All the strategies provide better results for the higher noise variance, i.e., \(\sigma^{2} = 0.8^{2}\). So it is justifiable to take \(\sigma^{2} = 0.8^{2}\) in later investigations.

The effect of fractional orders on the hierarchical fractional algorithms

The selection of the fractional order is significant but somewhat complicated for the parameter identification of fractional adaptive strategies. To choose a more appropriate fractional order, we evaluated the proposed algorithms for five different values of the fractional order, \(\delta = 0.4,0.7,1.0,1.2,1.4\), with the noise variance and the innovation lengths of the K-MHFLMS algorithm selected as \(\sigma^{2} = 0.8^{2}\) and \(p = 2,3,4\), respectively. The results are shown in Fig. 4 and Tables 3 and 4 in terms of the MSE values and the Fitness values, as before. From Fig. 4 and Tables 3 and 4, we can conclude that the proposed fractional adaptive strategies are effective for these fractional orders, and that the accuracy of both the K-HFLMS and K-MHFLMS strategies increases with the increase in fractional order from \(\delta = 0.4\) to \(\delta = 1.2\). However, Fig. 4a, b shows that the effect of \(\delta = 1.4\) is obviously different from those of the other fractional orders. It is therefore reasonable to take \(\delta = 1.2\) in later investigations.

The effect of innovation lengths on the multi-innovation hierarchical fractional algorithm

To further verify the effect of the innovation length on the proposed multi-innovation hierarchical fractional algorithm, we also examine the proposed K-MHFLMS algorithm in detail for four different innovation lengths \(p = 1,2,3,4\). The noise variance and the fractional order are selected in four groups, that is: \(\delta = 0.4\) (\(\sigma^{2} = 0.2^{2}\)) and \(\delta = 1.2\) (\(\sigma^{2} = 0.2^{2}\), \(\sigma^{2} = 0.5^{2}\), \(\sigma^{2} = 0.8^{2}\)). The results are presented in Fig. 5 and Tables 5 and 6 in terms of the Fitness values. It is clearly seen that the error of K-MHFLMS (\(p = 4\)) is the smallest among the errors of the four multi-innovation hierarchical algorithms, and that it performs better than K-HFLMS and K-MHFLMS (\(p = 2,3\)) in terms of convergence and accuracy. This also verifies that the proposed K-MHFLMS algorithms with \(p = 2,3,4\) are more effective and more accurate than the K-HFLMS algorithm, which is the \(p = 1\) case of the K-MHFLMS algorithm.

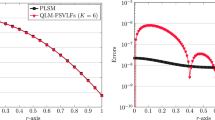

The comparative analysis of the proposed hierarchical fractional algorithms

We compare the proposed K-HFLMS algorithm with the K-HFSG algorithm based on the MSE for the example with \(\sigma^{2} = 0.2^{2} ,0.8^{2}\) and \(\delta = 0.4,1.2\), and the comparative results are shown in Fig. 6. From Fig. 6, it is clearly observed that the performance of the K-HFLMS algorithm is better than that of the K-HFSG algorithm, and that the K-HFLMS algorithm has a faster convergence rate than the K-HFSG algorithm. So it is concluded that the K-HFLMS adaptive method gives higher estimation precision than the K-HFSG adaptive method.

Model validation

Based on the estimated parameters at \(t = 10000\), the remaining \(800\) data from \(t = 10001\) to \(t = 10800\) are taken to further validate the effectiveness of the multi-innovation hierarchical fractional algorithm.

The average predicted output error of the multi-innovation hierarchical fractional identification model is written as55:

where \(\hat{y}(t)\) is the output of the estimated model and \(y(t)\) is the actual model output. According to expression (104), the average prediction errors of the four multi-innovation algorithms with \(p = 1,2,3,4\) are calculated for \(\delta = 0.4\) (\(\sigma^{2} = 0.2^{2}\)) and \(\delta = 1.2\) (\(\sigma^{2} = 0.2^{2}\), \(\sigma^{2} = 0.5^{2}\), \(\sigma^{2} = 0.8^{2}\)), respectively, and the results are shown in Table 7. Table 7 further indicates that the average predicted output errors of the K-MHFLMS strategies decrease as the innovation length increases from \(p = 1\) to \(p = 4\), that the proposed multi-innovation fractional algorithm is effective, and that K-MHFLMS achieves more accurate estimation than K-HFLMS based on the average prediction errors. This also validates the previous conclusion that the convergence rate of the K-MHFLMS algorithms increases with the increase in noise variance.

Conclusions

The K-HFLMS algorithm and the K-MHFLMS algorithm are proposed by combining fractional derivative concepts based on the key term separation principle and the hierarchical identification principle for effective parameter estimation of GBIP system.

The K-MHFLMS algorithm is generalized from the K-HFLMS strategy through exploiting the multi-innovation theory.

The proposed hierarchical fractional algorithms are convergent and accurate for the three noise variances, and better results are achieved for higher noise variance.

The designed hierarchical fractional strategies are effective and robust for the fractional orders considered, and relatively better results are found for higher fractional orders.

Relatively better results are achieved for higher innovation lengths of the multi-innovation hierarchical algorithms based on the Fitness error and the average predicted output error.

The results of the simulation analyses demonstrate that the K-MHFLMS algorithms have more accurate and robust performance than the K-HFLMS counterparts.

In the future, there are more challenging parameter identification problems to investigate; in particular, fractional adaptive strategies based on the filtering technique and the hierarchical identification principle are important directions to attempt. The proposed multi-innovation hierarchical adaptive algorithm can be coupled with the filtering technique, the multi-stage estimation theory and the key term separation technique to solve more complicated parameter estimation problems of the GBIP system, CARMA system, CARARMA system, Hammerstein OEMA system, finite impulse response MA system, multi-input multi-output nonlinear system and kernel systems58,59,60,61,62,63,64,65.

Data availability

All data included in this study are available upon request by contacting the corresponding author.

References

Chaudhary, N. I. & Raja, M. A. Z. Identification of Hammerstein nonlinear ARMAX systems using nonlinear adaptive algorithms. Nonlinear Dyn. 79(2), 1385–1397 (2015).

Shah, S. M., Samar, R., Khan, N. M. & Raja, M. A. Z. Design of fractional-order variants of complex LMS and NLMS algorithms for adaptive channel equalization. Nonlinear Dyn. 88, 839–858 (2017).

Chaudhary, N. I., Zubair, S. & Raja, M. A. Z. Design of momentum LMS adaptive strategy for parameter estimation of Hammerstein controlled autoregressive systems. Neural Comput. Appl. 30(4), 1133–1143 (2018).

Yin, W., Wei, Y., Liu, T. & Wang, Y. A novel orthogonalized fractional order filtered-x normalized least mean squares algorithm for feedforward vibration rejection. Mech. Syst. Signal Process. 119, 138–154 (2019).

Xu, T., Chen, J., Pu, Y. & Guo, L. Fractional-based stochastic gradient algorithms for time-delayed ARX models. Circuits Syst. Signal Process. 41, 1895–1912 (2022).

Shoaib, B. & Qureshi, I. M. Adaptive step-size modified fractional least mean square algorithm for chaotic time series prediction. Chin. Phys. B. 23(5), 050503 (2014).

Ortigueira, M. D., Ionescu, C. M., Machado, J. A. T. & Trujillo, J. J. Fractional signal processing and applications. Signal Process. 107, 197 (2015).

Zhang, Q. et al. A novel fractional variable-order equivalent circuit model and parameter identification of electric vehicle li-ion batteries. ISA Trans. 97, 448–457 (2020).

He, S. & Banerjee, S. Epidemic outbreaks and its control using a fractional order model with seasonality and stochastic infection. Phys. A. 501, 408–417 (2018).

Sun, H., Zhang, Y., Baleanu, D., Chen, W. & Chen, Y. A new collection of real world applications of fractional calculus in science and engineering. Commun. Nonlinear Sci. Numer. Simul. 64, 213–231 (2018).

Aslam, M. S. & Raja, M. A. Z. A new adaptive strategy to improve online secondary path modeling in active noise control systems using fractional signal processing approach. Signal Process. 107, 433–443 (2015).

Pu, Y., Zhang, N., Zhang, Y. & Zhou, J. A texture image denoising approach based on fractional developmental mathematics. Pattern Anal. Appl. 19(2), 427–445 (2016).

Yang, X., Machado, J. T., Cattani, C. & Gao, F. On a fractal LC-electric circuit modeled by local fractional calculus. Commun. Nonlinear Sci. 47, 200–206 (2017).

Pu, Y., Yi, Z. & Zhou, J. Fractional Hopfield neural networks: fractional dynamic associative recurrent neural networks. IEEE Trans. Neural Netw. Learn. Syst. 99, 1–15 (2016).

Baskonus, H. M., Mekkaoui, T., Hammouch, Z. & Bulut, H. Active control of a chaotic fractional order economic system. Entropy 17(8), 5771–5783 (2015).

Safarinejadian, B., Asad, M. & Sadeghi, M. S. Simultaneous state estimation and parameter identification in linear fractional order systems using coloured measurement noise. Int. J. Control. 89(11), 1–38 (2016).

Cheng, S., Wei, Y., Chen, Y., Li, Y. & Wang, Y. An innovative fractional order LMS based on variable initial value and gradient order. Signal Process. 133, 260–269 (2017).

Chaudhary, N. I., Zubair, S. & Raja, M. A. Z. A new computing approach for power signal modeling using fractional adaptive algorithms. ISA Trans. 68, 189–202 (2017).

Zubair, S., Chaudhary, N. I., Khan, Z. A. & Wang, W. Momentum fractional LMS for power signal parameter estimation. Signal Process. 142, 441–449 (2018).

Machado, J. T. & Lopes, A. M. A fractional perspective on the trajectory control of redundant and hyper-redundant robot manipulators. Appl. Math. Model. 46, 716–726 (2017).

Ameen, I. & Novati, P. The solution of fractional order epidemic model by implicit Adams methods. Appl. Math. Model. 43, 78–84 (2017).

Khan, Z. A. et al. Fractional stochastic gradient descent for recommender systems. Electron. Mark. 29(2), 275–285 (2019).

Khan, Z. A. et al. Design of normalized fractional SGD computing paradigm for recommender systems. Neural Comput. Appl. 32(14), 10245–10262 (2020).

Chaudhary, N. I. et al. Design of normalized fractional adaptive algorithms for parameter estimation of control autoregressive autoregressive systems. Appl. Math. Model. 55, 698–715 (2018).

Chaudhary, N. I., Khan, Z. A., Zubair, S., Raja, M. A. Z. & Dedovic, N. Normalized fractional adaptive methods for nonlinear control autoregressive systems. Appl. Math. Model. 66, 457–471 (2019).

Yin, W., Wei, Y., Liu, T. & Wang, Y. A novel orthogonalized fractional order filtered-x normalized least mean squares algorithm for feedforward vibration rejection. Mech. Syst. Signal Process. 119, 138–154 (2019).

Chaudhary, N. I., Aslam, M. S., Baleanu, D. & Raja, M. A. Z. Design of sign fractional optimization paradigms for parameter estimation of nonlinear Hammerstein systems. Neural Comput. Appl. 32, 8381–8399 (2020).

Chaudhary, N. I., Latif, R., Raja, M. A. Z. & Machado, J. A. T. An innovative fractional order LMS algorithm for power signal parameter estimation. Appl. Math. Model. 83, 703–718 (2020).

Liu, J. et al. A quasi fractional order gradient descent method with adaptive stepsize and its application in system identification. Appl. Math. Comput. 393, 125797 (2021).

Wang, Y., He, Y. & Zhu, Z. Study on fast speed fractional order gradient descent method and its application in neural networks. Neurocomputing 489, 366–376 (2022).

Bai, E. & Li, K. Convergence of the iterative algorithm for a general Hammerstein system identification. Automatica 46(11), 1891–1896 (2010).

Li, J. et al. A recursive identification algorithm for Wiener nonlinear systems with linear state-space subsystem. Circuits Syst. Signal Process. 37(6), 2374–2393 (2018).

Wang, J., Zhang, Q. & Ljung, L. Revisiting Hammerstein system identification through the two-stage algorithm for bilinear parameter estimation. Automatica 45(11), 2627–2633 (2009).

Ding, F. & Wang, X. Hierarchical stochastic gradient algorithm and its performance analysis for a class of bilinear-in-parameter systems. Circuits Syst. Signal Process. 36(4), 1393–1405 (2017).

Chen, M., Ding, F. & Yang, E. Gradient-based iterative parameter estimation for bilinear-in-parameter systems using the model decomposition technique. IET Control Theory Appl. 12, 2380–2389 (2018).

Ding, F., Wang, Y., Dai, J., Li, Q. & Chen, Q. A recursive least squares parameter estimation algorithm for output nonlinear autoregressive systems using the input–output data filtering. J. Franklin Inst. 354, 6938–6955 (2017).

Wang, X. & Ding, F. The filtering based parameter identification for bilinear-in-parameter systems. J. Franklin Inst. 356, 514–538 (2019).

Wang, X., Ding, F., Alsaadi, F. E. & Hayat, T. Convergence analysis of the hierarchical least squares algorithm for bilinear-in-parameter systems. Circuits Syst. Signal Process. 35(12), 4307–4330 (2016).

Chen, M., Ding, F., Xu, L., Hayat, T. & Alsaedi, A. Iterative identification algorithms for bilinear-in-parameter systems with autoregressive moving average noise. J. Franklin Inst. 354(17), 7885–7898 (2017).

Hu, Y., Liu, B. & Zhou, Q. A multi-innovation generalized extended stochastic gradient algorithm for output nonlinear autoregressive moving average systems. Appl. Math. Comput. 247, 218–224 (2014).

Yu, F., Mao, Z., Jia, M. & Yuan, P. Recursive parameter identification of Hammerstein-Wiener systems with measurement noise. Signal Process. 105, 137–147 (2014).

Zhu, Y., Wu, H., Chen, Z., Chen, Y. & Zheng, X. Design of auxiliary model and hierarchical normalized fractional adaptive algorithms for parameter estimation of bilinear-in-parameter systems. Int. J. Adapt. Control Signal Process. 36(10), 2562–2584 (2022).

Chaudhary, N. I., Raja, M. A. Z., Khan, Z. A., Mehmood, A. & Shah, S. M. Design of fractional hierarchical gradient descent algorithm for parameter estimation of nonlinear control autoregressive systems. Chaos Solitons Fractals 157, 111913 (2022).

Chaudhary, N. I., Raja, M. A. Z., He, Y., Khan, Z. A. & Machado, J. A. T. Design of multi innovation fractional LMS algorithm for parameter estimation of input nonlinear control autoregressive systems. Appl. Math. Model. 93, 412–425 (2021).

Zong, T., Li, J. & Lu, G. Auxiliary model-based multi-innovation PSO identification for Wiener-Hammerstein systems with scarce measurements. Eng. Appl. Artif. Intell. 106, 104470 (2021).

Xu, L., Ding, F. & Yang, E. Auxiliary model multiinnovation stochastic gradient parameter estimation methods for nonlinear sandwich systems. Int. J. Robust Nonlinear Control. 31, 148–165 (2021).

Chen, H., Xiao, Y. & Ding, F. Hierarchical gradient parameter estimation algorithm for Hammerstein nonlinear systems using the key term separation principle. Appl. Math. Comput. 247, 1202–1210 (2014).

Ding, F. et al. A hierarchical least squares identification algorithm for Hammerstein nonlinear systems using the key term separation. J. Franklin Inst. 355, 3737–3752 (2018).

Zhou, Y., Zhang, X. & Ding, F. Hierarchical estimation approach for RBF-AR models with regression weights based on the increasing data length. IEEE Trans. Circuits Syst. II Express Briefs 68(12), 3597–3601 (2021).

Gu, Y., Dai, W., Zhu, Q. & Nouri, H. Hierarchical multi-innovation stochastic gradient identification algorithm for estimating a bilinear state-space model with moving average noise. J. Comput. Appl. Math. 420, 114794 (2023).

Podlubny, I. Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications Vol. 198 (Academic Press, 1998).

Baleanu, D., Diethelm, K., Scalas, E. & Trujillo, J. J. Fractional Calculus: Models and Numerical Methods Vol. 3 (World Scientific, 2012).

Ortigueira, M. & Coito, F. On the usefulness of Riemann-Liouville and Caputo derivatives in describing fractional shift-invariant linear systems. J. Appl. Nonlinear Dyn. 1, 113–124 (2012).

Zahoor, R. M. A. & Qureshi, I. M. A modified least mean square algorithm using fractional derivative and its application to system identification. Eur. J. Sci. Res. 35(1), 14–21 (2009).

Ding, F. System Identification–Multi-Innovation Identification Theory and Methods (Science Press, 2016).

Mao, Y. & Ding, F. A novel data filtering based multi-innovation stochastic gradient algorithm for Hammerstein nonlinear systems. Digit. Signal Process. 46, 215–225 (2015).

Chaudhary, N. I., Raja, M. A. Z. & Khan, A. U. R. Design of modified fractional adaptive strategies for Hammerstein nonlinear control autoregressive systems. Nonlinear Dyn. 82(4), 1811–1830 (2015).

Sun, H., Xiong, W., Ding, F. & Yang, E. Hierarchical estimation methods based on the penalty term for controlled autoregressive systems with colored noises. Int. J. Robust Nonlinear Control. 34, 6804–6826 (2024).

Xu, L., Xu, H. & Ding, F. Adaptive multi-innovation gradient identification algorithms for a controlled autoregressive autoregressive moving average model. Circuits Syst. Signal Process. 43, 3718–3747 (2024).

Xu, C. & Mao, Y. Auxiliary model-based multi-innovation fractional stochastic gradient algorithm for Hammerstein output-error systems. Machines 9, 247 (2021).

Zheng, J. & Ding, F. A filtering-based recursive extended least squares algorithm and its convergence for finite impulse response moving average systems. Int. J. Robust Nonlinear Control. 34, 6063–6082 (2024).

Xing, H., Ding, F., Pan, F. & Yang, E. Hierarchical recursive least squares parameter estimation methods for multiple-input multiple-output systems by using the auxiliary models. Int. J. Adapt. Control Signal Process. 37, 2983–3007 (2023).

Geng, F. & Qian, S. An optimal reproducing kernel method for linear nonlocal boundary value problems. Appl. Math. Lett. 77, 49–56 (2018).

Fernandez, A., Özarslan, M. A. & Baleanu, D. On fractional calculus with general analytic kernels. Appl. Math. Comput. 354, 248–265 (2019).

Atangana, A., Aguilar, J. F. G., Kolade, M. O. & Hristov, J. Y. Fractional differential and integral operators with non-singular and non-local kernel with application to nonlinear dynamical systems. Chaos Solitons Fractals. 132, 109493 (2020).

Acknowledgements

The authors gratefully acknowledge the financial support received from the Natural Science Foundation of China, grant numbers 62303360, 62203339, 62173262, and 62073250. We also sincerely acknowledge the funding provided by the Hubei Provincial Natural Science Foundation of China, grant numbers 2023AFB619 and 2022CFB602.

Author information

Contributions

Main idea: H.W. and Y.Z.; methodology: Y.Z.; simulations and real plant experiments: Z.C.; validation: Z.Z.; writing (original draft preparation): Y.Z.; visualization: Y.C. and X.Z. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhu, Y., Wu, H., Chen, Z. et al. Design of multi-innovation hierarchical fractional adaptive algorithm for generalized bilinear-in-parameter system using the key term separation principle. Sci Rep 14, 32013 (2024). https://doi.org/10.1038/s41598-024-83654-3

DOI: https://doi.org/10.1038/s41598-024-83654-3