Abstract

The control of variable stiffness actuators (VSAs) is challenging because they have highly nonlinear characteristics and are difficult to model accurately. Classical control approaches using high control gains can make VSAs stiff, which alters the inherent compliance of VSAs. Iterative learning control can achieve high tracking accuracy for VSAs but generally lacks sufficient generalization. This study applies Gaussian process (GP) regression to design a stable tracking controller combining feedforward and low-gain feedback control actions for agonistic-antagonistic (AA) VSAs subjected to unknown dynamics. The GP model learns the inverse dynamics of AA-VSAs and provides model fidelity by the predicted variance. The stability analysis of the closed-loop system demonstrates that the tracking error is uniformly ultimately bounded and exponentially converges to a small ball under a given probability. Experiments on an AA-VSA named qbmove Advanced have validated the superiority of the proposed method with respect to tracking accuracy and generalization.

Similar content being viewed by others

Introduction

The increase in applications of human-robot interaction leads to the introduction of compliant actuators to robots1. Compliant actuators can be roughly classified into two types: Series elastic actuators with constant stiffness2 and variable stiffness actuators (VSAs)3. VSAs are complex mechatronic devices whose position and stiffness can be controlled by two motors separately or jointly. Classical control goals, such as adjusting equilibrium positions and stiffness or injecting damping into the system, are still important for VSAs4. Note that in contrast to passivity-based impedance control for flexible-joint robots in Ref.5 that achieves robot compliance (relevant to stiffness and damping) virtually by software implementation, the stiffness of VSAs is controlled by mechanical reconfiguration3. The control of VSAs is challenging, mainly due to their strong nonlinearities and difficulties in accurate modeling6.

Several classical model-based control approaches have been employed to VSAs4,7,8,9,10. The feedback linearization was applied to achieve decoupled position and stiffness control of VSAs in Ref.7. In Ref.4, a command-filtered backstepping controller was developed for VSA-driven robots. In Ref.8, an elastic structure-preserving controller was designed for VSA-driven robots to achieve favorable regulation with the desired link-side damping. In Ref.9, a disturbance observer-based sliding mode controller was designed for a VSA with S-shaped springs. In Ref.10, a gain-scheduling controller was applied to a lever arm-based VSA. The above control approaches have two major drawbacks: 1) they need exact VSA models that are rarely available in practice; 2) they achieve good control accuracy at the cost of altering the physical robot dynamics, which stiffens the robot and violates the purpose of introducing compliant actuators11.

Iterative learning control (ILC) can achieve high-accuracy control without accurate VSA models and high feedback gains11,12,13. Della Santina et al.11 introduced a position-based ILC method for articulated soft robots (including robots with VSAs) and showed that a suitable combination of low-gain feedback and feedforward control improves position tracking accuracy while maintaining robot compliance, but the stability problem was not discussed in Ref.11. Angelini et al.12 extended the method of Ref.11 to control articulated soft robots under unexpected interactions in unstructured environments and provided systematic design and analysis tools to guarantee closed-loop stability and tracking error convergence. However, motion control actions and stiffness are coupled in the method of Ref.12, which means that a new learning phase is needed when either the desired trajectory or stiffness is changed. To alleviate the aforesaid limitation in Ref.12, Mengacci et al.13 designed a torque-based ILC method that requires only the minimum knowledge of articulated soft robots by identifying a class of articulated soft robots whose motion and stiffness adjustments can be decoupled. However, this method still requires a new learning phase when the desired trajectory changes, needs a symmetric assumption for the elastic mechanism that is difficult to verify, and is more sensitive to friction effects compared to Ref.12. Thus, the task generalization of the above ILC methods is limited14. Note that experimental validations in Refs.11,12,13 were carried out on a series of agonistic-antagonistic (AA) VSAs named qbmove.

Machine learning-based control can improve task generalization while maintaining the benefits of ILC to some extent15,16,17,18,19,20,21. Tran et al.15 proposed an adaptive NN backstepping control method for an electrohydraulic elastic robot manipulator with variable joint stiffness. Mitrovic et al.16 introduced locally weighted projection regression (LWPR) to learn the dynamic model and stochastic properties of an AA-VSA for optimal impedance control. Knezevic et al.17 applied NN and LWPR to design two feedforward controllers for a qbmove AA-VSA, respectively. Gandarias et al.18 proposed a hybrid NN-based kinematic modeling method for open-loop position control of robots with variable stiffness links, where two NNs are applied to learn the forward kinematics for position estimation and the inverse kinematics for predicting the compensated next position, respectively. Habich et al.19 and Pan et al.20 applied Gaussian process (GP) to design feedforward controllers for the control of AA-VSAs. Liu et al.21 designed an adaptive NN backstepping controller for a pneumatic artificial muscle-driven robot with variable stiffness. Note that the stability problems were not considered in Refs.16,17,18,19,20.

In general, machine learning-based control is promising for VSAs, but to the best of our knowledge, relevant results are rare, such that it is still desirable to investigate machine learning-based control with stability guarantees for VSAs. In this study, we apply GP regression to develop a stable tracking controller consisting of feedforward and low-gain feedback actions for AA-VSAs subjected to unknown dynamics. GP is a supervised machine learning approach widely applied to robotics due to the universal approximation ability and the generalization under small training data22. Compared with NN learning, GP possesses two distinctive features23: (1) it is more convenient to achieve knowledge acquisition without the stringent condition termed persistent excitation24, where its learning capacity depends on the number of stored data; (2) it provides a fidelity measure of the learned model via the predicted variance, which can be used to improve control robustness.

The proposed GP control (GPC) method applies a GP model to learn the inverse dynamics of AA-VSAs for feedforward control, and the feedback control gains are adapted according to the predicted variance to enhance performance. The stability analysis rigorously shows that the tracking error is uniformly ultimately bounded (UUB) and exponentially converges to a ball under a given probability. The proposed GPC method is compared experimentally with a pure feedback method and an ILC method on an AA-VSA named qbmove Advanced25. Compared with existing results on AA-VSA control, the major contributions of this study include: 1) a low-gain GPC is designed for AA-VSAs to preserve the mechanical behavior with guaranteed tracking accuracy, where closed-loop stability is rigorously established based on a specially chosen Lyapunov function; 2) comparative experiments are provided to verify the superiority of the proposed method with respect to tracking accuracy and generalization. The current study significantly extends its conference version20 by providing a more comprehensive literature review, stability analysis of the closed-loop system, and robustness verification.

Notation: \(\mathbb {R}\), \(\mathbb {R}^+\), \(\mathbb {R}^{n}\), and \(\mathbb {R}^{m\times n}\) denote the spaces of real numbers, positive real numbers, real n-vectors, and real \(m\times n\)-matrices, respectively, \(\mathcal {N}(\varvec{\mu }, K)\) denotes a multivariate Gaussian distribution with a mean vector \(\varvec{\mu } \in \mathbb {R}^{m}\) and a covariance matrix \(K \in \mathbb {R}^{m \times m}\), \(\mathcal{G}\mathcal{P}(\mu , k({\varvec{x}}, {\varvec{x}}^{\prime }))\) is a GP with a mean \(\mu \in \mathbb {R}\) and a kernel function \(k: \mathbb {R}^{n} \times \mathbb {R}^{n} \mapsto \mathbb {R}\), \(\Vert \varvec{x} \Vert\) is the Euclidean norm of \(\varvec{x} \in \mathbb {R}^n\), min\(\{\cdot \}\) and max\(\{\cdot \}\) are the minimum and maximum operators, respectively, the probability of a probabilistic event \(\Pi\) is written as \(P\{\Pi \}\), and \(I_n\) denotes a \({n}\times {n}\) identity matrix, where n and m are positive integers.

Problem formulation

Consider an AA-VSA mechanism with a link moving in the vertical plane so that its dynamic model is12

where \({q}(t)\in \mathbb {R}\) is the joint angular position, \({\varvec{\theta }} (t)\) \(\in \mathbb {R}^{2}\) is the motor angular position, M \(\in \mathbb {R}^{+}\) is the inertia of the shaft, J \(\in \mathbb {R}^{2 \times 2}\) is the inertia of the motors, B \(\in \mathbb {R}^{2 \times 2}\) is the viscous friction at the motor side, D \(\in \mathbb {R}^{+}\) is the viscous friction at the link side, \({{g}({q})} \in \mathbb {R}\) is the gravity term, \({\tau }_{\text {un}} \in \mathbb {R}\) collects model uncertainties, such as nonlinear friction and coupling dynamics, \(E({q},{\varvec{\theta }}) \in \mathbb {R}\) is the elastic potential of the system, and \({\varvec{\tau }}_{\textrm{m}} \in \mathbb {R}^ {2}\) is the input torque from the motors.

To simplify the model, we introduce two assumptions from Ref.12. First, the motor dynamics (2) is ignored, which means that the motor side can be perfectly controlled, so the motor position \({\varvec{\theta }}\) can be used as an effective control input. Second, there exists a nonlinear function \(T: \mathbb {R} \times \mathbb {R} \mapsto \mathbb {R}\) satisfying \(\frac{\partial E({q}, {\varvec{\theta }}) ^T}{\partial q} = T({q} - {r}, {d})\) to model the elastic joint torque, where \(\varvec{\theta }\) \(=\) \([r+d, r-d]^T\) is a coordinate transformation, r is a motor reference position, and d is an additional parameter for stiffness adjustment. Note that the expression of \(T({q} - {r}, {d})\) and the role of d depend on the physical implementation of VSAs. For AA-VSAs, d indicates the joint co-contraction level, and it is treated to be quasi-static here. Let \({\tau }:= T({q} - {r}, {d})\). Then, one gets a simplified AA-VSA model

where r is a new control input. A control scheme in Fig. 1 will be designed for Eq. (3) in next section.

Define a position tracking error \({e}(t):= {q}(t) - {q}_{\text {d}}(t)\), where \({q}_{\text {d}}(t) \in \mathbb {R}\) denotes a joint reference position. This study aims to design a suitable control input r for the system (3) such that the position tracking error e is as small as possible under a quasi-static stiffness adjustment parameter d. In addition, r should not change the inherent physical stiffness of the system (3) beyond a specific limit. That is, given a constant \({\zeta }\) \(\in \mathbb {R}^+\), denoting the maximum stiffness variation, the closed-loop stiffness \(\frac{\partial {T({q} - {r}, {d})}}{\partial q}\) should be within a neighborhood of the radius \({\zeta }\) of the open-loop stiffness, which can be expressed as follows11:

where \({\psi (q, \dot{q})} \in \mathbb {R}\) is the feedback control part, \(\frac{\partial T({q} - {\psi (q, \dot{q})}, {d})}{\partial q}\) denotes the open-loop stiffness of the system (3), and \(q_{*} \in \mathbb {R}\) is such a value that satisfies \({\psi (q_{*})} = q_{*}.\)

Remark 1

The above two assumptions have been used in the ILC method of AA-VSAs in Ref.12. Note that the function \(T({q} - {r}, {d})\) contains the compliance information of AA-VSAs, and the closed-loop stiffness \(\frac{\partial {T({q} - {r}, {d})}}{\partial q}\) can be adjusted by designing the control input r and setting the adjustment parameter d.

Gaussian process control design

Gaussian process modeling

Consider a training data set \(\mathcal {D} = \{X,\varvec{y}\}\) generated by \(y_i = f(\varvec{x}_i) + \eta\) with \(f: \mathbb {R}^{n} \rightarrow \mathbb {R}\), where X \(:=\) \([\varvec{x}_1\), \(\varvec{x}_2\), \(\cdots\), \(\varvec{x}_N]^T\) \(\in \mathbb {R}^{N \times n}\), \(\varvec{y}\) \(:=\) \([y_1\), \(y_2\), \(\ldots\), \(y_N]^T\) \(\in \mathbb {R}^{N}\), \(\eta \sim \mathcal {N} (0, \sigma _\text {n}^2)\) is a noisy signal with the standard deviation \({\sigma _\text {n}} \in \mathbb {R}^{+}\), N is the number of data points, and n is an input dimension. Let \(\mathcal{G}\mathcal{P}(\mu , k({\varvec{x}}, {\varvec{x}}^{\prime }))\) be an approximation of f, denoted by \(f \sim \mathcal{G}\mathcal{P}(\mu , k({\varvec{x}}, {\varvec{x}}^{\prime }))\). Given a test input \(\varvec{x}^* \in \mathbb {R}^n\), the prediction of f is determined by the mean \(\mu \in \mathbb {R}\) and variance \(\sigma ^2 \in \mathbb {R}^{+}\) as follows22:

with \(K_{XX}:= K(X, X) \in \mathbb {R}^{N \times N}\), \(K_{\varvec{x}^* \varvec{x}^*}:= K(\varvec{x}^*, \varvec{x}^*) \in \mathbb {R}\), and \(K_{\varvec{x}^*X}:= K(\varvec{x}^*,X) \in \mathbb {R}^{1 \times N}\), where

is a covariance matrix. Note that the definitions of \(K(\varvec{x}^*,X)\) and \(K(\varvec{x}^*, \varvec{x}^*)\) are in the same way as that of K(X, X) so that they are omitted here. The kernel function k, which represents the correlation between two points, is frequently chosen as a Gaussian type

with \({\varvec{x}}, {\varvec{x}}^{\prime } \in \mathbb {R}^{n}\), where \(\Lambda \in \mathbb {R}^{n \times n}\) and \({\phi } \in \mathbb {R}^{+}\) are hyperparameters obtained by optimizing the likelihood function during training. The Gaussian kernel function has the mertis of powerful modeling, good numerical stability, and simple hyperparameters selection, but the weakness in interpretability and calculation speed26. If \(\Lambda\) and \({\phi }\) are determined, the output of k depends only on the distance between two points.

Consider the dynamic model (3) with M, D, and g(q) being uncertain. A prior estimation of Eq. (3) is

where \(\hat{M}\) \(\in \mathbb {R}^{+}\), \(\hat{D}\) \(\in \mathbb {R}^{+}\), and \(\hat{g}({q}) \in \mathbb {R}\) are estimates of M, D, and g(q), respectively.

Property 1

There exist constants \(m_1\), \(m_2\), \(c_{\text {d}} \in \mathbb {R}^{+}\) that satisfy \({m_1} \le {\hat{M}} \le {m_2}\) and \(\hat{D} \le c_{\text {d}}.\)

When there is no prior knowledge of Eq. (3), set \(\hat{M} = c_{\text {m}}\), \(\hat{D} = 0\), and \(\hat{g} = 0\), in which \(c_{\text {m}} \in \mathbb {R}^{+}\) is a constant. Then, a GP model is trained offline with a data set \(\mathcal {D} = \{{\varvec{p}}_{i}, {{\tilde{\tau }}}_{i}\}_{i=1}^N\) to learn the unknown part of Eq. (3), where \({\varvec{p}}_i:= [{q}, {\dot{q}}]^T \in \mathbb {R}^{2}\), and \(\tilde{\tau }_i \in \mathbb {R}\) is obtained by subtracting Eq. (9) from Eq. (3) as follows:

with \(\tilde{M}:= M - \hat{M}\), \(\tilde{D}:= D - \hat{D}\), and \(\tilde{g}:= {g} - \hat{g}\). The GP model only uses the position q and velocity \(\dot{q}\) as inputs whereas the acceleration \(\ddot{q}\) is inferred from q and \(\dot{q}\) to avoid using the immeasurable \(\ddot{q}\)27. Note that \({\varvec{p}}_i\) and \(\tilde{\tau }_i\) denote the i-th data in \(\mathcal {D}\), which are generated by a real AA-VSA described by Eq. (3) with a specially designed control input r, and the subscript i is omitted below. Considering the prior knowledge in Eq. (9) makes the GPC design more generalized, but its availability or not does not affect the GPC design. Next, we give the bound of the modeling error \(\Delta := \mu (\tilde{\tau }|{\varvec{p}}, \mathcal {D}) - \tilde{ \tau } \in \mathbb {R}.\)

Lemma 1

For the uncertainty \({{\tilde{\tau }}}\) given by Eq. (10) with the bounded reproducing kernel Hilbert space norm \(\Vert {\tilde{\tau }} \Vert _k < \infty\) on any compact set \(\Omega \subset \mathbb {R}^{2}\), \(\Delta\) is bounded by28

with \({\varvec{p}} \in {\Omega }\), where \(\delta \in \mathbb (0,1)\) is a probability value, and

with \(Y = [\varvec{p}_1, \ldots , \varvec{p}_{N}]^{T} \in \mathbb {R}^{N \times 2}.\)

Remark 2

As the same practice in Ref.12, this study assumes that a rough estimation of the function \(T ({q} - {r}, {d})\) is available (usually provided by manufacturers). Then, the control torque \(\tau\) in Eq. (3) can be obtained by substituting the real control input r. When using a GP to predict the elastic torque \(\tilde{\tau }\), r needs to be obtained by solving \(\tau = T ({q} - {r}, {d})\), which can be calculated offline to reduce the computational burden during control. The above assumption facilitates the GPC control design, and model uncertainties resulting from the inaccuracy of \(T ({q} - {r}, {d})\) can be absorbed in the lumped uncertainty \(\tau _{\textrm{un}}\) and learned by the GP. Hence, the inaccuracy of \(T ({q} - {r}, {d})\) does not affect the learning of the unknown part in Eq. (3).

Gaussian process tracking control

This section presents a GPC method that combines feedforward and variable low-gain feedback actions to guarantee tracking error convergence. To this end, define a data set \(\mathcal {D}_p = \{{q}_{i}, {{\tilde{\tau }}}_{i}\}_{i=1}^N\). Analogously to Eq. (6), let \({\sigma }_{\text {p}}(q)\) \(:=\) \({\sigma ^2}(\tilde{\tau }| q, \mathcal {D}_p)\) \(\in\) \(\mathbb {R}^{+}\) and \({\sigma }_{\text {d}}(\dot{q}, q)\) \(:=\) \({\sigma ^2}(\tilde{\tau }|\dot{q}, q, \mathcal {D})\) \(\in\) \(\mathbb {R}^{+}\). Let variable feedback gain functions \(K_{\text {p}}({\sigma }_{\text {p}}):= K_{\text {p}}({\sigma }_{\text {p}}(q)) + K_\text {pc} \in \mathbb {R}^{+}\) and \(K_{\text {d}}({\sigma }_{\text {d}})\) \(:=\) \(K_{\text {d}}({\sigma }_{\text {d}}(\dot{q}, q)) + K_\text {dc} \in \mathbb {R}^{+}\) with \(K_\text {pc}\), \(K_\text {dc} \in \mathbb {R}^{+}\) being certain constants. Then, three assumptions are given as follows.

Assumption 1

The desired signals \({q}_{\text {d}}\) and \(\dot{q}_{\text {d}}\) are bounded by \(| q_{\text {d}} | \le {c}_{\text {d}1}\), \(| \dot{q}_{\text {d}} | \le {c}_{\text {d}2}\) with \({c}_{\text {d}1}\), \({c}_{\text {d}2}\in \mathbb {R}^{+}\).

Assumption 2

The variable feedback gain functions \(K_{\text {p}}\) and \(K_{\text {d}}\) are continuous and bounded by

\(\forall q, \dot{q} \in \mathbb {R}\) with \(k_{{\text {p}}1}, k_{{\text {p}}2}, k_{{\text {d}}1}\), \(k_{{\text {d}}2} \in \mathbb {R}^{+}\) some constants.

Assumption 3

The second control goal expressed by Eq. (4) restricts the use of high feedback gains, i.e., if

then the constraint (4) holds12.

Let \(\textbf{e}:= [e, \dot{e}]^T\) be a tracking error vector. Now, we introduce the main theoretical result of this study.

Theorem 1

Consider the system (3) and a GP trained by the data set \(\mathcal {D}\) with Eq. (10). Let the control torque

with \({\varvec{p}}_{\text {d}}:= [{q_{\text {d}}}, {\dot{q}_{\text {d}}}]^T \in \mathbb {R}^{2}\), which is composed of a feedback control term \(\tau _{\text {fb}} \in \mathbb {R}\) and a feedforward control term \(\tau _{\text {ff}} \in \mathbb {R}\) containing the learned GP torque \(\mu (\tilde{\tau }|{\varvec{p}_{\text {d}}}, \mathcal {D})\) and the prior model knowledge (9) [see Fig. 1]. Then, the closed-loop system is stable in the sense that the tracking error vector \(\textbf{e}\) is UUB and exponentially converges to a ball of a radius \(\varrho \in \mathbb {R}^{+}\) with a given probability \(\delta \in (0,1).\)

Proof

One adopts a Lyapunov function candidate

and explains the stability and convergence of the closed-loop system (3) under (17) referring to Ref.23, where \(\epsilon \in \mathbb {R}^{+}\) is a small coefficient specified later, and \(z \in \mathbb {R}\) is an integral variable. To show that (18) is positive-definite and bounded, we first analyze its integral term based on Eq. (14), which is lower-bounded by

The 3rd term at the right-hand side of Eq. (18) is upper-bounded by

Then, one obtains \(\epsilon {\hat{M}}{e}{\dot{e}} \ge -{\frac{1}{2}} \epsilon m_2( {\dot{e}}^2 + {e}^2 )\). Considering \(\hat{M}\) is positive-definite, the 1st term at the right-hand side of (18) is positive-definite. Therefore, Eq. (18) is lower-bounded by

If \(\epsilon <\text {min} \{k_{p1}/m_2, m_1/m_2\}\), the lower bound in Eq. (21) is positive-definite. Similarly, one gets an upper quadratic bound of the integral term in Eq. (18) using the upper bound of \(K_{\text {p}}\) in Eq. (14) as follows:

Therefore, using Property 1, Assumption 2, Eqs. (20), (22), one obtains an upper bound of Eq. (18) as follows:

which is positive for \(0< \epsilon <\text {min} \{k_{p1}/m_2, m_1/m_2\}\). Thus, there exist constants \(c_1, c_2 \in \mathbb {R}^+\) to get

Next, the time derivative of V in Eq. (18) is given by

Combining Eqs. (3), (9), and (17) yields the closed-loop dynamics

Considering the mean function \(\mu (\tilde{\tau }|{\varvec{p}}, \mathcal {D})\) satisfies the Lipschitz condition on \({\varvec{p}} = [{q}, {\dot{q}}]^T\), one gets

with \({\varsigma } \in \mathbb {R}^{+}\) being a constant. Then, one obtains

Substituting the above inequality into Eq. (26), one gets

Applying the above inequality to Eq. (25), one obtains

Using Property 1 and Assumption 2, one gets

With Lemma 1 and the above inequalities, one gets

According to the Peter-Paul inequality, one knows that \({e}{\dot{e}} \le \frac{1}{2}(\rho {{\dot{e}}^2} + {{e}^2}/{\rho } )\) holds, \(\forall {e},{\dot{e}} \in \mathbb {R}\) and \(\rho \in \mathbb {R}^{+}\). Then, Eq. (34) is transformed into

For the sake of simplicity, set \(\rho = (1 + \epsilon _2)\frac{\epsilon (k_\text {d2} + c_d) +(1+\epsilon ){\varsigma }}{2 \epsilon k_\text {p1}}\) with \(\epsilon _2 \in \mathbb {R^{+}}\). The choice of \(\rho\) ensures that the factors of the quadratic items in Eq. (35) are still negative:

with \(v_1:= - \epsilon m_2 - {\varsigma } + k_\text {d1} - \rho (\epsilon (k_\text {d2} + c_d) +(1 +\epsilon ){\varsigma })/2\) and \(v_2:= k_{p1} \epsilon _2/(1 + \epsilon _2) - {\varsigma }\). To ensure \(v_1\), \(v_2 \in \mathbb {R}^{+}\) (i.e., being positive constants), one needs \(k_{{\text {p}}1} \ge {\varsigma } + {\varsigma }/ {\epsilon _2}\) and \(k_{{\text {d}}1} \ge {\varsigma } +\rho {\varsigma } / 2\), and the restriction of \(\epsilon\) below Eq. (21) should be extended to

As the covariance function k is continuous, it is bounded on the compact set \(\Omega\) and the variance \(\sigma ^2(\tilde{\tau }|\varvec{p}, \mathcal {D})\) is bounded. Thus, the modeling error \(\Delta\) has an upper bound. Using \({v_1}| x | \le {{v_1}^2} / {v_2} + {{v_2}{| x |}^2}/{4}\), \(\forall { x \in \mathbb {R}}\) and \(v_1\), \(v_2 \in \mathbb {R}^{+}\), one obtains

Thus, there exist constants \(c_3, \xi \in \mathbb {R}^{+}\) for Eq. (34) to get

with \(\xi := {\frac{{\Delta }^{2}}{v_1}} + \epsilon {\frac{{\Delta }^{2}}{v_2}}\) and \(c_3:= \frac{3}{2} {\frac{\text {min}\{v_1,\epsilon v_2\}}{\text {max}\{\epsilon m_2 + k_\text {p2},(1+\epsilon ) m_2\}}}.\)

Now, applying the Lemma 2 of Ref.29 to Eqs. (24) and (39), one gets that the closed-loop system (3) with (17) is stable in the sense that the tracking error vector \(\textbf{e}\) is UUB and exponentially converges to a ball with a given probability \(\delta\). Besides, as the states q and \(\dot{q}\) are bounded, a compact set \(\Omega\) and a maximum of the modeling error \({\Delta }\) can be found to make \(\varvec{p} \in \Omega\). The radius \(\varrho\) of the ball in Theorem 1 is given exactly as follows29:

where \(\epsilon\) meets the restriction in Eq. (37). If a perfect GP model is available, such that the model error \(\Delta = 0\), the closed-loop system (3) with (17) is asymptotically stable.

Remark 3

The feedback gains \(K_{\text {p}}\) and \(K_{\text {d}}\) in Eq. (17) are adapted according to the variances \({\sigma }_{\text {p}}\) and \({\sigma }_{\text {d}}\), respectively. If the distance between the desired trajectory \({q}_{\text {d}}\) and the training data set \(\mathcal {D}\) becomes large, the GP prediction torque \({\tilde{\tau }}\) becomes inaccurate and \({K_{\text {d}}}\) and \({K_{\text {p}}}\) will increase to ensure tracking accuracy. We use the variances \({\sigma }_{\text {p}}\) and \({\sigma }_{\text {d}}\) to make sure that \({K_{\text {d}}}\) and \({K_{\text {p}}}\) depend implicitly and exclusively on q and (q, \(\dot{q})\), respectively29.

Remark 4

It is indicated in Assumption 3 that the inherent mechanical behavior of AA-VSAs can be preserved by using a low-gain controller, i.e., the feedback gains in feedback action \(\tau _{\text {fb}}\) (defined as \(\psi (q, \dot{q})\) in Eq. (4)) of Eq. (17) should satisfy the condition (16). Thus, the control gains \(K_{\text {p}}\) and \(K_{\text {d}}\) should be kept low. As we assume that there is no prior knowledge about the gravity term g(q), the feedforward action \(\tau _{\text {ff}}\) in Eq. (17) does not depend on q so does not affect Assumption 3.

Experimental studies

Experimental setup

This section implements the GPC method (17) to a qbmove Advanced actuator with load [see Fig. 2], where the control torque \(\tau\) is transferred into the position input r as in Remark 2. Two position-based methods are chosen as baselines, including a proportional integral-integral (PII) method and a position-based ILC method12. The qbmove Advanced actuator is a bidirectional AA-VSA that reaches the best performance in the qbmove family25, where its compliant mechanism is implemented by two motors connected at the output shaft by nonlinear springs [see Fig. 3], and its actuation torque \({\tau }\) in Eq. (3) is explicitly expressed by

where \(a_1 =\) 8.9995, \(a_2 =\) 8.9989, \(k_{\theta 1} =\) 0.0026, and \(k_{\theta 2} =\) 0.0011 are elastic parameters from the data sheet. The actuator is connected to a PC using RS485/USB protocol and powered by a 24V power supply. A manufacturer’s MATLAB/Simulink library is run on the PC to interface the actuator. Then, we execute the Simulink code such that the actuator is activated to receive the position control input r from the PC.

The schematic diagram of a kind of AA-VSAs named qbmove redrawn from ref.25. (a) The antagonistic mechanism of the qbmove actuator, where two motors are connected at the output shaft by nonlinear springs, q is an output position, \(\theta _1\) and \(\theta _2\) are motor positions, and \(\tau\) is an elastic torque. (b) The implementation of nonlinear compliant elements in the qbmove actuator, where the two circles represent one of the motors and the output shaft that are connected by nonlinear springs. (c) Sensor illustration in the qbmove actuator.

Training data collection

The training data set \(\mathcal {D}\) contains \(q, \dot{q}\), and \(\tilde{\tau }\), where q can be obtained by the encoder inside the qbmove Advanced actuator, and \(\dot{q}\) can be estimated through the low-pass filtering of the measured q. In this section, \(\mathcal {D}\) is collected to cover various values of q, in which the actuator described by Eq. (3) is activated by

where \(a_{{\text {w}}1}\), \(a_{{\text {w}}2} \in \mathbb {R}\) denote amplitude parameters chosen to satisfy \(|q| \le 1\) rad via Eq. (3) (according to the application scenario), and \(w_1\), \(w_2 \in \mathbb {R}^{+}\) are frequency parameters. To make the input signal r rich enough, we choose different values of \(a_{{\text {w}}1}\), \(a_{{\text {w}}2}\), \(w_1\), and \(w_2\) during data collection.

As the prior knowledge of the model (3) is unavailable, we set \(\hat{M} = 0.001\), \(\hat{D} = 0\), and \(\hat{g} = 0\) in Eq. (10). Then, the uncertainty \(\tilde{\tau }\) is calculable by Eqs. (10) and (41). Note that the model uncertainty caused by the inaccuracy of Eq. (41) can be included in the lumped uncertainty \({\tau }_{\text {un}}\) and learned by the GP model. The data collection is sampled at 1 kHz, and the number N of data points is set to be 2000 by trial and error. Note that choosing N needs to consider the modeling error \(\Delta\), the GP input dimension n, and the computational burden. After the input dimension n is fixed, N should be chosen carefully, as a small N may lead to a large \(\Delta\), while a large N may also increase \(\Delta\) as optimizing the length-scale parameter \(\Lambda\) of GP becomes computationally intractable30. The offline training time of the GP model with \(N = 2000\) is about 10 s in a personal computer with 2.90GHz Intel i7-10700 CPU and 16GB RAM, which is usually negligible. In addition, \(\tilde{\tau }\) will quickly decrease to 0 when the desired trajectory \({q_{\text {d}}}\) is far away from the training data set \(\mathcal {D}\), which means that the GP should be trained with a sufficiently large area of \(\varvec{p}\) to ensure its generalization.

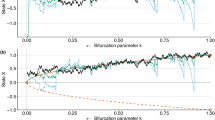

The collected data set \(\mathcal {D}\) is divided into two parts: A training data set for GP modeling and a testing data set for verifying modeling fidelity. In this study, the hyperparameter \(\Lambda\) represents the weights of the position q and velocity \(\dot{q}\) in the kernel function k. Due to the presence of noise in \(\dot{q}\), we set the initial weight for \(\dot{q}\) to be lower than that for q. In the training process, using the fminsearch function in MATLAB to optimize the likelihood function, one obtains the hyperparameters \(\Lambda = [0.5\ 0\;0\ 2.30]\) and \({\phi } =0.0085\). Fig. 4 illustrates \(\mathcal {D}\) under the above input signal r. The fidelity of the learned GP model via the predicted variance is verified in Fig. 5, and hence, the GP prediction mean \(\mu (\tilde{\tau }|{\varvec{p}_{\text {d}}}, \mathcal {D})\) can be used for the feedforward compensation of the unknown part in Eq. (3) in the GPC method (17). Note that the experiments here show the cases with fixed stiffness parameter d. Other cases with varying d can be handled similarly to collect training data under a different d.

Experiment 1: performance validation

The aim of this experiment is to illustrate the tracking performance of the GPC method (17) and its softness preservation capability compared with the two comparative methods. In all experiments, the stiffness parameter d is set to 0 rad, implying minimum stiffness. We use the same desired trajectory as in Ref.12 given by

which is a smooth polynomial path from 0 to 0.7854 rad with the terminal time \(t_\text {f}\) = 2 s. Firstly, we apply the GPC method (17) to the qbmove Advanced actuator, where the feedback control gains are parameterized as \(K_{\text {p}}({\sigma }_{\text {p}}) = 100 {\sigma }_{\text {p}} + 0.004\) and \(K_{\text {d}}({\sigma }_{\text {d}}) = 20 {\sigma }_{\text {d}} + 0.001\), and the control gain boundaries in (14) and (15) are set as \(k_{{\text {p}}1} = 0.004, k_{{\text {p}}2} = 0.04, k_{{\text {d}}1} = 0.001\), and \(k_{{\text {d}}2} = 0.01\). The open-loop stiffness \(\frac{\partial T({q} - {\psi (q)}, {d})}{\partial q}\) in Eq. (4) is calculated as 0.033 N m rad\(^{-1}\) by using Eq. (41) and the rule in Ref.11. Then, the maximum stiffness variation \(\zeta\) in Eq. (4) is calculated as 0.005 N m rad\(^{-1}\) by Eq. (16) under the proposed method.

For comparison purposes, we implement the PII method12 as follows to the actuator:

in which the control gains are set higher than those of the GPC method to make the tracking performance comparable. The maximum stiffness variation \(\zeta\) of the PII becomes 0.04 N m rad\(^{-1}\), which is much bigger than that of the GPC. The ILC from12 is compared similarly, where we set the iteration number as 10 and the input weight of the linear-quadratic regulator as 5 (relevant to the convergence rate).

Control results by the above three methods are depicted in Figs. 6 and 7. Note that the control actions and gains by the PII and ILC are not depicted as their control actions are the motor reference position r, the control gains of the PII are fixed, and the control gains of the ILC are similar to that of the proposed method. It is shown that the proposed method achieves guaranteed tracking accuracy [see Fig. 6], the feedback gains \(K_{\text {p}}\) and \(K_{\text {d}}\) are adjusted based on the predicted variances \({\sigma }_{\text {p}}\) and \({\sigma }_{\text {d}}\), respectively, and the GP feedforward term \(\tau _{\text {ff}}\) remains a large part in the control action [see Fig. 7]. The ILC at the first iteration and the PII show much larger tracking errors e than the proposed method [see Fig. 6]. Note that the link gravity is considered in this case, so the tracking accuracy of the PII is not as good as that in Ref.12 without considering link gravity.

Considering that ILC requires iterations, we normalize the integral of the tracking error e by means of the terminal time \(t_\text {f}\) to compare the tracking performance. Define an averaged integral error

The comparison of the tracking performances over iterations for the three methods is depicted in Fig. 8, where the errors E by the GPC and PII are constant as they do not require iterations. It is shown that the GPC achieves much higher tracking accuracy than the PII, because it applies the mean function \(\mu (\tilde{\tau }|{\varvec{p}_{\text {d}}}, \mathcal {D})\) as the feedforward control action \({\tau }_{\text {ff}}\), which can compensate for the unknown dynamics in (3) well when the desired trajectory \({q_{\text {d}}}\) is around the training data \(\mathcal {D}\). Thus, the GPC can use lower feedback gains, which implies that the maximum stiffness variation \(\zeta\) of the GPC is smaller than that of the PII.

The ILC performs worse than the GPC at the beginning but achieves higher tracking accuracy after several iterations [see the 10th iteration of ILC in Fig. 6]. This is because the control actions of the ILC in the previous iterations can be exploited as the feedforward control actions \({\tau }_{\text {ff}}\) of future iterations to improve tracking accuracy. However, this improvement has the cost of repeating the control task with the time cost \((t_{\textrm{a}} +t_{\textrm{f}} + t_{\mathrm{{off}}}) \times n_{\textrm{k}}\), where \(n_{\textrm{k}}\) is the iteration number, \(t_{\textrm{a}}\) is the time needed to arrange the robot in the initial condition, and \(t_\mathrm{{off}}\) is the time needed to compute (offline) the control action between two trials12. Moreover, it makes the ILC more memory-consuming, where the number of datapoints needed is \(n_{\textrm{k}} \times\) \(t_{\textrm{f}}/ t_{\textrm{s}}\) with \(t_{\textrm{s}}\) being the sampling time. The comparison of datapoint usage and execution time of the GPC and ILC in Experiment 1 is summarized in Table 1. Note that the datapoint usage and execution time are related to the number of tasks and the trajectory duration \(t_{\textrm{f}}\), where a large \(t_{\textrm{f}}\) or more tasks will lead to more datapoints and execution time for the ILC. The tracking error e by the GPC is noisier than that by the ILC [see Fig. 6], as the data set \(\mathcal {D}\) for GPC training is noisy, which affects the prediction of \({\tau }_{\text {ff}}\). Compared with the ILC, the GPC can provide the prediction variance (in addition to the feedforward control action), which represents the model fidelity according to the distance between the desired trajectory \(q_{\text {d}}\) and the training data set \(\mathcal {D}\). The feedback gains \(K_{\text {p}}\) and \(K_{\text {d}}\) increase when \({q_{\text {d}}}\) is far away from \(\mathcal {D}\), such that the tracking error e remains low. Note that the variations of \(K_{\text {p}}\) and \(K_{\text {d}}\) improve the tracking performance but bring the extra computational cost to get the variances \({\sigma }_{\text {p}}\) and \({\sigma }_{\text {d}}.\)

Experiment 2: robustness validation

To further evaluate the generalization and robustness of the proposed GPC, we keep the experimental setup the same as before but set three different desired trajectories \(q_{\text {d}}\). The desired trajectory is generated by

where \({a_r}(s) = {s^n} + {a_{rn - 1}}{s^{n - 1}}+ \cdots + {a_{r0}}\) is a monic Hurwitz polynomial, \({q_{\text {r}}}(t) \in \mathbb {R}\) is a bounded and piecewise-continuous signal, s is a complex variable, and \(a_{ri} \in \mathbb {R}^+\) with \(i =\) 0 to \(n-\)1 are constants. Here, we set \({a_r}(s)\) \(=\) \({s^4}\) \(+\) \({8}{s^{3}}\) \(+\) \({24}{s^{2}}\) \(+\) 32s \(+\) 16. The reference signals \({q_{\text {r}}}(t)\) for desired trajectories A and B are set as 5.6\(\pi\)sin(0.5t) and 2.67\(\pi\)sin(0.4t) with \(\ t \in\) [0, 6), respectively. The desired trajectory C is given by

Control results by the proposed method are depicted in Fig. 9, which show high tracking accuracy without retraining for Trajectory A. According to our experiments, the PII still shows poor tracking accuracy even with high feedback gains when \({q _{\text {d}}}\) changes, resulting in a large stiffness variation \(\zeta\); the ILC achieves good tracking accuracy after several iterations, but it needs to retrain iteratively when \({q _{\text {d}}}\) changes as concluded in Ref.12. Experimental trajectories by the PII and ILC are not depicted here as they are similar to those in Experiment 1. In sharp contrast, the proposed method achieves guaranteed tracking accuracy, preserves the actuator softness due to the low feedback gains, and possesses favorable generalization.

To validate the robustness of the GPC, we use Trajectory B with an initial tracking error e(0) and Trajectory C under an external disturbance \(\tau _{\mathrm{{dis}}}(t) \in \mathbb {R}\). Control results for Trajectory B are depicted in Fig. 10, where the feedback term \(\tau _{\text {fb}}\) remains a large part at the initial control stage and rapidly decreases when achieving guaranteed tracking accuracy. We inject an external disturbance \(\tau _{\mathrm{{dis}}}(t)\) to the right-hand side of Eq. (1) by using a human hand to touch the robot link during \(t \in\) [0.60, 1.35] s, resulting in a large tracking error e for Trajectory C [see Fig. 11]. Once the disturbance disappears, the GPC resumes trajectory tracking quickly, implying its strong robustness to external disturbances. This is because the learned GP model has favorable prediction and compensation effects, so the GPC has enough stability margin to overcome external disturbances even with low feedback gains \(K_{\text {p}}\) and \(K_{\text {d}}\).

Remark 5

The mechanical and electrical parameters of AA-VSAs may change over time due to internal or external conditions, resulting in model variations in Eq. (3). In such a situation, like all offline learning-based control methods, such as16,31, retraining is necessary when the proposed GPC faces a large amount of parameter changes. Fortunately, training data collection is not tedious for our case. As the actuator generates a lot of data while working, it can be selected for retraining when the control performance is declining, and the offline training time of the GP model used in the experiments is about 10 s on a personal computer with 2.90 GHz Intel i7-10700 CPU and 16 GB RAM, which is usually negligible. Finally, online machine learning can alleviate the above limitation, which may be explored in our further studies.

Conclusions

This paper has developed a stable GPC tracking method that combines GP feedforward and variable low-gain feedback actions for AA-VSAs. A GP model is applied to learn the inverse actuator dynamics and to provide model fidelity, and feedback gains are adapted according to model fidelity to enhance the tracking performance. The stability analysis of the closed-loop system rigorously shows that the tracking error is UUB and exponentially converges to a ball with a given probability. Experiments on the qbmove Advanced actuator have validated that the proposed method achieves guaranteed tracking accuracy, preserves the mechanical behavior of the actuator, and has better generalization than state-of-the-art control approaches. Future work would focus on the time-varying stiffness of AA-VSAs and the extension to the multi-DoF case, where the number N of data points must be considered carefully to balance modeling accuracy and computational burden as the computational complexity of GP scales exponentially in \(O(N^{3})\) due to the need to invert a \(N \times N\) kernel matrix.

Data availability

The datasets generated and/or analysed in this study are available from the corresponding author upon reasonable request.

References

Ham, R., Sugar, T., Vanderborght, B., Hollander, K. & Lefeber, D. Compliant actuator designs. IEEE Robot. Autom. Mag. 16, 81–94 (2009).

Pratt, G. & Williamson, M. Series elastic actuators. In Proc. IEEE/RSJ Int. Conf. Intell. Robot. Syst. 399–406 (1995).

Vanderborght, B. et al. Variable impedance actuators: a review. Rob. Auton. Syst. 61, 1601–1614 (2013).

Petit, F., Daasch, A. & Albu-Schaffer, A. Backstepping control of variable stiffness robots. IEEE Trans. Control Syst. Technol. 23, 2195–2202 (2015).

Ott, C., Albu-Schaffer, A., Kugi, A. & Hirzinger, G. On the passivity-based impedance control of flexible joint robots. IEEE Trans. Robot. 24, 416–429 (2008).

Angelini, F., Della Santina, C., Garabini, M., Bianchi, M. & Bicchi, A. Control architecture for human-like motion with applications to articulated soft robots. Front. Robot. AI 7, 117 (2020).

Palli, G., Melchiorri, C. & De Luca, A. On the feedback linearization of robots with variable joint stiffness. In Proc. IEEE Int. Conf. Robot. Autom 1753–1759 (Pasadena, 2008).

Mengacci, R., Keppler, M., Pfanne, M., Bicchi, A. & Ott, C. Elastic structure preserving control for compliant robots driven by agonistic-antagonistic actuators (ESPaa). IEEE Robot. Autom. Lett. 6, 879–886 (2021).

Xu, Y., Guo, K., Li, J. & Li, Y. A novel rotational actuator with variable stiffness using S-shaped springs. IEEE/ASME Trans. Mechatron. 26, 2249–2260 (2021).

Liu, L. et al. Low impedance-guaranteed gain-scheduled GESO for torque-controlled VSA with application of exoskeleton-assisted sit-to-stand. IEEE/ASME Trans. Mechatron. 26, 2080–2091 (2021).

Della-Santina, C. et al. Balancing feedback and feedforward elements. Controlling soft robots. IEEE Robot. Autom. Mag. 24, 75–83 (2017).

Angelini, F. et al. Decentralized trajectory tracking control for soft robots interacting with the environment. IEEE Trans. Robot. 34, 924–935 (2018).

Mengacci, R., Angelini, F., Catalano, M. G., Grioli, G. & Garabini, M. On the motion/stiffness decoupling property of articulated soft robots with application to model-free torque iterative learning control. Int. J. Robot. Res. 40, 348–374 (2021).

Angelini, F., Mengacci, R., Santina, C. D., Catalano, M. G. & Grioli, G. Time generalization of trajectories learned on articulated soft robots. IEEE Robot. Autom. Lett. 5, 3493–3500 (2020).

Tran, D.-T., Nguyen, M.-N. & Ahn, K. K. RBF neural network based backstepping control for an electrohydraulic elastic manipulator. Appl. Sci. 9, 11 (2019).

Mitrovic, D., Klanke, S. & Vijayakumar, S. Learning impedance control of antagonistic systems based on stochastic optimization principles. Int. J. Robot. Res. 30, 556–573 (2011).

Nikola, K., Branko, L. & Jovanovic, K. Feedforward control approaches to bidirectional antagonistic actuators based on learning. In Advances in Service and Industrial Robotics 337–345 (Springer, 2020).

Gandarias, J. M. et al. Open-loop position control in collaborative, modular variable-stiffness-link (VSL) robots. IEEE Robot. Autom. Lett. 5, 1772–1779 (2020).

Habich, T.-L., Kleinjohann, S. & Schappler, M. Learning-based position and stiffness feedforward control of antagonistic soft pneumatic actuators using gaussian processes. In IEEE Int. Conf. Soft Robot. 1–7 (2023).

Pan, Y., Zou, Z., Li, W., Yang, C. & Yu, H. Gaussian process inverse dynamics learning for variable stiffness actuator control. In Proc. IEEE Int. Conf. Adv. Intell. Mechatron. 1373–1377 (2024).

Liu, G. et al. Neural network-based adaptive command filtering control for pneumatic artificial muscle robots with input uncertainties. Control. Eng. Pract. 118, 104960–104971 (2022).

Rasmussen, C. E. & Williams, C. K. I. Gaufssian Processes for Machine Learning (MIT Press, 2006).

Beckers, T., Kulić, D. & Hirche, S. Stable Gaussian process based tracking control of Euler-Lagrange systems. Automatica 103, 390–397 (2019).

Pan, Y. & Yu, H. Composite learning robot control with guaranteed parameter convergence. Automatica 89, 398–406 (2018).

Della-Santina, C. et al. An open platform to fast-prototyping articulated soft robots. The quest for natural machine motion. IEEE Robot. Autom. Mag. 24, 48–56 (2017).

Pillonetto, G., Dinuzzo, F., Chen, T., De Nicolao, G. & Ljung, L. Kernel methods in system identification, machine learning and function estimation: a survey. Automatica 50, 657–682 (2014).

Valencia-Vidal, B., Ros, E., Abadía, I. & Luque, N. R. Bidirectional recurrent learning of inverse dynamic models for robots with elastic joints: a real-time real-world implementation. Front. Neurorobot. 17, 1–15 (2023).

Srinivas, N., Krause, A., Kakade, S. M. & Seeger, M. W. Information-theoretic regret bounds for Gaussian process optimization in the bandit setting. IEEE Trans. Inf. Theory 58, 3250–3265 (2012).

Ravichandran, T., Wang, D. & Heppler, G. Stability and robustness of a class of nonlinear controllers for robot manipulators. In Proc. Am. Control Conf. 5262–5267 (2004).

Rueckert, E., Nakatenus, M., Tosatto, S. & Peters, J. Learning inverse dynamics models in O(n) time with LSTM networks. In Proc. IEEE Int. Conf. Humanoid Robot. 811–816 (2017).

Chen, K., Yi, J. & Song, D. Gaussian-process-based control of underactuated balance robots with guaranteed performance. IEEE Trans. Robot. 39, 572–589 (2023).

Acknowledgements

This work was supported in part by the Guangdong S&T Programme, China under Grant 2024B0101010003.

Author information

Authors and Affiliations

Contributions

Zhigang Zou: Conceptualization; Methodology; Validation; Writing—original draft; Writing—review and editing. Wei Li: Methodology; Writing—review and editing. Chenguang Yang: Conceptualization; Writing—review and editing. Yongping Pan: Conceptualization; funding acquisition; Investigation; Supervision; Writing—review and editing.

Corresponding author

Ethics declarations

Competing Interests:

The authors declare no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Supplementary Information.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zou, Z., Li, W., Yang, C. et al. Stable Gaussian process tracking control of antagonistic variable stiffness actuators. Sci Rep 15, 19762 (2025). https://doi.org/10.1038/s41598-025-03659-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-03659-4