Abstract

Limestone calcined clay cement (LC3) is a promising low-carbon construction material in terms of its comparable mechanical performance to ordinary Portland cement (OPC) but a much less embodied carbon footprint. Previous literature have demonstrated that the large-scale implementation of LC3 can reduce embodied CO2 emissions associated with OPC production by at least 30%. This study proposes a hybrid framework combining machine learning (ML) and multi-objective optimization (MOO) to design cost-effective and eco-friendly LC3 mixtures. A dataset of 387 LC3 specimens was constructed to develop ML models for predicting compressive strength. Multivariate Imputation by Chained Equations-Extreme Gradient Boosting (MICE-XGBoost) model achieved the highest accuracy of R2 = 0.928 (± 0.009). SHAP analysis identified key factors influencing strength, including water-to-cement/binder ratio, and kaolinite content. The local range of each feature showing more significant contributions was also identified. Non-dominated Sorting Genetic Algorithm-II was employed for MOO, generating Pareto fronts to minimize cost and embodied carbon while meeting strength requirements. A minimum balanced reduction in cost by 13.06% and embodied carbon by 14.83% was obtained. Inflection points on Pareto fronts were identified to guide decision-making for low-medium grade mixtures. A table of optimal mix designs is provided, offering practical solutions for selecting sustainable LC3 formulations.

Similar content being viewed by others

Introduction

Limestone calcined clay cement (LC3) is a promising low-carbon construction material composed of clinker, calcined clay, and limestone powder1,2,3. Its increasing application in diverse construction scenarios highlights its significance in advancing sustainable construction practices. The primary advantage of LC3 lies in its ability to partially substitute clinker with more environmentally friendly materials, achieving a 35%-40% reduction in energy consumption and CO2 emissions while maintaining adequate mechanical properties and durability4. Although extensive research demonstrates that LC3 own mechanical performance comparable to ordinary Portland cement (OPC), accurately modeling its performance—particularly mechanical strength—remains challenging due to an incomplete understanding of cement hydration and pozzolanic reactivity5,6,7. Similar to conventional concrete, many studies on LC3 rely on empirical models that correlate ultimate mechanical properties with key parameters, such as the water-to-cement ratio, aggregate size, or curing conditions. For example, Liang et al.8 developed a uniaxial compressive constitutive model based on 12 LC3 mixes with identical water/binder ratio (0.27) but varying clinker substitution levels (35%-55%) and calcined clay to limestone ratios (2:1 and 3:1). However, such models often oversimplify LC3’s behavior by excluding the influence of raw material composition on mechanical performance.

Data-driven methods, particularly machine learning (ML), have emerged as effective complements to traditional mix design approaches for modeling the mechanical performance of cementitious materials9,10. Various ML algorithms offer scalability and efficiency, minimizing the need for trial-and-error experiments while providing high-performance predictive models with sufficient generalizability and robustness through analyzing diverse datasets11,12,13. Several studies have attempted to predict the compressive strength of LC3 using ML algorithms14,15. Table 1 provides a summary of different low-carbon concrete properties production based on ML algorithms by various researchers. However, research in this area is still in its infancy, where the feature importance analysis results of ML models for LC3 are usually inconsistent with material theories. For instance, the findings from14 reveal that the alumina-to-silica ratio of clays has a significant influence on the compressive strength of LC3, while the results in15 demonstrate that age is the most significant variable on the compressive strength of LC3, while water/cement ratio, which has been considered as the most critical parameter in traditional LC3 mix design, only ranks at 10th.

The inconsistency between the feature importance results of the ML model and established material theories or empirical knowledge may imply the unreliability of models. In the existing literature, this issue may arise due to the limited number of features in the dataset. The LC3 is composed of a more complex composition compared to OPC, as well as the greater diversity in raw material sources, the influence of its components on performance becomes more intricate. Therefore, it is essential to build a comprehensive dataset with diverse features. However, during the process of constructing this comprehensive dataset, challenges related to data completeness will arise. Although extensive experimental data from different research groups is available in the literature30,31,32, their presentation format, style, and completeness vary significantly. For example, the composition of calcined clay, i.e., the main raw material for LC3, is presented using X-ray fluorescence in some studies, while others only disclose its kaolinite content. Additionally, in practical applications, it may not be able to obtain all the features of raw materials, resulting in a need to establish ML models capable of handling missing features or incomplete data for LC3 prediction. Therefore, data imputation techniques are adopted to enhance prediction. The explanatory analysis will also be applied to the developed model to validate the practicability of the ML model by comparing the results with the theory of material science.

In addition to simply predicting the strength of a given mixture, to better promote the widespread application of LC3 as a sustainable construction material, there is also a demand for an intelligent design method for LC3 mixtures, which is capable of quickly providing the optimal mixtures that meet strength requirements, while the lower cost and reduced environmental impact are typically the main focuses for the optimal. Improvements in these two indicators frequently come at the expense of strength. For instance, high-strength concrete formulations often require increased cement content, raising both material costs and embodied carbon emissions. There is also a conflict between the objectives of reducing embodied carbon and lowering cost in some features. Therefore, multi-objective optimization (MOO) is necessary to navigate the determination of optimal LC3 mixtures with proper trade-offs between the objectives of minimum cost and minimum embodied carbon.

To address the above issues, this paper proposes a hybrid framework that integrates ML and MOO for the design of LC3 mixtures. ML algorithms are utilized to rapidly estimate the compressive strength of LC3 with given mixtures. The multi-objective heuristic algorithm is adopted to determine the optimal LC3 mixture with objectives of minimum cost and minimum embodied CO2 emissions. Figure 1 provides a schematic illustration of the methodology adopted in this study. To develop the ML prediction model, a comprehensive dataset was formed with both experiment results and published data from research articles. Before conducting the data training, a missing value imputation algorithm was adopted to improve data quality and standardize the original data. ML prediction model for LC3 compressive strength is developed with the combined algorithm selection and hyperparameter tuning process. Shapley Additive Explanations (SHAP) was employed to analyze how material composition, mix design, and curing conditions affect LC3 compressive strength. Furthermore, multi-objective optimization problems are formed based on the ML model, to optimize LC3 mix design, considering cost, mechanical and environmental criteria.

Methodology

Dataset description

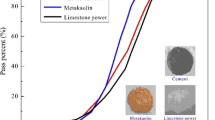

The raw dataset of LC3 consists of 387 specimens, including 18 from self-designed experiments and the remainder extracted from existing literatures4,30,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117. This data collection approach ensures both reliability and sufficiency by encompassing a substantial number of data points from diverse sources. The calcined clays used in the self-designed experiments were obtained from five different suppliers in China, with each type representing an individual clay source. The mixture of LC3 mortar specimens was determined based on established guidelines from previous literature116. The proportion of each component in the cementitious materials is clinker 50%, calcined clay 31%, limestone 15%, and the gypsum 4%. 6 sources of calcined clay with different contents of SiO2, Al2O3, and CaO were utilized. The water/binder ratio was set at 0.45, and the sand to binder ratio was 2. Fresh mortar mixtures were cast into 40mm × 40mm × 40mm molds and cured under saturated lime water for 28 days before compressive strength measurements. Figure 2 provides an overview of the experiment setup and raw materials.

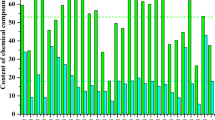

Overall, the dataset includes 19 input features, encompassing mix design parameters, raw materials characteristics (e.g., properties of calcined clay and limestone powders), and curing conditions. The target features are 28-day compressive strength, material cost, and embodied carbon. Strength values were obtained from both the literature and experimental measurements. The cost and embodied carbon values of all raw materials are summarized in Table 2. Feature names, along with the statistics for the 19 input features and 3 target features, are detailed in Table 3. Furthermore, to ensure the model robustness, it is essential to standardize the data formats extracted from different literature and harmonize the representation of mix designs across various sample types (e.g., paste, mortar, and concrete) and different material types. For example, three extra mixture parameters, i.e., the sand-to-binder ratio, sand-to-aggregate ratio and aggregate to paste volume were employed to reflect the individual characteristics among paste, mortar, and concrete specimens. To reflect the material-specific differences arising from various sources of calcined clay, the dataset incorporated both compositional and processing-related parameters. On the compositional side, kaolinite content (wt%), SiO2 content (wt%), and Al2O3 content (wt%) were used to capture the chemical variability. Additionally, two processing parameters—calcined clay particle size and calcination temperature—were included to account for differences in physical properties and thermal treatment histories.

Algorithms

Missing value imputation algorithm

Due to the inconsistent recording across different studies, missing values are a common issue in the dataset. An incomplete dataset can compromise the accuracy of subsequent model development. To address this, data imputation techniques were applied to estimate and fill in missing values. Accurate estimation of missing data is essential for optimizing the performance of ML models. In this study, three imputation techniques were evaluated: simple imputer (mean/median), k-nearest neighbors (kNN), and multivariate imputation by chained equations (MICE). The suitability of each algorithm for the dataset was compared to identify the most effective approach.

The simple imputer (mean/median) method is one of the most widely used missing data imputation methods121. It is a univariate technique that replaces missing values with a descriptive statistic—either the mean or median—calculated from the non-missing values within the same feature. However, unlike the simple imputer, both kNN and MICE are multivariate imputations that infer missing values by considering correlations with other features. The kNN algorithm is a non-parametric method that predicts the missing values of samples using the k neighboring samples. It identifies these neighbors by calculating the Euclidean distances of complete features. The missing feature is then estimated using the weighted average of the selected neighbors122.

MICE, on the other hand, is a multiple imputation method that replaces a missing value with multiple plausible estimates derived from regression models. It assumes that missing values depend on the observed features123. The general steps are presented in Algorithm 1.

Figure 3 illustrates the probability distribution function of the dataset imputed using the three candidate algorithms. Since the median and mean methods simply replace missing by a constant value, the density at the mean/ median point is particularly high. By contrast, the kNN and MICE imputers infer missing values by treating them as the dependent variables, resulting in imputed feature distributions that more closely resemble the original distributions compared to the simple imputers.

ML algorithms

After prepossessing the dataset, a predictive ML model for LC3 concrete performance was developed. Given the availability of various ML algorithms, it is crucial to select those best suited to the size, structure, and nature of the dataset to ensure model robustness and accuracy. Since the dataset is in tabular form, decision tree-based ensemble learning is among the most widely used approaches124. These models strengthen prediction accuracy by combining multiple weak learners (decision trees) rather than relying on a single decision tree125. Among the decision tree-based ensemble learning algorithms, Gradient boost decision trees (GBDT) is one of the most classical algorithms proposed by Jerome126. Based on GBDT, several advanced algorithms have been developed, among which extreme gradient boost (XGBoost) and light gradient boost machines (LightGBM) are the most popular127,128,129.

Deep learning algorithms such as Artificial Neural Networks and Convolutional Neural Networks have been widely used in recent years. However, multiple benchmark studies have demonstrated that for structured/tabular datasets, deep learning models often underperform compared to gradient-boosted tree ensembles130,131,132. Additionally, neural networks typically require larger datasets, longer training times, and complex hyperparameter tuning, which limits their practicality in this study with a moderate dataset size. For this reason, conventional deep learning methods are not selected as candidate algorithms.

However, other than Tree-based ensemble learning, Google published the deep learning algorithm that is designed for tabular datasets in 2021, called TabNet133. TabNet employs a multistep Neural Network structure inspired by the decision tree learning manifold. TabNet has performed better or equal prediction abilities compared with tree-based ensemble learning for some classical tabular datasets133,134. In this study, GBDT, XGBoost, LightGBM, and TabNet algorithms are selected as the candidate algorithms. In addition, the voting technique was adopted to develop an ensemble model that integrates the predictions of these four ML algorithms. A brief description of the algorithms is provided below.

Let the training dataset be denoted as \(D=({X}_{i}, {y}_{i}| i =\text{1,2},...,n)\),where \({X}_{i}\) is the feature vectors of the ith samples, and \({y}_{i}\) is the target value of the ith sample. \({f}_{t}\left(X\right), t =\text{1,2},...,T,\) is a set of decision trees that map X into a scalar. The GDBT then develops T decision trees in an additive manner. The prediction result of the kth iteration is shown in Eq. (1). The decision tree in the kth iteration is developed with the features and residual values of the last iteration, that is \(D=({X}_{i}, {{\widehat{y}}_{i}-y}_{i}| i =\text{1,2},...,n)\). The objective function of the decision trees is given by Eq. (2)135.

where \(l\) is a second order differentiable loss. \(w\) is the value of the leaves of decision trees, and \(\frac{{w}^{2}}{2}\) is the regularization term of \(f\) to avoid overfitting. \(\uplambda\) is a positive constant to control the impact of the regularization.

XGBoost and LightGBM are both variations of GBDT with some modifications and improvements136. XGBoost, proposed by Chen and Guestrin137 applies second-order approximation to improve the optimization speed of the objective function. Furthermore, it uses column blocks that enable parallel learning, improving computational efficiency and prediction accuracy. LightGBM further optimizes the algorithm for high computing speed138. In this method, Gradient-based One-Side Sampling and Exclusive Feature Bundling techniques are proposed to reduce the number of samples and features, making it especially suitable for large datasets.

TabNet is a deep learning algorithm that is composed of a series of sequential multi-step blocks. The results are processed by a fully connected layer to obtain the final output. In each step, feature data should be processed by operators of Mask, Feature Transformer, Split, and finally transformed by a ReLU function to determine the decision in this step. The Mask is a realization of the important function of TabNet to select features. It is a dynamic function that is obtained by inputting the processed data in the last step into the Attentive transformer, where features that have been selected in previous steps will not be selected or by a lower probability.

The voting technique, which is the meta-ensembles, is used to combine the results of multiple ML algorithms to output the final results. It calculates the average prediction of individual ML models as the final result139. In this study, the voting regressor is composed of GBDT, XGBoost, LightGBM, and TabNet.

Prediction model evaluation

Multiple regression models by different preprocessing and ML algorithms are developed in this research. To select the optimal algorithm combinations, and evaluate the prediction performances of the developed models, three frequently used regression matrices, R-squared (R2), mean absolute error (MAE), root mean squared error (RMSE), and A20-index are adopted as the prediction metrics. R2, also called the coefficient of determination, describes the proportions of variance in the dependent variable that is predictable from the regression model, as presented in Eq. (3). MAE reflects the mean level of the absolute errors, while RMSE emphasizes larger errors. The expressions of these two metrics are shown in Eq. (4) and Eq. (5). The A20-index evaluates the portion of samples that fit the prediction values with a deviation of ± 20%140, as shown in Eq. (6).

Explanatory analysis

In addition to compressive strength prediction, the ML model can also elucidate the strength development mechanism of LC3 materials. Therefore, SHAP analysis is applied in this research to interpret the developed model. SHAP is a method for explaining individual predictions using game theory and Shapley value141. From a game-theoretic perspective, each feature variable in a dataset is analogous to a player, and the prediction results of a machine-learning model are analogous to the gains from player cooperation. SHAP distributes Shapley values to features to evaluate their contributions to the results. SHAP develops a linear regression-formed explanatory model as shown in Eq. (6).

where \(g\left(z{\prime}\right)\) is the prediction value of one sample. \({\phi }_{0}\) is the base value of the prediction. \({z}_{j}{\prime}\) is a binary variable that refers to the presence of the jth feature, and \({\phi }_{j}\) is the contribution value of the jth feature.

Multi-objective optimization

Using the ML model, the compressive strength of LC3 cementitious materials can be predicted based on a given mix design. By maximizing the prediction results of the model, the optimal mix design parameters can be obtained. In practical applications, materials that satisfy multiple requirements—such as strength grade, cost, and environmental performance—are often preferred over those with the highest strength alone. Therefore, minimizing cost and carbon footprint while meeting the required strength is a primary goal for stakeholders. To address this, this study implements multi-objective optimization (MOO) to refine LC3 mixtures while simultaneously considering strength, cost, and embodied carbon.

Conventional programming methods struggle to solve non-convex optimization problems with multiple objectives. Instead, genetic algorithms (GA) are often employed to approximate optimal solutions. For example, Shafaie and Movahedi Rad132 adopted GA to calibrate the simulation model of colored self-compacting concrete. Non-dominated Sorting Genetic Algorithm-II (NSGA-II), a popular heuristic algorithm, is used in this research to solve MOO problems. NSGA-II, proposed by Deb, Pratap, Agarwal and Meyarivan142, follows the procedures shown in Fig. 4. In the first step, the NSGA-II algorithm generates a set of population solutions randomly. Each individual within the population is then evaluated with respect to the specified objectives. Following this, a selection operator is employed to choose parent solutions from the population, with individuals possessing higher conformity to objectives having a greater likelihood of being selected. Recombination is implemented on these parents to produce offspring solutions by the crossover operator. To ensure the diversity of the population, the mutation operator is applied, modifying one or more decision variables of the offspring solutions. The process continues until the termination criterion is met. Then the optimal individuals from the final generation are identified as the optimal solutions.

Generally, there is a certain contradiction between the different objectives of the MOO problem. Therefore, a MOO problem usually results in a Pareto front with multiple dominant solutions, rather than a single optimal solution, as shown in Fig. 5. In practical applications, one recommended solution is often selected from the Pareto frontier using additional criteria. If one objective is significantly prioritized over others, the anchor point, which maximizes or minimizes the prioritized objective, can be chosen. If all objectives are equally weighted, a Utopia point can be identified by finding the intersection of the maximum and minimum values of the objective functions. The solution with the minimum Euclidean distance to the Utopia point is selected as the balanced optimal solution143.

Experimental validation

To validate the practical feasibility of the MOO results, selected mix designs were experimentally prepared and tested. Four optimized LC3 concrete formulations were chosen from the Pareto front, each representing a balanced trade-off among compressive strength, embodied CO2 emissions, and material cost.

The concrete mixtures were prepared in a concrete drum mixer. After the mixing was complete, fresh mixture was immediately cast into 100 mm cube molds for concrete and 50mm cube molds for mortar. 5 samples were prepared from each group for the evaluation of compressive strength in 28 days. All specimens were prepared by filling the molds from the top in 2 lifts. After being filled up to about 90% of the mold height, the molds were moved onto a vibration table for consolidation. The molds were then topped up and levelled off while being compacted. All samples were demolded after 24 h and cured under water at room temperature (28 ± 2 ℃) until 28 days. Compressive strength was measured in accordance with ASTM C109144.

Model development and results analysis

Data screening and feature selection

Data screening and feature selection are prerequisites for robust developing ML models. These steps aim to eliminate irrelevant or redundant features, reduce the dimensionality of the dataset, improve model accuracy, and minimize computation time. A simplified model that includes only the most relevant features enhances interpretability and provides insights into the data generation process. This research examines feature correlations, addresses missing values, and identifies outliers, as shown in Figs. 6 and 7. In this study, if two features exhibited a Pearson correlation coefficient greater than 0.8, one of them was removed to mitigate multicollinearity. Furthermore, features with a missing rate exceeding 50% were excluded to avoid excessive imputation and potential information noise.

Figure 6a indicates that all features exhibit some degree of positive or negative correlation with the target variables, with no irrelevant features identified. In line with cement and concrete chemistry, the water-to-cement/binder ratio (W/C(B)) demonstrates a significant negative correlation with the 28-day compressive strength. However, other features do not display strong linear relationships with compressive strength, reflecting the complex, nonlinear nature of concrete strength development, which is affected by multiple variables. Outliers, such as those observed in the L(D50), are also identified and removed from the dataset to improve the data quality.

Figure 6b presents the Pearson correlation coefficient heatmap for all features. High absolute correlation values indicate strong correlations between input features, which results in multicollinearity. The multicollinearity between input features can distort parameter estimation and impair the predictive performance of ML models145. It is observed that the feature pairs “C—CC”, “Type—A/P”, and “RH—L(D50)” have significant correlations (> 0.8 or < − 0.8) with each other, and for each pair, one variable needs to be removed. For the "C—CC" group, we removed C because keeping CC allows us to more directly reveal the impact of CC on strength in subsequent analyses. The variables to be removed in the other two groups were determined based on subsequent data screening.

The issue of missing value is addressed during feature selection, as illustrated in Fig. 7. This figure represents a visualization of the dataset matrix, where the white color of the columns indicates missing data. 11 out of 19 features contain missing values. Features with more than 50% missing values were excluded to prevent introducing bias or instability into the model. Consequently, CTemp and L(D50) are removed due to the high degree of missingness. Additionally, the completeness of the feature A/P is lower than that of Type, leading to the removal of A/P. In summary, four features—C, A/P, CTemp, and L(D50)—are excluded following data screening and feature selection. The remaining 15 features are retained for developing the ML model, ensuring both data quality and relevance.

Combined algorithm selection and hyperparameter tuning (CASH)

To ensure model generalization, the prepared dataset is partitioned into two subsets: the training set (80%) and the test set (20%). The training set is used to train ML models, while the test set evaluates the effectiveness of predictive models. To develop a high-performance model, CASH is implemented. This approach selects the optimal imputer and regression algorithm combination along with hyperparameter tuning using Bayesian optimization. The candidate algorithms, corresponding hyperparameters, and their respective search spaces are listed in Table 4. Since each model is formed by pairing one imputation algorithm with one regression algorithm, and XGBoost and LightGBM are capable of handling missing inputs directly, this study considers 18 candidate prediction models.

During the CASH process, fivefold cross validation is adopted to avoid overfitting and ensure the robustness of the developed models. \({R}^{2}\) is selected as the primary metric for hyperparameter selection. For each candidate model, a 100-iteration hyperparameter tuning process is implemented. The convergence curves for candidate algorithm combinations are plotted in Fig. 8. The curves demonstrate that the evaluation metrics have reached or approached convergence, indicating that the optimal hyperparameter settings have been successfully achieved.

Prediction results

The evaluation metrics for the fivefold cross validation, R2, MAE, RMSE and A20-index of candidate models are presented in Table 5. The reported values represent the mean (± standard deviation) obtained from 100 replications with different random seeds to ensure robustness and reliability. The results indicate that MICE-LightGBM combination achieved the highest mean R2 value 0.931 (± 0.006), followed closely by MICE-LightGBM with a mean R2 of MICE-XGBoost0.930 (± 0.007) for fivefold validation. The evaluation metrics for the testing set are basically consistent with those from the cross-validation, demonstrating that MICE-XGBoost possess the highest R2 (0.928 (± 0.009)), the lowest MAE (3.996 (± 0.165)) the lowest RMSE (5.366 (± 0.208)), and the highest A20-index (0.912 (± 0.013)). In the context of compressive strength prediction, R2 values above 0.90 are generally considered indicative of excellent model fit, while MAE values below 5 MPa and RMSE values below 6 MPa reflect acceptable prediction errors for engineering applications. MICE-XGBoost performed slightly better than MICE-LightGBM on the testing set, which may be attributed to its stronger capability in preventing overfitting.

To further compare the prediction performances and validate the reliability of developed ML models, the Taylor diagram showing standard deviations of the predicted results on the testing test of all candidate models and their correlation coefficient with the actual values is presented in Fig. 9. The standard deviation of actual values for the testing set is 20.6 MPa, which is plotted as a reference in the diagram. The Taylor diagram also shows consistent results with the evaluation metrics that MICE-XGBoost outperforms other candidate models, with the closest standard deviations with the actual results and the highest correlation coefficient. MICE-XGBoost was selected as the primary model for subsequent optimization due to its slightly better performance and lower variability on the testing set. It is important to note that MICE-LightGBM also exhibited strong and competitive performance. Given the small differences between the two models, and considering that model behavior may vary with different datasets or problem settings, MICE-LightGBM remains a viable alternative in practical applications.

MICE stands out among all the imputers, achieving the optimal results when paired with prediction algorithms. As illustrated in Fig. 10, bar charts comparing the R2 and MAE values across different imputers clearly show that MICE consistently leads to better predictive performance. This can be attributed to the implicit relationships between input features, such as those related to calcined clay characteristics: Calcined Clay Size (D50), Kaolinite Content of Raw Clay, SiO2 Content of Calcined Clay, Al2O3 Content of Calcined Clay. The MICE imputer captures their implicit relationships to some extent, resulting in more reasonable imputed values. Additionally, the XGBoost model performed better when handling missing data directly, as shown by its superior performance compared to Simple-XGBoost and KNN-XGBoost. This suggests that while XGBoost is inherently capable of managing missing values, pairing it with inappropriate imputation methods can reduce its performance.

The prediction results of MICE-XGBoost and MICE-LightGBM on the test set are illustrated in Fig. 10. The observed and predicted strength values closely align with the 1:1 line, confirming the effectiveness of the developed models. These ensemble learning models precisely predict the compressive strength of LC3, particularly the 28-day strength. With this model, the industry can quickly estimate the strength of a newly designed LC3 concrete mixture without labor-intensive experiments.

However, for high-strength LC3 samples (strengths exceeding 70 MPa), the prediction errors are slightly larger than those for samples with ordinary strength. The larger distances of high-strength samples from the 1:1 line, as shown in Fig. 11, suggest that the smaller number of data points for high-strength concrete limits the model’s ability to learn the underlying strength development rules for high-strength LC3 cementitious materials. This also implies significant differences in strength development between high-strength cementitious materials and normal-strength ones. Consequently, prediction errors for high-strength specimens increase the MAE and RMSE values of the models. For typical strength ranges (30 MPa–60 MPa), the prediction errors are smaller, demonstrating the model’s reliability for predicting common strength levels. These results suggest that the developed ML models are highly effective for scenarios requiring rapid predictions, such as initial mix proportion screening.

It should be noted that, despite the high predictive accuracy, the ML models were developed and validated on a dataset of moderate size. As a result, their generalizability to LC3 mixtures outside the current dataset may be limited. The current dataset does not fully represent the diversity of mix designs, especially for high-strength or non-standard LC3 compositions, which may affect the robustness of the models in unseen contexts.

SHAP analysis

Figure 12 presents the SHAP values for each input variable used in predicting compressive strength, highlighting the relative impact of each variable on the predictions made by the MICE-XGBoost model. Figure 12a presents the ranks of the contributions to the prediction values for input features. Figure 12b shows the scatter plot of SHAP values versus feature values for each feature. The SHAP values of these dots suggest their contributions to the output compared with the baseline value, where the sign refers to the positive or negative impact and the absolute value shows the extent of the impact. The color-coding scheme reflects the magnitude of the input values, where red dots represent higher input values, and blue indicates lower values. The SHAP analysis results show good alignment with findings in other literatures, which verifies the validity of the results, and it also provides valuable references for future optimization. The detailed results are presented below.

W/C(B) ratio and kaolinite content are the most critical variable that determines the final compressive strength of LC3 specimens, as shown in Fig. 12a. The observation for the W/C(B) ratio not only conforms to established rules of mix design among ordinary cementitious materials but also aligns with fundamental principles of cement and concrete chemistry, where a lower water/binder ratio contributes to a denser microstructure, resulting in higher compressive strength146. Similar evidence also aids in explaining why cement type matters in LC3 mixture. The second important variable is the kaolinite content in raw clay (K_RC), which shows a positive relationship with compressive strength. This finding is consistent with conclusions in typical LC3 recipes2,147. Specifically, previous literature showed that kaolinite is the reactant for generating C-A-S–H, which is the main source of strength development in the LC3 system. Besides, it is noteworthy that the dosage of limestone (L) is also a negative variable, since a high limestone content in cement may increase the porosity and degrade the performance148. Also, the dosage of calcined clay (CC) shows a negative impact, where larger calcined clay substitution negatively affects the workability and final compressive strength.

The impacts of mixture features on the compressive strength vary by the ranges, according to Fig. 12b. For W/C(B) ratio, the slop is steep when the parameter is between 0.2 and 0.5. indicating that adjusting the W/C(B) ratio within this range can have a significant impact on the compressive strength. There are few points with a W/C(B) ratio less than 0.2, meaning that our collected mix designs rarely have a W/C(B) ratio below 0.2. After the W/C(B) ratio exceeds 0.5, the SHAP values decrease at a slower rate, indicating that increasing the W/C(B) ratio within a reasonable range will not result in a significant reduction in strength. In contrast, the plot of calcine clay (CC) in Fig. 12b presents a negative correlation, and the slop turns to steeper when that value is larger than 0.3. This suggests that when calcined clay constitutes more than 30% of the cementitious materials, further increases result in a substantial reduction in compressive strength. These findings provide actionable insights for optimizing LC3 mix designs while maintaining strength performance.

Figure 13 presents three SHAP force plots that provide localized explanations of how the ML model arrives at specific predictions for the compressive strength of LC3. In each plot, the base value (center point) represents the average model prediction across the dataset. The predicted value is located at the intersection of the cumulative red and blue arrows, reflecting the individualized output for a given data point149.

As shown in Fig. 13a, b and c, corresponding to predicted strengths of 40.53 MPa and 41.98 MPa and 51.42 MPa, it can be observed that W/C(B), Sand-to-Binder Ratio, and Cement type play dominant roles in shaping the predictions. Other variables also have measurable impacts, but to a lesser degree. These visualizations help demystify the internal reasoning of the model and offer clear insight into the material variables driving each prediction.

Multi-objective optimization

Optimization problem formulation

In the previous section, we developed reliable ML models to estimate the 28-day compressive strength of LC3. However, the industry’s demand is not for the highest concrete strength but to reduce the cost and carbon footprint as much as possible while meeting the strength requirements. Similar to the 28-day compressive strength, the costs and embodied carbon of LC3 are also controlled by mix design, with the cost and embodied carbon coefficients of each raw material shown in Table 3. Figure 11 presents the scatter plot of cost vs embodied CO2 emissions of concrete-type specimens in the LC3 dataset, with the color representing the strength. Although it is generally expected that higher-strength concrete would have higher costs and environmental impacts, in Fig. 14, some low-strength specimens exhibit higher cost and embodied carbon compared to high-strength ones. This is because the mix portions of specimens in the dataset are mostly not properly designed. It also indicates that there is large space for optimizing LC3 mix proportions to reduce cost and embodied carbon while ensuring strength. Additionally, although a certain positive correlation between cost and embodied carbon can be observed, it is not a significantly strong correlation, where the correlation coefficient between the two for the concrete specimens is 0.51. This implies that the two objectives, cost and embodied carbon, are somewhat antagonistic, so it is necessary to set them as separate optimization targets and explore the trade-offs between them.

Focusing on the concrete LC3, we designed 4 MOO problems to investigate the potential optimal parameters of LC3 and the optimal mix design to minimize the cost and embodied carbon simultaneously. The objectives, variables, and constraints of the MOO problems are summarized in Table 5. The objectives of all MOO problems are min(Cost), and min(EC). The controlled variables of all optimization problems are the same, including W/C(B) ratio, Cement type, Dosage of CC, Dosage of limestone, Dosage of gypsum, Sand/ binder mass ratio, sand/ aggregate mass ratio, SiO2 content of calcined clay, Al2O3 content of calcined clay. For other features, the median values are used as the default.

The constraints of the 4 MOO problems vary. A constraint of 28-day compressive strength is set for each problem to be larger than 30 MPa, 40 MPa, 50, MPa, and 60 MPa, respectively, which are considered as the commonly used concrete strength. Several general constraints are established to make the optimization problem accord with reality. Firstly, a rationale range is set for the W/C(B) ratio, as shown in Eq. (7). The dosages of cement, calcined clay, limestone, and gypsum should not be negative, and the sum of the portions of these four materials must equal 1. These constraints are presented in Eqs. (8) and (9). The minimum and maximum values of the sand/ binder mass ratio, sand/ aggregate mass ratio for concrete samples, and SiO2 content and Al2O3 content of calcined clay for all samples in the dataset are set as the ranges for these four variables, as shown in Eqs. (10–13). The sum of SiO2 content and Al2O3 content of calcined clay is less than 1, as seen in Eq. (14). The settings of the four optimization problems are presented in Table 6.

Optimization results and analysis

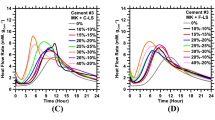

4 sets of MOO experiments were implemented, as described in the previous section. focusing on specific concrete strength levels to identify the optimal mix designs that achieve both the lowest cost and lowest embodied carbon. The Pareto fronts for each optimization experiment are illustrated in Fig. 15, and a summary of suggested mix designs under various criteria, derived from the local optimizations, is presented in Table 7. Detailed descriptions of the MOO results are outlined below.

Due to the conflict between the objectives of reducing cost and reducing EC, the results from our four sets of MOO experiments do not represent four optimal solutions but rather four sets of Pareto solutions. The resulting Pareto front, along with the specimens from the collected LC3 dataset with the relatively dominant cost and EC, are shown in Fig. 15. It can be observed that, although there are some differences between the four Pareto fronts, their cost, and EC dominate all the specimens in the dataset. The dominant point of specimens in the dataset is located at (75.82, 178.52), with a strength of 64 MPa, which can be considered as the best cost and EC of LC3 concrete with the strength requirement of 60 MPa suggested by the experiments. By comparing this specimen with the utopia point (65.92, 152.04) of the Pareto front for 60 MPa, the MOO decreases the cost by 13.06%, and the embodied carbon by 14.83% simultaneously, which is significant.

Observing the Pareto front of each optimization, the patterns for 30 MPa, 40 MPa, and 50 MPa are very similar: EC initially decreases rapidly, while cost increases slowly. However, after reaching a certain inflection point, the rate of decrease in EC slows down, while the rate of increase in cost accelerates. This provides valuable insights for the industry regarding LC3 mix design decision-making: when the EC of a designed LC3 mix is greater than the EC at the corresponding Pareto front’s inflection point, the cost required to reduce EC is relatively small. However, if the EC is already lower than the inflection point, further reductions in EC will require higher costs. Conversely, when the cost of the designed LC3 mix is greater than the cost at the corresponding Pareto front’s inflection point, the increase in EC resulting from cost reduction is relatively small. If the cost is already lower than the inflection point, further cost reduction may lead to a significant increase in EC. However, for 60 MPa, the curve becomes steeper, suggesting that reducing material cost becomes increasingly challenging as strength requirements reach higher grades.

To facilitate industrial applications, Table 7 details the optimal mix designs for specific strength grades based on the optimization results. These results provide quantitative support for the application potential of LC3 in real construction scenarios, offering practical guidelines for achieving cost-effective and environmentally friendly mix designs while meeting strength requirements.

Optimization results are inherently dependent on the underlying prediction model and the selected parameter space. While the NSGA-II algorithm used in this study is a classical and well-established optimizer, the solutions are still sensitive to model uncertainty and dataset limitations. A broader dataset could yield more diverse and robust Pareto solutions.

To validate the optimization results, four optimized LC3 mix designs generated from the multi-objective optimization with the balance strategy (targeting compressive strength of 30, 40, 50 and 60 MPa, respectively), and prepared LC3 concrete specimens following the same procedures outlined in our experimental section. The measured 28-day compressive strength values were for the four levels are 28.1 ± 3.4 MPa, 41.5± 2.6MPa, 54.3 ± 1.4 MPa, 59.4 ± 3.1 MPa, as shown in Fig. 16. It is found to be in close agreement with the predicted results (relative error within ± 10%), thereby confirming the practical feasibility and reliability of the optimized mix formulations.

Graphical user interface (GUI)

As highlighted in the introduction, accurately predicting the properties of LC3 concrete is essential for its practical application and broader acceptance in the construction industry. While the machine learning models developed in this study (e.g., MICE-XGBoost and ensemble models) achieved high accuracy, their adoption by engineering practitioners can be limited due to the complexity of algorithm implementation and the lack of user-friendly tools.

To address this, a graphical user interface (GUI) has been developed using Python to provide an accessible tool for practitioners. The interface enables users to input key LC3 mix parameters, including water-to-binder ratio, calcined clay content, kaolinite percentage, cement type, particle size, and others. For better usability and consistency with engineering practice, some feature units were converted to more commonly used forms. For example, dosages are expressed in kg/m3. Corresponding units are clearly labeled next to each input field. Upon clicking the “Predict” button, the GUI instantly returns the predicted 28-day compressive strength, estimated material cost, and embodied carbon emissions. An example of the interface is shown in Fig. 17.

This GUI bridges the gap between advanced machine learning models and practical engineering workflows, allowing civil engineers and stakeholders to make informed decisions without requiring in-depth knowledge of data science. It also demonstrates the translational potential of ML applications in sustainable construction material design.

Conclusion

This study proposed an integrated framework combining machine learning (ML) prediction and multi-objective optimization (MOO) for LC3 concrete mix design, aiming to balance mechanical performance, cost, and environmental impact. The key findings and contributions are summarized as follows:

-

Among all tested models, MICE-XGBoost achieved the best performance with R2 = 0.928 (± 0.009), MAE = 3.996 (± 0.165) MPa, RMSE = 5.366 (± 0.208) MPa, and A20-index = 0.912 (± 0.013) on the test set.

-

Key influencing factors for 28-day strength include: water-to-cementitious ratio, kaolinite content in raw clay, sand-to-binder ratio, cement type, and particle size of calcined clay.

-

High calcined clay content (> 30%) was associated with notable strength reductions, emphasizing the need for optimal proportioning.

-

The Pareto fronts revealed cost–carbon inflection points for low-to-medium grade LC3 mixes, supporting rational trade-off decisions.

-

A table of recommended mix designs was developed for practical implementation based on the optimized results.

Despite the promising results, this study acknowledges that current LC3 datasets remain limited, particularly in comparison to other low-carbon cementitious materials. Most existing research still focuses on chemical mechanisms and early-age hydration, with relatively fewer high-quality datasets addressing mechanical properties and sustainability indicators. Future efforts should focus on expanding the dataset diversity across multiple sources and conditions, which will further enhance the generalizability and long-term utility of data-driven LC3 design frameworks.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Z. Franco, L.S. Karen, The key role of limestone calcined clay cements on the roadmap towards eco-efficient cement and concrete, ACI Symposium Publication 361.

Scrivener, K., Martirena, F., Bishnoi, S. & Maity, S. Calcined clay limestone cements (LC3). Cem. Concr. Res. 114, 49–56 (2018).

Scrivener, K. L., John, V. M. & Gartner, E. M. Eco-efficient cements: Potential economically viable solutions for a low-CO2 cement-based materials industry. Cem. Concr. Res. 114, 2–26 (2018).

Dhandapani, Y., Sakthivel, T., Santhanam, M., Gettu, R. & Pillai, R. G. Mechanical properties and durability performance of concretes with limestone calcined clay cement (LC3). Cem. Concr. Res. 107, 136–151 (2018).

Snellings, R., Suraneni, P. & Skibsted, J. Future and emerging supplementary cementitious materials. Cement Concr. Res. 171, 107199 (2023).

Juenger, M. C. G., Snellings, R. & Bernal, S. A. Supplementary cementitious materials: New sources, characterization, and performance insights. Cem. Concr. Res. 122, 257–273 (2019).

Scrivener, K. L., Matschei, T., Georget, F., Juilland, P. & Mohamed, A. K. Advances in hydration and thermodynamics of cementitious systems. Cement Concr. Res. 174, 107332. https://doi.org/10.1016/j.cemconres.2023.107332 (2023).

Liang, T., Chen, L., Huang, Z., Zhong, Y. & Zhang, Y. Ultra-lightweight low-carbon LC3 cement composites: Uniaxial mechanical behaviour and constitutive models. Constr. Build. Mater. 404, 133173 (2023).

Young, B. A., Hall, A., Pilon, L., Gupta, P. & Sant, G. Can the compressive strength of concrete be estimated from knowledge of the mixture proportions?: New insights from statistical analysis and machine learning methods. Cem. Concr. Res. 115, 379–388 (2019).

Chaabene, W. B., Flah, M. & Nehdi, M. L. Machine learning prediction of mechanical properties of concrete: Critical review. Constr. Build. Mater. 260, 119889 (2020).

Zhang, X., Chen, H., Sun, J. & Zhang, X. Predictive models of embodied carbon emissions in building design phases: Machine learning approaches based on residential buildings in China. Build. Environ. 258, 111595. https://doi.org/10.1016/j.buildenv.2024.111595 (2024).

Luo, X., Zhang, Y. & Song, Z. Analysis of carbon emission characteristics and establishment of prediction models for residential and office buildings in China. Build. Environ. 267, 112208. https://doi.org/10.1016/j.buildenv.2024.112208 (2025).

Shafaie, V., Ghodousian, O., Ghodousian, A., Cucuzza, R. & Rad, M. M. Integrating push-out test validation and fuzzy logic for bond strength study of fiber-reinforced self-compacting concrete. Constr. Build. Mater. 425, 136062 (2024).

El Khessaimi, Y. et al. Machine learning-based prediction of compressive strength for limestone calcined clay cements. J. Build. Eng. 76, 107062 (2023).

Han, T. et al. On the prediction of the mechanical properties of limestone calcined clay cement: a random forest approach tailored to cement chemistry. Minerals 13(10), 1261. https://doi.org/10.3390/min13101261 (2023).

Gao, Y., Wang, J. Y. & Xu, X. X. Machine learning in construction and demolition waste management: Progress, challenges, and future directions. Autom. Constr. 162, 105380 (2024).

Jaf, D. K. I. et al. Machine learning techniques and multi-scale models to evaluate the impact of silicon dioxide (SiO2) and calcium oxide (CaO) in fly ash on the compressive strength of green concrete. Constr. Build. Mater. 400, 132604 (2023).

Chao, Z. M., Li, Z. K., Dong, Y. K., Shi, D. D. & Zheng, J. H. Estimating compressive strength of coral sand aggregate concrete in marine environment by combining physical experiments and machine learning-based techniques. Ocean Eng. 308, 118320 (2024).

Asteris, P. G., Koopialipoor, M., Armaghani, D. J., Kotsonis, E. A. & Lourenço, P. B. Prediction of cement-based mortars compressive strength using machine learning techniques. Neural Comput. Appl. 33(19), 13089–13121 (2021).

Feng, D. C. et al. Machine learning-based compressive strength prediction for concrete: An adaptive boosting approach. Constr. Build. Mater. 230, 117000 (2020).

Alabdullah, A. A. et al. Prediction of rapid chloride penetration resistance of metakaolin based high strength concrete using light GBM and XGBoost models by incorporating SHAP analysis. Constr. Build. Mater. 345, 128296 (2022).

Alyami, M. et al. Estimating compressive strength of concrete containing rice husk ash using interpretable machine learning-based models. Case Stud. Constr. Mater. 20, e02901 (2024).

Farooq, F. et al. A comparative study of random forest and genetic engineering programming for the prediction of compressive strength of high strength concrete (HSC). Appl. Sci.-Basel 10(20), 7330 (2020).

Yaseen, Z. M. et al. Predicting compressive strength of lightweight foamed concrete using extreme learning machine model. Adv. Eng. Softw. 115, 112–125 (2018).

Aslam, F. et al. Applications of gene expression programming for estimating compressive strength of high-strength concrete. Adv. Civil Eng. 1, 8850535 (2020).

Parhi, S. K. & Patro, S. K. Prediction of compressive strength of geopolymer concrete using a hybrid ensemble of grey wolf optimized machine learning estimators. J. Build. Eng. 71, 106521 (2023).

Alyaseen, A. et al. Assessing the compressive and splitting tensile strength of self-compacting recycled coarse aggregate concrete using machine learning and statistical techniques. Mater. Today Commun. 38, 107970 (2024).

Tran, V., Dang, V. Q. & Ho, L. S. Evaluating compressive strength of concrete made with recycled concrete aggregates using machine learning approach. Constr. Build. Mater. 323, 126578 (2022).

Ekanayake, I. U., Meddage, D. P. P. & Rathnayake, U. A novel approach to explain the black-box nature of machine learning in compressive strength predictions of concrete using Shapley additive explanations (SHAP). Case Stud. Constr. Mater. 16, e01059 (2022).

Leo, E. S., Alexander, M. G. & Beushausen, H. Optimisation of mix proportions of LC3 binders with African clays, based on compressive strength of mortars, and associated hydration aspects. Cem. Concr. Res. 173, 107255 (2023).

Zunino, F., Alberto, F., Limestone calcined clay cements (LC3): raw material processing, sulfate balance and hydration kinetics, EPFL Thesis 8173 (2020).

Emmanuel, A. C., Haldar, P., Maity, S. & Bishnoi, S. Second pilot production of limestone calcined clay cement in India: the experience. Indian Concr. J 90(5), 57–63 (2016).

Dong, Y., Liu, Y. & Hu, C. Towards greener ultra-high performance concrete based on highly-efficient utilization of calcined clay and limestone powder. J. Build. Eng. 66, 105836 (2023).

Hay, R. & Celik, K. Performance enhancement and characterization of limestone calcined clay cement (LC3) produced with low-reactivity kaolinitic clay. Constr. Build. Mater. 392, 131831 (2023).

Hay, R. & Celik, K. Effects of water-to-binder ratios (w/b) and superplasticizer on physicochemical, microstructural, and mechanical evolution of limestone calcined clay cement (LC3). Constr. Build. Mater. 391, 131529 (2023).

Irassar, E. F. et al. Performance of composite portland cements with calcined illite clay and limestone filler produced by industrial intergrinding. Minerals 13(2), 240 (2023).

Kafodya, I. et al. Mechanical performance and physico-chemical properties of limestone calcined clay cement (LC3) in Malawi. Buildings 13(3), 740 (2023).

Li, X., Dengler, J. & Hesse, C. Reducing clinker factor in limestone calcined clay-slag cement using CSH seeding–A way towards sustainable binder. Cem. Concr. Res. 168, 107151 (2023).

Liu, Y., Zhang, Y., Li, L. & Ling, T.-C. Impact of limestone-metakaolin ratio on the performance and hydration of ternary blended cement. J. Mater. Civ. Eng. 35(4), 04023027 (2023).

Luan, C., Wang, J. & Zhou, Z. Synergic effect of triethanolamine and CSH seeding on early hydration of the limestone calcined clay cement in UHPC. Constr. Build. Mater. 400, 132675 (2023).

Redondo-Soto, C. et al. Limestone calcined clay binders based on a Belite-rich cement. Cem. Concr. Res. 163, 107018 (2023).

Sui, H. et al. Limestone calcined clay cement: mechanical properties, crystallography, and microstructure development. J. Sustain. Cement-Based Mater. 12(4), 427–440 (2023).

Wang, Y.-S., Oh, S., Ishak, S., Wang, X.-Y. & Lim, S. Recycled glass powder for enhanced sustainability of limestone calcined clay cement (LC3) mixtures: mechanical properties, hydration, and microstructural analysis. J. Market. Res. 27, 4012–4022 (2023).

Yu, T. et al. Calcined nanosized tubular halloysite for the preparation of limestone calcined clay cement (LC3). Appl. Clay Sci. 232, 106795 (2023).

Yu, T. et al. Optimization of mechanical performance of limestone calcined clay cement: Effects of calcination temperature of nanosized tubular halloysite, gypsum content, and water/binder ratio. Constr. Build. Mater. 389, 131709 (2023).

Zhang, G.-Y., Oh, S., Han, Y., Lin, R.-S. & Wang, X.-Y. Using perforated cenospheres to improve the engineering performance of limestone-calcined clay-cement (LC3) high performance concrete: Autogenous shrinkage, strength, and microstructure. Constr. Build. Mater. 406, 133467 (2023).

Zhang, M., Yang, L. & Wang, F. Influence of C-S–H seed and sodium sulfate on the hydration and strength of limestone calcined clay cement. J. Clean. Prod. 408, 137022 (2023).

Zhang, M., Yang, L. & Wang, F. Understanding the longer-term effects of C-S–H seeding materials on the performance of limestone calcined clay cement. Constr. Build. Mater. 392, 131829 (2023).

Zhou, W., Wu, S. & Chen, H. Early strength-promoting mechanism of inorganic salts on limestone-calcined clay cement. Sustainability 15(6), 5286 (2023).

da Silva, M. R., Neto, J. D., Walkley, B. & Kirchheim, A. P. Exploring sulfate optimization techniques in limestone calcined clay cements (LC3): Limitations and insights. Cement Concr. Res. 175, 107375 (2024).

Alvarez, G. L., Nazari, A., Bagheri, A., Sanjayan, J. G. & De Lange, C. Microstructure, electrical and mechanical properties of steel fibres reinforced cement mortars with partial metakaolin and limestone addition. Constr. Build. Mater. 135, 8–20 (2017).

Avet, F., Snellings, R., Diaz, A. A., Haha, M. B. & Scrivener, K. Development of a new rapid, relevant and reliable (R3) test method to evaluate the pozzolanic reactivity of calcined kaolinitic clays. Cem. Concr. Res. 85, 1–11 (2016).

Antoni, M., Rossen, J., Martirena, F. & Scrivener, K. Cement substitution by a combination of metakaolin and limestone. Cem. Concr. Res. 42(12), 1579–1589 (2012).

Dhandapani, Y. & Santhanam, M. Assessment of pore structure evolution in the limestone calcined clay cementitious system and its implications for performance. Cement Concr. Compos. 84, 36–47 (2017).

Haldar, P. K. et al. The effect of kaolinite content of china clay on the reactivity of limestone calcined clay cement. In Calcined clays for sustainable concrete (eds Martirena, F. et al.) 195–199 (Springer Netherlands, Dordrecht, 2018). https://doi.org/10.1007/978-94-024-1207-9_31.

Khan, M., Nguyen, Q., Castel, A., Carbonation of limestone calcined clay cement concrete, Calcined clays for sustainable concrete. In Proc. of the 2nd International Conference on Calcined Clays for Sustainable Concrete, Springer, 2018, pp. 238–243.

Lins, D., Rêgo, J., Silva, E., Analysis of the mixing performance containing the LC3 as agglomerant with different types of calcined clay, Calcined Clays for Sustainable Concrete. In Proc. of the 2nd International Conference on Calcined Clays for Sustainable Concrete, Springer, 2018, pp. 279–285.

Parashar, A., Shah, V., Bishnoi, S., Applicability of lime reactivity strength potential test for the reactivity study of limestone calcined clay cement, Calcined Clays for Sustainable Concrete. In Proc. of the 2nd International Conference on Calcined Clays for Sustainable Concrete, Springer, 2018, pp. 339–345.

Pérez, A., Favier, A., Scrivener, K., Martirena, F., Influence grinding procedure, limestone content and PSD of components on properties of clinker-calcined clay-limestone cements produced by intergrinding, Calcined Clays for Sustainable Concrete. In Proc. of the 2nd International Conference on Calcined Clays for Sustainable Concrete, Springer, 2018, pp. 358–365.

Almenares Reyes, R.S., Díaz, A.A., Rodríguez, S.B., Rodríguez, C.A.L., Hernández, J.F.M., Assessment of cuban kaolinitic clays as source of supplementary cementitious materials to production of cement based on clinker–calcined clay–limestone, calcined clays for sustainable concrete. In Proc. of the 2nd International Conference on Calcined Clays for Sustainable Concrete, Springer, 2018, pp. 21–28.

Tongbo, S., Wang, B., Cai, Y., Tang, S., Progress of limestone calcined clay cement in China, Calcined Clays for Sustainable Concrete. In Proc. of the 2nd International Conference on Calcined Clays for Sustainable Concrete, Springer, 2018, pp. 461–468.

Tironi, A., Scian, A. N. & Irassar, E. F. Blended cements with limestone filler and kaolinitic calcined clay: Filler and pozzolanic effects. J. Mater. Civ. Eng. 29(9), 04017116 (2017).

Yu, C., Yuan, P., Yu, X., Liu, J., Degradation of calcined clay-limestone cementitious composites under sulfate attack, Calcined Clays for Sustainable Concrete. In Proc. of the 2nd International Conference on Calcined Clays for Sustainable Concrete, Springer, 2018, pp. 110–116.

Gettu, R. et al. Sustainability-based decision support framework for choosing concrete mixture proportions. Mater. Struct. 51, 1–16 (2018).

Krishnan, S. & Bishnoi, S. Understanding the hydration of dolomite in cementitious systems with reactive aluminosilicates such as calcined clay. Cem. Concr. Res. 108, 116–128 (2018).

Krishnan, S., Kanaujia, S. K., Mithia, S. & Bishnoi, S. Hydration kinetics and mechanisms of carbonates from stone wastes in ternary blends with calcined clay. Constr. Build. Mater. 164, 265–274 (2018).

Nguyen, Q. D., Khan, M. S. H. & Castel, A. Engineering properties of limestone calcined clay concrete. J. Adv. Concr. Technol. 16(8), 343–357 (2018).

Shah, V. & Bishnoi, S. Carbonation resistance of cements containing supplementary cementitious materials and its relation to various parameters of concrete. Constr. Build. Mater. 178, 219–232 (2018).

Chen, L., Zheng, K., Xia, T. & Long, G. Mechanical property, sorptivity and microstructure of steam-cured concrete incorporated with the combination of metakaolin-limestone. Case Stud. Constr. Mater. 11, e00267 (2019).

Ferreiro, S., Canut, M., Lund, J. & Herfort, D. Influence of fineness of raw clay and calcination temperature on the performance of calcined clay-limestone blended cements. Appl. Clay Sci. 169, 81–90 (2019).

Krishnan, S., Emmanuel, A. C. & Bishnoi, S. Hydration and phase assemblage of ternary cements with calcined clay and limestone. Constr. Build. Mater. 222, 64–72 (2019).

Qinfei, L. et al. The microstructure and mechanical properties of cementitious materials comprised of limestone calcined clay and clinker. Ceram. Silikaty 63, 356–364 (2019).

Jafari, K. & Rajabipour, F. Performance of impure calcined clay as a pozzolan in concrete. Transp. Res. Rec. 2675(2), 98–107 (2021).

Shi, Z. et al. Sulfate resistance of calcined clay–Limestone–Portland cements. Cem. Concr. Res. 116, 238–251 (2019).

Tang, J. et al. Synergistic effect of metakaolin and limestone on the hydration properties of Portland cement. Constr. Build. Mater. 223, 177–184 (2019).

Akindahunsi, A. A., Avet, F. & Scrivener, K. The Influence of some calcined clays from Nigeria as clinker substitute in cementitious systems. Case Stud. Constr. Mater. 13, e00443 (2020).

Dhandapani, Y. & Santhanam, M. Investigation on the microstructure-related characteristics to elucidate performance of composite cement with limestone-calcined clay combination. Cem. Concr. Res. 129, 105959 (2020).

Du, H. & Dai Pang, S. High-performance concrete incorporating calcined kaolin clay and limestone as cement substitute. Constr. Build. Mater. 264, 120152 (2020).

Gu, Y.-C. et al. Immobilization of hazardous ferronickel slag treated using ternary limestone calcined clay cement. Constr. Build. Mater. 250, 118837 (2020).

Guo, F. Calcined clay and limestone as partial replacements of portland cement: Electrochemical corrosion behavior of low carbon steel rebar as concrete reinforcement in corrosive environment. Int. J. Electrochem. Sci. 15(12), 12281–12290 (2020).

Huang, Z.-Y. et al. Development of limestone calcined clay cement concrete in South China and its bond behavior with steel reinforcement. J. Zhejiang Univ.-SCIENCE A 21(11), 892–907 (2020).

Khan, M. S., Nguyen, Q. D. & Castel, A. Performance of limestone calcined clay blended cement-based concrete against carbonation. Adv. Cem. Res. 32(11), 481–491 (2020).

Krishnan, S., Gopala Rao, D., Bishnoi, S., Why low-grade calcined clays are the ideal for the production of limestone calcined clay cement (LC 3), calcined clays for sustainable concrete. In Proc. of the 3rd International Conference on Calcined Clays for Sustainable Concrete, Springer, 2020, pp. 125–130.

Marangu, J. M. Physico-chemical properties of Kenyan made calcined Clay-Limestone cement (LC3). Case Stud. Constr. Mater 12, e00333 (2020).

Mo, Z., Wang, R. & Gao, X. Hydration and mechanical properties of UHPC matrix containing limestone and different levels of metakaolin. Constr. Build. Mater. 256, 119454 (2020).

Nair, N., Haneefa, K. M., Santhanam, M. & Gettu, R. A study on fresh properties of limestone calcined clay blended cementitious systems. Constr. Build. Mater. 254, 119326 (2020).

Nguyen, Q. D., Khan, M. S. & Castel, A. Chloride diffusion in limestone flash calcined clay cement concrete. ACI Mater. J. 117(6), 165–175 (2020).

Nguyen, Q. D., Kim, T. & Castel, A. Mitigation of alkali-silica reaction by limestone calcined clay cement (LC3). Cem. Concr. Res. 137, 106176 (2020).

Parashar, A. & Bishnoi, S. A comparison of test methods to assess the strength potential of plain and blended supplementary cementitious materials. Constr. Build. Mater. 256, 119292 (2020).

Rodriguez, C. & Tobon, J. I. Influence of calcined clay/limestone, sulfate and clinker proportions on cement performance. Constr. Build. Mater. 251, 119050 (2020).

Argın, G. & Uzal, B. Enhancement of pozzolanic activity of calcined clays by limestone powder addition. Constr. Build. Mater. 284, 122789 (2021).

Bernal, I. M., Shirani, S., Cuesta, A., Santacruz, I. & Aranda, M. A. Phase and microstructure evolutions in LC3 binders by multi-technique approach including synchrotron microtomography. Constr. Build. Mater. 300, 124054 (2021).

Chen, Y. et al. 3D printing of calcined clay-limestone-based cementitious materials. Cem. Concr. Res. 149, 106553 (2021).

Costa, A. R. D. & Gonçalves, J. P. Accelerated carbonation of ternary cements containing waste materials. Constr. Build. Mater. 302, 124159 (2021).

da Silva, M. R. C., Malacarne, C. S., Longhi, M. A. & Kirchheim, A. P. Valorization of kaolin mining waste from the Amazon region (Brazil) for the low-carbon cement production. Case Stud. Constr. Mater. 15, e00756 (2021).

Dixit, A., Du, H., Dang, J. & Dai Pang, S. Quaternary blended limestone-calcined clay cement concrete incorporating fly ash. Cement Concr. Compos. 123, 104174 (2021).

Dixit, A., Du, H. & Dai Pang, S. Performance of mortar incorporating calcined marine clays with varying kaolinite content. J. Clean. Prod. 282, 124513 (2021).

Huang, H., Li, X., Avet, F., Hanpongpun, W. & Scrivener, K. Strength-promoting mechanism of alkanolamines on limestone-calcined clay cement and the role of sulfate. Cem. Concr. Res. 147, 106527 (2021).

Krishnan, S., Singh, A. & Bishnoi, S. Impact of alkali salts on the hydration of ordinary portland cement and limestone–calcined clay cement. J. Mater. Civ. Eng. 33(9), 04021223 (2021).

Li, R. & Ye, H. Influence of alkalis on natural carbonation of limestone calcined clay cement pastes. Sustainability 13(22), 12833 (2021).

Lin, R.-S., Han, Y. & Wang, X.-Y. Macro–meso–micro experimental studies of calcined clay limestone cement (LC3) paste subjected to elevated temperature. Cement Concr. Compos. 116, 103871 (2021).

Lin, R.-S., Lee, H.-S., Han, Y. & Wang, X.-Y. Experimental studies on hydration–strength–durability of limestone-cement-calcined Hwangtoh clay ternary composite. Constr. Build. Mater. 269, 121290 (2021).

Long, W.-J., Lin, C., Tao, J.-L., Ye, T.-H. & Fang, Y. Printability and particle packing of 3D-printable limestone calcined clay cement composites. Constr. Build. Mater. 282, 122647 (2021).

Malacarne, C. et al. Influence of low-grade materials as clinker substitute on the rheological behavior, hydration and mechanical performance of ternary cements. Case Stud. Constr. Mater. 15, e00776 (2021).

Malacarne, C. S., da Silva, M. R. C., Danieli, S., Maciel, V. G. & Kirchheim, A. P. Environmental and technical assessment to support sustainable strategies for limestone calcined clay cement production in Brazil. Constr. Build. Mater. 310, 125261 (2021).

Marangu, J. M. Effects of sulfuric acid attack on hydrated calcined clay–limestone cement mortars. J. Sustain Cement-Based Mater. 10(5), 257–271 (2021).

Mo, Z., Zhao, H., Jiang, L., Jiang, X. & Gao, X. Rehydration of ultra-high performance concrete matrix incorporating metakaolin under long-term water curing. Constr. Build. Mater. 306, 124875 (2021).

Rossetti, A., Ikumi, T., Segura, I. & Irassar, E. F. Sulfate performance of blended cements (limestone and illite calcined clay) exposed to aggressive environment after casting. Cem. Concr. Res. 147, 106495 (2021).

Sun, Y. et al. Development of a novel eco-efficient LC2 conceptual cement based ultra-high performance concrete (UHPC) incorporating limestone powder and calcined clay tailings: Design and performances. J. Clean. Prod. 315, 128236 (2021).

Shah, V. et al. Influence of cement replacement by limestone calcined clay pozzolan on the engineering properties of mortar and concrete. Adv. Cem. Res. 32(3), 101–111 (2020).

Vaasudevaa, B., Dhandapani, Y. & Santhanam, M. Performance evaluation of limestone-calcined clay (LC2) combination as a cement substitute in concrete systems subjected to short-term heat curing. Constr. Build. Mater. 302, 124121 (2021).

Yu, J., Wu, H.-L., Mishra, D. K., Li, G. & Leung, C. K. Compressive strength and environmental impact of sustainable blended cement with high-dosage limestone and calcined clay (LC2). J. Clean Prod. 278, 123616 (2021).

Zolfagharnasab, A., Ramezanianpour, A. A. & Bahman-Zadeh, F. Investigating the potential of low-grade calcined clays to produce durable LC3 binders against chloride ions attack. Constr. Build. Mater. 303, 124541 (2021).

Zunino, F. & Scrivener, K. The reaction between metakaolin and limestone and its effect in porosity refinement and mechanical properties. Cem. Concr. Res. 140, 106307 (2021).

Zunino, F. & Scrivener, K. Assessing the effect of alkanolamine grinding aids in limestone calcined clay cements hydration. Constr. Build. Mater. 266, 121293 (2021).

Yu, J., Mishra, D. K., Hu, C., Leung, C. K. & Shah, S. P. Mechanical, environmental and economic performance of sustainable Grade 45 concrete with ultrahigh-volume Limestone-Calcined Clay (LCC). Resour. Conserv. Recycl. 175, 105846 (2021).

Ruan, Y., Jamil, T., Hu, C., Gautam, B. P. & Yu, J. Microstructure and mechanical properties of sustainable cementitious materials with ultra-high substitution level of calcined clay and limestone powder. Constr. Build. Mater. 314, 125416 (2022).

G. Hammond, C. Jones, Inventory of carbon & energy: ICE, Sustainable energy research team, Department of Mechanical Engineering, 2008.

Gettu, R. et al. Influence of supplementary cementitious materials on the sustainability parameters of cements and concretes in the Indian context. Mater. Struct. 52, 1–11 (2019).

Müller, H. S., Haist, M. & Vogel, M. Assessment of the sustainability potential of concrete and concrete structures considering their environmental impact, performance and lifetime. Constr. Build. Mater. 67, 321–337 (2014).

Lin, W.-C. & Tsai, C.-F. Missing value imputation: a review and analysis of the literature (2006–2017). Artif. Intell. Rev. 53(2), 1487–1509 (2019).

Lalande, F. & Doya, K. Numerical data imputation: choose kNN over deep learning. In Similarity search and applications (eds Skopal, T. et al.) 3–10 (Springer International Publishing, 2022).

Khan, S. I. & Hoque, A. S. M. L. SICE: an improved missing data imputation technique. J. Big Data 7(1), 37 (2020).

Song, J., Wang, Y., Fang, Z., Peng, L. & Hong, H. Potential of ensemble learning to improve tree-based classifiers for landslide susceptibility mapping. IEEE J. Selected Topics Appl. Earth Obs. Remote Sens. 13, 4642–4662 (2020).

Chen, W. & Zhang, L. Building vulnerability assessment in seismic areas using ensemble learning: A Nepal case study. J. Clean. Prod. 350, 131418. https://doi.org/10.1016/j.jclepro.2022.131418 (2022).

Jerome, H. F. Greedy function approximation: A gradient boosting machine. Ann. Stat. 29(5), 1189–1232 (2001).

Qiao, P., Ni, J., Huang, R. & Cheng, Z. Prediction of instantaneous particle number for light-duty gasoline vehicles under real driving conditions based on ensemble learning. J. Clean. Prod. 434, 139859 (2024).

Tian, L., Feng, L., Yang, L. & Guo, Y. Stock price prediction based on LSTM and LightGBM hybrid model. J. Supercomput. 78(9), 11768–11793 (2022).

Tian, Y., Lai, Y. M., Pei, W. S., Qin, Z. P. & Li, H. W. Study on the physical mechanical properties and freeze-thaw resistance of artificial phase change aggregates. Constr. Build. Mater. 329, 12 (2022).

Borisov, V. et al. Deep neural networks and tabular data: a survey. IEEE Trans. Neural Netw. Learn. Syst. 35(6), 7499–7519 (2024).

Lyngdoh, G. A., Zaki, M., Krishnan, N. M. A. & Das, S. Prediction of concrete strengths enabled by missing data imputation and interpretable machine learning. Cement Concr. Compos. 128, 104414 (2022).

Shafaie, V. & Movahedi Rad, M. Multi-objective genetic algorithm calibration of colored self-compacting concrete using DEM: An integrated parallel approach. Sci. Rep. 14(1), 4126 (2024).

Arik S.Ö., Pfister, T., Tabnet: Attentive interpretable tabular learning, In Proc. of the AAAI conference on artificial intelligence, 2021, pp. 6679–6687.

Kanász, R., Drotár, P., Gnip, P. & Zoričák, M. Clash of titans on imbalanced data: TabNet vs XGBoost. IEEE Confer. Artif. Intell. (CAI) 2024, 320–325 (2024).

Zhang, Z. & Jung, C. GBDT-MO: gradient-boosted decision trees for multiple outputs. IEEE Trans. Neural Netw. Learn. Syst. 32(7), 3156–3167 (2021).

Yuan, X. et al. Applied machine learning for prediction of CO2 adsorption on biomass waste-derived porous carbons. Environ. Sci. Technol. 55(17), 11925–11936 (2021).

Chen, T., Guestrin, C., XGBoost: A scalable tree boosting system, In Proc. of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Association for Computing Machinery, San Francisco, California, USA, 2016, pp. 785–794.

Ke, G., Meng, Q., Finley, T., Wang, T., Chen, W., Ma, W., Ye, Q., Liu, T.-Y., LightGBM: a highly efficient gradient boosting decision tree, In Proc. of the 31st International Conference on Neural Information Processing Systems, Curran Associates Inc., Long Beach, California, USA, 2017, pp. 3149–3157.

Eyo, E. U. & Abbey, S. J. Machine learning regression and classification algorithms utilised for strength prediction of OPC/by-product materials improved soils. Constr. Build. Mater. 284, 122817 (2021).

Inqiad, W. B. et al. Utilizing contemporary machine learning techniques for determining soilcrete properties. Earth Sci. Inf. 18(1), 176 (2025).

Lundberg, S. M. et al. From local explanations to global understanding with explainable AI for trees. Nature Mach. Intell. 2(1), 56–67 (2020).

Deb, K., Pratap, A., Agarwal, S. & Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 6(2), 182–197 (2002).

Gunantara, N. A review of multi-objective optimization: Methods and its applications. Cogent Eng. 5(1), 1502242 (2018).

C. ASTM, Standard test method for slump of hydraulic-cement concrete, 2020.

Chan, J.Y.-L. et al. Mitigating the multicollinearity problem and its machine learning approach: a review. Mathematics 10(8), 1283 (2022).

A.M. Neville, Properties of concrete, Longman London1995.

Sharma, M., Bishnoi, S., Martirena, F. & Scrivener, K. Limestone calcined clay cement and concrete: A state-of-the-art review. Cement Concr. Res. 149, 106564 (2021).

Barbhuiya, S., Nepal, J. & Das, B. B. Properties, compatibility, environmental benefits and future directions of limestone calcined clay cement (LC3) concrete: A review. J. Build. Eng. 79, 107794 (2023).

Inqiad, W. B. et al. Soft computing models for prediction of bentonite plastic concrete strength. Sci. Rep. 14(1), 18145 (2024).

Acknowledgements

This work was supported by the Natural Science Foundation of Henan Province, China (No. 242300421429).

Author information

Authors and Affiliations

Contributions

Xi Chen and Weiyi Chen wrote the main manuscript text and prepared all figures. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions