Abstract

Background

The proportion of residual leukemic blasts after chemotherapy assessed by multiparameter flow cytometry, is an important prognostic factor for the risk of relapse and overall survival in acute myeloid leukemia (AML). This measurable residual disease (MRD) is used in clinical trials to stratify patients for more or less intensive consolidation therapy. However, an objective and reproducible analysis method to assess MRD status from flow cytometry data is lacking, yet is highly anticipated for broader implementation of MRD testing.

Methods

We propose a computational pipeline based on Gaussian mixture modeling that allows a fully automated assessment of MRD status while remaining completely interpretable for clinical diagnostic experts. Our pipeline requires limited training data, which makes it easily transferable to other medical centers and cytometry platforms.

Results

We identify all healthy and leukemic immature myeloid cells in with high concordance (Spearman’s Rho = 0.974) and classification performance (median F-score = 0.861) compared to manual analysis. Using control samples (n = 18), we calculate a computational MRD percentage with high concordance to expert gating (Spearman’s rho = 0.823) and predict MRD status in a cohort of 35 AML follow-up measurements with high accuracy (97%).

Conclusions

We demonstrate that our pipeline provides a powerful tool for fast (~3 s) and objective automated MRD assessment in AML.

Plain Language Summary

Cancer cells can be targeted with intensive chemotherapy in patients with acute myeloid leukemia (a type of blood cell cancer). However, disease can return after treatment due to the survival of cancer cells in the bone marrow. Identifying these cells is relevant to decide on future treatment options. However, this analysis is still performed manually by looking at a series of graphs to identify cancer and healthy cells. This process is labor-intensive, and results can differ based on the person performing the analysis. In this study, we demonstrate that this process can be automated using a computer algorithm (calculations), cutting the analysis time down from thirty minutes to three seconds. We anticipate that this can improve the accessibility and accuracy of diagnosing acute myeloid leukemia.

Similar content being viewed by others

Introduction

Measurable residual disease (MRD), defined as the proportion of residual leukemic blasts after (chemo)therapy, is a critical biomarker for relapse-free and overall survival in acute myeloid leukemia (AML)1. A recommended technique for MRD assessment in AML is multiparameter flow cytometry, which uses fluorescent-labeled monoclonal antibodies to determine intra- and extracellular expression of proteins of individual cells2. Flow cytometry allows for the identification and monitoring of immature myeloid cells (blasts) with characteristic leukemia-associated phenotypes (LAIPs), protein expression patterns absent or present in very low frequencies in healthy or regenerating bone marrow3. Currently, experts manually identify these leukemic blasts through hierarchical bivariate visualizations in a process called manual gating. However, this method requires extensive background knowledge of protein expression patterns of healthy and malignant hematopoiesis and is time-consuming. Furthermore, the subjectivity of gating can result in undesired variability in MRD percentages, derived from the proportion of LAIP cells relative to the total white blood cells (WBC). The threshold for MRD positivity is currently 0.1%2, so small differences in manual gating can affect the reliability of MRD positivity. Therefore, an objective and standardized MRD assessment is a clinical imperative for AML management.

A promising new approach for objective MRD assessment in AML is computational MRD (cMRD) assessment. Previous applications in other hematological malignancies, such as chronic lymphocytic leukemia4,5, acute lymphoblastic leukemia6,7, and multiple myeloma8, have demonstrated varying degrees of success. For AML, several studies have utilized (semi-)supervised machine learning and artificial intelligence to identify leukemic features for automated AML-MRD assessment6,9,10,11,12,13,14,15. However, the heterogeneity of marker expression in AML poses a significant challenge for supervised approaches: more than 100 distinct LAIPs have been described in AML, with some observed in less than 1% of patients16. Because LAIPs manifest as distinct clusters in high-dimensional data, supervised approaches require training models on the full spectrum of AML phenotypes. This requires unrealistically large amounts of training data, which is infeasible for smaller diagnostic centers. Additionally, the development and implementation of such models require consistent use of identical flow cytometry panels and platforms over time and would become unusable if new test samples deviate from previous training data.

Several algorithms that limit the challenges of supervised approaches have been proposed. One approach allows for clustering a single AML sample with a set of (disease-free) control or “reference” samples, assuming that cells with characteristics absent from the reference coincide with leukemic populations of interest. Leukemic clusters can then be delineated in a “computer-assisted” setting15,17,18,19, in which clusters are manually evaluated, or determined using a heuristic. For example, Jacqmin et al. 20. and Weijler et al. 21. used the ratio of patient and control cells within clusters to define potential leukemic clusters. However, the degree to which leukemic blasts form a separate cluster depends on the mixture of leukemic to control cells. Additionally, these clustering algorithms are heavily dependent on density patterns in feature space, which may fail for rare cell populations and in high dimensions. Weijler et al. 21. attempted to circumvent this by reducing the data to three dimensions using Uniform manifold approximation and projection (UMAP). However, such transformations may extensively distort expression data22,23, potentially limiting their applicability in clinical settings.

Another promising approach to identify leukemic blasts may come from novelty detection algorithms, which evaluate whether a new sample contains anomalous observations that do not occur (or with a low frequency) in training data. Consequently, the need for ad-hoc clustering together with a reference is alleviated, and the same model can be used and validated for use across AML samples. Additionally, as novelty detection evaluates each cell individually, it is not affected by the rarity of leukemic blasts in test samples.

In this study, we developed and evaluated a novel pipeline for fully automated MRD assessment in AML using Gaussian mixture models (GMMs). Briefly, these statistical models fit a combination of Gaussian distributions to the data, representing clusters (“components”) of cells with similar marker expression. Using these models, we identified the cell subset containing healthy immature and leukemic blasts. Next, we identified leukemic blasts within this subset and predicted the MRD status. We applied the pipeline to a large flow cytometry dataset which was acquired according to standardization criteria of the European LeukemiaNet guidelines2. This dataset was annotated and labeled extensively, and made publically available to provide further potential for benchmarks of computational AML-MRD strategies.

Methods

Datasets

Involvement of human participants was approved by the institutional review boards of Amsterdam UMC (#METC-2023.0883) for in-house participants and Erasmus MC (#MEC-2013-539) for patients in the HOVON-SAKK-132 trial24. The study followed the Declaration of Helsinki with written informed consent from all patients.

Bone marrow samples were obtained via aspiration from the posterior superior iliac spine in pulls of 1–2 mL25 from AML patients or from the sternum during cardiothoracic surgery for normal bone marrow. Three anonymized cohorts of patient samples were collected for model training and optimization. The first cohort, BLAST110, includes 90 AML and 20 normal bone marrow in-house patients measured between 2015 and 2022. For AML patients, a mixture of diagnosis (n = 30) and follow-up (n = 60) samples with different frequencies of leukemic blasts at different time points were selected for phenotypic variation of AML progression. For follow-up samples, an even mixture (20 - 20 – 20 samples) of MRDlow (0–0.1%), MRDintermediate (0.1%–1%), and MRDhigh (≥1%) samples were randomly selected from the total patient cohort between 2015 and 2022. The second cohort, LAIP29, comprised another 29 in-house AML patients with samples from diagnostic (n = 28) and follow-up (n = 20) time points. Lastly, from patients included in the HOVON-SAKK 132 trial24, a subset of 18 patients with an event-free survival of 3 years, without a LAIP at diagnosis and MRD negativity at follow-up, was set aside for the construction of a disease-free reference dataset, which we refer to as regenerating bone-marrow (RBM18).

Flow cytometry

Flow cytometry measurements were performed on FACS CANTO II (BD Biosciences, San Jose, CA, USA) equipment using a four-tube (P1-P4), eight-color antibody panel26 (Supplementary Table 1). To explore potential batch effects, we visualized each marker’s percentiles (interpercentile distance of 10%, excluding minimum and maximum) for each file across the three cohorts using principal component analysis, which did not show a clear technical confounder (Supplementary Fig. 1).

In the BLAST110 dataset, blasts were manually gated in FlowJo (v10.8.1) by identifying CD45dim/CD34+ and CD45dim/CD117+ cells (Supplementary Fig. 2). Cell labeling was extracted from FlowJo workspace files in R (4.3.1) using CytoML (v2.12.0)27. In the LAIP29 and RBM18 datasets, every event was given a unique identifier before manual AML-MRD analysis26 in Infinicyt (v2.0.5b). Each manually gated population was exported as a unique FCS file, after which an R script was used to obtain cell labeling similar to that obtained through FlowJo. For the RBM18 dataset, any LAIP+ cells were removed.

Flow cytometry pre-processing

Events exceeding the instrument’s capacity were removed using PeacoQC (v1.10.0)28, after which fluorescence parameters were compensated. Data was transformed using the logicle transformation29. The w parameter of the transform was based on the median value as determined using the estimateLogicle function in flowCore (v2.12.0)30 based on the BLAST110 cohort. An approximated min-max scaling was applied for scatter parameters based on the 0.01 and 0.99 quantiles. Doublets were removed by excluding all cells identified by PeacoQC’s RemoveDoublets or flowStat’s (4.12.0) singletGate.

Supervised blast classification

Supervised models and their hyperparameters (Supplementary Table 2) were compared using nested group 10-fold cross-validation to classify blasts in the BLAST110 dataset. In this benchmark, we also included a supervised variant of the FlowSOM clustering algorithm (FlowSOMclf) in Python (v3.9.10)31,32. In this setting clusters of the default 10 × 10 grid in training data were assigned as blast clusters if the ratio to blast to non-blast cells exceeded a threshold. We treated this threshold as a tunable hyperparameter during model training.

To develop a model with applicability across the four-tube panel, models were trained exclusively on SSC-A, CD45, CD34, and CD117. Patient identifiers were used as groupings to prevent data leakage between training and validation folds. Optimal hyperparameters were chosen based on the best performing (highest F-score) hyperparameter set based on either exhaustive search (logistic regression, support vector machine, FlowSOMclf) or 20 randomized search iterations (random forests, LightGBM, GMMclf). For computational considerations, models were trained on downsampled data by randomly sampling 2000 total cells from each FCS file. After model selection, a final GMM classifier was finetuned and fitted on a more extensive (5000 total cells/sample) dataset, selecting the final hyperparameters using an exhaustive search in group 10-fold cross-validation. In all performance evaluation settings, classification performance was calculated for full samples without downsampling.

Supervised WBC count identification

The cell count corresponding to each non-blast component in the GMM classifier was obtained to predict the WBC count. For modeling, we used scikit-learn’s (v1.4.0) implementation of linear regression. In the BLAST110 cohort, we first assessed training performance using leave-one-out cross-validation using patient identifiers as groups. Next, the model was trained on the features from the BLAST110 cohort before evaluation in the LAIP29 cohort.

Reference modeling and novelty detection

An aggregated dataset was created from the RBM18 samples by sampling 4000 cells from every FCS file, ensuring an equal contribution of samples. A unique GMM was trained on fluorescence parameters in the aggregated data for each tube. The number of components (range: 1–20) was optimized using the Bayesian information criterion (BIC) score in leave-one-out cross-validation, using individual patients as held-out test sets. Cells were marked as novelties if they were below a log-likelihood threshold defined by a percentile of the log-likelihood distribution in the aggregated RBM18 data (e.g., 10%). For nuSVM and LOF-based novelty detection, scikit-learn implementations were used, using nu = 0.05 for the nuSVM and contamination = 0.05 for the LOF.

For the UMAP-HDBSCAN pipeline21, we used previously described parameters for UMAP (min_dist = 0, n_components = 3) and HDBSCAN (min_cluster_size = 50). Clusters were marked as leukemic if a cluster contained less than 5% control events. Although the authors originally used 80% of the cell count of a test sample to define the reference cell count, this was not always feasible for test samples with large blast counts due to the size of our reference dataset. We used downsampled test data for these samples to identify the aberrant cluster, after which all test data was used for evaluation. Although this could theoretically have lowered sensitivity, samples with such high blast counts were typically diagnosis samples for which sensitivity was unlikely to be the limiting factor.

Results

To perform automated MRD assessment of AML samples, we used a two-staged approach (Fig. 1). In the first stage, leukemic blasts and their healthy myeloid counterparts are identified manually or automatically using a classifier based on GMMs. Next, control samples without leukemic blasts are used to train a GMM reference model. Subsequently, we use this model to identify blasts absent in the control data (i.e., “novelties”). These predicted leukemic blasts can be visually inspected during expert evaluation, and, combined with a manually determined or predicted WBC count, yield a computational MRD percentage (cMRD%). We explain these steps in more detail in the following sections.

A cMRD percentage is calculated for test samples in two stages. First, immature blasts are identified either manually or through a GMM classifier. From the GMM classifier, the WBC count can also be inferred, but can also be determined using a manual gate. Next, a reference model trained on control blasts identifies leukemic blasts through novelty detection. These predicted blasts can be validated by experts, and together with the WBC count as a denominator is used to calculate a cMRD%. MRD, measurable residual disease; cMRD, computational measurable residual disease; GMM, Gaussian mixture model; WBC, white blood cell.

Interpretable identification of myeloid blasts using Gaussian mixture models

Because leukemic (LAIP + ) blasts are present in very low proportions (below 0.1% of WBCs) in bone marrow samples after chemotherapy, we applied a strategy to enrich for these cells, facilitating their detection. Leukemic blasts are blocked in differentiation and can be found in the compartment of hematopoietic immature blasts, characterized by the following flow cytometry parameters: low side scatter (SCC-A), moderate expression of CD45, and high expression of CD34 or CD11726. To enrich for these cells without human intervention, we trained GMMs on SSC-A intensity and CD45, CD34, and CD117 expression patterns. However, instead of using GMM in the usual unsupervised clustering setting, we used them for classification (Supplementary Text 1). By fitting separate GMMs on blast and non-blast populations in labeled training data, we could calculate two scores for every cell: the (log-)likelihood of a cell belonging to the blast model and the (log-)likelihood of a cell belonging to the non-blast model. These scores were used to classify cells by assigning them to the most likely population.

We benchmarked the GMM classifier (GMMclf) for blast identification against other supervised algorithms, such as logistic regression (LR), support vector machines (SVM), random forests (RF), gradient boosting (LightGBM) and a supervised classifier based on FlowSOM clustering (FlowSOMclf). Performance was first evaluated on a training set (BLAST110) of 90 AML and 20 normal bone marrow samples comprising different manually gated phenotypes (Fig. 2a). To obtain an unbiased performance assessment with optimal hyperparameters (Supplementary Table 2), models were compared using nested cross-validation, using a model selection step before further evaluation and validation33. Cross-validation in the training set revealed GMMclf as the best-performing method in terms of accuracy (Median = 0.987) and recall (Median = 0.997) (Fig. 2b). Moreover, predicted blast counts showed high concordance (Spearman’s rho = 0.940) with manual analysis (Fig. 2c). While the FlowSOM-based classifier showed the highest F-score and specificity, the superior sensitivity and interpretability of the GMM classifier outweighs this modest difference, especially in a pre-filtering setting.

a Overview of nested cross validation for model optimization and comparison. b Model performance in BLAST110 training set (n = 110 individuals, n = 403 measurements). c Concordance between manual and predicted blast counts in BLAST110 training set (n = 110 individuals, n = 403 measurements). d GMMclf performance in LAIP29 test set (n = 29 individuals, n = 82 measurements). e Concordance between manual and predicted blast counts in LAIP29 test set (n = 29 individuals, n = 82 measurements). f Concordance of leukemic blast count in manual and GMMclf predicted blast compartment in LAIP29 test set (n = 29 individuals, n = 82 measurements). In annotated points (<50% LAIP+ cells conserved), LAIPs were expressed on blast phenotypes not accounted for during model training. g Positions of GMMclf components for blasts (K = 2, red) and non-blasts (K = 3, blue). h Predicted blasts (red) and non-blasts (blue) for a single sample. BLAST110, training cohort, LAIP29 test cohort, LR logistic regression, SVM support vector machine, RF random forest, LightGBM light gradient-boosting machine, FlowSOMclf FlowSOM classifier, GMMclf Gaussian mixture model classifier, LAIP leukemia-associated immunophenotype.

After initial benchmarking, we further refined the GMM classifier on the full BLAST110 cohort with an exhaustive parameter search. In this setting, we observed optimal performance when using two components for blasts and three for non-blasts (Supplementary Fig. 3). We validated this final model in a test cohort of 29 AML patients (LAIP29) in which blasts were gated by a different expert to prevent human bias. Compared to performance in the BLAST110 training set, the final model resulted in a higher F-score (Median = 0.861; Fig. 2d) and concordance (Spearman’s Rho = 0.974; Fig. 2e) with manual analysis in the LAIP29 test set. This may be based on the model refinement or differences in gating.

Lastly, we evaluated if the leukemic blasts we aimed to identify in the second stage of the pipeline (Fig. 1) were not lost as false negatives as a result of automated blast identification. High recall for leukemic blasts was confirmed (Fig. 2f). In isolated instances of notable (>50%) loss of leukemic populations, LAIP expression on CD45- (pt7) or CD34-CD117- cells in the blast region (pt9) accounted for the observed variation—cell types not accounted for during model training.

Because the components in each model approximate known cell populations (Fig. 2g), model decisions (Fig. 2h) remain interpretable in 2D visualizations. This, combined with the observed performance, yields an automated blast identification that is both accurate and highly interpretable.

Reference models describe myeloid maturation patterns in regenerating bone marrow

Leukemic blasts in AML are commonly defined using aberrant protein expression patterns, which are absent on blasts in normal or regenerating bone marrow. We first evaluated whether a GMM could model patterns of hematopoiesis in regenerating bone marrow, which could be a negative control for detecting leukemic blasts in AML samples. In 18 apparent disease-free AML samples after induction chemotherapy (regenerating bone marrow 18; RBM18 set), we used cross-validation to pick an optimal number of components (K) in each tube for the fluorescence intensities of manually gated and predicted blasts, ensuring that components generalize across the cohort without overfitting (Fig. 3a).

a Overview of cross-validation for model training and evaluation. b Model components in CD34 vs. CD117. c Model components in CD13 vs HLA-DR. d Model components in CD13 vs. CD36. e Model components in CD34 vs. CD19. For all shown components, the GMM for manual blast input was used. RBM18 regenerating bone marrow training cohort, LAIP29 test cohort, Dx diagnosis, FU follow-up, GMM Gaussian mixture model, LOOCV leave-one-out cross-validation.

Depending on the input data and tube, we identified a different optimal number of components, ranging from 5 to 11 (Supplementary Fig. 4), which were subsequently annotated by experts (Supplementary Tables 3–4). This showed that the reference models provided a detailed representation of known healthy progenitor counterparts to leukemic populations such as promyelocytes, early monocytes and erythroid precursors. Bivariate visualizations of the model components captured known maturation patterns (Fig. 3b, c). Additionally, certain cell types such as CD36+ erythroid precursors (component 3 in Fig. 3d) and CD19+ pre-B cells (component 2 in Fig. 3e) stood out as single components. In several components, residual debris, cell types without a malignant counterpart in AML (e.g., pre-B cells) and cells with high background levels of LAIPs (CD22, CD15, CD56) that are potentially induced by chemotherapy could still be identified (Supplementary Tables 3–4). However, as these cell types are also identified in test cases, their inclusion in the model will prevent false positives during novelty detection, and therefore we decided to still include these cells.

Novelty detection facilitates automated AML MRD assessment

Using the reference models, we evaluated whether GMM-based novelty detection (Supplemental Text 1) can identify leukemic blasts in the LAIP29 cohort. We benchmarked performance with the UMAP-HDBSCAN pipeline21, the only comparable published method capable of semi-supervised detection of leukemic populations in a fully automated setting, and novelty detection algorithms not yet evaluated in the context of AML: the one-class support vector machine (nuSVM) and local outlier factor (LOF).

To perform GMM-based novelty detection, we first calculated the log-likelihood based on the reference models for all blasts. To identify aberrant blasts, we evaluated a range of potential thresholds based on percentiles of the log-likelihood distribution in regenerating bone marrow below which we label cells leukemic (Fig. 4a). For example, a cell with a log-likelihood below the 10% percentile can be considered more anomalous than 90% of cells in regenerating bone marrow. As a proof-of-principle, we evaluated six intuitive thresholds: 25%, 10%, 5%, 2.5%, 1% and 0.1%. Using the GMM with manually gated blast input, we found that the 25% log-likelihood percentile threshold recovered nearly 100% of leukemic blasts in a semi-automated setting (Fig. 4b). Lowering the threshold reduced the number of recovered cells but increased the precision. At a percentile threshold of around 1%, a trade-off between precision and recall was identified. For predicted blast input in a fully automated setting, this cut-off was around 5%, suggesting a lower discriminatory potential. Using these thresholds (1% for manually gated blast input in a semi-automated setting, 5% for predicted blast input in a fully automated setting), we retrieved a leukemic blast count for every sample.

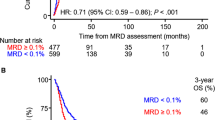

a Theoretical example of how log-likelihood values extracted from a reference model separate leukemic (LAIP+) and non-leukemic (LAIP-) cells. b Trade-off between mean precision and recall across different GMM percentile thresholds in the semi and fully automated setting in 47 Dx and 35 FU measurements from LAIP29 cohort. c Correlation between MRD% and cMRD% for GMM-based novelty detection in semi- and fully automated settings (47 Dx, 35 FU measurements from LAIP29 cohort). d Correlation between MRD% and cMRD% for UMAP-HDBSCAN in semi- and fully automated settings (47 Dx, 35 FU measurements from LAIP29 cohort). e Spearman correlation between MRD% and cMRD% for different methods in semi- and fully automated settings in 47 Dx and 35 FU measurements from the LAIP29 cohort. f ROC curve for GMM-based cMRD% and manual MRD positivity (>0.1% of WBCs) in 35 FU measurements from the LAIP29 cohort. g Concordance between cMRD and MRD positivity (>0.1% of WBCs) in the semi- and fully automated setting in LAIP29 FU measurements (n = 35). Optimal thresholds (dotted lines) are defined by ROC-AUC statistics. MRD measurable residual disease, cMRD computational measurable residual disease, GMM Gaussian mixture model, LOF local outlier factor, nuSVM one-class support vector machine, LAIP leukemia-associated immunophenotype, Dx diagnosis, FU follow-up, LAIP29 test cohort receiver operating characteristic, AUC area under the curve, ROC, WBC white blood cell.

The prognostic relevance of MRD after initial chemotherapy is established and clinically validated using the CD45-expressing cells as a denominator2. To translate the predicted number of leukemic blasts in our pipeline to a similar, clinically useful MRD status, we hypothesized that the WBC count could be inferred from the mixture components of the GMM classifier based on the presence of CD45 positive and negative components in parallel to the first blast classification (Fig. 2g). We obtained the cell counts associated with each component for the non-blast GMM in the BLAST110 cohort and used this as input for a linear regression model to predict the manual WBC count. This model could accurately determine the WBC count in the BLAST110 and LAIP29 cohorts with a root mean square error of around 21,000 (Supplementary Fig. 5). Like predicted blast counts, WBC counts were highly correlated with manual analysis in the BLAST110 training cohort (Spearman’s rho = 0.975) and the LAIP29 test cohort (Spearman’s rho = 0.973). Consequently, we used the predicted WBC count in a fully automated setting to calculate the cMRD%.

Enumerating the predicted leukemic blast count combined with the WBC count resulted in a cMRD% for each of the four methods for the semi-automated and fully-automated setting. The GMM-based cMRD% in the LAIP29 cohort showed high concordance with manual gating in both the semi (Spearman’s rho = 0.766) and fully (Spearman’s rho = 0.823) automated setting (Fig. 4c). Performance was superior to the UMAP-HDBSCAN approach, which did not recover any leukemic blasts in 34% (semi-automated) or 44% (fully automated) of samples, as leukemic populations failed to form distinct clusters in the UMAP embedding (Fig. 4d). In general, all novelty detection methods (GMM, LOF, nuSVM) outperformed the clustering-based approach of UMAP-HDBSCAN (Fig. 4e).

Next, as a proof-of-principle, we evaluated whether the cMRD% calculated by the GMM-based pipeline could distinguish between MRD+ and MRD- samples in the AML follow-up measurements in the LAIP29 cohort (n = 35, mean 937,164 total cells per sample). We found that the pipeline allowed for distinguishing between MRD positive and negative samples (ROC-AUC > 0.97) in the semi- and fully automated setting (Fig. 4f) in under three seconds (Supplementary Table 5). Visualization of the optimal cMRD% thresholds defined by ROC-AUC statistics revealed that 34 out of 35 (97%) measurements can be correctly classified. One measurement (tube P1 of pt11) was misclassified using the these cut-offs as a false negative. Interestingly, this patient became MRD-negative shortly after and did not die of AML-related causes.

Predictions made by the pipeline can be interpreted in conventional bivariate plots. We illustrate this with one of the follow-up samples from the LAIP29 cohort (pt13). Manual gating of this sample identified two LAIPs (CD13 + /CD15 + , CD13 + /HLA-DR-) expressed on CD34+ blasts (Fig. 5a). Interestingly, both LAIPs were co-expressed on the majority of cells, but a minority also expressed either one of the LAIPs independently. In Fig. 5b, we show how the log-likelihood percentile highlights the bulk of anomalous cells, corresponding with the aberrant region identified by gating in Fig. 5a. Using the optimal log-likelihood percentile threshold identified in Fig. 4b, the pipeline correctly identified 5870 out of 6129 leukemic cells (Fig. 5c).

a Manual gating identified two LAIPs (CD13 + /CD15 + , CD13 + /HLA-DR-) both expressed on CD34+ blasts. Both LAIPs are co-expressed as well as independently. b Computational analysis quantifies the aberrancy of individual cells. Cells with a low log-likelihood values are less likely to be observed in regenerating bone marrow. c Final output of pipeline with predicted normal (blue) and leukemic (red) cells according to the optimal log-likelihood percentile threshold. MRD, measurable residual disease; LAIP, leukemia-associated immunophenotype; LAIP29, test cohort.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Discussion

This study assessed the feasibility of using mixture models for automated AML-MRD assessment. We found that classification using two GMMs is a suitable approach for automated blast detection, which is highly accurate (Median F-score = 0.86; Fig. 2d) and outperforms more complex supervised learning algorithms. Moreover, this approach is highly flexible, as model components could be repurposed for additional tasks such as inference of the WBC count. To identify leukemic blasts in the heterogeneous context of AML, we used GMMs for novelty detection rather than previous supervised or “cluster-with-normal” approaches. This approach outperformed the UMAP-HDBSCAN pipeline and correctly classified MRD status in 34 out of 35 AML follow-up measurements (Fig. 4g) in under three seconds. Beyond this set, further retrospective and prospective studies in independent cohorts are required for validation of the model and its hyperparameters. Importantly, all model decisions throughout the pipeline remain interpretable in conventional 2D visualizations, demonstrating a promising powerful automated AML-MRD assessment.

A key advantage of our approach lies in its minimal dependence on training data. With just 18 regenerating bone marrow samples, we could effectively discriminate between MRD-positive and MRD-negative samples. This facilitates scalability and transfer of MRD assessment across clinical centers and mitigates issues related to cross-center batch effects. Additionally, as the field moves towards higher dimensional cytometry platforms34, our approach offers a smoother transition than supervised models, which become invalid upon adopting new platforms. While our utilization of clinical data for control sample selection may not always be feasible, alternative sources, such as samples from ALL11,12, stage 1 lymphoma13, immune/idiopathic thrombocytopenic purpura patients20 have been successfully employed in previous studies. Although normal bone marrow could be the most accessible type of control, induction chemotherapy may induce aberrancies35, which should ideally be represented in reference models. Nevertheless, the optimal size and suitability of different control samples have not been explored extensively and should be evaluated in future research.

Despite the model reaching a high level of concordance with manual MRD assessment, performance remained suboptimal at the cell level. In particular, we found a high false positive rate as many blasts gated as non-leukemic in AML samples were classified as novelties. These anomalous events within measurements may be attributed to different sources such as poor sample quality, varying antibody titrations or instrument settings and aspecific antibody staining, causing cells to occupy otherwise empty regions within feature space. Preemptive sample-level quality control measures could potentially identify such cases beforehand, evaluating the extent of sample alignment with reference data. Alternatively, discordant cases could contain leukemic populations missed by manual gating, but validating this requires an alternative ground truth at the single cell level.

Another possibility for refining a GMM-based approach is through improved modeling. For example, modeling control data is performed in a “pooled” data setting, but does not take into account the sample-level information. Inclusion of such information has already proven useful in unsupervised36 and supervised settings37 but could prove particularly beneficial in heterogeneous AML cohorts. Alternatively, alternative mixture model architectures can improve the modeling of non-Gaussian clusters38. By better approximation of the distribution of cells in feature space, such models could aid in delineating healthy progenitors, improving the detection of anomalous leukemic blasts as novelties. This aspect is also relevant for novel AML-MRD panels39, as estimating density patterns in high dimensional cytometry data may be challenged by the curse of dimensionality40.

Several studies have also evaluated the possibility of using unsupervised clustering algorithms such as FlowSOM31 to objectify and augment manual gating in a computer-assisted setting15,17,18,19. Although our pipeline output could serve as the input for further refinement in gating software, we recently demonstrated limited inter-operator variability for our assay41. Nevertheless, it could potentially identify leukemic populations not identified by manual gating, thereby augmenting the gating process. This is of particular interest for centers with less experience in AML MRD gating, where this data-driven approach could aid harmonization efforts. However, human examination of model output will remain crucial for models used in clinical settings. In our opinion, the interpretability of GMMs in bivariate visualizations can aid in this effort by allowing for a detailed interpretation of the models “under the hood”.

Finally, by making the standardized and labeled datasets used in this study publicly available, we hope other researchers are stimulated to advance flow cytometry bioinformatics for AML. Consequently, future studies can build upon these findings, improving the modeling of rare and heterogeneous cell populations in high dimensional data to advance the management of AML.

Data availability

FCS files from the BLAST110 and LAIP29 datasets and the associated cell labeling are publicly available at https://zenodo.org/records/11046402 (https://doi.org/10.5281/zenodo.11046401)42. All source data is available in Supplementary Data 1. All other data are available from the corresponding author on reasonable request.

Code availability

Scripts for preprocessing FCS files and subsequent modeling and analysis are available at https://github.com/AUMC-HEMA/cMRD-manuscript and are deposited at https://zenodo.org/records/13474900 (https://doi.org/10.5281/zenodo.13474899)43.

References

Short, N. J. et al. Association of measurable residual disease with survival outcomes in patients with acute myeloid leukemia: a systematic review and meta-analysis. JAMA Oncol. 6, 1890–1899 (2020).

Heuser, M. et al. 2021 Update on MRD in acute myeloid leukemia: a consensus document from the European LeukemiaNet MRD Working Party. Blood 138, 2753–2767 (2021).

Grimwade, D. & Freeman, S. D. Defining minimal residual disease in acute myeloid leukemia: which platforms are ready for “prime time”? Blood. J. Am. Soc. Hematol. 124, 3345–3355 (2014).

Salama, M. E. et al. Artificial intelligence enhances diagnostic flow cytometry workflow in the detection of minimal residual disease of chronic lymphocytic leukemia. Cancers 14, 2537 (2022).

Nguyen, P. C. et al. Computational flow cytometry provides accurate assessment of measurable residual disease in chronic lymphocytic leukaemia. Br. J. Haematol. 202, 760–770 (2023).

Arvaniti, E. & Claassen, M. Sensitive detection of rare disease-associated cell subsets via representation learning. Nat. Commun. 8, 14825 (2017).

Verbeek, M. W. et al. Minimal residual disease assessment in B‐cell precursor acute lymphoblastic leukemia by semi‐automated identification of normal hematopoietic cells: a EuroFlow study. Cytometry Part B: Clin. Cytometry 106, 252–263 (2023).

Flores-Montero, J. et al. Next Generation Flow for highly sensitive and standardized detection of minimal residual disease in multiple myeloma. Leukemia 31, 2094–2103 (2017).

Ko, B.-S. et al. Clinically validated machine learning algorithm for detecting residual diseases with multicolor flow cytometry analysis in acute myeloid leukemia and myelodysplastic syndrome. EBioMedicine 37, 91–100 (2018).

Ni, W. et al. Automated analysis of acute myeloid leukemia minimal residual disease using a support vector machine. Oncotarget 7, 71915 (2016).

Licandro, R. et al. Application of machine learning for automatic MRD assessment in paediatric acute myeloid leukaemia. Cancer cells 1012, 1010 (2018).

Licandro, R. et al. WGAN latent space embeddings for blast identification in childhood acute myeloid leukaemia. In 24th International Conference on Pattern Recognition (ICPR) 3868–3873 (ICPR, 2018).

Rajwa, B., Wallace, P. K., Griffiths, E. A. & Dundar, M. Automated assessment of disease progression in acute myeloid leukemia by probabilistic analysis of flow cytometry data. IEEE Trans. Biomed. Eng. 64, 1089–1098 (2016).

Weijler, L. et al. FATE: Feature-Agnostic Transformer-based Encoder for learning generalized embedding spaces in flow cytometry data. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision 7956–7964 (IEEE, 2024).

Shopsowitz, K. et al. MAGIC-DR: An interpretable machine-learning guided approach for acute myeloid leukemia measurable residual disease analysis. Cytometry. Part B, Clin. Cytometry. 106, 239–251 (2024).

Terwijn, M. et al. High prognostic impact of flow cytometric minimal residual disease detection in acute myeloid leukemia: data from the HOVON/SAKK AML 42A study. J. Clin. Oncol. 31, 3889–3897 (2013).

Vial, J. P. et al. Unsupervised flow cytometry analysis allows for an accurate identification of minimal residual disease assessment in acute myeloid leukemia. Cancers 13, 629 (2021).

Bücklein, V. et al. Flowsom: an R-based evaluation strategy for flow cytometry-based Measurable Residual Disease (MRD) diagnostics in Acute Myeloid Leukemia (AML). Blood 134, 4656 (2019).

Canali, A. et al. Prognostic impact of unsupervised early assessment of bulk and leukemic stem cell measurable residual disease in acute myeloid leukemia. Clin. Cancer Res. 29, 134–142 (2023).

Jacqmin, H. et al. Clustering and Kernel density estimation for assessment of measurable residual disease by flow cytometry. Diagnostics 10, 317 (2020).

Weijler, L. et al. UMAP based anomaly detection for minimal residual disease quantification within acute myeloid leukemia. Cancers 14, 898 (2022).

Chari, T. & Pachter, L. The specious art of single-cell genomics. PLOS Comput. Biol.19, e1011288 (2023).

Wang, S., Sontag, E. D. & Lauffenburger, D. A. What cannot be seen correctly in 2D visualizations of single-cell ‘omics data? Cell Syst. 14, 723–731 (2023).

Löwenberg, B. et al. Addition of lenalidomide to intensive treatment in younger and middle-aged adults with newly diagnosed AML: the HOVON-SAKK-132 trial. Blood Adv. 5, 1110–1121 (2021).

Cloos, J. et al. Comprehensive protocol to sample and process bone marrow for measuring measurable residual disease and leukemic stem cells in acute myeloid leukemia. JoVE (Journal of Visualized Experiments), 133, e56386 (2018).

Zeijlemaker, W., Kelder, A., Cloos, J. & Schuurhuis, G. J. Immunophenotypic detection of measurable residual (stem cell) disease using LAIP approach in acute myeloid leukemia. Curr. Protoc. Cytometry 91, e66 (2019).

Finak, G., Jiang, W. & Gottardo, R. CytoML for cross‐platform cytometry data sharing. Cytometry Part A 93, 1189–1196 (2018).

Emmaneel, A. et al. PeacoQC: Peak‐based selection of high quality cytometry data. Cytometry Part A 101, 325–338 (2022).

Parks, D. R., Roederer, M. & Moore, W. A. A new “Logicle” display method avoids deceptive effects of logarithmic scaling for low signals and compensated data. Cytometry Part A: J. Int. Soc. Anal. Cytol. 69, 541–551 (2006).

Hahne, F. et al. flowCore: a Bioconductor package for high throughput flow cytometry. BMC Bioinform. 10, 1–8 (2009).

Van Gassen, S. et al. FlowSOM: Using self‐organizing maps for visualization and interpretation of cytometry data. Cytometry Part A 87, 636–645 (2015).

Couckuyt, A., Rombaut, B., Saeys, Y. & Van Gassen, S. Efficient cytometry analysis with FlowSOM in Python boosts interoperability with other single-cell tools. Bioinformatics 40, btae179 (2024).

Lever, J., Krzywinski, M. & Altman, N. Points of significance: model selection and overfitting. Nat. Methods 13, 703–705 (2016).

Fokken, H. et al. A 19‐color single‐tube full spectrum flow cytometry assay for the detection of measurable residual disease in acute myeloid leukemia. Cytometry Part A 105, 181–195 (2023).

Hanekamp, D., Bachas, C., van de Loosdrecht, A., Ossenkoppele, G. & Cloos, J. Re: Myeloblasts in normal bone marrows expressing leukaemia-associated immunophenotypes. Pathology 52, 289–291 (2020).

Cron, A. et al. Hierarchical modeling for rare event detection and cell subset alignment across flow cytometry samples. PLoS Comput. Biol. 9, e1003130 (2013).

Wödlinger, M. et al. Automated identification of cell populations in flow cytometry data with transformers. Comput. Biol. Med. 144, 105314 (2022).

Frühwirth-Schnatter, S. & Pyne, S. Bayesian inference for finite mixtures of univariate and multivariate skew-normal and skew-t distributions. Biostatistics 11, 317–336 (2010).

Fokken, H. et al. A 19‐color single‐tube full spectrum flow cytometry assay for the detection of measurable residual disease in acute myeloid leukemia. Cytometry Part A 105, 181–195 (2024).

Lin, L. & Li, J. Clustering with hidden Markov model on variable blocks. J. Mach. Learn. Res. 18, 1–49 (2017).

Tettero, J. M. et al. Analytical assay validation for acute myeloid leukemia measurable residual disease assessment by multiparametric flow cytometry. Cytometry Part B: Clin. Cytometry 104, 426–439 (2023).

Mocking, T. R. et al. Flow cytometry data from: computational measurable residual disease assessment in acute myeloid leukemia using mixture models. Zenodo. https://doi.org/10.5281/zenodo.11046402 (2024).

Mocking, T. R. et al. Code from: computational measurable residual disease assessment in acute myeloid leukemia using mixture models. Zenodo. https://doi.org/10.5281/zenodo.13474900 (2024).

Acknowledgements

We would like to thank W.N. van Wieringen for a critical reading of the manuscript. This project was in part funded by an AMC-VUMC Cancer Center Amsterdam grant (#CCA_2020-9-68).

Author information

Authors and Affiliations

Contributions

T.M. and C.B. conceptualized and designed the study. A.K., L.L.N., and P.G. assisted with curation and selection of clinical data. T.M. and A.K. performed manual gating. T.M. performed bioinformatics and statistical analyses. P.R. provided feedback on statistical modeling. A.K. and T.R. provided expertise on data interpretation. C.B. and J.C. supervised the project. T.M. wrote the paper with input from all authors.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Medicine thanks the anonymous reviewers for their contribution to the peer review of this work. [Peer review reports are available.]

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Mocking, T.R., Kelder, A., Reuvekamp, T. et al. Computational assessment of measurable residual disease in acute myeloid leukemia using mixture models. Commun Med 4, 271 (2024). https://doi.org/10.1038/s43856-024-00700-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s43856-024-00700-x