Abstract

Machine-learned potentials (MLPs) have exhibited remarkable accuracy, yet the lack of general-purpose MLPs for a broad spectrum of elements and their alloys limits their applicability. Here, we present a promising approach for constructing a unified general-purpose MLP for numerous elements, demonstrated through a model (UNEP-v1) for 16 elemental metals and their alloys. To achieve a complete representation of the chemical space, we show, via principal component analysis and diverse test datasets, that employing one-component and two-component systems suffices. Our unified UNEP-v1 model exhibits superior performance across various physical properties compared to a widely used embedded-atom method potential, while maintaining remarkable efficiency. We demonstrate our approach’s effectiveness through reproducing experimentally observed chemical order and stable phases, and large-scale simulations of plasticity and primary radiation damage in MoTaVW alloys.

Similar content being viewed by others

Introduction

Atomistic simulations of elemental metals and their alloys play a crucial role in understanding and engineering materials properties. While quantum-mechanical methods such as density-functional theory (DFT) calculations can be directly used for small simulation cells and short sampling times, their feasibility quickly diminishes with increasing spatial and temporal scales. For large-scale classical atomistic simulations, both molecular dynamics(MD) and Monte Carlo (MC) simulations crucially depend on interatomic potentials. For metallic systems in particular, embedded-atom method (EAM)-type potentials1,2 have proven to be useful and have been extensively applied over the past decades, especially for elemental metals and their alloys. However, these existing classical interatomic potentials often lack the required level of accuracy for numerous applications. This deficiency primarily stems from constrained functional forms. Recently, a paradigm for developing interatomic potentials has emerged based on machine learning (ML) techniques3,4,5,6,7,8. In a machine-learned potential (MLP), the interatomic potential is modeled using ML methods, allowing for a significantly greater number of fitting parameters and providing versatility as compared to traditional many-body potentials. The functional forms of these MLPs are remarkably flexible, free from the limitations of a small number of analytical functions suggested by physical and chemical intuition or fitting to ground state properties only. The combination of flexible functional forms and a large number of fitting parameters empowers MLPs to achieve a level of accuracy that can be well beyond that of the traditional many-body potentials.

The basic theory behind MLPs is rather mature now. There are two main ingredients of a MLP: the regression model and the descriptors as inputs to the regression model. For the construction of input descriptors, linearly complete basis functions for the atom-environments have been proposed9,10. For the regression model, linear regression9,11, artificial neural network (NN) regression12, and kernel-based regression13 have all been proven to be feasible approaches. The combination of equivariant (as opposed to invariant) constructions and message passing or graph NNs14,15 has also shown great potential in enhancing the regression accuracy of MLPs, albeit at the cost of reduced computational efficiency and challenges in maintaining parallelism.

Despite the higher accuracy offered by MLPs, there are still challenges for applying MLPs in materials modeling, namely the relatively higher computational cost of many MLPs compared to most conventional many-body potentials, and the absence of readily usable databases of MLPs that cover a large number of elements and their compounds. In some cases where an extensive database is available, one can use an available MLP to study a specific problem, but in many cases, one has to train a new one or improve an existing one before being able to study the problem at hand. In particular, there is no simple way to combine MLPs for different elements to build MLPs for their compounds or alloys. This can lead to repeated efforts in the community and the case-by-case approach of developing MLPs is neither optimal nor sustainable in the long run. Regarding the computational cost of MLPs, the neuroevolution potential (NEP) approach16,17,18 developed recently has been shown to yield excellent computational efficiency compared to other state-of-the-art methods, thanks to an optimization of the theoretical formalism and an efficient implementation in the GPUMD package19. The NEP approach can reach computational speeds unprecedented for MLPs, on par with empirical potentials, paving the way for the application of MLPs to large-scale atomistic simulations.

In this paper, we introduce a sustainable approach for the construction of MLPs. Although our approach could potentially be extended to construct a comprehensive MLP covering the entire periodic table, we have chosen a more focused task as a proof of concept. Our objective is to develop a general-purpose NEP model encompassing 16 elemental metals and their alloys. Previous attempts to create general-purpose MLPs for numerous elements, or even the entire periodic table, have been initiated by researchers such as Takamoto et al.20,21 and Chen and Ong22. These studies have introduced “universal” MLPs, covering up to 45 elements21 and 89 elements22, respectively. Despite being termed universal, these MLPs have a rather limited application range and are orders of magnitude slower than EAM potentials. General-purpose MLPs have only been conclusively demonstrated for elemental matter such as Si23, C24, Fe25, and Pb26. For compounds comprising multiple chemical species, a special class of transition-metal oxides27, binary Sn alloys with a few metals28, and Si–O29 have been successfully modeled using MLPs. However, for metallic alloys, it remains a highly nontrivial task to construct a unified MLP that can be reliably used for arbitrary chemical compositions. Here, our goal is to construct a genuinely general-purpose MLP for a diverse range of elements that matches the speed of EAM and surpasses it in the description of various physical properties.

Apart from achieving high accuracy and efficiency for the unified NEP model, which we term version 1 of unified NEP (UNEP-v1), we also propose an efficient approach for constructing the training dataset. Constructing a training dataset with all the possible chemical compositions is a formidable task. Fortunately, the NEP descriptor parameters depend only on pairs of elements. We will demonstrate that considering unaries and binaries alone for the training dataset is sufficient, yielding a NEP model that is transferable to systems with more components. Using this route, we achieve a transferable UNEP-v1 model for 16 elemental metals (Ag, Al, Au, Cr, Cu, Mg, Mo, Ni, Pb, Pd, Pt, Ta, Ti, V, W, Zr) and their diverse alloys, with only about 100000 reference structures. This accomplishment is evidenced by accurate predictions of formation energies across various test datasets comprising multi-component alloy systems, reproduction of experimentally observed chemical order and stable phases, and the generality and high efficiency of our UNEP-v1 model in large-scale MD simulations of mechanical deformation and primary radiation damage in MoTaVW refractory high-entropy alloys.

Results

A neural-network architecture for many-component systems

Our starting point is the NEP approach as described in ref. 18, called NEP3. In this work, we introduce two crucial extensions to NEP3 designed specifically for many-component systems. This extended approach will be called NEP4, which has been implemented in GPUMD during the course of this work and is available starting from version 3.8.

We first briefly introduce NEP318, which is a NN potential that maps a descriptor vector qi (with Ndes components) of a central atom i to its site energy Ui. The total energy of a system of N atoms is expressed as the sum of the site energies \(U={\sum }_{i=1}^{N}{U}^{i}\). The ML model is a fully connected feedforward NN with a single hidden layer with Nneu neurons,

where \(\tanh (x)\) is the activation function in the hidden layer, w(0) is the connection weight matrix from the input layer (descriptor vector) to the hidden layer, w(1) is the connection weight vector from the hidden layer to the output layer, b(0) is the bias vector in the hidden layer, and b(1) is the bias in the output layer. Denoting the weight and bias parameters in the NN collectively as w, we can formally express the site energy as

The descriptor vector consists of a number of radial and angular components. In this work, we utilize up to five-body angular components. For illustration purposes, we discuss the three-body angular components here. Interested readers are referred to Ref18. for the description of higher-order terms up to five-body angular components, which can help improve the model’s completeness30. A three-body angular descriptor component can be expressed as

where n and l represent the order of the radial and angular expansions, respectively. Here, the summation runs over all neighbors of atom i within a certain cutoff distance, rij represents the distance between atoms i and j, θijk is the angle for the triplet (ijk) with i being the central atom, and Pl(x) is the Legendre polynomial of order l. The functions gn(rij) depend solely on the distance rij and are therefore referred to as radial functions. These radial functions are defined as linear combinations of a number of basis functions:

The basis functions fk are constructed based on Chebyshev polynomials and a cutoff function, ensuring both formal completeness and smoothness. Explicit expressions for these functions can be found in ref. 18. The expansion coefficients \({c}_{nk}^{IJ}\) depend on n and k and also on the types (denoted as capitals I and J) of atoms i and j. Due to the summation over neighbors, the descriptor components defined above are invariant with respect to the permutation of atoms of the same type. More importantly, these coefficients are treated as trainable parameters17, which is crucial for efficiently differentiating different atom pairs contributing to the descriptor.

While the descriptor parameters {cIJ} depend on the atom types (species), the NN parameters w in NEP3 are the same for all the atom types. Therefore, as the number of atom types increases, the regression capacity of the NN model for each atom type decreases. To keep a constant regression capacity per atom type, in the present work, we employ different sets of NN parameters wI for each atom type I. While this increases the total number of trainable parameters, it does not significantly increase the computational cost during MD simulations with the trained model, because it only involves a selection of the correct set of NN parameters for a given atom. With the extension, the site energy can be expressed as

which constitutes the NEP4 model introduced in this work (Fig. 1a).

a Schematic illustration of the architecture of the neuroevolution potential version 4 (NEP4) model with distinct sets of neural network (NN) parameters for different atom types, A (yellow), B (green), and C (blue). For a central atom of type A, the descriptor qA involves the cAJ parameters (J can be of any type), while the weight and bias parameters wA are specific for type A. The hidden layer in each NN is represented by x. Similar rules apply to the central atoms of other types. The total energy U is the sum of the site energies for all the atoms in a given structure. By contrast, in neuroevolution potential version 3 (NEP3) all atom types share a common set of NN parameters w, which restricts the regression capacity. b Schematic illustration of the multi-loss evolutionary training algorithm. For example, in a 3-component system, the optimization of the parameters related to atom type A (including wA, cAA, cAB, and cAC) is only driven by a loss function defined using the structures with the chemical compositions of A, AB, and AC. In the conventional evolutionary algorithm, which is used in NEP3, a single loss function is used to optimize all parameters, which is less effective for training general-purpose models for many-component systems.

A multiple-loss evolutionary training algorithm for many-component systems

While the increase in the number of trainable parameters does not significantly affect the inference speed, it considerably increases the number of iterations required for training, particularly with the approach used for neuroevolution potential version 3 (NEP3). It turns out that the training algorithm must be modified to achieve better performance for many-element systems. For training NEP models, we use the separable natural evolution strategy (SNES) approach31, which is a powerful black-box optimization algorithm that is particularly suitable for problems with many possible solutions32. It maintains a mean value and a variance for each trainable parameter that is updated according to the rank of a population of solutions. The rank is determined according to the loss function to be minimized. The loss function L is constructed using predicted and reference data for energies, forces, and virials, and is a function of the trainable parameters, i.e.,

The rank (or “fitness”) is of crucial importance in evolutionary algorithms, as it determines the relative weight of a solution in the population. However, using a single loss function can lead to ambiguity in rank assignment: Even if the total loss of solution X is smaller than that of solution Y, it does not guarantee that solution X is more accurate for all the subsystems in a many-element system. For example, solution X might offer higher accuracy for Au systems but lower accuracy for Ag systems. To account for this observation, we define multiple loss functions for many-element systems. Since we are concerned with alloys, we cannot define a set of loss functions that have no common terms at all, but we can make a definition that minimizes the common parts. Naturally, we define the loss function for element I as the parts in Eq. (6) that are contributed by structures containing element I. For illustration, consider an explicit example with three elements, denoted A, B, and C, respectively. The loss function for element A can be calculated by considering the chemical compositions A, AB, and AC only, excluding B, C, and BC. This loss function is used when training the parameters related to element A, which are wA, cAA, cAB, and cAC (Fig. 1b). Using this multi-loss evolutionary algorithm, the training converges much faster than using a single-loss function. The efficiency improvement in training becomes more significant with an increasing number of elements, and is crucial for being able to develop models such as UNEP-v1.

Construction of training data for many-component systems based on chemical generalizability

The chemical space for 16 elements consists of 216 − 1 = 65535 chemical combinations, including 16 unaries, 120 binaries, 560 ternaries, etc. It is formidable to construct a training dataset by enumerating all the possible chemical combinations. Fortunately, leveraging the construction of the radial functions in terms of linear combinations of basis functions provides a solution. The descriptor values for a given configuration of n-component (n > 2) systems fall within the range spanned by those of the 1-component and 2-component systems derived from the same configuration by element substitution. Given the interpolation capabilities of NNs, a NEP model trained using 1-component and 2-component structures is expected to predict the behavior of n-component (n > 2) systems reasonably well. Therefore, our training dataset focused only on unary and binary systems.

For each unary or binary system, we constructed an initial training dataset with a few hundred structures. These structures included small cells with position and/or cell perturbations, cells with one to a few vacancies, cells with surfaces and various defects (such as grain boundaries) taken from the Materials Project33 and the Open Quantum Materials Database34, cells sampled from MD simulations based on an EAM potential35 at various temperature (up to 5000 K) and pressure conditions including highly deformed structures (see “Methods” for details). There are initially about 60000 structures in total for the 16 metals and their binary alloys. In spite of its seemingly modest size, this training dataset is remarkably diverse in configuration space. Reference data (energy, force, and virial) for the structures were generated via DFT calculations using the VASP package (see “Methods” for details).

The diversity of the initial training dataset ensured a robust initial NEP model that could be used to run MD and hybrid Monte Carlo and molecular dynamics (MCMD) simulations at various thermodynamic conditions. From diverse MD and MCMD trajectories generated by the initial NEP model, structures (still unary and binary only) were sampled and labeled using DFT calculations. Those with relatively large errors (NEP versus DFT) were identified and incorporated into the training set. This iterative process was repeated a few times until no large errors could be detected. This active-learning scheme, while simple, proved to be highly effective. The final training dataset contains 105464 structures and 6886241 atoms in total. The DFT calculations for these structures required about six million CPU hours.

Training and testing results

Using the refined training dataset, we trained a NEP model (see “Method” and Supplementary Note for details on the hyperparameters) using the NEP4 approach as described above. We refer to this NEP model as UNEP-v1, which represents the first attempt at constructing a unified NEP model for many elements.

The parity plots for energy, force, and stress affirm the high accuracy of this UNEP-v1 model (Fig. 2a–c). Despite the large ranges of the three quantities, their root-mean-square error(RMSEs) are relatively small, at 17.1 meV atom−1, 172 meV Å−1, and 1.16 GPa, respectively.

a–c Parity plots for energy, stress, and force comparing density functional theory (DFT) reference data and the first version of unified neuroevolution potential (UNEP-v1) predictions for the whole training dataset. In c there are three test datasets containing n-component (n ≥ 3) structures, including one with up to 13 components (Ag, Au, Cr, Cu, Mo, Ni, Pd, Pt, Ta, Ti, V, W, Zr) taken from Lopanitsyna et al.38 (labeled Test-1), one with up to four components (Mo, Ta, V, W) from Byggmästar et al.37 (labeled Test-2), and one with up to three components (Pd, Cu, Ni) from Zhao et al.36 (labeled Test-3). d, e Parity plots for formation energies comparing DFT reference data and predictions from UNEP-v1 (green circles), MACE-MP-0 (medium model, blue stars)40, and embedded-atom method (EAM) (orange triangles)35, for structures from the Materials Project (MP-ternary)33 and the GNoME paper39. Mean absolute error (MAE) and R2 (coefficient of determination) values are provided for comparison. f Distribution of the training dataset (this work, UNEP-v1, comprising 1-component to 2-component systems, blue) and various test datasets, including Test-1 (up to 13-component systems, orange)38, Test-2 (up to 4-component systems, yellow)37, Test-3 (up to 3-component systems, purple)36, MP-ternary alloys (3-component systems, red)33, and GNoME dataset (2-component to 5-component systems, green)39, in the 2D principal component (PC) space of the descriptor. Source data are provided as a Source Data file88.

To validate the force accuracy of our UNEP-v1 model we consider here three public datasets. Although the public datasets were not computed using exactly the same DFT settings as used for generating the UNEP-v1 training data, the resulting differences in force values are marginal (of the order of a few meV Å−1) and are much smaller than the force RMSE achieved by UNEP-v1 (Fig. 2c). The comparison moreover shows that the UNEP-v1 model trained against 1-component and 2-component structures also performs very well for 3-component36, 4-component37, and 13-component38 structures extracted from the datasets in the previous studies36,37,38. The testing RMSEs of the UNEP-v1 model for these three datasets are respectively 76 meV Å−1, 196 meV Å−1, and 269 meV Å−1, which are comparable to those reported as training RMSEs in the original publications36,37,38.

To validate the energy accuracy of our UNEP-v1 model we utilize two public datasets, including all the relevant 3-component structures in the Materials Project database33 and the structures predicted using the GNoME approach39 ranging from 2-component to 5-component systems with force components less than 80 eV Å−1. We calculate the formation energies using DFT, an EAM potential35, a foundation model named MACE-MP-0 (medium version)40, and our UNEP-v1 model, where the reference energy for each species is based on the most stable allotrope. For the two datasets, the mean absolute errors (MAEs) of our UNEP-v1 model compared to DFT calculations are 75 meV atom−1 and 60 meV atom−1, respectively (Fig. 2d, e). In contrast, the corresponding values from the EAM potential are 695 meV atom−1 and 1122 meV atom−1, respectively, and thus about one order of magnitude larger. For the Materials Project dataset, which MACE-MP-0 has been trained on while UNEP-v1 has not, MACE-MP-0 is slightly more accurate. However, for the GNoME dataset, on which neither model has been trained, UNEP-v1 demonstrates notably better accuracy.

Besides the Materials Project and GNoME datasets, Figures S1–S3 present parity plots for formation energies and forces predicted by UNEP-v1, EAM, and MACE-MP-0 compared to DFT for the three test datasets36,37,38. Figures S4–S17 show the formation energies, comparing UNEP-v1, EAM, and DFT for the equation of state curves (for alloys), heating, compressing, and stretching processes with 1 to 5-component materials in various crystalline structures, including face-centered cubic (FCC), body-centered cubic (BCC), hexagonal close packed (HCP), and metallic glasses. The results altogether clearly demonstrate the superior accuracy of UNEP-v1 over EAM and confirm the excellent generalizability of our UNEP-v1 model from the 1- and 2-component structures included in the training dataset to unseen multi-component structures.

As a further test, we trained a NEP model by including relevant n-component (n ≥ 3) structures from the Open Quantum Materials Database database34. The RMSEs for the three public datasets36,37,38 obtained using this NEP model are only marginally improved compared to UNEP-v1, which demonstrates that our training dataset with n-component (n ≤ 2) structures is already sufficient for training a general-purpose NEP model for all the considered elements and their alloys.

As mentioned earlier, our approach to training data generation relies on the chemical generalizability embedded in the radial functions Eq. (4). This feature is illustrated by a principal component analysis of the descriptor space (Fig. 2f), which shows that the various n-component (n ≥ 3) structures fall comfortably within the space spanned by the 1-component and 2-component training structures.

Evaluation of basic physical properties for the 16 metal elements

After having confirmed the high training accuracy of the UNEP-v1 model for 1-component and 2-component systems, and its high testing accuracy for systems with multiple components, we conducted an extensive evaluation of the UNEP-v1 model beyond RMSEs, focusing on various physical properties (see “Methods” for details on the calculations). Elastic constants Cij, surface formation energies γ, mono-vacancy formation energies Ev, melting points Tm, and phonon dispersion relations were calculated for all 16 elements, using both the UNEP-v1 model and an EAM potential35. While there are recent and possibly more accurate EAM potentials41 for a limited subset of species considered here, we have consistently opted for the widely used EAM potential developed by Zhou et al.35 because it supports all the 16 species and their alloys. Detailed results for phonon dispersion relations are presented in Figs. S18–S20, while other physical properties are listed in Tables S1–S4. Figure 3a–d show the parity plots comparing predictions of various basic properties from EAM and UNEP-v1 against DFT calculations or experimental values. The EAM predictions have some outliers, especially in the case of the surface formation energies, while the UNEP-v1 predictions do not show any notable discrepancies.

a–d Elastic constants Cij, formation energies γ for \(\left\{111\right\}\), \(\left\{110\right\}\), and \(\left\{100\right\}\) surfaces, mono-vacancy formation energies Ev, and melting points Tm as predicted by the embedded-atom method (EAM) potential35 (orange triangles) and the first version of unified neuroevolution potential (UNEP-v1) model (green circles) compared to density functional theory (DFT) or experimental values for the 16 elements. R2 (coefficient of determination) values are provided for comparison. e Mean absolute errors (MAEs) for the above four quantities as well as the phonon frequency ω for EAM (orange bars) and UNEP-v1 (green bars) models with respect to reference data from DFT calculations and experiment. Detailed phonon dispersion data for 16 elements are provided in Supplementary Figs. S18-20, comparing UNEP-v1, EAM, and DFT. Source data are provided as a Source Data file88.

The MAEs for all the evaluated quantities calculated by averaging the absolute error between predicted (EAM or UNEP-v1) and reference values (DFT or experimental) over all 16 elements are presented in Fig. 3e. UNEP-v1 consistently outperforms the EAM potential for all physical properties, and demonstrates a significant advantage in predicting surface formation energies, elastic constants, and vacancy formation energies.

We have additionally trained an ensemble of eight NEP models using different sets of training hyperparameters, and compared the predictions for bulk and shear moduli as well as the equilibrium volume for the ensemble to DFT reference data to estimate the uncertainty in the model predictions (Fig. 4a–c). Generally, the deviations in the predictions across the ensemble are small, and mostly agree well with the reference data. As a further illustration, we estimated the uncertainty in the phonon dispersion for Ag (Fig. 4d), illustrating the very small uncertainty throughout the entire Brillouin zone.

a–c Parity plots for NEP models versus density functional theory (DFT) data for bulk modulus, shear modulus, and equilibrium volume for roughly 400 different alloys of the 16 elements. The averaged values μ are shown with error bars computed as the standard deviation σ of the predicted properties over an ensemble of eight NEP models. The structures and reference DFT data were taken from the Materials Project33. d Phonon dispersion relations for face-centered cubic (FCC) Ag, calculated by averaging of all models in the ensemble. Source data are provided as a Source Data file88.

Computational performance

The computational efficiency of a MLP is crucial for its effective applications in large-scale MD simulations. Here, the UNEP-v1 model as implemented in GPUMD exhibits excellent computational performance (Table 1). Using a single Nvidia A100 GPU, UNEP-v1 can reach a simulation size of about 14 million atoms and a computational speed of 2.4 × 107 atom step s−1, which is only a few times lower than that for the EAM potential (11 × 107 atom step s−1) as implemented in LAMMPS42 using the same hardware. To reach even larger simulation sizes, we implemented a multi-GPU version of NEP that can effectively use the computational power of all the GPUs available on a computational node. With only 8 A100 GPUs, we can reach a simulation size of 100 million atoms, achieving much higher computational efficiency than either the deep potential (DP) (thousands of Nvidia V100 GPUs)43,44 or Allegro (128 A100 GPUs) approaches45.

With 8 A100 GPUs, the overall computational speed of UNEP-v1 is about 1.5 × 108 atom step s−1. The parallel efficiency relative to ideal scaling for UNEP-v1 with 8 A100 GPUs is 80%, while it is only about 50% for EAM with 4 A100 GPUs. The speed per GPU achieved by UNEP-v1 is significantly higher than those for the DP43,44 and Allegro approaches45. The excellent computational speed of UNEP-v1 allows us to tackle challenging problems in multi-principal-element alloys (MPEAs) as discussed below.

Application to plasticity of multi-principal element alloys

Refractory MPEAs have emerged as materials for high-temperature applications, crystallizing typically in the BCC solid solution phase. These alloys exhibit exceptional properties such as high ductility and mechanical strength at ultra-high temperature46,47,48,49 as well as impressive irradiation resistance50,51. However, their ductility at room temperature is limited52,53. Recent experimental observations in alloys such as HfNbTaTiZr have revealed the presence of numerous straight screw dislocations and a substantial amount of dislocation debris53,54, consistent with known behavior in BCC metals55. Recent MD simulations have also indicated the possible crucial role of dislocation in the plastic flow of MPEAs56,57,58. Despite these insights, the complex structural and mechanical properties of MPEAs remain incompletely understood. Here, atomistic simulations employing accurate and efficient MLPs can provide further insights into the intricate behavior of these materials. Although there are a few available MLPs limited to specific alloys56,57,58, a comprehensive general-purpose potential model capable of encompassing a wide range of elements and their alloys, providing both high efficiency and accuracy and enabling large-scale (up to millions of atoms) MD simulations of BCC MPEAs, is still lacking.

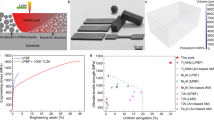

The UNEP-v1 model developed in this work emerges as a promising solution, enabling large-scale MD simulations of MPEAs with an accuracy superior to existing models while still achieving very high computational efficiency. To demonstrate its effectiveness in this context, we investigated the mechanism of plastic deformation of a MoTaVW alloy under compression. Our evaluation of the UNEP-v1 model involved comprehensive tests, including checking the vacancy formation energies (Fig. 5a) in equimolar MoTaVW alloys, Peierls barriers for the 1/2〈111〉 screw dislocation (Fig. 5b) in elemental systems as well as atomic forces in melting (Fig. 5c), compression and tensile stretching processes (Fig. S22) of equimolar MoTaVW alloys. The results illustrate the superior performance of UNEP-v1 compared to EAM potentials and its suitability for studying structural and mechanical properties in large-scale MD simulations.

a Mono-vacancy formation energies from the first version of unified neuroevolution potential (UNEP-v1) and embedded-atom method (EAM)35 compared to density functional theory (DFT) data for an equimolar MoTaVW alloy with 128 atoms sampled from hybrid Monte Carlo and molecular dynamics simulations. Mean absolute error (MAE) and R2 (coefficient of determination) values are provided for comparison. b Peierls barrier for 1/2〈111〉 screw dislocation migration in elemental W (see Fig. S21 for the other three species). c Comparisons of UNEP-v1, EAM35, and DFT results for equimolar MoTaVW alloys sampled from molecular dynamics simulations using 256-atom supercells for a melting process from 10 to 5000 K during 10 ns. UNEP-v1 shows much better predictions than EAM, with a much smaller force MAE as indicated in the legend (see Fig. S22 for similar comparisons for deformation processes). Source data are provided as a Source Data file88.

After having confirmed the accuracy, efficiency, and reliability of our NEP model, we modeled an equimolar BCC polycrystalline MoTaVW system containing 100205176 atoms and conducted MD simulations to investigate changes in dislocation density under compression. These simulations involved compressive deformation at a strain rate of 4.2 × 108 s−1 (see “Methods” for simulation details). The dislocation density decreases during the elastic stage, reaches a minimum at the yield strain ϵ = 6%, and gradually returns to the original level due to enhanced densification (Fig. 6a). The dislocation density plateaus for large strains (ϵ ≥ 16%), consistent with the behavior observed in BCC Ta59. It is noteworthy that stress-strain response and dislocation density exhibit contrasting trends under compression.

Dislocation density as a function of compressive strain for equimolar polycrystalline MoTaVW alloy containing 12 grains with 100 million atoms at 300 K. a Strain-induced dislocation density (red solid line) and stress (blue dashed line). b–e Distributions of dislocation in 20 nm thick slices at strains of (b) ϵ = 0%, (c) ϵ = 2.5%, (d) ϵ = 6%, and (e) ϵ = 20%, respectively. Grain boundaries are labeled by numbers for reference. The compressed direction is perpendicular to the plane of view. The 1/2〈111〉 dislocations are depicted in green, while other dislocations are shown in red. Source data are provided as a Source Data file88. The initial and final molecular dynamics configurations are provided in the Supplementary Data90.

To gain deeper insight into the plastic deformation mechanisms, we extracted the distribution of the dislocation density in snapshots of the polycrystalline MoTaVW system at selected strains (Fig. 6b–e). Notably, all dislocations are confined to grain boundaries of the polycrystalline system under compression, and this pattern remains unchanged throughout the linear response ("elastic”) region of the stress-strain curve (Fig. 6b–c). It is worth noting that dislocations transform from other types (labeled 1 and 2 in Fig. 6b) to 1/2〈111〉 ones (Fig. 6c) in the elastic region (0 − 2.5%), and recover back at the yield strain of 6% (Fig. 6d). Subsequently, during the plastic stage (Fig. 6d–e), some of the grain boundaries begin to emit, slip, and pin dislocations into the grains along with boundary movement. This finding demonstrates the significant impacts of boundary stability on the hardness of MPEAs, as previously observed in the study of a NiMo alloy60.

Through 100-million-atom large-scale MD simulations, we have thus illuminated the intricate details of plastic deformation, shedding light on dislocation behavior in grain boundaries. This application of our UNEP-v1 model to the plasticity of MPEAs, exemplified by the MoTaVW alloy, is an important demonstration for the generality and high computational efficiency of our approach.

Application to primary radiation damage in MPEAs

Next, we demonstrate the versatility of the UNEP-v1 model through large-scale MD simulations of primary radiation damage in MPEAs, using again the MoTaVW alloy system for illustration (see “Methods” for details). Here, in order to accurately describe interactions at short distances where large forces are at play, we incorporated a two-body Ziegler-Biersack-Littmark (ZBL) potential61 to train a combined NEP-ZBL model62. The UNEP-v1 part and the ZBL part are smoothly connected in the range between 1 and 2 Å. Above 2 Å, only the UNEP-v1 part is active, while below 1 Å, the ZBL part dominates. Illustrative examples demonstrating the seamless connection between UNEP-v1 and ZBL for Al and W dimers are presented in Fig. S23.

Figure 7 a shows the defect snapshot of the peak-damage state formed at about 0.6 ps with a primary knock-on atom energy of 100 keV. The defect distribution stabilizes after a few tens of ps. Figure 7b shows the stable defect distribution at 140 ps, revealing 121 residual point defects, including vacancies and interstitial atoms. The maximum cluster sizes for vacancies and interstitials are 15 and 11, respectively. In comparison, a previous study62 on elemental W at similar simulation conditions reported 183 residual point defects with a maximum defect-cluster size exceeding 200 atoms. The MPEA thus features fewer defects and smaller defect clusters. Our simulation results are consistent with the experimental study of a similar tungsten-based refractory MPEA, which exhibits exceptional radiation resistance, negligible radiation hardening, and no evidence of radiation-induced dislocation loops even at a high dose level50.

Defect snapshots of a cascade in a MoTaVW alloy at (a) the peak damage state (at about 0.6 ps) and (b) the final damage state (at 140 ps). The red and blue dots represent interstitial atoms and vacancies, respectively. The initial and final molecular dynamics configurations are provided in the Supplementary Data90.

The enhanced radiation resistance of the tungsten-based refractory MPEAs could be attributed to the increased chemical complexity, leading to cascade splitting, as depicted in Fig. 7a. Cascade splitting results in the formation of smaller defect clusters and a more dispersed distribution of isolated (non-clustered) point defects. This specific application of our UNEP-v1 model to study primary radiation damage through extensive MD simulations involving 16 million atoms provides further evidence of the generality and high efficiency of our approach. However, more detailed investigations are necessary to comprehensively characterize and understand the role of alloying in influencing radiation resistance.

Comparisons between UNEP-v1 and EAM models in MD and MCMD simulations

Finally, we showcase the reliability of UNEP-v1 in large-scale MD and MCMD (see “Methods”) simulations across three applications, providing close comparisons with EAM results and experimental data.

In the first application, we use UNEP-v1 to perform MD simulations for the recently synthesized goldene63, a monolayer form of gold that is not explicitly included in the training dataset. The stable configuration of goldene features a triangular lattice. We first construct a rectangular cell with 1800 atoms and then performed MD simulations in the isothermal-isobaric ensemble with a target temperature of 300 K and a target in-plane pressure of 0 GPa. Figure 8a shows that UNEP-v1 maintains the stability of the goldene sheet at 300 K, exhibiting out-of-plane ripples typical for two-dimensional materials. In contrast, Fig. 8b–d shows that the monolayer structure of goldene cannot be maintained by EAM potentials from the literature, which include the one used for most benchmarks35 and two more recent ones41,64. The results here demonstrate that the UNEP-v1 model has good generalizability in the configuration space.

a–d Snapshots of structures from molecular dynamics (MD) simulations of 1 ns in the isothermal-isobaric ensemble with a target temperature of 300 K and a target in-plane pressure of 0 GPa, starting with a flat monolayer of goldene, using the first version of unified neuroevolution potential (UNEP-v1) and the embedded-atom method (EAM) potentials by Zhou et al.35, Olsson64, and Sheng et al.41 The results demonstrate that UNEP-v1 can maintain goldene’s 2D structure at ambient temperature and pressure, while the three EAM potentials cannot. e Initial structure of a γ-Ni and \({\gamma }^{{\prime} }\)-Ni3Al superlattice with a random Mo distribution. f–g Snapshots of the final equilibrium Mo distributions from hybrid Monte Carlo and molecular dynamics (MCMD) simulations using UNEP-v1 and EAM models35. UNEP-v1 correctly reproduces the final ratio of the Mo concentration in \({\gamma }^{{\prime} }\)-Ni3Al to that in γ-Ni (\({K}^{{\gamma }^{{\prime} }/\gamma }=0.667\)), in good agreement with experimental observations, while the EAM potential by Zhou et al.35 gives a value (\({K}^{{\gamma }^{{\prime} }/\gamma }=4.981\)) contradicting experimental trend. h Initial face-centered cubic (FCC) structure of Al0.31Cr0.06Cu0.22Ni0.32V0.09. i, j Snapshots of the final equilibrium structures from MCMD simulations using UNEP-v1 and EAM models35. UNEP-v1 successfully produces both disordered (A2) and ordered (B2) body-centered cubic (BCC) structures in full agreement with experiments67. In contrast, EAM potential by Zhou et al.35 keeps the system in the FCC structure, unable to reproduce the experimentally expected BCC structure. (See Fig. S24 for similar results for Al0.20Cr0.12Cu0.19Ni0.35V0.14. The initial and final MD configurations are provided in the Supplementary Data90).

In the second application, we use MCMD simulations to study the Mo distribution in a superlattice structure formed by γ-Ni and \({\gamma }^{{\prime} }\)-Ni3Al. Starting from a uniform Mo distribution with an overall Mo concentration of 8.1% (Fig. 8e), the final ratio of the Mo concentration in \({\gamma }^{{\prime} }\)-Ni3Al to that in γ-Ni is \({K}^{{\gamma }^{{\prime} }/\gamma }=0.667\) according to our UNEP-v1 model (Fig. 8f). This agrees well with experimental observations indicating \({K}^{{\gamma }^{{\prime} }/\gamma } < 1\) when the initial Mo concentration is above approximately 6%65,66. In contrast, the EAM potential by Zhou et al.35 gives a value of \({K}^{{\gamma }^{{\prime} }/\gamma }=4.981\) (Fig. 8g), which contradicts the experimental trend.

In the third application, we use MCMD simulations to reproduce the experimentally expected BCC structure in the Al-rich intermetallic Al0.31Cr0.06Cu0.22Ni0.32V0.09, despite the presence of a large fraction of FCC metals, starting from an initial FCC structure (Fig. 8h). Our UNEP-v1 model successfully produces both disordered (A2) and ordered (B2) BCC structures (Fig. 8i) in full agreement with experiments67. In contrast, the EAM potential by Zhou et al.35 keeps the system in the FCC structure (Fig. 8j). Similar results for Al0.20Cr0.12Cu0.19Ni0.35V0.14 are shown in Fig. S24, further demonstrating the superior reliability of UNEP-v1 over the EAM model. Finally, in Fig. S25, we illustrate that the equimolar TiZrVMo and TiZrVMoTa alloys correctly transform to BCC structures from HCP structures during MCMD simulations with UNEP-v1, in excellent agreement with experimental observations68. This indicates that the UNEP-v1 model, trained on 1-component and 2-component structures, can correctly capture phase transitions occurring in multi-element alloys.

Discussion

In summary, we have developed an advanced NEP approach capable of constructing accurate and efficient general-purpose MLPs for numerous elements and their alloys. Two crucial extensions have been made compared to previous NEP versions. Firstly, we employed distinct NNs for each species, ensuring consistent regression capacity even as the number of species grows. Secondly, we introduced multiple loss functions to optimize different subsets of the parameters, crucially accelerating the training process when using evolutionary algorithms with a large number of trainable parameters. We expect that this concept can more generally boost the application of evolutionary algorithms in solving complex optimization problems.

A pivotal insight driving the success of this approach is the recognition that chemical (species) information can be embedded in the trainable expansion coefficients of radial functions, dependent only on atom pairs and basis functions. As a result, the 1-component and 2-component structures delineate an outer boundary in descriptor space, while n-component structures with n≥3 represent interpolation points in this space. Leveraging the exceptional interpolation capabilities of NNs, a NEP model trained solely with 1-component and 2-component structures performs very well for n-component structures with n ≥ 3, provided the configuration space has been sufficiently explored. The effectiveness of this approach has been demonstrated through accurate predictions of formation energies across various multi-component alloys, as well as reproduction of experimentally observed chemical order and stable phases, using our UNEP-v1 model.

While the current study focuses on 16 elements, our approach lays the groundwork for potentially extending NEP models across the entire periodic table. The primary challenge resides in the generation of the reference data, typically via DFT calculations, rather than the regression capabilities of the NEP model. Notably, our approach is also sustainable. Starting from our existing training set for 16 elements, one merely needs to include structures involving 17 chemical compositions (one 1-component and 16 2-component systems) to form a comprehensive training set for 17 elements. This method is far more economical than building an entirely new training set from scratch. Beyond extending the chemical space, one can also broaden the configuration space for existing chemical compositions, through established active-learning approaches, especially with the aid of structure searching methods69.

The successful applications of the UNEP-v1 model in studying plasticity and primary radiation damage in the MoTaVW refractory MPEAs demonstrate the versatility and robustness of the NEP4 approach in general and the UNEP-v1 model in particular, establishing its significant potential for in-depth explorations and insights into the intricate behavior of complex materials such as MPEAs.

In conclusion, our study demonstrates the promise of our approach in constructing a unified general-purpose MLP for 16 species with remarkable computational efficiency, taking full advantage of the embedded chemical generalizability, the interpolation capabilities of NNs and an advanced multiple-loss evolutionary training algorithm for many-component systems. By successfully developing a highly accurate and efficient MLP for a diverse range of elemental metals and alloys, our study showcases the versatility and applicability of our approach across various materials. These advancements mark a significant leap forward in enhancing the practical applications of MLPs in materials modeling, offering opportunities for more accurate, efficient, and predictive computer simulations in materials research.

Methods

MD simulations for training structure generation

To create the initial training structures, we used the LAMMPS package (23 Jun 2022)42 to run MD simulations with cells ranging from 32 to 108 atoms. For each 1-component or 2-component system, we ran MD simulations in the isothermal-isobaric ensemble (zero target pressure) using the EAM potential35 at 9 temperatures (50, 300, 800, 1300, 1700, 2300, 3000, 4000, and 5000 K), each for 2 ns. For each MD run, we sampled 5 structures. For each structure, we made three copies, one with a subsequent box scaling of 95%, one with 105%, and one with 5% (random) box perturbation. We also ran MD simulations at 300 K with tensile or compressing loading with a strain rate of 2 × 108 s−1 for 1 to 2 ns and uniformly sampled 35 structures.

DFT calculations for reference data generation

After preparing the initial training structures, we performed quantum-mechanical calculations to obtain reference data, including the energy and virial for each structure and the force on each atom in each structure. DFT calculations as implemented in VASP70 were performed to generate reference data. The INCAR file for VASP is presented in Supplementary Note S1.

We used the projector augmented wave method71,72, the PBE functional73, an energy cutoff of 600 eV, a Γ-centered k-point mesh with a spacing of 0.2 Å−1, and a threshold of 10−6 eV for the electronic self-consistent loop. We used the blocked Davidson iteration scheme for electronic minimization. The PREC tag in the VASP input file was set to Accurate to ensure accurate forces. A Gaussian smearing with a smearing width of 0.02 eV was used. The Gaussian smearing is not the best choice for elemental metals and their alloys but we chose this in view of possible future extension of our approach to the whole periodic table. Our settings can ensure a convergence of the energy to 1 meV atom−1 for all the materials. In our DFT calculations, we did not consider magnetism, consistent with previous works36,38. We have tested that modeling Ni and Cr as ferromagnetic does not change the energy ordering for the major phases, see Fig. S26. While a proper account of magnetism might help to improve the quality of the DFT results and the resulting potential, it would significantly complicate the training database and require extensive additional computational resources.

The NEP training hyperparameters

We used GPUMD v3.9.374 to train the UNEP-v1 model, which is a NEP4 model as introduced in this paper. The details of the nep.in input file we used and the SNES-based multi-loss training algorithm can be found in Supplementary Note S2.

The cutoff radii for radial and angular descriptor parts are 6 Å and 5 Å, respectively. For both the radial and the angular descriptor components, we used 5 radial functions constructed from a linear combination of 9 basis functions. The descriptor vector for one element thus has 5 + 5 × 6 = 35 components. There are 80 neurons used in the hidden layer and the NN architecture for each element can be written as 35-80-1, corresponding to 2960 trainable parameters. For each pair of elements, there are 5 × 9 + 5 × 9 = 90 trainable descriptor parameters. The total number of trainable parameters in the UNEP-v1 model for 16 elements is thus 2960 × 16 + 90 × 162 + 1 = 70401, where a global bias (shifting) parameter is included. The training was performed with a batch size of 10000 structures for 1000000 generations (steps), which took about ten days using four A100 GPUs.

Calculations of basic physical properties

To evaluate the reliability of the UNEP-v1 model in molecular statics and MD simulations, we calculated a set of relevant static and dynamic material properties, with a close comparison with EAM35, DFT (if available or affordable)75,76, and experiments (if available). Energetics, elastic properties, and phonon dispersion relations were calculated with the help of GPUMD-WIZARD77, ASE78, PYNEP18, CALORINE79, and PHONOPY80 packages. Melting points were calculated using the two-phase method as implemented in GPUMD18 for UNEP-v1 and LAMMPS42 for EAM, and are compared to experimental values81. Vacancy formation energies were evaluated using 4 × 5 × 6 supercells. The formation energies of free surfaces were evaluated with 2 × 2 × 10 supercells (taking a surface perpendicular to z as an example here). The uncertainties in the predictions for the bulk and shear moduli and volume per atom, over different ensemble models, were estimated as the standard deviation using CALORINE79.

MD simulations for plasticity of MPEAs

We used the UNEP-v1 model to drive MD calculations of the plasticity of MPEAs under compression using the GPUMD package18,19. First, we used the Voronoi algorithm implemented in ATOMSK82 to build our initial MoTaVW polycrystalline MPEA model by removing overlapping atoms at boundaries. The model is composed of 12 grains with sizes ranging from 96 nm3 to 195 nm3, and contains 100 205 176 atoms which randomly occupy a BCC lattice at equimolar ratios. The initial MoTaVW model was further relaxed by MD simulations for 500 ps in the isothermal-isobaric ensemble at 300 K and 0 GPa using the Bernetti-Bussi barostat83 and Bussi-Donadio-Parrinello thermostat84. Finally we simulated uniaxial compressive deformation with a constant engineering strain rate of 4.2 × 108 s−1. The time step was kept fixed at 1 fs. The 2D visualization of dislocations perpendicular to the compressive axis was rendered using the OVITO package85.

MD simulations for primary radiation damage

The MD simulations of the displacement cascade in MoTaVW were performed using the GPUMD package18,19 with the UNEP-v1 model and a repulsive two-body ZBL-like potential61. A periodic cubic simulation cell with 16000000 atoms was constructed by creating a random mixture of the Mo, Ta, V, and W atoms with equimolar ratio in a BCC crystal. We equilibrated this system in the isothermal-isobaric ensemble for 30 ps, with a target temperature of 300 K and a target pressure of 0 GPa. A primary knock-on atom with an energy of 100 keV moving in the high-index direction 〈135〉 (to avoid channeling effects) was then created at the center of the simulation cell. Atoms within a thickness of three lattice constants of the boundaries were maintained at 300 K. The integration time step had an upper limit of 1 fs and was dynamically determined so that the fastest atom could move at most 0.015 Å (less than 0.5% of the lattice constant) within one step. The total number of steps is 200000, corresponding to 140 ps. Electronic stopping86 was applied as a frictional force on atoms with a kinetic energy over 10 eV. We used the OVITO package85 for defect analysis and visualization. The interstitials and vacancies were identified by using the Wigner-Seitz cell method. The defects were grouped into clusters: two vacancies were considered to be in the same cluster if the distance between them was within the second-nearest-neighbor distance, while the third-nearest-neighbor distance was used to identify self-interstitial clusters.

MCMD simulations for multi-component alloys

We utilized MCMD simulations in the canonical MC ensemble (involving swapping atoms of different species)87 in the study of Mo distribution in a superlattice structure formed by γ-Ni and \({\gamma }^{{\prime} }\)-Ni3Al (108000-atom supercell), the FCC-to-BCC transformation in Al-rich alloys (Al0.31Cr0.06Cu0.22Ni0.32V0.09 and Al0.20Cr0.12Cu0.19Ni0.35V0.14, with 4000-atom supercell), and the HCP-to-BCC transformation in the equimolar TiZrVMo and TiZrVMoTa alloys (1600-atom supercell). During these MCMD simulations, MC trials are attempted 2000 times after every 1000 MD steps, totaling about 106 MD steps to reach equilibrium, at which the MC acceptance ratio is close to zero.

Data availability

Source data are provided with this paper, deposited in the Zenodo repository https://doi.org/10.5281/zenodo.1395722988. The training and test datasets in extended XYZ format and the trained NEP models have been deposited in the Zenodo repository https://doi.org/10.5281/zenodo.1153386489. The supplementary data for initial and final MD configurations have been deposited in the Zenodo repository https://doi.org/10.5281/zenodo.1395186890.

Code availability

The source code for GPUMD (version 3.9.3) is available at the Zenodo repository https://doi.org/10.5281/zenodo.1112233974 and the Github repository https://github.com/brucefan1983/GPUMD/releases/tag/v3.9.3. The source code for CALORINE (version 2.2.1) is available at the Zenodo repository https://doi.org/10.5281/zenodo.1072337491. The source code for GPUMD-WIZARD (version 1.0) is available at the Zenodo repository https://doi.org/10.5281/zenodo.1394862777. The source code for PYNEP (version 1.0.0) is available at the Zenodo repository https://doi.org/10.5281/zenodo.1395380392.

References

Daw, M. S. & Baskes, M. I. Embedded-atom method: derivation and application to impurities, surfaces, and other defects in metals. Phys. Rev. B 29, 6443 (1984).

Finnis, M. W. & Sinclair, J. E. A simple empirical n-body potential for transition metals. Philos. Mag. A 50, 45 (1984).

Behler, J. Perspective: Machine learning potentials for atomistic simulations. J. Chem. Phys. 145, 170901 (2016).

Deringer, V. L., Caro, M. A. & Csányi, G. Machine learning interatomic potentials as emerging tools for materials science. Adv. Mater. 31, 1902765 (2019).

Mueller, T., Hernandez, A. & Wang, C. Machine learning for interatomic potential models. J. Chem. Phys. 152, 050902 (2020).

Noé, F., Tkatchenko, A., Müller, K.-R. & Clementi, C. Machine learning for molecular simulation. Annu. Rev. Phys. Chem. 71, 361 (2020).

Mishin, Y. Machine-learning interatomic potentials for materials science. Acta Materialia 214, 116980 (2021).

Unke, O. T. et al. Machine learning force fields. Chem. Rev. 121, 10142 (2021).

Shapeev, A. V. Moment tensor potentials: a class of systematically improvable interatomic potentials. Multiscale Modeling Simul. 14, 1153 (2016).

Drautz, R. Atomic cluster expansion for accurate and transferable interatomic potentials. Phys. Rev. B 99, 014104 (2019).

Thompson, A., Swiler, L., Trott, C., Foiles, S. & Tucker, G. Spectral neighbor analysis method for automated generation of quantum-accurate interatomic potentials. J. Computational Phys. 285, 316 (2015).

Behler, J. & Parrinello, M. Generalized Neural-Network Representation of High-Dimensional Potential-Energy Surfaces. Phys. Rev. Lett. 98, 146401 (2007).

Bartók, A. P., Payne, M. C., Kondor, R. & Csányi, G. Gaussian approximation potentials: the accuracy of quantum mechanics, without the electrons. Phys. Rev. Lett. 104, 136403 (2010).

Batzner, S. et al. E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials. Nat. Commun. 13, 2453 (2022).

Batatia, I., Kovacs, D. P., Simm, G., Ortner, C. & Csanyi, G. MACE: Higher Order Equivariant Message Passing Neural Networks for Fast and Accurate Force Fields, in https://proceedings.neurips.cc/paper_files/paper/2022/file/4a36c3c51af11ed9f34615b81edb5bbc-Paper-Conference.pdf. Advances in Neural Information Processing Systems, Vol. 35, edited by Koyejo, S., Mohamed, S., Agarwal, A., Belgrave, D., Cho, K. & Oh, A. 11423–11436 (Curran Associates, Inc., 2022).

Fan, Z. et al. Neuroevolution machine learning potentials: Combining high accuracy and low cost in atomistic simulations and application to heat transport. Phys. Rev. B 104, 104309 (2021).

Fan, Z. Improving the accuracy of the neuroevolution machine learning potential for multi-component systems. J. Phys.: Condens. Matter 34, 125902 (2022).

Fan, Z. et al. Gpumd: A package for constructing accurate machine-learned potentials and performing highly efficient atomistic simulations. J. Chem. Phys. 157, 114801 (2022).

Fan, Z., Chen, W., Vierimaa, V. & Harju, A. Efficient molecular dynamics simulations with many-body potentials on graphics processing units. Computer Phys. Commun. 218, 10 (2017).

Takamoto, S., Izumi, S. & Li, J. Teanet: Universal neural network interatomic potential inspired by iterative electronic relaxations. Computational Mater. Sci. 207, 111280 (2022).

Takamoto, S. et al. Towards universal neural network potential for material discovery applicable to arbitrary combination of 45 elements. Nat. Commun. 13, 1 (2022).

Chen, C. & Ong, S. P. A universal graph deep learning interatomic potential for the periodic table. Nat. Computational Sci. 2, 718 (2022).

Bartók, A. P., Kermode, J., Bernstein, N. & Csányi, G. Machine Learning a General-Purpose Interatomic Potential for Silicon. Phys. Rev. X 8, 041048 (2018).

Rowe, P., Deringer, V. L., Gasparotto, P., Csányi, G. & Michaelides, A. An accurate and transferable machine learning potential for carbon. J. Chem. Phys. 153, 034702 (2020).

Jana, R. & Caro, M. A. Searching for iron nanoparticles with a general-purpose Gaussian approximation potential. Phys. Rev. B 107, 245421 (2023).

Kloppenburg, J., Pártay, L. B., Jónsson, H. & Caro, M. A. A general-purpose machine learning Pt interatomic potential for an accurate description of bulk, surfaces, and nanoparticles. J. Chem. Phys. 158, 134704 (2023).

Artrith, N., Urban, A. & Ceder, G. Efficient and accurate machine-learning interpolation of atomic energies in compositions with many species. Phys. Rev. B 96, 014112 (2017).

Thorn, A., Gochitashvili, D., Kharabadze, S. & Kolmogorov, A. N. Machine learning search for stable binary Sn alloys with Na, Ca, Cu, Pd, and Ag. Phys. Chem. Chem. Phys. 25, 22415 (2023).

Erhard, L. C., Rohrer, J., Albe, K. & Deringer, V. L. Modelling atomic and nanoscale structure in the silicon–oxygen system through active machine learning. Nat. Commun. 15, 1927 (2024).

Pozdnyakov, S. N. et al. Incompleteness of atomic structure representations. Phys. Rev. Lett. 125, 166001 (2020).

Schaul, T., Glasmachers, T. & Schmidhuber, J. High Dimensions and Heavy Tails for Natural Evolution Strategies, in https://doi.org/10.1145/2001576.2001692. Proceedings of the 13th Annual Conference on Genetic and Evolutionary Computation, GECCO ’11. 845–852 (Association for Computing Machinery, New York, NY, USA, 2011).

Wierstra, D. et al. Natural evolution strategies. J. Mach. Learn. Res. 15, 949 (2014).

Jain, A. et al. The materials project: a materials genome approach to accelerating materials innovation. APL Mater. 1, 011002 (2013).

Kirklin, S. et al. The Open Quantum Materials Database (OQMD): assessing the accuracy of DFT formation energies. npj Computational Mater. 1, 15010 (2015).

Zhou, X. W., Johnson, R. A. & Wadley, H. N. G. Misfit-energy-increasing dislocations in vapor-deposited CoFe/NiFe multilayers. Phys. Rev. B 69, 144113 (2004).

Zhao, R. et al. Development of a neuroevolution machine learning potential of Pd-Cu-Ni-P alloys. Mater. Des. 231, 112012 (2023).

Byggmästar, J., Nordlund, K. & Djurabekova, F. Simple machine-learned interatomic potentials for complex alloys. Phys. Rev. Mater. 6, 083801 (2022).

Lopanitsyna, N., Fraux, G., Springer, M. A., De, S. & Ceriotti, M. Modeling high-entropy transition metal alloys with alchemical compression. Phys. Rev. Mater. 7, 045802 (2023).

Merchant, A. et al. Scaling deep learning for materials discovery. Nature 624, 80 (2023).

Batatia, I. et al. A foundation model for atomistic materials chemistry, Preprint at, https://arxiv.org/abs/2401.00096 (2024).

Sheng, H. W., Kramer, M. J., Cadien, A., Fujita, T. & Chen, M. W. Highly optimized embedded-atom-method potentials for fourteen fcc metals. Phys. Rev. B 83, 134118 (2011).

Thompson, A. P. et al. LAMMPS - a flexible simulation tool for particle-based materials modeling at the atomic, meso, and continuum scales. Computer Phys. Commun. 271, 108171 (2022).

Jia, W. et al. Pushing the Limit of Molecular Dynamics with Ab Initio Accuracy to 100 Million Atoms with Machine Learning, in Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, SC ’20 (IEEE Press, 2020).

Guo, Z. et al. Extending The Limit Of Molecular Dynamics With Ab Initio Accuracy To 10 Billion Atoms, in https://doi.org/10.1145/3503221.3508425Proceedings of the 27th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, PPoPP ’22, 205–218 (Association for Computing Machinery, New York, NY, USA, 2022).

Musaelian, A. et al. Learning local equivariant representations for large-scale atomistic dynamics. Nat. Commun. 14, 579 (2023).

Senkov, O., Gorsse, S. & Miracle, D. High temperature strength of refractory complex concentrated alloys. Acta Materialia 175, 394 (2019).

George, E. P., Raabe, D. & Ritchie, R. O. High-entropy alloys. Nat. Rev. Mater. 4, 515 (2019).

Coury, F. G., Kaufman, M. & Clarke, A. J. Solid-solution strengthening in refractory high entropy alloys. Acta Materialia 175, 66 (2019).

Shi, P. et al. Enhanced strength-ductility synergy in ultrafine-grained eutectic high-entropy alloys by inheriting microstructural lamellae. Nat. Commun. 10, 489 (2019).

El-Atwani, O. et al. Outstanding radiation resistance of tungsten-based high-entropy alloys. Sci. Adv. 5, eaav2002 (2019).

El Atwani, O. et al. A quinary WTaCrVHf nanocrystalline refractory high-entropy alloy withholding extreme irradiation environments, Nature Commun. 14, 2516 (2023).

Senkov, O. N., Miracle, D. B., Chaput, K. J. & Couzinie, J.-P. Development and exploration of refractory high entropy alloys—a review. J. Mater. Res. 33, 3092–3128 (2018).

Couzinié, J.-P. & Dirras, G. Body-centered cubic high-entropy alloys: from processing to underlying deformation mechanisms. Mater. Charact. 147, 533 (2019).

Lilensten, L. et al. Study of a bcc multi-principal element alloy: tensile and simple shear properties and underlying deformation mechanisms. Acta Materialia 142, 131 (2018).

Caillard, D. & Martin, J.-L. Thermally Activated Mechanisms In Crystal Plasticity. (Elsevier, 2003).

Li, X.-G., Chen, C., Zheng, H., Zuo, Y. & Ong, S. P. Complex strengthening mechanisms in the NbMoTaW multi-principal element alloy. npj Computational Mater. 6, 70 (2020).

Yin, S. et al. Atomistic simulations of dislocation mobility in refractory high-entropy alloys and the effect of chemical short-range order. Nat. Commun. 12, 4873 (2021).

Zheng, H. et al. Multi-scale investigation of short-range order and dislocation glide in MoNbTi and TaNbTi multi-principal element alloys. npj Computational Mater. 9, 89 (2023).

Zepeda-Ruiz, L. A., Stukowski, A., Oppelstrup, T. & Bulatov, V. V. Probing the limits of metal plasticity with molecular dynamics simulations. Nature 550, 492 (2017).

Hu, J., Shi, Y., Sauvage, X., Sha, G. & Lu, K. Grain boundary stability governs hardening and softening in extremely fine nanograined metals. Science 355, 1292 (2017).

Ziegler, J. F. & Biersack, J. P. The Stopping And Range Of Ions In Matter, in https://doi.org/10.1007/978-1-4615-8103-1_3. Treatise on Heavy-Ion Science: 6, Astrophysics, Chemistry, and Condensed Matter, edited by Bromley, D. A. 93–129 (Springer US, Boston, MA, 1985).

Liu, J., Byggmästar, J., Fan, Z., Qian, P. & Su, Y. Large-scale machine-learning molecular dynamics simulation of primary radiation damage in tungsten. Phys. Rev. B 108, 054312 (2023).

Kashiwaya, S. et al. Synthesis of goldene comprising single-atom layer gold, https://doi.org/10.1038/s44160-024-00518-4Nat. Synth. 3, 744–751 (2024).

Olsson, P. A. T. Transverse resonant properties of strained gold nanowires. J. Appl. Phys. 108, 034318 (2010).

Tu, Y., Mao, Z. & Seidman, D. N. Phase-partitioning and site-substitution patterns of molybdenum in a model Ni-Al-Mo superalloy: An atom-probe tomographic and first-principles study. Appl. Phys. Lett. 101, 121910 (2012).

Jia, C., Ishida, K. & Nishizawa, T. Partition of alloying elements between γ(a1), \(\gamma {\prime}\)(l12), and β(b2) phases in ni-al base systems. Metall. Mater. Trans. A 25, 473 (1994).

Yi, J. et al. A novel Al0.5CrCuNiV 3d transition metal high-entropy alloy: Phase analysis, microstructure and compressive properties. J. Alloy. Compd. 846, 156466 (2020).

Mu, Y. et al. An ab initio and experimental studies of the structure, mechanical parameters and state density on the refractory high-entropy alloy systems. J. Alloy. Compd. 714, 668 (2017).

Wang, J. et al. MAGUS: machine learning and graph theory assisted universal structure searcher. Natl Sci. Rev. 10, nwad128 (2023).

Kresse, G. & Furthmüller, J. Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Phys. Rev. B 54, 11169 (1996).

Blöchl, P. E. Projector augmented-wave method. Phys. Rev. B 50, 17953 (1994).

Kresse, G. & Joubert, D. From ultrasoft pseudopotentials to the projector augmented-wave method. Phys. Rev. B 59, 1758 (1999).

Perdew, J. P., Burke, K. & Ernzerhof, M. Generalized gradient approximation made simple. Phys. Rev. Lett. 77, 3865 (1996).

Fan, Z. https://doi.org/10.5281/zenodo.11122339 brucefan1983/GPUMD: GPUMD-v3.9.3 (2024).

De Jong, M. et al. Charting the complete elastic properties of inorganic crystalline compounds. Sci. data 2, 1 (2015).

Tran, R. et al. Surface energies of elemental crystals. Sci. data 3, 1 (2016).

Liu, J. https://doi.org/10.5281/zenodo.13948627 GPUMD-Wizard: a python package for generating and evaluating machine learning potentials (2024).

Larsen, A. H. et al. The atomic simulation environment—a Python library for working with atoms. J. Phys.: Condens. Matter 29, 273002 (2017).

Lindgren, E. et al. calorine: A Python package for constructing and sampling neuroevolution potential models. J. Open Source Softw. 9, 6264 (2024).

Togo, A., Chaput, L., Tadano, T. & Tanaka, I. Implementation strategies in phonopy and phono3py. J. Phys. Condens. Matter 35, 353001 (2023).

Haynes, W. E. https://doi.org/10.1201/9781315380476CRC handbook of chemistry and physics (97th ed.) (CRC Press llc Boca Raton, FL, (2016).

Hirel, P. Atomsk: A tool for manipulating and converting atomic data files. Computer Phys. Commun. 197, 212 (2015).

Bernetti, M. & Bussi, G. Pressure control using stochastic cell rescaling. J. Chem. Phys. 153, 114107 (2020).

Bussi, G., Donadio, D. & Parrinello, M. Canonical sampling through velocity rescaling. J. Chem. Phys. 126, 014101 (2007).

Stukowski, A. Visualization and analysis of atomistic simulation data with OVITO-the open visualization tool. Model. Simul. Mater. Sci. Eng. 18, 015012 (2009).

Nordlund, K. Molecular dynamics simulation of ion ranges in the 1–100 keV energy range. Computational Mater. Sci. 3, 448 (1995).

Song, K. et al. Solute segregation in polycrystalline aluminum from hybrid Monte Carlo and molecular dynamics simulations with a unified neuroevolution potential, https://arxiv.org/abs/2404.13694 (2024).

Song, K. Source Data for the manuscript: general-purpose machine-learned potential for 16 elemental metals and their alloys, https://doi.org/10.5281/zenodo.13957229 (2024).

Fan, Z. Dataset for UNEP-v1, https://doi.org/10.5281/zenodo.11533864 (2024).

Song, K. Supplementary initial and final MD configurations for the manuscript: general-purpose machine- learned potential for 16 elemental metals and their alloys, https://doi.org/10.5281/zenodo.13951868 (2024).

Lindgren, E. et al. https://doi.org/10.5281/zenodo.10723374 Calorine - a python library for building and sampling NEP models via the GPUMD package (2024).

Wang, J. https://doi.org/10.5281/zenodo.13953803 bigd4/pynep: v1.0.0 (2024).

Acknowledgements

K.S., J.L., Y.Z.W., P.Q., and Y.S. acknowledge support from the National Key R & D Program of China (No. 2022YFB3707500) and the National Natural Science Foundation of China (NSFC) (No. 92270001). Y.Z.W. and T.A. have been supported in part by the Academy of Finland through its Quantum Technology Finland CoE grant No. 312298 and under the European Union - NextGenerationEU instrument by the Academy of Finland grant 353298. E.L. and P.E. acknowledge funding from the Swedish Research Council (Nos. 2020-04935 and 2021-05072) and the Swedish Foundation for Strategic Research via the SwedNESS graduate school (GSn15-0008) as well as computational resources provided by the National Academic Infrastructure for Supercomputing in Sweden at NSC and C3SE partially funded by the Swedish Research Council through grant agreement No. 2022-06725. J.S. acknowledges support from NSFC (Nos. 12125404, 11974162), the Basic Research Program of Jiangsu, the Fundamental Research Funds for the Central Universities, and computational sources from the High Performance Computing Center of Collaborative Innovation Center of Advanced Microstructures and the high-performance supercomputing center of Nanjing University. K.X. and T.L. acknowledge support from the National Key R&D Project from Ministry of Science and Technology of China (No. 2022YFA1203100), the Research Grants Council of Hong Kong (No. AoE/P-701/20), and RGC GRF (No. 14220022). Z.Q.Z„ Z.H.Z., and W.G. acknowledge support from the NSFC Projects of International Cooperation and Exchanges (No. 12261160367). Z.Z.Z., S.L., and Y.C. are grateful for the research computing facilities offered by ITS, HKU.

Author information

Authors and Affiliations

Contributions

K.S., R.Z., Y.Z.W., and N.X. prepared the training and test structures. K.S., Y.Z.W., Z.Q.Z., T.L., J.Y.S., J.W., K.X., S.L., Z.Z.Z., and S.R.L. performed the DFT calculations. K.S., S.C., and Y.Z.W. tested the various hyperparameters and trained the NEP models. P.Y. analyzed the descriptor space. K.S., R.Z., J.L., K.X., T.L., Z.Q.Z., and H.D. evaluated the basic physical properties. E.L. trained the first generation of ensemble models, and performed the ensemble model analysis. Y.W. and S.C. evaluated the computational performance. J.L. performed the radiation damage simulations and developed the high-throughput calculation tools. Z.F. and S.C. developed the NEP4 model and the multiple-loss evolutionary training algorithm. L.S., Y.C., Z.H.Z., W.G., P.Q., J.S., P.E., T.A., Y.S., and Z.F. supervised the project.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Logan Ward, and the other anonymous reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Song, K., Zhao, R., Liu, J. et al. General-purpose machine-learned potential for 16 elemental metals and their alloys. Nat Commun 15, 10208 (2024). https://doi.org/10.1038/s41467-024-54554-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-024-54554-x

This article is cited by

-

Tough and strong bioinspired high-entropy all-ceramics with a contiguous network structure

Nature Communications (2025)