Abstract

This paper proposes GYU-DET, a dataset for bridge surface defect detection that addresses the limitations of existing datasets in scale, annotation accuracy, and environmental diversity. GYU-DET contains 11,123 high-resolution images covering six defect types: cracks, spalling, seepage, honeycomb surfaces, exposed rebar, and holes. The images span a variety of lighting and environmental conditions and multiple key structural parts of bridges, reflecting the diversity and complexity of real bridge defects. Annotations follow strict guidelines to ensure accuracy and consistency and are provided in YOLO format, which facilitates model training and evaluation in computer vision tasks. To validate the dataset, experiments were conducted with the YOLOv11 object detection model. The results show that GYU-DET can effectively support bridge defect detection in computer vision, providing high-quality data for bridge surface defect detection and promoting the development of intelligent bridge health monitoring.


Background & Summary

Bridges, as one of the essential infrastructures in modern cities, play a critical role in road transportation and are vital to urban development and economic prosperity1. However, with the increasing age of bridges and the impact of external environmental factors, various defects may develop in bridge structures, such as cracks, seepage, leakage, and honeycombing. These defects not only compromise the structural integrity of bridges but also pose a serious threat to urban traffic safety, potentially leading to bridge collapse and traffic accidents2.

In recent years, with the rapid development of deep learning, significant progress has been made in object detection technology. The core task of object detection technology is to identify and locate target objects in images or videos. Compared to traditional computer vision algorithms, deep learning, through Convolutional Neural Networks (CNN), automatically learns feature representations, greatly improving the accuracy and robustness of object detection algorithms3. Object detection technology has been widely applied across various disciplines, and its application in bridge defect detection also holds great potential.

Although CNNs demonstrate strong performance in object detection, successful model training typically relies on large, high-quality, fully annotated datasets4. Preparing bounding boxes for object detection in particular makes image annotation a time-consuming and labor-intensive process. To address this challenge, researchers often use existing large public datasets, such as ImageNet5, MS COCO6, and PASCAL VOC7, for model development and training. These datasets cover a wide range of object categories and scenes, providing rich resources for general object detection. However, their applicability to bridge defect detection is limited. Bridge surfaces often have complex structures and details, with defects manifesting as cracks, spalling, corrosion, exposed rebar, and other fine features. These defects have delicate visual characteristics, irregular distributions, and blurred boundaries, and they look different under varying lighting conditions. This complexity and diversity are difficult to capture fully with existing public datasets.

Therefore, it is particularly necessary to create a high-quality dataset specifically for bridge defect detection, covering multiple defect types and sampled under different environmental conditions. Precise annotation should not only include bounding boxes but also record detailed features to improve the model’s detection accuracy and adaptability in this field8.

In the existing literature, publicly available datasets for bridge defect detection are relatively scarce, especially those specifically designed for object detection tasks. Most existing datasets are primarily intended for image classification rather than precise object localization. According to reference9, as of 2022, a total of 86 image datasets had been used in bridge inspection-related research, but only 26 of them were publicly accessible. In light of this, this paper selects and analyzes several representative and widely recognized high-quality datasets.

In 2017, study10 released the ‘GAPS’ (German Asphalt Pavement Distress) dataset, a small dataset for road surface defect classification, primarily aimed at detecting various common distresses on asphalt pavement. The dataset contains 1,969 sub-images, with the original image resolution of 1920 × 1080 and the cropped sub-image resolution of 64 × 64, covering defect types such as cracks, potholes, patches, and joints. In the same year, study11 constructed the ‘CSSC’ (Concrete Structure Spalling and Crack) dataset, a medium-sized dataset for classifying concrete structure defects, focusing on identifying cracks and spalling on concrete surfaces. The dataset consists of 278 spalling images and 954 crack images, which were further cropped to generate 37,523 sub-images categorized into four classes: crack, non-crack, spalling, and non-spalling. The sub-images were extracted using a sliding window technique from high-resolution originals, providing abundant high-quality training samples for deep learning models.

In addition, study12 introduced the ‘CSD’ (Cambridge Bridge Inspection Dataset), a small-scale bridge defect classification dataset focused on identifying concrete defects. The dataset contains a total of 1,028 images with a resolution of 299 × 299, including 691 images of healthy concrete and 337 images with defects. The defect types include cracks, graffiti, moss, and surface roughness. Subsequently, in 2018, study13 released the ‘SDNET2018’ dataset, which consists of 230 images of concrete surface cracks and non-cracks captured using a 16-megapixel Nikon digital camera. The images cover 54 bridge decks, 72 walls, and 104 sidewalks. After image segmentation, a total of 56,092 samples with a resolution of 256 × 256 were generated, including 47,608 crack images and 8,484 non-crack images, primarily used for training classification tasks in neural network models.

In 2019, study14 released the ‘MCDS’ (Multi-Classifier for Reinforced Concrete Bridge Defects) dataset, a medium-scale image classification dataset focused on concrete and reinforced bridge defects. The dataset contains a total of 3,607 images. Although the image resolutions are not explicitly specified, the content covers a variety of common structural defects, including concrete cracks, efflorescence, spalling, exposed reinforcement, corrosion, and surface scaling. It also includes some defect-free images as control samples to improve the model’s ability to recognize normal structures. Additionally, study15 constructed the ‘BiNet’ dataset for multi-label classification tasks in bridge defect detection. This dataset contains 3,588 images with varying resolutions, such as 455 × 186, 97 × 108, 123 × 131, and 153 × 68. The images cover four common types of concrete structural damage: cracks (1,330 images), spalling (240 images), corrosion (961 images), and exposed reinforcement (942 images). The data were mainly collected from field inspections of highway bridges in Slovenia between 2016 and 2018.

However, the aforementioned datasets do not provide detailed annotations of defects and are limited to classification tasks. In contrast, some datasets in recent years have begun to include defect annotation information. For example, in 2018, study16 developed the ‘Road Crack Detection’ dataset, a medium-scale object detection dataset focused on road surface defects. It aims to identify various types of cracks on asphalt pavement using object detection methods. The dataset contains a total of 9,053 images and supports training and testing with deep learning models such as YOLOv2. It includes annotations for eight types of cracks, including longitudinal cracks, transverse cracks, and alligator cracks, offering valuable image resources and application support for road maintenance and autonomous driving perception systems.

In 2019, study17 released the ‘CODEBRIM’ (COncrete DEfect BRidge IMage) dataset, which includes 1,590 images collected by cameras and drones across 30 bridges. The dataset covers six categories: cracks, weathering, spalling, exposed reinforcement, corrosion, and no defect. Among them, 1,052 images are annotated, with a total of 1,323 defect bounding boxes. The images vary in resolution, including 1920 × 400, 6000 × 4000, 1732 × 2596, and 1972 × 2960, providing high diversity and practical value. Subsequently, study18 introduced the ‘dacl1k’ dataset, which consists of 1,474 images with resolutions ranging from 245 × 336 to 5152 × 6000. It covers six label categories: cracks, efflorescence, spalling, exposed reinforcement, rust, and no damage, and includes 2,367 bounding box annotations.

In 2021, study19 introduced the ‘RDD2020’ (Road Damage Detection 2020) dataset, a large-scale road damage object detection dataset designed to support the application of deep learning methods in automatic road inspection and evaluation. The dataset contains a total of 26,336 images with resolutions of 600 × 600 and 720 × 720, featuring real-world road scenes from countries such as Japan, India, and the Czech Republic. More than 31,000 defect instances are annotated, covering categories including longitudinal cracks (D00), transverse cracks (D10), alligator cracks (D20), and potholes (D40).

In the same year, study20 created the ‘COCO-Bridge-2021’ dataset, a small-scale object detection dataset focused on identifying critical bridge components. It is designed to locate bridge details that are prone to fatigue damage or require prioritized inspection. The dataset contains 774 images with a resolution of 300 × 300, and includes 2,483 annotated object instances covering four typical components: bearings, girder ends, gusset plate nodes, and stiffeners. The images were collected from real bridge inspection scenarios and are suitable for mainstream detection algorithms such as SSD and YOLO. Building on this, study21 released an extended version named ‘COCO-Bridge-2021+’ in the same year. This enhanced dataset aims to provide richer and more diverse training samples. It consists of 1,470 images, also with a resolution of 300 × 300, and contains 7,283 annotated structural instances, including 1,969 bearings, 335 girder connections, 1,083 gusset plate nodes, and 3,896 stiffeners. All annotations were completed by structural experts based on actual inspection requirements, making the dataset suitable for visual analysis tasks such as bridge component recognition and structural condition assessment.

Although the aforementioned datasets provide a certain foundation for research in bridge defect detection, their application in object detection tasks still faces several limitations. First, the overall scale of these datasets is relatively small, with limited numbers of images, which makes it difficult to meet the demands of deep learning models for large-scale, high-quality samples. For example, the CSD dataset contains only 1,028 images, and while SDNET2018 expands to 56,092 images through cropping, it is originally based on just 230 images. Most images are taken from local perspectives, offering limited information density. Similarly, the GAPS and CSSC datasets primarily generate small sub-images through sliding window cropping, which restricts image detail and fails to provide sufficient contextual information, thereby limiting the generalization ability of models.

Second, most datasets lack fine-grained defect annotations and are suitable only for image-level classification tasks, falling short of the precision and localization requirements needed for object detection models. For instance, datasets such as GAPS, CSSC, MCDS, and BiNet include various typical bridge defects like cracks, spalling, corrosion, and exposed reinforcement, but they only provide image-level labels without specifying the exact defect regions. This coarse annotation approach limits the model’s localization performance in defect recognition and hinders subsequent damage assessment and quantitative analysis.

Even datasets that provide bounding box annotations, such as CODEBRIM and dacl1k, still suffer from issues like insufficient annotation granularity, limited defect type coverage, and inconsistent image resolution. For example, CODEBRIM’s annotations mainly focus on common defect types such as cracks, spalling, and exposed reinforcement, and the images are mostly collected from a single bridge environment, lacking diverse scene conditions, which hinders the model’s adaptability to complex environments. Although the dacl1k dataset includes six types of defect annotations, the wide variation in image resolutions and inconsistent shooting standards lead to uneven training sample quality, which affects the stability and robustness of detection models. In addition, while COCO-Bridge-2021 and its enhanced version COCO-Bridge-2021+ offer object detection annotations for detailed bridge components (such as bearings, gusset plates, and stiffeners), their focus is on structural components rather than specific damage types. Therefore, they are not directly applicable to surface defect detection tasks such as cracks, spalling, seepage, and exposed reinforcement. Furthermore, the image count in this series of datasets remains relatively limited, and the capture conditions are homogeneous, lacking diversity in lighting, viewpoints, and backgrounds—factors that are critical for improving a model’s generalization ability in real-world complex scenarios.

Therefore, this paper creates the GYU-DET dataset, aiming to address the shortcomings of existing datasets. The GYU-DET dataset includes a large number of high-resolution images, featuring six types of bridge defects: cracks, spalling, seepage, honeycombed surfaces, exposed rebar, and holes. The data is collected under various lighting and weather conditions to ensure diversity and complexity. Furthermore, the dataset provides precise bounding box annotations and detailed records of defect details, greatly improving the model’s applicability and detection accuracy in object detection tasks. Through GYU-DET, research in bridge defect detection will gain more comprehensive and accurate data support, enhancing the model’s practicality and robustness.

The data in this paper capture the complexity and diversity of bridge surface defects through collection under various lighting and environmental conditions. Data collection ran from April 2015 to December 2016, during which over 18,000 raw images were collected. After strict screening, annotation, and processing, 11,123 clear images with distinct defect features were retained. These images cover multiple key parts of the bridge, including piers, main beams, railings, and supports, ensuring the representativeness and completeness of the data. The dataset also covers various lighting conditions (adequate lighting, low light, dim light) and environmental conditions (rainy weather, distant views, debris interference, etc.), providing rich training data for improving algorithm robustness and generalization. Annotation strictly follows the Chinese Road and Bridge Maintenance Standards22, classifying bridge surface defects into six categories: cracks, spalling, honeycombed surfaces, exposed rebar, seepage, and holes. To address challenges encountered during annotation (such as ambiguous boundaries between defect types, coexistence of multiple defects, and difficulty in annotating irregular shapes), detailed annotation guidelines were established to ensure objectivity and consistency. Annotations are stored in YOLO format to facilitate training and validation for deep learning object detection tasks.

Testing the GYU-DET dataset with the YOLOv11n model shows better detection performance for visually distinct defect categories such as breakage (spalling), exposed rebar, and holes. For defects with blurred boundaries and irregular shapes, such as cracks and seepage, detection performance is also relatively good. This indicates that GYU-DET not only offers accurate and consistent annotations but can also support automatic annotation by object detection models in bridge defect detection tasks, reducing the manual annotation workload while maintaining annotation quality.

In practical terms, GYU-DET can be used as a training dataset for developing lightweight detection models integrated into mobile inspection tools, such as handheld devices or UAV-based inspection platforms, which are increasingly used by municipal road maintenance teams. It also serves as a benchmark for researchers testing new algorithms under varied lighting and environmental conditions. Furthermore, the dataset supports educational purposes, including use in civil engineering and computer vision courses to train students on real-world infrastructure inspection scenarios. Because the dataset follows a standardized annotation format (YOLO), it is easily integrated into existing deep learning pipelines, allowing engineers and researchers to focus on model innovation and defect pattern analysis. These practical applications demonstrate how GYU-DET contributes to current efforts in semi-automated bridge inspection and AI-driven infrastructure maintenance.

Methods

Research area

The GYU-DET dataset originates from bridges located between 103°36′–109°35′ E longitude and 24°37′–29°13′ N latitude. Selecting bridges in this area offers unique geographical advantages. The terrain is high in the west and low in the east, sloping from the center toward the north, east, and south, with an average altitude of about 1,100 meters. This plateau mountain topography not only poses severe challenges for bridge design and construction but also drives the diversification of bridge structures and construction technologies. The complex geological conditions require bridge designs that cope with terrain undulations, river erosion, and natural obstacles such as high mountain gorges, resulting in diverse bridge structures and damage forms in the region.

In addition, the region has 28,023 highway bridges, including suspension, beam, and arch bridges. The large number of bridges and the complex terrain have earned the region the title of “Bridge Museum.” This provides highly representative cases and rich data resources for the study of bridge structural damage, serving as an important foundation for research in bridge defect detection.

Data collection

This paper conducts data collection for bridges located between the coordinates of 103°36′–109°35′ E longitude and 24°37′–29°13′ N latitude, primarily using cameras such as the Nikon D5500. The images were captured using a combination of handheld cameras and aerial work platforms (bucket trucks) to acquire close-up images of surface defects in different parts of the bridges. The captured sample images are shown in Fig. 1.

The data collection work was conducted from April 2015 to December 2016, during which more than 18,000 raw images of bridge damage were collected. After a multi-stage data screening process and a detailed annotation protocol, a total of 11,123 clear and feature-distinct bridge surface defect images were retained. The screening process began with an initial automated quality check to eliminate blurry, overexposed, or occluded images. This was followed by manual inspection conducted by trained annotators, who assessed the visibility of defects and the structural relevance of each image. Finally, civil engineering experts carried out a professional review to ensure the practical usability of the data. For annotation, a rigorous three-step protocol was applied. Each image was first labeled independently by two annotators. The bounding boxes were then cross-validated to check for accuracy and consistency. Lastly, all annotations underwent final confirmation by experts to ensure the highest level of precision and reliability.
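The text does not state how the two annotators' bounding boxes were cross-validated. One common way to make such a check concrete is to match same-class boxes by intersection-over-union (IoU) and flag anything left unmatched for expert review. The sketch below assumes pixel-space corner boxes, greedy matching, and an IoU threshold of 0.5; the function names `iou` and `agreement` and the threshold are illustrative assumptions, not the authors' procedure.

```python
from typing import List, Tuple

# (class_id, x_min, y_min, x_max, y_max) in pixels; an assumed representation
Box = Tuple[int, float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two corner-format boxes (class is ignored)."""
    ix = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    iy = max(0.0, min(a[4], b[4]) - max(a[2], b[2]))
    inter = ix * iy
    area_a = (a[3] - a[1]) * (a[4] - a[2])
    area_b = (b[3] - b[1]) * (b[4] - b[2])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def agreement(ann1: List[Box], ann2: List[Box], thr: float = 0.5):
    """Greedily match same-class boxes from two annotators at IoU >= thr.

    Returns (matched_pairs, unmatched_from_ann1, unmatched_from_ann2), where
    each matched pair is (index_in_ann1, index_in_ann2, iou_value).
    """
    # Score every same-class pair, then take the best-overlapping pairs first.
    candidates = sorted(
        ((iou(a, b), i, j)
         for i, a in enumerate(ann1)
         for j, b in enumerate(ann2)
         if a[0] == b[0]),
        reverse=True,
    )
    used1, used2, pairs = set(), set(), []
    for score, i, j in candidates:
        if score < thr:
            break  # remaining candidates overlap even less
        if i in used1 or j in used2:
            continue  # each box may be matched at most once
        used1.add(i)
        used2.add(j)
        pairs.append((i, j, score))
    unmatched1 = [i for i in range(len(ann1)) if i not in used1]
    unmatched2 = [j for j in range(len(ann2)) if j not in used2]
    return pairs, unmatched1, unmatched2
```

Boxes left in either unmatched list are the natural candidates for the expert confirmation step; raising or lowering `thr` only changes how many boxes are escalated.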

During shooting, multiple key locations on each bridge were covered comprehensively, including bridge piers, deck beams, abutment sidewalls and wingwalls, arch rings, abutments, edge beams, bottom areas of main beams, railings, crash walls, bottoms of crossbeams, main beam connections, support areas, the area beneath the bridge, the bridge deck road surface, and both sides of the bridge. Documenting these key parts allows the data to reflect both the overall health of each bridge and its localized damage characteristics. Images were taken from multiple angles to capture the different manifestations of surface defects, and each defect type was photographed under different lighting conditions, including slanting morning light, direct midday light, and diffuse overcast light, to present richer visual details. Supplementary features such as bridge expansion joints, water stains, and dry moss were also recorded to obtain more comprehensive and realistic damage information.

To provide a representative sample, the bridge inventory included in the GYU-DET dataset encompasses a variety of structural forms and materials commonly found in practical engineering. Structurally, the dataset covers beam bridges (including simply supported and continuous beams), arch bridges, and suspension bridges, representing typical designs in both urban and rural transportation networks. In terms of materials, the bridges are primarily constructed from reinforced concrete, prestressed concrete, and steel–concrete composite sections, which are widely used in China’s municipal and highway bridge projects. The structural schemes observed in these bridges include single-span, multi-span continuous, and rigid-frame systems, reflecting diverse load-bearing and spatial configurations suited to complex terrain and different traffic requirements.

To ensure data accuracy, the shooting process paid particular attention to capturing detailed features of damaged areas. Importantly, the data collection was not limited to centralized damage; decentralized defect locations and partial-frame shots were also deliberately included to avoid spatial bias.

Image annotation

The classification and annotation of damage types in the GYU-DET dataset were conducted following the Chinese Road and Bridge Maintenance Standards22. These standards provide detailed definitions for six common types of surface damage: cracks, spalling, honeycomb surfaces, exposed rebar, seepage, and holes. The annotations for these six types of defects are shown in Table 1.

To better align this work with the international context of visual bridge inspections, we further reviewed global literature on inspection standards. For instance, the recent study23 presents a systematic approach to damage classification based on visual inspection protocols adopted in Europe. We found that the defect categories used in our dataset correspond closely to those in international practice, thereby ensuring relevance and potential interoperability of our dataset with broader global inspection frameworks.

During image annotation, the six defect types above were used as labels, and several challenges arose. First, the boundaries between some defect types are not obvious, especially when damage is severe: rough surfaces, honeycombed areas, and spalling may look similar, making clear differentiation difficult. Second, multiple defects often coexist; for instance, cracks are commonly accompanied by seepage, and hole areas may also show exposed rebar. Third, for irregularly shaped defects (such as cracks and spalling), fitting a rectangular bounding box to the actual shape is difficult: if the box covers too much redundant area, it may degrade model training, whereas an overly conservative box may miss part of the damage. Finally, for defects with unclear boundaries, such as slight spalling and rough surfaces, boxes tend to be drawn either too large or too small, which affects the consistency and accuracy of the annotation results.

In response to the challenges mentioned above, in order to ensure the objectivity and consistency of the annotation process, the following annotation guidelines were established and implemented during the annotation of the GYU-DET dataset:

-

Annotators should strictly differentiate each defect type based on its key characteristics to ensure that all defect annotations are accurate and consistent.

-

When different types of defect areas overlap, each defect should be annotated separately, and overlapping bounding boxes are allowed to ensure the dataset captures the characteristics of compound damage.

-

For irregularly shaped defects, such as spalling, cracks, and holes, the annotation should closely follow the actual shape of the defect, avoiding excessive redundant space to ensure the accuracy of the bounding box.

-

For defects with unclear boundaries, such as slight spalling and rough surfaces, the annotation box should cover all affected areas while avoiding over-annotation, preventing the bounding box from being too large or too small.

Implementing these guidelines helps ensure consistent and accurate annotation under complex damage conditions, improving the annotation quality of the dataset. To ensure clarity and reproducibility, we added visual examples demonstrating typical annotation standards, as well as failure cases encountered during the annotation process (Fig. 2).

After the annotation details were clarified, domain experts used the annotation tool LabelImg to annotate the images in the GYU-DET dataset according to the above guidelines, generating .txt label files for training. The annotation process is shown in Fig. 3.
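In YOLO mode, LabelImg stores each drawn box as one normalized text row (the format and its normalization equations are given in Data Records). The sketch below illustrates that conversion and its inverse, assuming pixel corner coordinates as input and six-decimal output; both assumptions, and the helpers `to_yolo` and `from_yolo` are hypothetical, not LabelImg functions.

```python
def to_yolo(cls_id: int, x_min: float, y_min: float, x_max: float, y_max: float,
            img_w: int, img_h: int) -> str:
    """Convert a pixel-space corner box into one normalized YOLO label row."""
    x_center = (x_min + x_max) / 2 / img_w   # box center x / image width
    y_center = (y_min + y_max) / 2 / img_h   # box center y / image height
    width = (x_max - x_min) / img_w          # box width / image width
    height = (y_max - y_min) / img_h         # box height / image height
    return f"{cls_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


def from_yolo(row: str, img_w: int, img_h: int):
    """Invert to_yolo: return (cls_id, x_min, y_min, x_max, y_max) in pixels."""
    cls_s, xc, yc, w, h = row.split()
    xc, w = float(xc) * img_w, float(w) * img_w
    yc, h = float(yc) * img_h, float(h) * img_h
    return int(cls_s), xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2
```

For example, a 400 × 400 pixel crack box with corners (1000, 500) and (1400, 900) in a 4608 × 3456 image becomes the row `0 0.260417 0.202546 0.086806 0.115741`; rounding to six decimals keeps the round-trip error well below one pixel at that resolution.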

Dataset imbalance and defect distribution

As a large and complex bridge surface defect dataset with multiple defect types, GYU-DET has certain limitations. This subsection provides further insight into GYU-DET to help guide future research, such as annotating or refining smaller but higher-quality subsets.

Reflecting real-world conditions, the defect types in GYU-DET are imbalanced. Figure 4 compares the number of instances of each defect type in GYU-DET. Specifically, “Br” (Breakage) and “Re” (Reinforcement) defects dominate the dataset, with over 12,000 and 8,000 instances respectively, while categories such as “Cr” (Crack) and “Ho” (Hole) are significantly underrepresented. This imbalance may reduce model performance on rare defect types and should be considered in both training and evaluation strategies, for example through class-balanced sampling or loss functions.

The imbalance in defect type distribution within GYU-DET stems primarily from the real-world occurrence frequency and visual detectability of different defect types on beam bridges. Defects such as Breakage and Reinforcement are more prominent and easier to observe during inspection because of their larger size, clearer boundaries, or more frequent appearance in aging infrastructure. In contrast, defects such as Crack and Hole are typically smaller and subtler, and are sometimes obscured by environmental conditions (e.g., lighting, dirt, or structural shadows), making them harder to detect and annotate consistently. Additionally, inspection data may be biased toward structural components or areas where severe or visually obvious damage is more likely to be captured. As a result, this natural imbalance is reflected in the annotated dataset.
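The class-balanced sampling or loss functions mentioned above are not specified further. As one common option, inverse-frequency class weights can be computed directly from the class column of the label files. This is a sketch only: `class_weights` and its `power` smoothing parameter are hypothetical, and the weighting scheme is a generic technique rather than one evaluated on GYU-DET.

```python
from collections import Counter
from typing import Dict, Iterable


def class_weights(class_ids: Iterable[int], num_classes: int = 6,
                  power: float = 1.0) -> Dict[int, float]:
    """Inverse-frequency weights w_c = (N / (K * n_c)) ** power.

    class_ids holds one entry per annotated instance (e.g. the first column of
    every label row); N is the total instance count and K the number of classes.
    Classes that never occur get weight 0.0 instead of a division error.
    """
    counts = Counter(class_ids)
    total = sum(counts.values())
    return {
        c: (total / (num_classes * counts[c])) ** power if counts[c] else 0.0
        for c in range(num_classes)
    }
```

Setting `power` below 1 (for example 0.5) softens the weights so that very rare classes do not dominate training; how the weights are consumed (per-class loss scaling or per-image sampling probabilities) depends on the training framework.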

Images under varying lighting and environmental conditions

The GYU-DET dataset covers bridge surface images collected under different lighting conditions and in various environments, reflecting the complex scenarios encountered in real bridge inspections. As shown in Fig. 5, the dataset demonstrates good diversity and representativeness.

In terms of lighting, the dataset includes well-lit daytime images with rich detail and clear tonal gradation, which accurately present the state of the bridge surface. It also contains images taken at night or in shadow, where overall brightness is low and detail is limited, realistically reflecting the challenges of bridge inspection under difficult lighting. In very dark environments, some images were taken with supplementary artificial light sources, but noise and blur may still occur, illustrating the difficulty of image acquisition in low light.

In terms of environmental conditions, GYU-DET includes bridge images from various natural settings. In rainy weather, for example, wet bridge surfaces and puddles often introduce reflections and noise that reduce image clarity. The dataset also includes long-range images of bridges, which are suitable for overall structural observation and assessment but typically lack detail, so they require integration with close-up images for multi-scale analysis. Images featuring debris or attachments on the bridge surface (such as plastic fragments and cables) are also included, adding complexity and reflecting the detection difficulties of real-world environments. Some images also exhibit a yellowish tint, adding to the diversity of the dataset's color distribution.

Overall, by covering various lighting and environmental conditions, GYU-DET realistically reflects the complex scenarios faced in bridge inspection tasks. It provides diverse, high-quality data for related research and lays a solid foundation for developing and evaluating subsequent image processing methods and defect detection strategies.

Data Records

The data records cited in this work are stored in a scientific database, which is a publicly accessible repository for publishing research data from Guiyang University (https://doi.org/10.57760/sciencedb.19893)24. The GYU-DET dataset contains 11,123 RGB images saved in JPG format, with a resolution primarily of 4608×3456. A small portion of the images have resolutions of 2736×3648, 816×1088, and 554×370. It includes 10,432 positive samples with at least one defect and 691 negative samples without defects.
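The positive and negative sample counts can be re-derived from the label files, since each non-blank row of a label file is one annotated defect. The dataset description does not say whether negative samples have empty or missing label files, so the sketch below treats both as negative. It also rejects rows that do not have five fields, have a class id outside 0 to 5, or contain coordinates outside [0, 1]. `summarize_labels` and `summarize_split` are hypothetical helpers, and the `images/` and `labels/` layout is taken from the archive structure described in this section.

```python
from collections import Counter
from pathlib import Path
from typing import Iterable, Tuple


def summarize_labels(label_texts: Iterable[str],
                     num_classes: int = 6) -> Tuple[int, int, Counter]:
    """Count positive images, negative images and instances per class.

    label_texts holds the contents of one label file per image ("" when the
    file is empty or missing). Each non-blank row is one annotated defect.
    """
    positives, negatives, per_class = 0, 0, Counter()
    for text in label_texts:
        rows = [r for r in text.splitlines() if r.strip()]
        for row in rows:
            fields = row.split()
            if len(fields) != 5:
                raise ValueError(f"expected 5 fields, got {len(fields)}: {row!r}")
            cls_id = int(fields[0])
            if not 0 <= cls_id < num_classes:
                raise ValueError(f"class id {cls_id} out of range: {row!r}")
            if not all(0.0 <= float(v) <= 1.0 for v in fields[1:]):
                raise ValueError(f"coordinates not normalized to [0, 1]: {row!r}")
            per_class[cls_id] += 1
        if rows:
            positives += 1
        else:
            negatives += 1
    return positives, negatives, per_class


def summarize_split(split_dir: str, num_classes: int = 6):
    """Pair <split_dir>/images/N.jpg with <split_dir>/labels/N.txt and summarize."""
    root = Path(split_dir)
    texts = []
    for img in sorted((root / "images").glob("*.jpg")):
        label = root / "labels" / f"{img.stem}.txt"
        texts.append(label.read_text() if label.exists() else "")
    return summarize_labels(texts, num_classes)
```

Summing the results of `summarize_split` over the train, valid, and test folders should reproduce the positive and negative totals stated above if the assumption about negative label files holds.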

The GYU-DET dataset has been divided into train.zip, valid.zip, and test.zip following an 8:1:1 ratio. Each zip archive contains “images” and “labels” directories, where the annotation files are provided in YOLO “.txt” format. Additionally, a “classes.txt” file listing the six defect categories and their indices is included for clarity.

-

images: This directory stores RGB images, all of which are saved in “.jpg” format. The images are named starting from “1.jpg” and incrementing sequentially to ensure each image has a unique name, supporting the placement of all images in the same folder.

-

labels: This directory stores annotation files, which are saved in “.txt” format and correspond to the image names in the “images” folder. Each text file is named to match the corresponding image, for example, the first text file is named “1.txt.”

This directory structure keeps the dataset clearly organized and every file name unique, which simplifies subsequent data processing and model training.
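Because images are named 1.jpg, 2.jpg, and so on, a plain lexicographic sort would place 10.jpg before 2.jpg. The hypothetical helper below sorts file stems numerically, pairs each image with its same-named label file, and reports images without labels and labels without images. It is a quick check of the one-to-one naming described above, not a tool shipped with the dataset.

```python
from pathlib import Path


def numeric_key(stem):
    """Sort "2" before "10"; non-numeric stems go last, alphabetically."""
    return (0, int(stem), "") if stem.isdigit() else (1, 0, stem)


def pair_images_and_labels(split_dir):
    """Return (pairs, images_without_labels, labels_without_images)."""
    split = Path(split_dir)
    images = {p.stem: p for p in (split / "images").glob("*.jpg")}
    labels = {p.stem: p for p in (split / "labels").glob("*.txt")}
    common = sorted(images.keys() & labels.keys(), key=numeric_key)
    pairs = [(images[s], labels[s]) for s in common]
    missing_labels = sorted(images.keys() - labels.keys(), key=numeric_key)
    orphan_labels = sorted(labels.keys() - images.keys(), key=numeric_key)
    return pairs, missing_labels, orphan_labels
```

An image listed in `missing_labels` is not necessarily an error: it may be a negative sample if negatives are stored without a label file.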

Bridge surface defects are annotated in the You Only Look Once (YOLO) format, chosen because it is widely used and easy to adapt or convert to other formats. In this format, each bounding box in an image is described by one line of five space-separated values:

<object class> <x center> <y center> <x width> <y height>

Among them:

-

<object class> is the type of bridge surface defect, an integer from 0 to 5 corresponding to crack, break (spalling), comb (honeycomb surface), reinforcement (exposed rebar), seepage, and hole.

-

<x center> is the x-coordinate of the bounding-box center in pixels, normalized by the image width so that it lies between 0 and 1:

$$x\,center=\frac{x\,coordinate}{image\,width}\tag{1}$$

-

<y center> is the y-coordinate of the bounding-box center in pixels, normalized by the image height so that it lies between 0 and 1:

$$y\,center=\frac{y\,coordinate}{image\,height}\tag{2}$$

-

<x width> is the width of the bounding box, normalized by the image width so that it lies between 0 and 1:

$$x\,width=\frac{bounding\,box\,width}{image\,width}\tag{3}$$

-

<y height> is the height of the bounding box, normalized by the image height so that it lies between 0 and 1:

$$y\,height=\frac{bounding\,box\,height}{image\,height}\tag{4}$$

Because each line describes one bounding box, the number of lines in a text file in the “labels” folder equals the number of defects annotated in the corresponding image. For example, the annotation file “260.txt” for the image “260.jpg” in the “images” folder contains the following lines:

-

3 0.443531 0.827029 0.148757 0.150768

-

4 0.486659 0.536732 0.099050 0.097588

-

4 0.375731 0.564967 0.019006 0.025768

-

3 0.629203 0.600603 0.013523 0.018092

-

4 0.457602 0.693257 0.018275 0.033443

-

1 0.423977 0.625822 0.303363 0.274671

-

1 0.565241 0.381031 0.068348 0.190789

-

4 0.653692 0.212445 0.029605 0.072917

The file therefore contains eight bounding-box annotations, marking eight defects: four exposed rebar defects, two spalling defects, and two hole defects. The annotation results are shown in Fig. 6.
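Equations (1)-(4) can be inverted to recover pixel boxes from a label file. The sketch below parses label text such as the “260.txt” example, checks that every line has five values and that all coordinates lie in [0, 1], counts boxes per class index, and converts a box to pixel corner coordinates. The 4608×3456 image size in the example is the dominant resolution stated in Data Records; the actual size of “260.jpg” is not given, so it is an assumption. Function and type names are hypothetical.

```python
from collections import Counter
from typing import NamedTuple


class YoloBox(NamedTuple):
    cls: int
    x_center: float
    y_center: float
    width: float
    height: float


def parse_yolo_labels(text):
    """Parse YOLO label text: one '<class> <xc> <yc> <w> <h>' box per line."""
    boxes = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue
        fields = line.split()
        if len(fields) != 5:
            raise ValueError(f"line {lineno}: expected 5 values, got {len(fields)}")
        box = YoloBox(int(fields[0]), *map(float, fields[1:]))
        if not all(0.0 <= v <= 1.0 for v in box[1:]):
            raise ValueError(f"line {lineno}: coordinates must lie in [0, 1]")
        boxes.append(box)
    return boxes


def to_pixel_corners(box, image_width, image_height):
    """Invert Eqs. (1)-(4): return (x_min, y_min, x_max, y_max) in pixels."""
    cx, cy = box.x_center * image_width, box.y_center * image_height
    half_w, half_h = box.width * image_width / 2, box.height * image_height / 2
    return (cx - half_w, cy - half_h, cx + half_w, cy + half_h)


LABEL_260 = """\
3 0.443531 0.827029 0.148757 0.150768
4 0.486659 0.536732 0.099050 0.097588
4 0.375731 0.564967 0.019006 0.025768
3 0.629203 0.600603 0.013523 0.018092
4 0.457602 0.693257 0.018275 0.033443
1 0.423977 0.625822 0.303363 0.274671
1 0.565241 0.381031 0.068348 0.190789
4 0.653692 0.212445 0.029605 0.072917
"""

boxes = parse_yolo_labels(LABEL_260)
print(len(boxes), Counter(b.cls for b in boxes))  # boxes per class index
print(to_pixel_corners(boxes[0], 4608, 3456))     # assumed image size
```

Counting by class index rather than by name keeps the sketch independent of how “classes.txt” orders the six categories.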

Technical Validation

Model selection

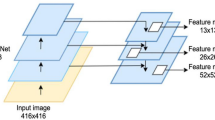

To validate the quality of the dataset and the accuracy of its annotations, the recent YOLOv11 object detection algorithm25 was applied to the dataset. YOLOv11 is offered in five variants of different depths: YOLOv11n, YOLOv11s, YOLOv11m, YOLOv11l, and YOLOv11x. Deeper variants generally have more parameters and achieve higher accuracy, but detect more slowly.

Since the goal of this experiment is to validate the quality of the dataset rather than to assess model performance, YOLOv11n, the variant with the fewest parameters, was selected for evaluation. This model consists of 319 layers, and its parameters occupy approximately 9.5 MB.

Preparation work

In this paper, the GYU-DET dataset is randomly divided into training, validation, and test sets in an 8:1:1 ratio so that model training and evaluation are representative and fair. Models were trained with the PyTorch deep learning framework on Windows 10, using an NVIDIA GeForce RTX 4090 GPU for acceleration. The system has 31.8 GB of RAM and uses Python 3.11 and CUDA 12.1. Table 2 summarizes the software and hardware configuration used in the dataset validation experiments.
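A random 8:1:1 split can be made reproducible by fixing the random seed. The sketch below is not the authors' splitting script: the seed, the rounding rule (training and validation sizes rounded down, remainder to the test set), the function name, and the assumption that image ids run from 1 to 11,123 are all illustrative. The released archives already contain the official split.

```python
import random


def split_811(items, seed=0):
    """Shuffle a copy of `items` with a fixed seed and cut it 8:1:1."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # private RNG: global state untouched
    n = len(shuffled)
    n_train = n * 8 // 10
    n_val = n // 10
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


train, val, test = split_811(range(1, 11124))  # assumed ids 1..11123
print(len(train), len(val), len(test))
```

With 11,123 images this rounding rule gives 8,898 / 1,112 / 1,113 images; the counts in the released archives may differ.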

The YOLOv11n model was trained with a batch size of 16 for 300 epochs; all other hyperparameters were left at their default values.
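The paper does not name the training tool. Assuming the Ultralytics package, which distributes the YOLO11 nano weights as `yolo11n.pt`, the reported configuration (batch size 16, 300 epochs, everything else default) maps onto a call like the one below. The dataset YAML path is hypothetical, and the import is deferred so that the argument-building helper can be inspected without Ultralytics installed.

```python
def build_train_args(data_yaml):
    """Hyperparameters reported in the paper; all others stay at defaults."""
    return {"data": data_yaml, "epochs": 300, "batch": 16}


def train_yolo11n(data_yaml="gyu-det.yaml"):  # hypothetical YAML path
    # Requires `pip install ultralytics`; yolo11n.pt is downloaded on first use.
    from ultralytics import YOLO

    model = YOLO("yolo11n.pt")
    return model.train(**build_train_args(data_yaml))
```

Calling `train_yolo11n()` starts training; Ultralytics typically also writes precision, recall, F1 and precision-recall curve plots to its run directory.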

Training results

Precision, Recall, F1-score, and mAP50 are used to analyze the training results, which are shown in Fig. 7.

The Precision-Confidence curve (top left) and the Recall-Confidence curve (top right) show clear differences in detection performance across defect categories. Re (Reinforcement) and Ho (Hole) perform best: in the high-confidence region both their precision and recall are high, indicating that the model recognizes these defects well and that their features are stable and easy to capture. Cr (Crack) and Se (Seepage) perform poorly, with precision and recall markedly lower than those of the other categories, and false detections are more common at low confidence levels. The morphology of cracks and seepage is complex and easily confused with background interference, which limits detection performance. Br (Breakage) and Co (Comb) fall in between, with precision rising as confidence increases and performance remaining relatively stable.
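Precision-confidence and recall-confidence curves come from sweeping a confidence threshold over the detections of one class. The minimal sketch below assumes each detection has already been matched to ground truth (a flag saying whether it is a true positive). It shows why recall can only fall as the threshold rises, while precision usually, but not necessarily, increases. The function name, the toy detections, and the convention of reporting precision 1.0 when no detections remain are assumptions.

```python
def precision_recall_at(detections, num_ground_truth, threshold):
    """Precision and recall for one class at a confidence threshold.

    detections: iterable of (confidence, is_true_positive) pairs, where the
    true-positive flag comes from a prior IoU-based matching step.
    """
    kept = [is_tp for conf, is_tp in detections if conf >= threshold]
    tp = sum(kept)
    precision = tp / len(kept) if kept else 1.0  # convention: no detections
    recall = tp / num_ground_truth if num_ground_truth else 0.0
    return precision, recall


# Toy detections for one class with 4 ground-truth boxes (hypothetical).
dets = [(0.9, True), (0.8, True), (0.6, False), (0.4, True), (0.2, False)]
for t in (0.1, 0.5, 0.85):
    print(t, precision_recall_at(dets, num_ground_truth=4, threshold=t))
```

Raising the threshold only removes detections, so true positives, and therefore recall, can never increase.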

The F1-Confidence curve (bottom left) shows the F1 score, the harmonic mean of precision and recall, which reflects the model's overall detection performance for each category. The F1 scores for Re (Reinforcement) and Ho (Hole) remain high across most confidence levels, indicating a good balance between precision and recall for these two categories. The F1 scores for Se (Seepage) and Cr (Crack) are low and drop sharply in the high-confidence range, showing that the model's detection of these categories is unstable and struggles to achieve both high precision and high recall. Br (Breakage) and Co (Comb) have moderate F1 scores, leaving room for further optimization.
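The F1 score is F1 = 2PR / (P + R). The sketch below computes it and picks the confidence threshold with the highest F1 from sampled curve points, which is one way to choose an operating point such as the 0.3 threshold later used on the test set. The sample values are hypothetical and are not read from Fig. 7 or Fig. 8.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0 when both are 0."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def best_threshold(curve):
    """Pick the (threshold, F1) pair with the highest F1.

    curve: iterable of (threshold, precision, recall) samples.
    """
    return max(((t, f1_score(p, r)) for t, p, r in curve), key=lambda item: item[1])


# Hypothetical curve samples: (confidence threshold, precision, recall).
samples = [(0.1, 0.60, 0.75), (0.5, 2 / 3, 0.50), (0.85, 1.00, 0.25)]
print(best_threshold(samples))
```

Because F1 is a harmonic mean, a high precision cannot compensate for a very low recall, which is why F1 falls at high thresholds even as precision rises.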

In the Precision-Recall curve (bottom right), the average precision (AP) of each defect category reflects the model's detection capability. Re (Reinforcement) has the highest AP, 0.697, indicating that exposed rebar has clear features that the model recognizes effectively. Ho (Hole) and Br (Breakage) follow with APs of 0.667 and 0.601, respectively, showing relatively stable performance and high detection accuracy. Co (Comb) reaches 0.566, a moderate result slightly below that of defects with more distinctive features. Cr (Crack) and Se (Seepage) have the lowest APs, 0.479 and 0.426, respectively. This indicates that the model performs poorly on crack and seepage detection, mainly because of blurry defect features, irregular shapes, and insufficient samples.
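Each per-class AP is the area under that class's precision-recall curve, and mAP50 is the mean of the per-class APs at an IoU threshold of 0.5. The sketch below uses all-point interpolation, common in Pascal VOC-style evaluation: the precision curve is first replaced by its non-increasing envelope and then integrated over recall. Detection frameworks differ in the exact interpolation (COCO-style evaluation, for example, samples 101 recall points), so this sketch may not reproduce the reported values exactly. The toy curve is hypothetical.

```python
def average_precision(recall, precision):
    """All-point interpolated AP; `recall` must be sorted ascending."""
    r = [0.0, *recall, 1.0]
    p = [1.0, *precision, 0.0]
    # Precision envelope: each point takes the max precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall increases (zero-width steps add 0).
    return sum((r[i] - r[i - 1]) * p[i] for i in range(1, len(r)))


def mean_average_precision(per_class_ap):
    """mAP is the unweighted mean of the per-class APs."""
    return sum(per_class_ap) / len(per_class_ap)


print(average_precision([0.5, 1.0], [1.0, 0.5]))  # toy two-point curve
# Per-class APs listed in the paragraph above (Re, Ho, Br, Co, Cr, Se).
print(mean_average_precision([0.697, 0.667, 0.601, 0.566, 0.479, 0.426]))
```

Averaging the six per-class values listed above gives approximately 0.573.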

Validation using the test set

To further validate the annotation quality of the GYU-DET dataset and its effectiveness in practical applications, the trained YOLOv11n model was evaluated on the test set. During evaluation, the confidence threshold (conf) was set to 0.3, the IoU threshold to 0.5, and the batch size to 16. The detailed results are shown in Fig. 8.
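Under mAP@0.5, a prediction counts as a true positive when its intersection over union (IoU) with a ground-truth box of the same class is at least 0.5. The sketch below computes IoU directly from two YOLO-format boxes; this is valid because scaling x by the image width and y by the image height multiplies intersection and union by the same factor, leaving their ratio unchanged. If the evaluation was run with Ultralytics (an assumption, since the paper does not say), note that the `iou` argument of its `val` mode sets the non-maximum-suppression threshold rather than the matching threshold behind mAP@0.5. Function names are hypothetical.

```python
def yolo_to_corners(xc, yc, w, h):
    """(x_center, y_center, width, height) -> (x_min, y_min, x_max, y_max)."""
    return xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2


def iou(box_a, box_b):
    """IoU of two (x_center, y_center, width, height) boxes in the same units."""
    ax0, ay0, ax1, ay1 = yolo_to_corners(*box_a)
    bx0, by0, bx1, by1 = yolo_to_corners(*box_b)
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    return inter / union if union > 0 else 0.0


def is_match(pred, truth, threshold=0.5):
    """True when the prediction overlaps the ground truth enough for mAP@0.5."""
    return iou(pred, truth) >= threshold


# Two equal boxes shifted by half their width overlap with IoU = 1/3.
print(iou((0.5, 0.5, 0.2, 0.2), (0.6, 0.5, 0.2, 0.2)))
```

A shift of half a box width already drops IoU to one third, so small localization errors on thin defects such as cracks can turn a correct detection into a false positive at the 0.5 threshold.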

As illustrated in the Precision-Confidence Curve (top left), the overall precision improves steadily with increasing confidence threshold. The Reinforcement (Re) and Hole (Ho) categories demonstrate the highest precision, both approaching 1.0 at optimal thresholds. These categories also have relatively large numbers of annotated instances, enabling the model to learn more robust and discriminative features. Most categories exhibit stable precision trends across confidence levels, indicating reliable prediction behavior.

The Recall-Confidence Curve (top right) reveals that recall decreases as confidence increases, reflecting the typical trade-off between these two metrics. At lower confidence levels, the model captures more true positives, with an overall peak recall of 0.89. Notably, Breakage (Br), Hole (Ho), and Reinforcement (Re) maintain higher recall values across the range, which aligns with their higher representation in the dataset. For instance, Breakage is the most annotated category, providing the model with ample examples to generalize well during inference.
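The discussion links per-class performance to how often each class is annotated. Per-class instance counts can be tallied directly from the label files; the sketch below counts the first field of every non-blank line in a `labels` directory and skips a “classes.txt” file in case one sits beside the labels. The function name and the optional `names` mapping (for example, read from “classes.txt”) are hypothetical.

```python
from collections import Counter
from pathlib import Path


def class_instance_counts(labels_dir, names=None):
    """Count annotated boxes per class in a directory of YOLO label files."""
    counts = Counter()
    for label_file in Path(labels_dir).glob("*.txt"):
        if label_file.name == "classes.txt":
            continue  # the class list is not an annotation file
        for line in label_file.read_text(encoding="utf-8").splitlines():
            if line.strip():
                counts[int(line.split()[0])] += 1
    if names:
        # Translate indices to names with a caller-supplied list or dict.
        return Counter({names[idx]: n for idx, n in counts.items()})
    return counts
```

For example, `class_instance_counts("GYU-DET/train/labels").most_common()` (a hypothetical path) lists classes from most to least annotated.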

In the F1-Confidence Curve (bottom left), the overall F1-score peaks at 0.56 when the confidence threshold is around 0.30, supporting its selection as a practical balance point between precision and recall. The highest F1-scores are again observed in the Ho and Re categories, further reinforcing the positive impact of adequate sample representation on detection performance.

The Precision-Recall curve (bottom right) summarizes the detection trade-offs across all categories. The mean average precision (mAP@0.5) reaches 0.568. Reinforcement achieves the highest AP, 0.689, followed by Hole (0.641) and Breakage (0.605), all of which correspond to the most abundant categories in the dataset. In contrast, Crack (Cr) and Seepage (Se), which have fewer labeled instances, show lower APs of 0.455 and 0.465, respectively. This highlights the challenge of learning from limited data, particularly for visually subtle or morphologically variable defect types.

Example detection results on the test set are shown in Fig. 9.

In summary, the evaluation results based on multiple detection metrics confirm the high annotation quality, class consistency, and overall reliability of the GYU-DET dataset. The performance differences among defect types are closely related to the quantity of training examples, underscoring the importance of balanced data distribution. These findings demonstrate that GYU-DET not only supports effective model training and evaluation but also holds strong practical value for automated bridge defect detection in real-world applications.

Code availability

The code used in this paper is publicly available at https://github.com/IamSunday/Multi-defect-type-beam-bridge-dataset-GYU-DET.git. To make the data easier to reuse with other tools, the GitHub repository also provides open-source scripts for converting the “.txt” annotations into JSON and XML formats.
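The repository's own conversion scripts are not reproduced here. As an independent sketch of what a YOLO-to-XML conversion involves, the function below writes a Pascal VOC-style annotation by inverting Eqs. (1)-(4). The function name, the choice of VOC as the XML schema, rounding to whole pixels, clamping to the image, and omitting the 1-based pixel offset some VOC tools expect are all assumptions; class names must be supplied by the caller, for example from “classes.txt”.

```python
import xml.etree.ElementTree as ET


def yolo_to_voc_xml(label_text, filename, width, height, names):
    """Convert the YOLO label lines of one image into a Pascal VOC XML string."""
    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    ET.SubElement(size, "width").text = str(width)
    ET.SubElement(size, "height").text = str(height)
    ET.SubElement(size, "depth").text = "3"  # RGB images
    for line in label_text.splitlines():
        if not line.strip():
            continue
        cls, xc, yc, w, h = line.split()
        # Undo the normalization of Eqs. (1)-(4).
        xc, w = float(xc) * width, float(w) * width
        yc, h = float(yc) * height, float(h) * height
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = names[int(cls)]
        bndbox = ET.SubElement(obj, "bndbox")
        # Round to whole pixels and clamp to the image bounds.
        corners = {
            "xmin": max(0, round(xc - w / 2)),
            "ymin": max(0, round(yc - h / 2)),
            "xmax": min(width, round(xc + w / 2)),
            "ymax": min(height, round(yc + h / 2)),
        }
        for tag, value in corners.items():
            ET.SubElement(bndbox, tag).text = str(value)
    return ET.tostring(root, encoding="unicode")
```

Writing one XML file per image, named after the image, keeps the same one-to-one naming as the “labels” directory.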

References

Ozer, E. & Kromanis, R. Smartphone Prospects in Bridge Structural Health Monitoring, a Literature Review. Sensors 24(11), 3287, https://doi.org/10.3390/s24113287 (2024).

Wu, Y. et al. A study on the ultimate span of a concrete-filled steel tube arch bridge. Buildings 14(4), 896, https://doi.org/10.3390/buildings14040896 (2024).

Xiong, C., Zayed, T. & Abdelkader, E. M. A novel YOLOv8-GAM-Wise-IoU model for automated detection of bridge surface cracks. Construction and Building Materials 414, 135025, https://doi.org/10.1016/j.conbuildmat.2024.135025 (2024).

Zhao, L., Hu, G. & Xu, Y. Educational resource private cloud platform based on OpenStack. Computers 13(9), 241, https://doi.org/10.3390/computers13090241 (2024).

Deng, J. et al. ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition, 248–255 (IEEE), https://doi.org/10.1109/CVPR.2009.5206848 (2009).

Lin, T.-Y. et al. Microsoft COCO: Common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V, 740–755 (Springer International Publishing), https://doi.org/10.1007/978-3-319-10602-1_48 (2014).

Everingham, M. et al. The PASCAL Visual Object Classes (VOC) challenge. International Journal of Computer Vision 88, 303–338, https://doi.org/10.1007/s11263-009-0275-4 (2010).

Zhao, L. et al. A cost-sensitive meta-learning classifier: SPFCNN-Miner. Future Generation Computer Systems 100, 1031–1043, https://doi.org/10.1016/j.future.2019.05.080 (2019).

Bianchi, E. & Hebdon, M. Visual structural inspection datasets. Automation in Construction 139, 104299, https://doi.org/10.1016/j.autcon.2022.104299 (2022).

Eisenbach, M. et al. How to get pavement distress detection ready for deep learning? A systematic approach. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), 2039–2047, https://doi.org/10.1109/IJCNN.2017.7966101 (2017).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, http://arxiv.org/abs/1409.1556 (2014).

Huethwohl, P. Cambridge Bridge Inspection Dataset. Apollo - University of Cambridge Repository, https://doi.org/10.17863/CAM.13813 (2017).

Dorafshan, S., Thomas, R. J. & Maguire, M. SDNET2018: an annotated image dataset for non-contact concrete crack detection using deep convolutional neural networks. Data in Brief 21, 1664–1668, https://doi.org/10.15142/T3TD19 (2018).

Hüthwohl, P., Lu, R. & Brilakis, I. Multi-classifier for reinforced concrete bridge defects. Automation in Construction 105, 102824, https://doi.org/10.1016/j.autcon.2019.04.019 (2019).

Bukhsh, Z. A., Anžlin, A. & Stipanović, I. BiNet: Bridge Visual Inspection Dataset and Approach for Damage Detection. In Proceedings of the 1st Conference of the European Association on Quality Control of Bridges and Structures: EUROSTRUCT 2021, Vol. 1, 1027–1034 (Springer International Publishing), https://doi.org/10.1007/978-3-030-91877-4_117 (2022).

Mandal, V., Uong, L. & Adu-Gyamfi, Y. Automated road crack detection using deep convolutional neural networks. In IEEE International Conference on Big Data (Big Data), 5212–5215, https://doi.org/10.1109/BigData.2018.8622327 (2018).

Mundt, M. et al. Meta-learning convolutional neural architectures for multi-target concrete defect classification with the concrete defect bridge image dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 11196–11205, https://doi.org/10.48550/arXiv.1904.08486 (2019).

Ichi, E., Jafari, F. & Dorafshan, S. SDNET2021: Annotated NDE Dataset for Subsurface Structural Defects Detection in Concrete Bridge Decks. Infrastructures 7(9), 107, https://doi.org/10.3390/infrastructures7090107 (2022).

Arya, D., Maeda, H., Ghosh, S. K., Toshniwal, D. & Sekimoto, Y. RDD2020: an annotated image dataset for automatic road damage detection using deep learning. Data in Brief 36, 107133, https://doi.org/10.1016/j.dib.2021.107133 (2021).

Bianchi, E., Abbott, A. L., Tokekar, P. & Hebdon, M. COCO-bridge: structural detail data set for bridge inspections. Journal of Computing in Civil Engineering 35(3), 04021003, https://doi.org/10.1061/(asce)cp.1943-5487.0000949 (2021).

Bianchi, E. & Hebdon, M. COCO-Bridge 2021+ Dataset. University Libraries, Virginia Tech, https://doi.org/10.7294/16624495.v1 (2021).

Liu, H. Maintenance Methods and Strategies for Existing Bridges in China. Applied and Computational Engineering 89, 178–183, https://doi.org/10.54254/2755-2721/89/20241110 (2024).

Meoni, A. et al. A procedure for bridge visual inspections prioritisation in the context of preliminary risk assessment with limited information. Structure and Infrastructure Engineering 21(3), 394–420, https://doi.org/10.1080/15732479.2023.2210547 (2025).

Zhao, L. & Li, R. GYU-DET. V3. Science Data Bank, https://doi.org/10.57760/sciencedb.19893 (2025).

Khanam, R. & Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv preprint arXiv:2410.17725, https://doi.org/10.48550/arXiv.2410.17725 (2024).

Acknowledgements

The authors would like to thank the anonymous reviewers for their comments that helped improve this paper. This work was supported in part by the Research on the Guizhou Provincial Key Laboratory for Digital Protection, Development and Utilization of Cultural Heritage (ZSYS[2025]012), the Quality Monitoring System for Master's Degree Programs in Electronic Information Based on Analytic Hierarchy Process (2024JG01), the Guiyang City Science and Technology Plan Project ([2024]2-22), the Guizhou Provincial Science and Technology Plan Project (QKTY[2024]017), the Science and Technology Foundation of Guizhou Province (QKHJC-ZK[2023]012, QKHJC-ZK[2022]014), the Action Research on Blended Teaching of Machine Learning under the Curriculum Ideology and Politics Concept in the Field of Big Data [GYU-YG[2025]-06], the Science and Technology Research Program of Chongqing Municipal Education Commission (KJQN202200715), the Guiyang University Multidisciplinary Team Construction Projects in 2025 [Gyxk202505], the Guiyang University Start-up Fund for Recruited Talents Research Project (GYU-KY-2025), the National Natural Science Foundation of China (U23A20341) and IER Foundation 2023 (IERF202304, IERF202205).

Author information

Contributions

Ruiping Li: Writing-original draft, Methodology. Linchang Zhao: Writing-review and editing. Hao Wei: Validation. Bocheng OuYang: Supervision, Investigation. Mu Zhang: Resources. Yongchi Xu: Formal analysis. Guoqing Hu and Jin Tan: Data curation.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, R., Zhao, L., Wei, H. et al. Multi-defect type beam bridge dataset: GYU-DET. Sci Data 12, 1101 (2025). https://doi.org/10.1038/s41597-025-05395-w
