Abstract

Due to limited underground space and poor lighting in coal mines, coal mine images suffer from low contrast, poor clarity and uneven brightness, which severely hinders vision tasks in underground coal mines. Because coal mine dust images exhibit a characteristic black shift, existing defogging methods designed for ground and underwater scenes perform poorly on them. This paper therefore proposes a coal mine dust image defogging method with three-stream and three-channel color balance, designed specifically for restoring degraded coal mine images. The method first performs color balance on the R, G and B channels, respectively, to eliminate the color shift caused by the coal mine environment. It then uses a quad-tree subdivision search algorithm and the dark channel prior to obtain the atmospheric light and transmittance of each of the three color-balanced images. Next, a weighting algorithm fuses the transmittances of the three streams. Finally, the coal mine dust image is defogged according to the haze weather degradation model. Extensive experiments on ground haze, underwater and sand-dust images and on real coal mine images show that our method outperforms state-of-the-art coal mine dust image defogging algorithms and generalizes well.


Introduction

In mining engineering, applications such as smart mining, mine disaster monitoring and robotic rescue require clear, high-quality images. However, because of the coal mine environment, images acquired underground typically suffer from blur, low resolution and low contrast, so they cannot meet the needs of vision tasks in coal mines. Fast, accurate and effective image restoration techniques are therefore needed to improve the sharpness, contrast and color of coal mine images.

Coal mines are a special working environment: shearers generate large amounts of coal dust during production, which produces a smoke effect under illumination1. Cameras capturing images underground are affected by this smoke effect, so the acquired images are typically dusty and foggy. Because the limited underground space and poor lighting differ markedly from surface conditions, coal mine dust images are more prone to blur and have lower resolution and contrast than conventional ground haze images (as shown in Fig. 1), and traditional defogging methods cannot restore them effectively.

There have been several attempts to restore and enhance degraded coal mine images1,2,3,4. Wu et al.1 combined the dark channel prior with the Contrast Limited Adaptive Histogram Equalization (CLAHE)5 algorithm to enhance coal mine dust images: they used the quad-tree subdivision search method6 to estimate the atmospheric light and then applied CLAHE to enhance the image according to the special conditions of coal mines. Although the dust fog is effectively removed, problems such as reduced resolution and color distortion remain. Li et al.2 proposed a cuckoo search-based image enhancement method that significantly improved the brightness and contrast of coal mine images and made them more distinguishable; however, the method cannot defog images. Shang3 blurred coal mine images with Gaussian white noise and restored them with a BP neural network trained using K-fold cross-validation, without considering that the real environment is far more complex than Gaussian white noise. Enhancement is learned from pairs of blurred and clear images, so the method requires many training samples and a long training time. Although the above methods enhance coal mine images, none of them is a restoration method designed specifically for dust-affected images. Tian et al.4 noticed this problem and proposed a coal mine image enhancement algorithm based on dual-domain decomposition that defogs the low-frequency component, thereby eliminating the effect of dust on the images. However, the method simply applies a traditional defogging algorithm to the low-frequency images and ignores the color shift that distinguishes coal mine dust images from ground images. As a result, the recovered images suffer from color distortion and the defogging is unsatisfactory.
To address these problems and obtain clearer, more realistic restorations of coal mine dust images, this paper proposes a coal mine image restoration method with three-stream and three-channel color balance, which quickly and accurately eliminates the color shift and dust effects in coal mine images. The main contributions of this paper are as follows:

- We propose a three-channel color balance algorithm that produces color-balanced images preserving the mean of the R, G and B channels, respectively, thereby eliminating the gray shift caused by coal mine dust.

- We propose a three-stream atmospheric light estimation algorithm based on quad-tree subdivision search for image defogging.

- We propose a distance-level-based weight estimation method for fusing the three-stream transmittances.

Related work

Color balance

Differences in the sensitivity of color channels, light enhancement factors and offsets are the main causes of color imbalance in images, and color balancing corrects such imbalanced images. In 1980, Buchsbaum7 developed an integrated mathematical model for color correction based on the principle of color constancy. Van de Weijer et al.8 proposed color constancy algorithms based on the gray-edge hypothesis; their framework combines known algorithms (e.g., gray world, max-RGB and Minkowski parametrization) with newly proposed, higher-order gray-edge algorithms to achieve color correction. To recover color images more simply and effectively, Liu et al.9 improved the gray world method by computing weights that match the average color values to gray reference values in the YUV color space, thereby restoring image color. Although these are classical color correction algorithms, they are not directly suitable for correcting the color shifts or casts of dust images. Fu et al.10 performed color correction based on a statistical strategy; the method works well on images with slight color shifts but has no effect on images with severe color shifts. Wang et al.11 corrected color casts by combining the two chromaticity components of the Lab color space, but the corrected images tend to show a blue cast. More recently, to overcome the shortcomings of the above algorithms, Park et al.12 proposed a successive color balance algorithm for removing the color veil of images, which balances the R and B channels according to the color distribution characteristics of yellow sand images. Although this method effectively removes color veils, it needs further improvement to handle the black veil of coal mine images.

Image defogging

Existing defogging methods mainly include prior-based methods13,14,15, fusion-based methods16,17,18, and learning-based methods19,20,21,22. Ancuti et al.13 proposed a fast semi-inverse defogging method that applies a per-pixel operation to the original image to generate its “semi-inverse”, and then estimates the atmospheric light and transmittance from the hue difference between the original image and its semi-inverse. Although this method defogs images to some extent, its effectiveness can still be improved. For more accurate and effective defogging, He et al.14 proposed the dark channel prior (DCP) for single-image defogging, which is simple yet remarkably effective and brought great progress to the field. However, the prior does not hold in bright regions of the scene. Berman et al.15 therefore proposed a non-local prior based on the color clusters that hazy and haze-free images form in RGB space, recovering the clear image from the haze lines formed by these clusters. However, these prior-based methods only work well in predetermined scenarios23.

Ancuti et al.16 derived two input images from the original hazy image through white balancing and contrast enhancement and fused their important features, demonstrating the applicability and effectiveness of fusion-based techniques for image defogging. Gao et al.18 proposed a self-constructing image fusion method for single-image defogging that fuses self-constructed images with different exposure levels. Although fusion-based methods do not require a predetermined scene, their defogging performance is poor when the fog is dense.

Learning-based approaches have been proposed in recent years to address the drawbacks of prior- and fusion-based methods. Cai et al.19 first proposed an end-to-end network, DehazeNet, which takes hazy images as input to estimate the transmittance and recovers haze-free images through the physical scattering model. Because most defogging methods estimate the transmittance and atmospheric light in separate pipelines, which reduces efficiency and accumulates errors, Zhu et al.20 introduced a new synthetic generator and a depth-supervised discriminator, so that the transmittance and atmospheric light are learned directly from data and a clear image is recovered from a hazy image end to end. Engin et al.21 also proposed an end-to-end defogging network, Cycle-Dehaze. Unlike the work in19,20, Cycle-Dehaze does not rely on the atmospheric scattering model to estimate parameters; instead, it improves the restoration of texture information and generates visually better haze-free images by combining cycle-consistency and perceptual losses. To remove impurities more comprehensively, Zhang et al.22 decomposed images into basic content and detail features that are processed separately: two parallel branches learn the basic content and detail features of hazy images, and defogging is achieved by dynamically fusing the two. Most learning-based methods are trained on pairs of clear and synthetic hazy images of the same scene, which limits their defogging ability in real situations23. In addition, model training is time-consuming, and the robustness and accuracy of the models depend heavily on the size of the training set.

Building on this related work, we propose a fast, accurate and highly robust method for defogging coal mine dust images that overcomes the shortcomings of existing methods and addresses the problems specific to coal mine images.

Methods

Our proposed coal mine dust image defogging model mainly consists of two parts: three-channel color balance and three-stream image defogging, and the structure of the coal mine dust image defogging model is shown in Fig. 2.

We now introduce the three-channel color balance and three-stream image defogging modules in turn.

Three-channel color balance

Before color balancing coal mine dust images, we analyze their color distribution. At capture time, image color is affected by the coal mine environment (i.e., low light intensity and black coal dust), so the captured images are usually dark. Because black absorbs all colors, coal mine dust images, unlike other color-shifted images, require color enhancement in the R, G and B channels simultaneously.

We first perform the initial color correction using weighted color compensation as shown in Eq. (1):

where \(I_1^c(x)\) is the pixel after initial color correction; \(c\) is the color channel, i.e., \(c \in \{r,g,b\}\); \(I^c(x)\) is the corresponding color channel of the input mine image; \(I^{\{r,g,b\}}(x)\) denotes \(I^r(x)\), \(I^g(x)\) and \(I^b(x)\), respectively; and \(\Delta^c\) is the weight of the corresponding color pixel, given by Eq. (2):

where \(\delta_{md}^c\) and \(\delta_{sr}^c\) are global weighting factors based on the mean difference and the standard deviation ratio, respectively, obtained from Eqs. (3) and (4), and \(\delta_{wm}^c(x)\) is a pixel-dependent local weighting factor that corrects color according to the intensity of the color pixel, as shown in Eq. (5).

In Eqs. (3) and (4), \(m(\cdot)\) and \(\sigma(\cdot)\) denote the mean and standard deviation operations, respectively, and \(k\) in Eq. (5) is a control parameter calculated by Eq. (6):

Then, given the characteristics of coal mine dust images, the initially color-corrected images are normalized so that the corrected colors look natural. To this end, we propose an image normalization method that preserves the color mean of each channel.

According to the gray world assumption7, the mean values of the R, G and B channels, taken over the whole image, are approximately equal, i.e., the image averages to gray. The normalization method that preserves the color mean of each channel can then be expressed as:

Image normalization removes the gray veil from the coal mine image and corrects its color. Moreover, Eq. (7) shows that the normalization depends on the initial color correction, which is therefore crucial for color balance.
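The exact normalization of Eq. (7) is not spelled out in the prose, so the following is only a minimal sketch under one assumed reading: for stream \(c\), every channel is rescaled by a gain so that its mean matches the mean of channel \(c\) (0 = R, 1 = G, 2 = B), giving three mean-preserving color-balanced images. This is a gray-world-style gain correction7, not the authors' formula; the function names, the list-of-pixels representation and the clipping to [0, 255] are assumptions.

```python
from statistics import fmean
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B)


def channel_means(img: List[Pixel]) -> Tuple[float, float, float]:
    """Mean of each colour channel over all pixels."""
    r, g, b = (fmean(p[c] for p in img) for c in range(3))
    return (r, g, b)


def normalize_to_channel_mean(img: List[Pixel], ref: int, max_val: float = 255.0) -> List[Pixel]:
    """ASSUMED reading of mean-preserving normalization: scale every channel so
    that its mean equals the mean of channel `ref` (0 = R, 1 = G, 2 = B)."""
    means = channel_means(img)
    target = means[ref]
    # Per-channel gains; a channel whose mean is 0 is left unchanged.
    gains = [target / m if m > 0 else 1.0 for m in means]
    out: List[Pixel] = []
    for p in img:
        r, g, b = (min(max(p[c] * gains[c], 0.0), max_val) for c in range(3))
        out.append((r, g, b))
    return out


def three_streams(img: List[Pixel]) -> List[List[Pixel]]:
    """One balanced image per reference channel: R-, G- and B-mean preserving."""
    return [normalize_to_channel_mean(img, ref) for ref in range(3)]


if __name__ == "__main__":
    dusty = [(40.0, 20.0, 10.0), (60.0, 30.0, 30.0)]  # toy two-pixel image
    for name, stream in zip("RGB", three_streams(dusty)):
        print(name, [round(m, 3) for m in channel_means(stream)])
```

Clipping can shift a channel mean slightly whenever a gain pushes values past 255, so a full implementation would have to decide how to handle saturated pixels.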

Three-stream image defogging module

The three-stream image defogging module is based on the dark channel prior14, and the haze weather degradation model14 is given by Eq. (8):

\[ I(x,y) = J(x,y)\,t(x,y) + A\left(1 - t(x,y)\right) \qquad (8) \]

In Eq. (8), \(I(x,y)\) is the captured hazy image; \(J(x,y)\) is the clear image to be restored; \(t(x,y)\) is the scene transmittance, which describes how much of the scene radiance passes through the air medium to the imaging device; and \(A\) is the ambient atmospheric light. A clear, haze-free image can be recovered once the transmittance and atmospheric light of the hazy image have been estimated.

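According to the dark channel prior, the transmittance \(t(x,y)\) can be expressed as:

\[ t(x,y) = 1 - \omega \min_{c \in \{r,g,b\}} \left( \min_{(x',y') \in \Omega(x,y)} \frac{I^c(x',y')}{A^c} \right) \qquad (9) \]

where \(\Omega(x,y)\) is a local patch centered at \((x,y)\), \(A^c\) is the component of \(A\) in channel \(c\), and \(\omega\) (\(0 < \omega \le 1\)) retains a small amount of haze so that distant objects still look natural; He et al.14 set \(\omega = 0.95\).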
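As a sketch of how the dark-channel transmittance of Eq. (9) can be evaluated, the pure-Python code below takes the minimum of \(I^c/A^c\) over the three channels and over a square patch, then maps it to \(t = 1 - \omega \cdot\) dark channel with \(\omega = 0.95\) as in He et al.14. The default patch radius of 7 (a 15 × 15 window, the size He et al. report), the clipping of patches at the image border, the list-of-rows image layout and the function names are assumptions; practical code would use an OpenCV or NumPy min filter instead of nested loops.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]  # rows of (R, G, B) pixels
Map = List[List[float]]


def normalized_dark_channel(img: Image, A: Sequence[float], radius: int) -> Map:
    """min over the patch Omega(x, y) and over channels c of I^c / A^c.
    Patches are clipped at the image border."""
    h, w = len(img), len(img[0])
    # Minimum over colour channels first; the order of the two minima does not matter.
    pix_min = [[min(img[y][x][c] / A[c] for c in range(3)) for x in range(w)]
               for y in range(h)]
    dark: Map = []
    for y in range(h):
        rows = range(max(0, y - radius), min(h, y + radius + 1))
        dark_row = []
        for x in range(w):
            cols = range(max(0, x - radius), min(w, x + radius + 1))
            dark_row.append(min(pix_min[yy][xx] for yy in rows for xx in cols))
        dark.append(dark_row)
    return dark


def dcp_transmission(img: Image, A: Sequence[float], omega: float = 0.95,
                     radius: int = 7) -> Map:
    """t(x, y) = 1 - omega * (dark channel of I / A)."""
    return [[1.0 - omega * d for d in row]
            for row in normalized_dark_channel(img, A, radius)]


if __name__ == "__main__":
    hazy = [[(0.2, 0.5, 0.6), (0.9, 0.8, 0.7)],
            [(0.4, 0.4, 0.4), (1.0, 1.0, 1.0)]]
    print(dcp_transmission(hazy, A=(1.0, 1.0, 1.0), radius=0))
```

With `radius=0` the patch is the pixel itself, which makes the per-pixel arithmetic easy to check; larger radii produce the blocky maps that He et al. refine before use.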

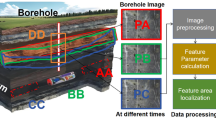

Eq. (9) shows that the atmospheric light A must be estimated before the transmittance \(t(x,y)\) can be obtained. To this end, we introduce the quad-tree subdivision search algorithm6 to estimate the atmospheric light of the image. The estimation proceeds as follows:

Step 1: Divide the input hazy image into four rectangular blocks;

Step 2: For each block, compute the difference between the pixel mean and the pixel variance;

Step 3: Select the block with the largest value among the four;

Step 4: Repeat Steps 1–3 on the selected block until its size falls below a preset threshold;

Step 5: In the final block, select the pixel that minimizes \(\left\| \left( I_r(x,y), I_g(x,y), I_b(x,y) \right) - (255,255,255) \right\|\).

The estimation process of A is shown in Fig. 3.

In Fig. 3, the red area is the selected region, and the channel-wise average of this region is taken as the atmospheric light: \(A = [A_r, A_g, A_b] = [\overline{R}, \overline{G}, \overline{B}]\).
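The quad-tree search of Steps 1–5 can be sketched as follows. Two points are assumptions: the block score uses the mean minus the standard deviation of gray values, as in the original quad-tree method of Kim et al.6 (Step 2 above says variance; swap `pstdev` for `statistics.pvariance` if that is intended), and the size threshold `min_size` is a hypothetical parameter. Both the Step 5 pixel and the block mean used with Fig. 3 are provided, since the text describes each.

```python
from statistics import fmean, pstdev
from typing import List, Tuple

Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]  # rows of (R, G, B) pixels in [0, 255]


def _block_score(block: Image) -> float:
    """Mean minus standard deviation of the gray values in a block (Kim et al. style)."""
    grays = [sum(p) / 3.0 for row in block for p in row]
    return fmean(grays) - pstdev(grays)


def quadtree_block(img: Image, min_size: int = 32) -> Image:
    """Steps 1-4: split into four quadrants, keep the best-scoring one, and repeat
    until the block height or width is no longer above `min_size` (must be >= 1)."""
    block = img
    while len(block) > min_size and len(block[0]) > min_size:
        hh, hw = len(block) // 2, len(block[0]) // 2
        quadrants = [
            [row[:hw] for row in block[:hh]],  # top-left
            [row[hw:] for row in block[:hh]],  # top-right
            [row[:hw] for row in block[hh:]],  # bottom-left
            [row[hw:] for row in block[hh:]],  # bottom-right
        ]
        block = max(quadrants, key=_block_score)
    return block


def airlight_closest_to_white(block: Image) -> Pixel:
    """Step 5: pixel minimising ||(I_r, I_g, I_b) - (255, 255, 255)||."""
    return min((p for row in block for p in row),
               key=lambda p: sum((255.0 - v) ** 2 for v in p))


def airlight_block_mean(block: Image) -> Pixel:
    """Fig. 3 variant: channel-wise mean of the selected block."""
    pixels = [p for row in block for p in row]
    r, g, b = (fmean(p[c] for p in pixels) for c in range(3))
    return (r, g, b)
```

Because the score penalizes spread, a small isolated highlight (for example a lamp) inside an otherwise dark quadrant does not attract the search, which is why this approach is preferred over simply taking the brightest pixel.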

Finally, the transmittance \(t(x,y)\) is estimated from Eq. (9) using the atmospheric light A.

To recover a clearer coal mine image, we propose a weight estimation method based on the distance level (i.e., the difference between one value in a series and the series mean, which may be positive or negative) for fusing the transmittances of the three-stream images, as shown in Eq. (10):

In Eq. (10), \(t_f(x,y)\), \(t_r(x,y)\), \(t_g(x,y)\) and \(t_b(x,y)\) denote the transmittance of the original image and of the color-corrected images that preserve the mean of the R, G and B channels, respectively, and \(n_r\), \(n_g\) and \(n_b\) are the corresponding fusion coefficients, calculated as shown in Eq. (11):

where \(t_m(x,y)\) is the mean of \(t_r(x,y)\), \(t_g(x,y)\) and \(t_b(x,y)\), and \(t_d(x,y)\) is the difference between \(t_r(x,y)\) (or \(t_g(x,y)\) or \(t_b(x,y)\)) and the mean \(t_m(x,y)\).
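The exact coefficients of Eq. (11) are not spelled out in the prose, so the sketch below only illustrates the ingredients defined above: the per-pixel mean \(t_m\) of the three stream transmittances and their signed distance levels \(t_d\). The fusion rule in `fuse_inverse_distance` is a hypothetical stand-in (inverse-distance weights normalized to sum to one, so a stream far from the mean counts less) and should not be read as the paper's \(n_r\), \(n_g\), \(n_b\); the role of \(t_f\) in Eq. (10) is also left out.

```python
from typing import List, Tuple

Map = List[List[float]]  # per-pixel transmittance values


def mean_and_distance(t_r: Map, t_g: Map, t_b: Map) -> Tuple[Map, List[Map]]:
    """Per-pixel mean t_m and signed distance levels t_d = t_c - t_m for c in (r, g, b)."""
    t_m = [[(a + b + c) / 3.0 for a, b, c in zip(ra, rg, rb)]
           for ra, rg, rb in zip(t_r, t_g, t_b)]
    t_d = [[[v - m for v, m in zip(row, m_row)] for row, m_row in zip(t_c, t_m)]
           for t_c in (t_r, t_g, t_b)]
    return t_m, t_d


def fuse_inverse_distance(t_r: Map, t_g: Map, t_b: Map, eps: float = 1e-6) -> Map:
    """HYPOTHETICAL fusion rule: weight n_c proportional to 1 / (|t_d^c| + eps),
    normalised per pixel so that the three weights sum to one."""
    _, t_d = mean_and_distance(t_r, t_g, t_b)
    streams = (t_r, t_g, t_b)
    fused: Map = []
    for y in range(len(t_r)):
        row = []
        for x in range(len(t_r[0])):
            inv = [1.0 / (abs(t_d[c][y][x]) + eps) for c in range(3)]
            total = sum(inv)
            row.append(sum(inv[c] / total * streams[c][y][x] for c in range(3)))
        fused.append(row)
    return fused
```

For a pixel with stream values 0.6, 0.6 and 0.9, the mean is 0.7 and the distance levels are -0.1, -0.1 and +0.2, so this stand-in rule gives the outlying B stream half the weight of the other two.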

Finally, the defogged coal mine dust image is obtained from the fused transmittance \(t(x,y)\) and the atmospheric light A by inverting the haze weather degradation model of Eq. (8).
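Inverting the haze model \(I = Jt + A(1 - t)\) gives \(J = (I - A)/t + A\). The sketch below follows He et al.14 in bounding the transmittance from below by \(t_0 = 0.1\) so that noise is not amplified where \(t\) is tiny; that bound, the clipping to [0, 255] and the function names are assumptions rather than details stated for this method. `apply_haze_model` implements the forward model and is included only so the round trip can be checked.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]
Map = List[List[float]]


def apply_haze_model(J: Image, t: Map, A: Sequence[float]) -> Image:
    """Forward model: I = J * t + A * (1 - t), per pixel and channel."""
    return [[tuple(p[c] * tv + A[c] * (1.0 - tv) for c in range(3))
             for p, tv in zip(j_row, t_row)]
            for j_row, t_row in zip(J, t)]


def recover_radiance(I: Image, t: Map, A: Sequence[float],
                     t0: float = 0.1, max_val: float = 255.0) -> Image:
    """Inverse model: J = (I - A) / max(t, t0) + A, clipped to [0, max_val]."""
    out: Image = []
    for i_row, t_row in zip(I, t):
        out_row = []
        for p, tv in zip(i_row, t_row):
            tt = max(tv, t0)  # lower bound keeps dense-haze regions from blowing up
            out_row.append(tuple(min(max((p[c] - A[c]) / tt + A[c], 0.0), max_val)
                                 for c in range(3)))
        out.append(out_row)
    return out


if __name__ == "__main__":
    A = (200.0, 200.0, 200.0)
    hazy = apply_haze_model([[(100.0, 50.0, 20.0)]], [[0.5]], A)
    print(hazy, recover_radiance(hazy, [[0.5]], A))
```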

Experimental

Our method is implemented in Python 3.8 with OpenCV on an NVIDIA GeForce RTX 2080 Ti GPU. We first apply it to existing ground haze images, underwater images, sand-dust images and real coal mine dust images: the ground haze images verify the generality of the method, the underwater and sand-dust images verify its effectiveness on color-shifted images, and the coal mine dust images verify its practicality and accuracy. We then compare our method with representative methods and evaluate the quality of the restored mine images. Finally, a series of ablation studies validates each module of the method.

Experimental results

We apply our method to existing ground haze images, underwater images and sand-dust images and compare it with the dark channel prior (DCP)14 and the recent defogging methods IDE24 and RIDCP25; the results are shown in Fig. 4.

As shown in Fig. 4, DCP, IDE, RIDCP and our method all defog the ground haze images; our method outperforms DCP, but IDE performs better than ours. For color-shifted images such as underwater and sand-dust images, DCP has no significant defogging effect, and IDE shows neither good defogging nor good color correction. In contrast, our method and RIDCP effectively remove the haze-induced blur, enhance contrast, highlight details and yield better restorations, which verifies the generality and effectiveness of our method.

We also defog four sets of coal mine dust images and compare the results with existing coal mine dust image defogging methods (our own reimplementations) and with the recent defogging methods IDE and RIDCP; the results are shown in Fig. 5.

In Fig. 5, DCP, DCP&CLA, MIE, IDE, RIDCP and our method all defog the original coal mine images to some extent, but the results vary greatly. DCP and IDE perform worst among the six methods. DCP&CLA and MIE defog better than DCP and IDE, but excessive enhancement lowers resolution and distorts color, giving poor visual quality. RIDCP recovers the images better than DCP, DCP&CLA, MIE and IDE, but dust and fog effects remain. Our method achieves the best defogging while avoiding color distortion and loss of resolution, demonstrating its practicality for real coal mine image restoration. Notably, IDE and RIDCP are recent ground image defogging methods: Fig. 5 shows that IDE brings no advantage on coal mine images, failing to defog them and introducing a color shift, and RIDCP also fails to dehaze well because of the black shift of the underground environment. This further demonstrates the need for our method.

Quality evaluation

Evaluation metrics for image restoration generally fall into two categories: full-reference and no-reference. Because real-world haze-free reference images are unavailable for comparing the proposed method with existing methods, this paper uses no-reference evaluation. We adopt three no-reference metrics: \(e\), the rate of newly visible edges in the restored image; \(\overline{r}\), the quality of contrast restoration in the restored image; and \(\sigma\), the proportion of pixels that become completely white or black through overexposure or underexposure in the restored image. Following26, \(e\), \(\overline{r}\) and \(\sigma\) are calculated as follows:

\[ e = \frac{V_r - V_o}{V_o} \qquad (12) \]

\[ \overline{r} = \exp\left( \frac{1}{V_r} \sum_{P_i \in \wp_r} \log r_i \right) \qquad (13) \]

\[ \sigma = \frac{V_s}{\dim_x \dim_y} \qquad (14) \]

In Eq. (12), \(V_r\) and \(V_o\) are the cardinalities of the sets of visible edges in the restored image and the original image, respectively. In Eq. (13), \(P_i\) is an element of the set \(\wp_r\) of visible edges in the restored image, and \(r_i\) is the gradient ratio between the restored image and the original hazy image at \(P_i\). In Eq. (14), \(V_s\) is the total number of overexposed and underexposed pixels in the restored image, and \(\dim_x \dim_y\) is the size of the input image. Larger values of \(e\) and \(\overline{r}\), and smaller values of \(\sigma\), indicate better restoration.
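Once the edge statistics are available, the three metrics are simple arithmetic: \(e = (V_r - V_o)/V_o\), \(\overline{r}\) is the geometric mean of the gradient ratios \(r_i\) over the visible edges of the restored image, and \(\sigma = V_s/(\dim_x \dim_y)\). The sketch assumes visible-edge detection and the per-edge ratios \(r_i\) have already been computed elsewhere (that detection step is the hard part and is not shown); the function names are hypothetical.

```python
import math
from typing import Sequence


def visible_edge_rate(v_o: int, v_r: int) -> float:
    """e = (V_r - V_o) / V_o: relative gain in visible edges after restoration."""
    return (v_r - v_o) / v_o


def mean_gradient_ratio(ratios: Sequence[float]) -> float:
    """r-bar = exp((1 / V_r) * sum(log r_i)): geometric mean of the gradient
    ratios r_i over the V_r visible edges of the restored image."""
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


def saturation_rate(v_s: int, dim_x: int, dim_y: int) -> float:
    """sigma = V_s / (dim_x * dim_y): fraction of pixels saturated to black or white."""
    return v_s / (dim_x * dim_y)


if __name__ == "__main__":
    print(visible_edge_rate(200, 300), mean_gradient_ratio([2.0, 8.0]),
          saturation_rate(30, 100, 60))
```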

Using these three no-reference metrics, we evaluated the restoration quality of the proposed method and of existing coal mine image restoration methods; the results for the original images of Groups 1–4 in Fig. 5 are shown in Tables 1, 2, 3 and 4.

Bold entries in the tables indicate the best results. Tables 1, 2, 3 and 4 show that our method restores coal mine dust images better than the other coal mine dust image restoration methods.

To investigate the effectiveness of the proposed three-channel color balance and three-stream defogging modules, we design a series of ablation experiments that verify the effect of each module on the defogging of coal mine dust images.

Ablation studies

First, to validate the three-channel color balance module, we remove it from the model and directly estimate the transmittance and atmospheric light from the original coal mine image using the dark channel prior and the quad-tree subdivision search algorithm6 (as shown in Fig. 6). The image is then restored from this transmittance and atmospheric light; the original images and the corresponding restored images are shown in Fig. 7.

As Fig. 7 shows, removing the three-channel color balance module degrades defogging compared with our full model: without color balance, the image is affected by the three-channel color shift, which yields a smaller atmospheric light and impairs restoration. This shows that the color balance module plays an important role in our method.

We also designed an ablation experiment for the three-stream image dehazing module, in which the three-stream branches are removed and the color-balanced image is passed through a single dehazing branch to obtain the transmittance and atmospheric light (as shown in Fig. 8). The image is restored from this transmittance and atmospheric light; the original images and the corresponding restored images are shown in Fig. 9.

In Fig. 9, both our full model and the variant without three-stream branches dehaze the original coal mine images, but the variant dehazes noticeably less well because a single transmittance limits the model's defogging capability. The comparison shows that the three-stream branches are also key to high-quality restoration in our method.

To show more directly how the three-channel color balance module and the three-stream branches contribute to restoring coal mine dust images, we quantitatively evaluate the images restored after removing each of them. The results, shown in Tables 5, 6, 7 and 8, further confirm the contribution of both components.

Limitations and future work

Although the proposed method performs well in coal mine image restoration, it fails to dehaze some ground images, as shown in Fig. 10.

As Fig. 10 shows, our method produces color distortion and weak dehazing on the original image in the figure. By comparing the restoration results of our method on coal mine and ground images, we identify two main causes of this behavior:

(1) Our color balance module is designed for the gray-shifted images specific to mines and enhances all three color channels (R, G and B). When it is applied to ground images with a monochromatic color shift, it may over-enhance them, causing color distortion in the restored image.

(2) Our method assumes that fog is evenly distributed over the image and does not account for the distribution of fog density. Images with non-uniform fog density are therefore poorly defogged.

Solving these two problems will be the main focus of our future work.

Conclusion

We propose a coal mine image restoration method with three-stream and three-channel color balance. First, the gray color shift of mine images caused by lighting and dust is eliminated by preserving the R, G and B channel means, respectively. Then, the atmospheric light and transmittance of the three color-balanced images are obtained with the quad-tree subdivision search and the dark channel prior, respectively. Next, a distance-level-based weighting algorithm is designed to fuse the three-stream atmospheric light and transmittance. Finally, the coal mine dust image is defogged with the fused transmittance to restore the coal mine image. We conducted extensive experiments on ground, sand-dust, underwater and real coal mine dust images. The restoration experiments on ground, sand-dust and underwater images demonstrate the generality of our approach, and the experiments on real coal mine dust images, together with comparisons against other methods, show that our method meets the practical requirements of coal mine dust image defogging and restores images better than existing methods.

Data availability

The datasets used in the present study are available from https://github.com/caopping/DehazingCoalMineDustImages.git in the archive Allimage.zip.

References

Wu, D. & Zhang, S. Research on image enhancement algorithm of coal mine dust. In 2018 International Conference on Sensor Networks and Signal Processing (SNSP), 261–265, https://doi.org/10.1109/SNSP.2018.00057 (2018).

Li, C., Liu, J., Zhu, J., Zhang, W. & Bi, L. Mine image enhancement using adaptive bilateral gamma adjustment and double plateaus histogram equalization. Multimed. Tools Appl. 81, 12643–12660. https://doi.org/10.1007/s11042-022-12407-z (2022).

Shang, C. A novel analyzing method to coal mine image restoration. In Proceedings of the 2015 Asia-Pacific Energy Equipment Engineering Research Conference, Zhuhai, China, 13–14, https://doi.org/10.2991/ap3er-15.2015.67 (2015).

Tian, Z., Wang, M.-L., Jun, W., Gui, W. & Wang, W. Mine image enhancement algorithm based on dual domain decomposition. Acta Photonica Sin. 48, 0510001 (2019).

Pisano, E. D. et al. Contrast limited adaptive histogram equalization image processing to improve the detection of simulated spiculations in dense mammograms. J. Digit. Imaging 11, 193–200. https://doi.org/10.1007/BF03178082 (1998).

Kim, J.-H., Jang, W.-D., Sim, J.-Y. & Kim, C.-S. Optimized contrast enhancement for real-time image and video dehazing. J. Vis. Commun. Image Represent. 24, 410–425. https://doi.org/10.1016/j.jvcir.2013.02.004 (2013).

Buchsbaum, G. A spatial processor model for object colour perception. J. Frankl. Inst. 310, 1–26. https://doi.org/10.1016/0016-0032(80)90058-7 (1980).

Van De Weijer, J., Gevers, T. & Gijsenij, A. Edge-based color constancy. IEEE Trans. Image Process. 16, 2207–2214. https://doi.org/10.1109/TIP.2007.901808 (2007).

Liu, C., Chen, X. & Wu, Y. Modified grey world method to detect and restore colour cast images. IET Image Proc. 13, 1090–1096. https://doi.org/10.1049/iet-ipr.2018.5523 (2019).

Fu, X., Huang, Y., Zeng, D., Zhang, X.-P. & Ding, X. A fusion-based enhancing approach for single sandstorm image. In 2014 IEEE 16th International Workshop on Multimedia Signal Processing (MMSP), 1–5, https://doi.org/10.1109/MMSP.2014.6958791 (2014).

Wang, J., Pang, Y., He, Y. & Liu, C. Enhancement for dust-sand storm images. In International Conference on Multimedia Modeling, 842–849, https://doi.org/10.1007/978-3-319-27671-7_70 (2016).

Park, T. H. & Eom, I. K. Sand-dust image enhancement using successive color balance with coincident chromatic histogram. IEEE Access 9, 19749–19760. https://doi.org/10.1109/ACCESS.2021.3054899 (2021).

Ancuti, C. O., Ancuti, C., Hermans, C. & Bekaert, P. A fast semi-inverse approach to detect and remove the haze from a single image. In Asian Conference on Computer Vision, 501–514, https://doi.org/10.1007/978-3-642-19309-5_39 (2010).

He, K., Sun, J. & Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33, 2341–2353. https://doi.org/10.1109/TPAMI.2010.168 (2010).

Berman, D. et al. Non-local image dehazing. In Proceedings of the IEEE conference on computer vision and pattern recognition, 1674–1682 (2016).

Ancuti, C. O. & Ancuti, C. Single image dehazing by multi-scale fusion. IEEE Trans. Image Process. 22, 3271–3282. https://doi.org/10.1109/TIP.2013.2262284 (2013).

Galdran, A. Image dehazing by artificial multiple-exposure image fusion. Signal Process. 149, 135–147. https://doi.org/10.1016/j.sigpro.2018.03.008 (2018).

Gao, Y., Su, Y., Li, Q., Li, H. & Li, J. Single image dehazing via self-constructing image fusion. Signal Process. 167, 107284. https://doi.org/10.1016/j.sigpro.2019.107284 (2019).

Cai, B., Xu, X., Jia, K., Qing, C. & Tao, D. DehazeNet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 25, 5187–5198. https://doi.org/10.1109/TIP.2016.2598681 (2016).

Zhu, H. et al. Single-image dehazing via compositional adversarial network. IEEE Trans. Cybern. 51, 829–838. https://doi.org/10.1109/TCYB.2019.2955092 (2019).

Engin, D., Genç, A. & Kemal Ekenel, H. Cycle-Dehaze: Enhanced CycleGAN for single image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 825–833, https://doi.org/10.48550/arXiv.1805.05308 (2018).

Zhang, X., Jiang, R., Wang, T. & Luo, W. Single image dehazing via dual-path recurrent network. IEEE Trans. Image Process. 30, 5211–5222. https://doi.org/10.1109/TIP.2021.3078319 (2021).

Guo, Y. et al. SCANet: Self-paced semi-curricular attention network for non-homogeneous image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1885–1894, https://doi.org/10.48550/arXiv.2304.08444 (2023).

Huang, S.-C., Ye, J.-H. & Chen, B.-H. An advanced single-image visibility restoration algorithm for real-world hazy scenes. IEEE Trans. Ind. Electron. 62, 2962–2972. https://doi.org/10.1109/TIE.2014.2364798 (2014).

Wu, R.-Q., Duan, Z.-P., Guo, C.-L., Chai, Z. & Li, C. RIDCP: Revitalizing real image dehazing via high-quality codebook priors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 22282–22291, https://doi.org/10.48550/arXiv.2304.03994 (2023).

Shao, Y., Li, L., Ren, W., Gao, C. & Sang, N. Domain adaptation for image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2808–2817, https://doi.org/10.48550/arXiv.2005.04668 (2020).

Acknowledgements

This work is supported by the Doctoral research start-up fee of Cao Pingping for 2024 No.2024KYQD0055 and Fuyang Normal University Youth Talent key project (rcxm202303).

Author information


Contributions

Cao Pingping conceived and designed the method and experiment, Wang Xianchao and Li Linguo conducted the experiment, and Liu Mingjun and Wang Mengting analyzed the results. All authors have reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Cao, P., Wang, X., Li, L. et al. An improved and advanced method for dehazing coal mine dust images. Sci Rep 15, 11235 (2025). https://doi.org/10.1038/s41598-025-95912-z


DOI: https://doi.org/10.1038/s41598-025-95912-z