Abstract

Digital information has permeated all aspects of life, and diverse forms of information exert a profound influence on social interactions and cognitive perceptions. In contrast to the flourishing of digital interaction devices for sighted users, the needs of blind and partially sighted users for digital interaction devices have not been adequately addressed. Current assistive devices often cause frustration in blind and partially sighted users owing to the limited efficiency and reliability of information delivery and the high cognitive load associated with their use. The expected rise in the prevalence of blindness and visual impairment due to global population ageing drives an urgent need for assistive devices that can deliver information effectively and non-visually, and thereby overcome the challenges faced by this community. This Perspective presents three potential directions in assistive device design: multisensory learning and integration; gestural interaction control; and the synchronization of tactile feedback with large-scale visual language models. Future trends in assistive devices for use by blind and partially sighted people are also explored, focusing on metrics for text delivery efficiency and the enhancement of image content delivery. Such devices promise to greatly enrich the lives of blind and partially sighted individuals in the digital age.

Introduction

Vision impairment is a major global issue that affects 2.2 billion people (27.5% of the world’s population). Of these individuals, 70% have vision impairment and 2% have blindness1. With their close links to ageing, the prevalence of both visual impairment and blindness is expected to increase rapidly as population ageing rates accelerate globally2. For the rapidly increasing population of individuals with impaired vision, the already ubiquitous digital information needed for education, social interaction and entertainment purposes can be very challenging to access, as nearly all current digital interactions rely on vision3,4. This reliance results in unintended exclusion and unfair treatment of blind and partially sighted people. Therefore, there is an urgent need for assistive technologies that enable digital interaction for these groups of individuals. Such devices could potentially enhance the daily quality of life for blind and partially sighted individuals, improve their employment opportunities and prospects, and promote increased social inclusivity and equality.

For blind and partially sighted individuals, interacting with digital information poses several challenges. People with impaired vision need to utilize non-visual methods of information delivery, including auditory, tactile and multimodal interactions, to facilitate comprehension of its content. These difficulties are further compounded when considering the distinct needs of different age groups. Older adults (aged ≥60 years) often experience decreased cognitive processing speed and reduced motor dexterity, which complicate their interactions with complex or fast-paced systems5. Additionally, the decline in primary sensory functions associated with ageing can make it difficult for older individuals to accurately interpret auditory or tactile feedback6,7. Children with congenital or early-onset vision impairment face distinct challenges related to cognitive development, language acquisition and spatial understanding8,9 that can make abstract concepts difficult to grasp and hinder adaptation to new interaction methods. These age-specific challenges require tailored solutions to overcome these accessibility barriers while ensuring the equitable delivery of information.

Although current devices for use by blind and partially sighted people offer plausible substitutes for visual information, the representations of visual information provided by these devices are often incomplete or even inaccurate3,10,11. Another critical limitation is that these devices typically convert visual elements into text descriptions, which are often not provided in a sufficiently timely fashion and might also fail to fully capture the richness of visual information10,12,13. These limitations can prevent blind and partially sighted device users from selectively comprehending key information. Such inadequacies in information representation arise from an insufficient understanding of these users’ personalized interaction needs and the complexities of multimodal information presentation. Therefore, the research and development of intelligent assistive devices that can provide efficient and rich information delivery is particularly urgent and adds considerable value.

In this Perspective, we summarize the opportunities and challenges associated with current assistive devices, in terms of their information delivery efficiency and cognitive burden (Fig. 1). We propose design ideas for future wearable assistive devices that could address existing knowledge gaps via the optimization of multisensory learning, to boost the efficiency of information delivery; synchronization with haptic large-scale visual language models (LVLMs), to decrease the cognitive burden associated with device use; and the integration of gesture-mediated control, to enhance the efficiency and reliability of interactions. We aim to provide theoretical and technical insights into the development of assistive wearable devices that enable non-visual information delivery to blind and partially sighted people and seek to raise societal awareness regarding the digital information needs of this community.

Current wearable assistive devices that provide multimodal digital information (such as audio descriptions of scenes and braille-to-speech translations) are limited by the need for sequential information output, reliability issues and low-quality image descriptions. These factors pose substantial challenges for blind and partially sighted users. The use of haptic feedback synchronized to the output of large-scale visual language models (LVLMs), multisensory learning and gestural controls might help alleviate these difficulties. Left: wrist device, reprinted from ref. 28, CC BY 4.0 (https://creativecommons.org/licenses/by/4.0/); hand image, reprinted from ref. 174, Springer Nature Limited. Photographs: crisps, mrs/Moment/Gettyimages; Mona Lisa, GL Archive/Alamy Stock Photo.

Research advances

Wearable assistive devices offer the distinct advantages of portability, continual accessibility and integration into users’ daily lives14,15. Compared with handheld devices, wearable devices such as smart gloves, rings and wristbands provide blind and partially sighted people with real-time information delivery and minimal restriction of hand movements16, which are essential for tasks such as navigation, accessing information and interaction with dynamic environments. The unobtrusive nature of wearable assistive devices and their potential to integrate multisensory feedback make them ideal platforms for the synchronization of auditory and tactile outputs as well as gesture-based inputs, to enhance information delivery and interaction efficiency.

Current hardware

The rapid development of wearable devices is aligned with the growing demand for a convenient and vibrant life, which has brought new applications and opportunities across various fields16,17. Devices equipped with sensors and wireless communication technologies now enable real-time and continuous collection of the wearer’s physiological information18 while providing lag-free and bidirectional interaction with the user. These devices come in various forms, including as accessories, e-textiles and e-patches19. Accessories, such as head-mounted devices (HMDs), wrist-worn devices and hand-worn devices, are the most popular and most common category of wearable device. E-patches, which are often subdivided into sensor patches and e-skins, adhere directly to the skin where they provide ultra-thin and flexible platforms for continuous physiological monitoring20. E-textiles integrate sensors and conductive fibres into fabrics, which enable washable, flexible and breathable human–machine interfaces20,21,22. The skin-like flexibility, lightweight design and adaptability to dynamic surfaces of wearable devices make them comfortable and practical for extended use. These devices can monitor body parameters, including temperature23, respiration24 and posture25, and can also perform attention tracking26 and safety assurance27. This multifunctionality has spurred applications not only in virtual reality and augmented reality28,29 but also in education17, communication30 and assistive technologies31.

In the past 20 years, considerable emphasis has been placed on wearable devices to assist blind and partially sighted people with navigation and mobility. These devices use various sensors to perceive the environment and relay information to users32,33. A typical example is the LightGuide cap34, an HMD that employs a set of sensors and light cues to aid people with impaired vision who retain some capacity to sense light levels. Such mobility aids can substantially improve the independence of blind and partially sighted users. Alongside these advances, information accessibility has also garnered growing attention. Most devices now combine wearable display and interaction technologies to enhance their assistive function35,36,37. Wearable devices that aim to improve information accessibility now come in auditory, surface-tactile, audio–tactile and tactile-only forms (Fig. 2).

a, Audio interactions include audio narration of the data included in charts175, audio description of videos and images176, and perception of object shape by sonification177. b, Tactile interactions include refreshable braille displays47,178, 3D printing of lithophanes (translucent engravings, typically <2 mm thick, that appear opaque under incident light but can be felt and reveal an image under transmitted light)179 and tactile displays180. c, Audio–tactile interactions include strategies for improving the accessibility of charts and graphical information in educational environments56,57,181 and image exploration systems for social media and webpages58. d, Wearable devices that use tactile interactions include a braille recognition platform44 and haptic watches45,182. Part b: bottom right (lithophane), reprinted from ref. 183, CC BY 4.0 (https://creativecommons.org/licenses/by/4.0/); bottom left (tactile graphic display), adapted from ref. 179, AAAS. Part c: left, adapted from ref. 184, CC BY 4.0 (https://creativecommons.org/licenses/by/4.0/). Part d: left and second left, adapted with permission from ref. 44, Elsevier; centre (watch), reprinted with permission from ref. 45, J. Edward Colgate.

Commercially produced HMDs38, which include smart glasses, head-mounted displays39 and headphones40, are among the most prevalent types of wearable assistive devices for blind and partially sighted individuals. HMDs for blind and partially sighted users primarily deliver audio information via integrated speakers or bone-conduction technology. A typical function of these auditory devices is audio description: digital and scene information detected by built-in or external sensors is converted into audio output, which enables blind and partially sighted users to interpret charts, read text and understand their surrounding environment. Devices that incorporate virtual reality or augmented reality visual displays and provide audio guidance have been widely utilized for both vision assistance and therapy for partially sighted people with central vision loss, peripheral visual field loss and colour vision deficiency41,42,43.

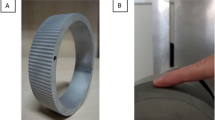

Wearable tactile interactive devices, which are typically worn on the wrist or as e-patches, represent an innovative form of assistive technology with greatly enhanced portability and improved operational freedom. These devices could provide access in real time to text and other data represented by embossed patterns or textures, and an enhanced diversity and efficiency of information acquisition through tactile feedback44,45. These devices are designed to be compact and practical for everyday use. For example, a wearable braille platform44 (Fig. 2d) uses the sequential inflation of air capsules to mimic the sensation of sweeping a finger across a braille letter. Additionally, some currently non-wearable technologies, such as braille displays46,47,48 and tactile graphics49,50,51, could potentially become wearable display devices in the future.

Common forms of wearable display technology, such as smartwatches and HMDs, already include tactile functions that are widely used and accepted by people with and without vision impairments19,44,52. Tactile and braille displays could extend the functionality of wearable devices by enabling touch-based forms of interaction. However, existing tactile and braille display devices are often constrained by their stationary design, which limits their usability in mobile and dynamic environments. The use of thinner actuator arrays and flexible or stretchable materials could enable the implementation of these technologies in lightweight, portable and wearable formats that facilitate their integration into the daily routines of blind and partially sighted users53,54. Such advances are crucial for improving access to text and images while ensuring practicality and convenience.

Audio–tactile devices primarily designed for use in educational and creative environments are also effective in enhancing the engagement and understanding of blind and partially sighted users. The A11yBoard digital artboard55, for instance, can provide access to the creation of digital artworks, whereas TPad (a mobile audio–tactile learning system that provides real-time auditory feedback while users explore tactile graphics)56 and PantoGuide (a haptic and audio guidance system that offers directional cues via skin-stretch feedback and audio prompts to aid in tactile navigation)57 assist blind and partially sighted students to understand and interpret graphics. Although these devices are mainly implemented in stationary or desktop configurations at present and often rely on external peripherals (such as tablets or touchscreens) for their interactive functions56,58, they show potential for development into wearable display devices. Additional multimodal interaction capabilities could be realized by leveraging the hardware infrastructure of existing wearable display devices and advances in material technology57. Although existing audio–tactile devices can provide real-time assistance for blind and partially sighted users, they are affected by inherent deficiencies such as slow and inefficient information delivery.

In the past few years, efforts have been devoted to the integration of navigation and information delivery functions to enhance the usability of wearable display devices. Hardware for navigation and information delivery is usually technologically compatible. For instance, the cameras and light detection and ranging (LiDAR) components that are commonly used for obstacle detection during navigation can also capture detailed scenes for the purpose of information delivery59. Similarly, the audio and haptic feedback mechanisms used for navigation can also be used to provide contextual descriptions of the user’s surroundings. Synchronized vibrotactile feedback can notify users about nearby obstacles31 while simultaneously using varying vibration intensities and frequencies to provide cues about the surrounding landmarks and destinations60. Gesture control also plays an essential role in navigation tasks by enabling users to initiate navigation or select a specific path. The integration of gesture control and audio or haptic feedback enhances users’ spatial awareness and navigation efficiency. Notable devices that combine navigational aids with scene-description capabilities include the OrCam MyEye 3 Pro and advanced wearable camera systems61 that use computer vision and text-to-speech technologies to enable real-time object detection, facial recognition and text reading. However, most current devices are designed for specific tasks, and might excel in one function yet lack optimal performance in others. For example, some devices provide effective support for navigation assistance but struggle with scene description. As both navigation and accessibility are essential for daily life, the lack of integration between these functions reduces the overall usability of these devices and limits their effectiveness in real-world applications.

Software design

Web accessibility tools are essential for enabling blind and partially sighted users to access online content. Screen readers (such as Job Access With Speech (JAWS), NonVisual Desktop Access (NVDA) and VoiceOver) and browser extensions (such as Revamp62, AccessFixer63 and Twitter A11y (ref. 64)) that adjust font sizes and contrast settings can help blind and partially sighted individuals to navigate websites. However, these tools cannot support an interactive experience comparable with that of sighted users. When exploring images, tables or videos, for instance, users of screen readers are often restricted to a linear sequence of information and must remember previous device outputs10,65,66, factors that impose high cognitive loads on users and limit the efficiency of information retrieval.

Accessible visualization software for blind and partially sighted people has been developed to convey the visual information contained in charts, images and artworks. Current iterations can convey charts and images through sonification and the use of alternative text (Alt Text), which is automatically generated text that describes a visual item. These visualization schemes are an imperfect solution because Alt Text does not support autonomous user interaction and exploration, and sonification imposes an additional cognitive burden on users. Some chart visualization schemes have structured navigational and conversational interactions that aim to compensate for these shortcomings12,67,68,69. For image information, researchers have explored various algorithms to enhance the experience of blind and partially sighted users. Notably, ImageExplorer58 enables touch-based exploration, and musical and haptic elements have also been shown to increase the accessibility of artwork. The increased availability of smartphones and artificial intelligence (AI) has facilitated the development of various types of visual assistance apps for blind and partially sighted users70, such as Seeing AI and Be My Eyes. These apps rely on image capture and AI-driven image recognition to provide real-time descriptions of the environment and objects.

Several strategies can help address the limitations of existing accessibility tools. A multilayered system that can handle different levels of information granularity would enable blind and partially sighted users to explore webpage content at both broad and detailed levels, depending on their preferences. The integration of feedback mechanisms that operate in real time could also enable users to dynamically adjust the granularity level, thereby facilitating more effective navigation of complex content. Blind and partially sighted users should also be included in the design and testing phases of web development to ensure that accessibility tools are aligned closely with their specific needs71. These efforts could reduce users’ cognitive load, improve information retrieval efficiency and, ultimately, provide blind and partially sighted people with a more equitable and accessible online experience.
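The multilayered granularity idea described above can be illustrated with a small sketch. It is one possible reading of the proposal rather than an implementation from the cited work: page content is assumed to be organized as a tree of short summaries, and the user chooses how many levels to hear. The names `ContentNode` and `describe` and the example page are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ContentNode:
    """One unit of page content: a short summary plus optional finer-grained children."""
    summary: str
    children: list["ContentNode"] = field(default_factory=list)


def describe(node: ContentNode, max_depth: int, depth: int = 0) -> list[str]:
    """Collect summaries from the root down to `max_depth` levels, in reading order."""
    lines = ["  " * depth + node.summary]
    if depth < max_depth:
        for child in node.children:
            lines.extend(describe(child, max_depth, depth + 1))
    return lines


# Hypothetical page used only to illustrate the traversal.
page = ContentNode("News article with one chart", [
    ContentNode("Headline: city opens new library"),
    ContentNode("Bar chart: visitors per month", [
        ContentNode("January: 1,200 visitors"),
        ContentNode("February: 1,850 visitors"),
    ]),
])

# Broad overview first, then progressively finer detail on request.
for level in range(3):
    print(f"--- granularity level {level} ---")
    print("\n".join(describe(page, max_depth=level)))
```

In such a design, a real-time control (for example a gesture or key press) would simply change `max_depth`, so that the user decides when to move from the overview to the individual data points.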

Information delivery

The limited visual capabilities of blind and partially sighted individuals often lead to challenges in perceiving visual information, including digital content such as figures or videos and surrounding scenes. To address these challenges, it is essential to examine the limitations of current assistive tools and computational methods. These limitations often stem from constraints in computer vision algorithms, data quality and the adaptability of assistive devices to dynamic real-world conditions.

Information comprehension

Difficulties in the comprehension of digital information by blind and partially sighted individuals arise from both the constraints of computer vision algorithms and the limitations of assistive devices. Over the past 4 years, advances in automated captioning72,73 and visual question answering74,75,76 have substantially improved access to digital information for blind and partially sighted people. However, these technologies are heavily influenced by the quality of training datasets and, in particular, by the selection of input images, because images in real-world digital interactions can differ markedly from the training set images in terms of style, contrast and quality73,77,78. Consequently, the output of computer vision systems is often limited by algorithmic constraints and data-driven biases, which can result in a lack of the relational, spatial and contextual details necessary for a thorough understanding of images.

Unlike digital information displayed on screens for sighted users, scene understanding for blind and partially sighted people requires a more dynamic and more reliable perception of (and interaction with) their surrounding environment. Existing visual assistive systems often struggle to adapt to rapidly changing environments79,80 and are heavily affected by suboptimal external conditions and by the poor quality of many images captured by blind and partially sighted users80,81. Moreover, the processing speed of these systems is sometimes insufficient to provide real-time updates. Advanced versions of traditional tools for blind and partially sighted people (such as white canes) could provide haptic feedback to improve the detection of obstacles on the ground and to assist their users in following predefined routes82,83. However, the range of these tools is inherently limited because they cannot identify hazards beyond the cane’s length or detect obstacles in mid-air31,79,84. This limitation can increase the risk of accidents in complex environments and restrict the user’s spatial awareness.

Sequential information output

Current wearable assistive devices mainly use speech and braille to convey information11,85,86. Both modalities present information in sequential letter or word streams, which poses a challenge for blind and partially sighted people who need to efficiently locate specific content within a document or rapidly skim through a large volume of data. Sequential navigation demands considerable time to extract key information and requires blind and partially sighted people to retain and mentally manipulate more information than is typically feasible for sighted humans87.

For blind and partially sighted individuals who need to engage with content that comprises spatial, temporal and structural elements (such as images, videos, charts and maps), the limited analytical capabilities of assistive technologies hinder their ability to independently perform in-depth analysis or explore information at various levels of detail in relation to spatial, temporal and hierarchical data structures10,13. For example, blind and partially sighted people who are navigating a table or map using a screen reader must follow the predefined order of information delivery, even though the extraction of relevant information via this approach is very time-consuming and they might prefer to start with a holistic view of the data before deciding whether to delve further65. By contrast, in sighted individuals, a saliency-driven attention mechanism enables the parallel processing of complex visual inputs, which allows the person to focus on relevant information88,89.

Blind and partially sighted people also require time to learn and adapt to the information delivery methods of different assistive devices. This learning burden is complicated by the lack of comprehensive and accessible tutorials for many devices, which impedes their independent utilization90. Non-visual information delivery is essential in learning how to use an assistive device (and, therefore, the effectiveness of information delivery depends on the capabilities of that device). Although current non-visual information delivery methods offer plausible ways of providing improved information accessibility for blind and partially sighted users, it is unreasonable to expect these users to learn multiple different systems to meet their varying information needs.

Cognitive burden

Blind and partially sighted people often depend on Alt Text to decipher images and complex non-textual information91. However, the Alt Text used in mainstream assistive applications, such as image captioning and scene description, is typically generated by computer vision algorithms92,93,94. These one-size-fits-all approaches generally result in Alt Text descriptions that include only object names and their spatial relationships, and this lack of detail hinders the contextual understanding and exacerbates the cognitive burden of users with visual impairment. Indeed, blind and partially sighted users report that Alt Text is often poor quality, misses crucial details and struggles to accurately describe complex images and tables11,95,96. For example, blind and partially sighted people prefer to understand the purpose of images posted on social networking sites and the expressions or body language of any individuals depicted97. Simplistic Alt Text image descriptions make it challenging for these users to fully understand the content conveyed by the person who posted the image.

The robustness of image description algorithms when applied to poor-quality images is another issue. Images taken by blind and partially sighted people without visual feedback can often be out of focus, affected by unsuitable lighting, show motion-induced blurring and present inadvertent occlusion of areas of interest72,81,98. These image-quality issues might result in blind and partially sighted people receiving inaccurate or incomplete Alt Text descriptions that could potentially place them in dangerous situations or compel them to expend considerable effort to verify and understand the information provided.

Alt Text particularly struggles to represent structural and spatial features, the interactions between image elements and the emotional atmosphere conveyed13,58,96. These factors make it challenging for users to form a vivid mental visualization of the image content99. Moreover, images convey information in an inherently non-sequential way, and even if the quality of Alt Text improves, an inevitable loss of information occurs when the image content is sequentially presented. This information gap not only impedes full comprehension of the narrative and context but is also affected by the imaginative capacity of the user. To bridge this gap, blind and partially sighted people might need to incorporate prior or additional sources of knowledge to mentally reconstruct and interpret an image, which further increases the cognitive complexity of this task. This challenge may be more pronounced for individuals with congenital blindness, as their lack of prior visual experience can make it more difficult to form mental imagery based on Alt Text descriptions.

Device design considerations

The effective design of assistive devices for blind and partially sighted individuals requires careful consideration of multisensory integration, cognitive load and interaction efficiency to ensure optimal usability. Key factors in assistive device development include multisensory stimulation, the integration of AI-driven models and the use of gesture-based controls, which all contribute to improved accessibility and user experience.

Multisensory stimulation

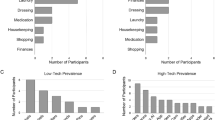

Multimodal sensory stimulation, which leverages the principle of multisensory integration, can effectively modulate attention within specific modalities100,101. Such an approach might considerably improve the efficiency of information delivery and processing by increasing the ability of blind and partially sighted individuals to acquire large volumes of information and simultaneously reducing the mental effort required to understand it. Current assistive technologies increasingly favour multimodal approaches, particularly for tasks involving charts and images (Fig. 3). The US National Aeronautics and Space Administration Task Load Index (NASA-TLX) is widely utilized for evaluating cognitive load, with lower NASA-TLX scores indicating reduced cognitive burden. For example, the TPad system, which integrates tactile graphics with auditory feedback, attained a NASA-TLX score of 26.4, whereas Digital Key, which relies exclusively on tactile input, received a NASA-TLX score of 38.2 in relation to a graphic comprehension task56. This result suggests that the adoption of multisensory information transfer could decrease the cognitive load associated with performing a given task. Relevant information on the technologies presented in Fig. 3 is detailed in Supplementary Table 1.

a, Accessibility schemes for different forms of visual data56,58,68,96,171,185,186,187,188,189. b, Comparison of US National Aeronautics and Space Administration Task Load Index (NASA-TLX) scores for different accessibility schemes55,56,58,96,186,187,188,190,191. NASA-TLX scores that were not directly provided are estimated using the Raw TLX standard (in which sub-score ratings are averaged or summed) and exploration time, with all scoring converted to a 100-point scale. LLM, large language model. Part a: top right (3D-printed map), adapted from ref. 185, CC BY 4.0 (https://creativecommons.org/licenses/by/4.0/). Photographs: motorcyclists, Westend61/Westend61/Gettyimages; room, Westend61/Westend61/Gettyimages; horse and rider, Tina Terras & Michael Walter/Moment/Gettyimages.
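The Raw TLX conversion mentioned in the caption above can be sketched as follows. This is a minimal illustration and not the procedure used to produce Fig. 3b: it assumes the six standard NASA-TLX subscales rated on a common scale, averages them without weighting and rescales the result to 100 points. The function name `raw_tlx`, the subscale keys and the example ratings are hypothetical, and the use of exploration time mentioned in the caption is not modelled.

```python
from statistics import mean

# The six standard NASA-TLX subscales (key names are an assumption of this sketch).
SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(ratings: dict[str, float], scale_max: float = 100.0) -> float:
    """Return the unweighted (Raw TLX) workload score on a 100-point scale.

    `ratings` maps each subscale name to a rating between 0 and `scale_max`.
    """
    missing = [name for name in SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing subscale ratings: {missing}")
    for name in SUBSCALES:
        if not 0 <= ratings[name] <= scale_max:
            raise ValueError(f"{name} rating is outside 0..{scale_max}")
    # Average the six ratings, then rescale to 100 points.
    return mean(ratings[name] for name in SUBSCALES) * 100.0 / scale_max


# Made-up ratings on a 0-20 response scale, for illustration only.
example_ratings = {
    "mental_demand": 8,
    "physical_demand": 2,
    "temporal_demand": 6,
    "performance": 4,
    "effort": 7,
    "frustration": 3,
}
print(raw_tlx(example_ratings, scale_max=20))
```

Putting every study on the same 100-point scale is what makes scores from different response formats comparable, although an unweighted average ignores the pairwise weighting step of the full NASA-TLX procedure.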

Multimodal stimulation activates multiple senses concurrently and enhances information processing more effectively than single-sense stimuli. The neuroscientific principle underlying this enhancement is that multisensory processing elicits more complex neural activity than does single-sensory input102. Such collaborative neural activity bolsters perception and attention, thereby furnishing blind and partially sighted users with a more comprehensive analytical framework for decision-making and providing vital support for this group, considering their reliance on non-visual sensory input102. The amalgamation of tactile and auditory stimuli has also been shown to improve blind and partially sighted individuals’ spatial awareness and comprehension of data representations103,104,105.

However, simply combining different types of sensory input might not fully leverage their interactions or harness their potential for synergistic effects. One study demonstrated that modulating auditory input frequencies improves tactile perception accuracy through an auditory–tactile frequency modulation mechanism106. Optimization of the frequencies selected for multisensory integration enhanced both perception accuracy and consistency, which are crucial for the design of assistive devices to ensure efficient information processing and comprehension106. Moreover, the balance between supplying information of complementary types and sensory occupancy should be carefully considered. Attention to auditory stimuli markedly improves auditory accuracy and indirectly worsens haptic accuracy when performing a spatial localization task, which results in optimized perceptual output during the integration of auditory and haptic senses107. This evidence supports the premise that sensory complementarity and the intentional, modality-specific direction of attention can fortify the veracity of multimodal sensory information.

In the context of assistive device design, the delivery of information should be appropriately balanced between primary and secondary modalities. For example, auditory output might serve as the principal channel for holistic information description, and haptic feedback could be used to localize and underscore particularly important data, thereby facilitating information transmission and comprehension. Furthermore, the assistive device should be able to dynamically adjust the relative intensity of auditory and haptic inputs according to the blind or partially sighted user’s attention, to further optimize the balance of the multisensory integration process.
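The dynamic balancing described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the function name `balance_channels`, the idea that the device has an estimate of the user's attention to audio on a 0–1 scale, the linear mapping and the minimum share reserved for each channel are not taken from any cited system.

```python
def balance_channels(audio_attention: float,
                     total_intensity: float = 1.0,
                     min_share: float = 0.2) -> dict:
    """Split a fixed output budget between the audio and haptic channels.

    audio_attention: hypothetical estimate in [0, 1] of how strongly the
        user is currently attending to audio (0 = fully on touch).
    min_share: assumed floor so that neither channel is ever silenced.
    """
    if not 0.0 <= audio_attention <= 1.0:
        raise ValueError("audio_attention must lie in [0, 1]")
    if not 0.0 <= min_share <= 0.5:
        raise ValueError("min_share must lie in [0, 0.5]")
    # Linear interpolation: the attended channel receives the larger share,
    # bounded by min_share on one side and 1 - min_share on the other.
    audio_share = min_share + (1.0 - 2.0 * min_share) * audio_attention
    return {
        "audio": total_intensity * audio_share,
        "haptic": total_intensity * (1.0 - audio_share),
    }


if __name__ == "__main__":
    for attention in (0.0, 0.5, 1.0):
        print(attention, balance_channels(attention))
```

Whether the attended channel should be strengthened (as assumed here) or the unattended channel compensated is a design question that would need to be settled by user studies.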

Large vision and language models

The advent of AI, particularly the deployment of large vision models and large language models (LLMs), presents numerous potential solutions to alleviate the high cognitive burden implicit in the interactions of blind and partially sighted people with information technology systems108,109. These systems facilitate a range of functions, such as assisted reading68,110, target and scene recognition, art appreciation111,112 and art creation113,114. With the increasing prevalence of large vision–language models (LVLMs), the integration of AI into commercial assistive software and devices has already resulted in several exciting developments: Be My Eyes, Seeing AI and OrCam MyEye 3 Pro. LVLMs are an essential component of these devices, which translate complex visual information into accessible textual descriptions and support users in autonomously exploring areas of interest. The interactivity and precision of these AI-enabled assistive devices can help blind and partially sighted individuals better understand both static images and dynamic scenes. Although AI-enabled assistive devices are recognized by many blind and partially sighted people as being helpful in daily life, current assisted reading devices still do not provide satisfactory performance when applied to non-planar and unformatted text70,115. Moreover, AI-assisted computer vision systems often capture the main elements of an image without sufficient detail. As a result, these systems often fail to provide feedback in response to prompts that relate to a specific region of interest. The system can even require users to re-upload images, particularly when the initially supplied images are of low quality.

Spatial awareness can also impose an extra cognitive burden, which is a critical concern for blind and partially sighted people. Several studies have indicated that autonomous exploration of an image or scene gives blind and partially sighted people a more profound sense of the spatial distribution and size of image elements, and fosters a sense of agency that enhances their ability to vividly imagine the scene or image13,58,89,116. Haptic feedback is an effective method for enhancing spatial awareness117,118,119. Therefore, it is essential to design an LVLM framework for assistive wearable devices that supports synchronized haptic output and performs robustly even with low-quality images. Haptic synchronization could enable an LVLM to generate audio descriptions for image comprehension while simultaneously creating corresponding tactile information based on the input image. The generated tactile information could provide blind and partially sighted users with haptic feedback that aligns exactly with the audio information. For instance, when the LVLM identifies or segments objects with a verbal description, the haptic device simultaneously converts these objects into tactile representations, which enable users to physically explore elements of the image. Synchronized haptic feedback can also be valuable to mitigate the hallucinations generated by LVLMs120,121,122. LVLMs might struggle to accurately describe the characteristics of visually similar objects, owing to the model’s limited understanding of fine-grained details121. Synchronized haptic feedback can help bridge this gap by providing tactile patterns or textures that help users differentiate the images more reliably.

This framework can be adapted to assist blind and partially sighted individuals in understanding video content by incorporating advanced AI models that are specifically designed for video analysis. As video consumption increasingly shifts to mobile devices123,124, the demand for real-time and accessible video assistance has grown considerably. Video-based large AI models can analyse scenes and actions while automatically generating structured and personalized descriptions125,126,127 and answering the queries of blind and partially sighted users about video content. This approach enables blind and partially sighted people to interact with video content according to their preferences across diverse scenarios.

Gesture-based interaction

Gestures provide a convenient and simple mode of human–machine interaction. As such, gesture-based interactions offer uniquely important advantages as a medium of information exchange128, especially for blind and partially sighted individuals. Most currently available assistive devices and smartphone apps require the use of both hands, which is impractical during many daily activities, particularly when use of a white cane is also required. Indeed, many blind and partially sighted people prefer not to have both hands fully occupied while travelling129,130. A key benefit of gesture-based controls is that they can alleviate the constraints of having both hands occupied. For example, one hand can make a simple swipe or pinch gesture to accelerate or pause audio content, which provides an efficient form of interaction, while keeping the other hand free. Compared with audio or haptic feedback, gestures can also mitigate privacy concerns and minimize the attraction of undesired attention when using the device in public spaces129,131,132. For blind and partially sighted individuals, the operation of currently available assistive devices often relies on sounds or vibrations that might be hard to detect in noisy environments, inappropriate in noise-sensitive environments (such as theatres and libraries) or cause self-consciousness in busy environments (such as on public transport).

Consequently, gesture-based interactive controls are expected to be an essential component of future assistive devices to facilitate efficient access to crucial information. Gesture interpretation requires the integration of sensitive, adaptable and reliable sensors, as well as high-speed synchronized communication, to deliver an immersive and low-latency feedback experience. The use of gesture recognition technologies is projected to enable blind and partially sighted people to interact effortlessly and efficiently with assistive devices. For example, when engaging with audio information, blind and partially sighted users could employ gestures to adjust the speed and the position of reading, or to command repetitions.
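As a minimal sketch of the audio-reading example, the mapping from recognized gestures to playback commands could look as follows. The gesture labels, the step size and the lower speed bound are hypothetical choices, and the sketch assumes that a separate recognizer has already classified the gesture; the default and maximum rates reuse the 150 WPM speaking rate and 278 WPM listening rate quoted in this article.

```python
from dataclasses import dataclass, replace

NORMAL_WPM = 150  # typical speaking rate quoted in the article
MAX_WPM = 278     # upper listening rate quoted in the article
MIN_WPM = 100     # assumed lower bound, for illustration only
STEP_WPM = 25     # assumed speed increment per gesture


@dataclass(frozen=True)
class ReaderState:
    wpm: int = NORMAL_WPM
    sentence: int = 0      # index of the sentence being read
    playing: bool = True


def apply_gesture(state: ReaderState, gesture: str) -> ReaderState:
    """Return the reader state after one recognized gesture."""
    if gesture == "swipe_up":      # speed up, capped at MAX_WPM
        return replace(state, wpm=min(MAX_WPM, state.wpm + STEP_WPM))
    if gesture == "swipe_down":    # slow down, floored at MIN_WPM
        return replace(state, wpm=max(MIN_WPM, state.wpm - STEP_WPM))
    if gesture == "swipe_right":   # move the reading position forward
        return replace(state, sentence=state.sentence + 1)
    if gesture == "swipe_left":    # step back one sentence to repeat it
        return replace(state, sentence=max(0, state.sentence - 1))
    if gesture == "pinch":         # toggle pause and resume
        return replace(state, playing=not state.playing)
    return state                   # unrecognized gestures change nothing


if __name__ == "__main__":
    state = ReaderState()
    for g in ("swipe_up", "swipe_right", "pinch"):
        state = apply_gesture(state, g)
    print(state)
```

Returning a new state instead of mutating the old one keeps each gesture easy to confirm or undo, which fits the need for reliable single-handed control.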

The integration of haptic feedback into gesture interactions greatly benefits blind and partially sighted individuals133,134. Interaction efficiency, reliability and user satisfaction improve through three main aspects: gesture confirmation; expanded degrees of freedom in communication flexibility (that is, the number of independent data types that can be simultaneously employed by an assistive device during gesture interactions); and guided gesture learning. Gesture confirmation can be achieved through real-time tactile feedback, which can reduce the need for repetition of gestures, minimize the frustration associated with miscommunication and improve both the interaction experience and user confidence135,136. Expanded degrees of freedom in information exchange between users and devices can be supported by haptic feedback, which enables users to achieve precise and nuanced control by modulating the gesture intensity, pattern and duration. This flexibility enables users to convey more complex commands and to receive richer feedback, which substantially improves interaction efficiency and reliability. Gesture learning also benefits from haptic guidance137,138. By providing tangible cues that are associated with specific actions and prediction prompts, corrective feedback can guide users towards appropriate gesture execution, which helps them quickly understand and memorize the desired action. These enhancements lead to a more intuitive and user-friendly assistive experience for blind and partially sighted individuals.

Delivery of text and images

The reading efficiency of assistive technology can be quantified as the number of words read per minute (WPM) (Fig. 4). For reference, the average speed of sighted individuals reading an English-language text is around 251 WPM, with an upper limit of 500 WPM that is imposed by the information-processing capacity of the human eye139. Braille users typically read paper-based materials at around 120 WPM, although skilled individuals can reach speeds of up to 170 WPM by employing refined hand movement techniques and precise localization140,141. Most braille displays, however, show only a few lines of text comprising 12–80 braille units, which restricts users’ reading speeds to 30–50 WPM142.

Reading efficiency can be conveniently quantified using the number of words read per minute (WPM) metric. Braille and braille displays have a substantially lower reading efficiency compared with visual reading of printed text by sighted individuals. However, text-to-speech technologies enable blind and partially sighted people to read at speeds comparable with or exceeding those of sighted people. Combinations of tactile or text-to-speech technologies with large language models (LLMs) could result in WPM values that approach or even exceed the upper bound of visual reading speeds.

By contrast, the increased sensitivity of hearing experienced by blind and partially sighted individuals enables them to adapt to audio output speeds of up to 278 WPM143,144. Therefore, the use of text-to-speech technology enables blind and partially sighted people to achieve increased reading efficiency while maintaining comprehension. Of note, 278 WPM (which is around 1.85-fold the normal speaking rate of 150 WPM145) represents a feasible level of efficiency for delivering audio information under optimal conditions. In real-world settings, the efficiency of audio information delivery is hampered by several limiting factors. For text-based information, the requirement for sequential display of audio information often prevents blind and partially sighted users from quickly locating specific details, especially when searching large volumes of data. By contrast, sighted users can efficiently identify target information while reading by skimming or scanning the text146. The efficiency of information delivery is further reduced when accessing general digital information (such as browsing webpages that contain both text and images) or performing complex tasks such as online shopping147,148. Cognitive burden also affects the efficiency of information delivery. High audio speech rates substantially increase cognitive load and impair users’ comprehension143,149,150. The reading speed of sighted people performing a reading task can surpass 251 WPM and even reach 400 WPM after appropriate training in speed-reading techniques and with the use of optimized text presentation methods151,152,153. Within the 251–400 WPM range, the gap between auditory processing capacity and visual reading efficiency is further widened, a disparity that cannot be bridged by optimization of auditory information delivery alone. To reach or even exceed the visual upper limit of 500 WPM, current assistive technologies need to move beyond a single mode of information delivery and incorporate capabilities for text processing and refinement, including summarizing key information, filtering out redundant detail and prioritizing relevant content.

LLMs can analyse language and contextual patterns in text to identify key details and remove redundancies, processes that have the potential to improve reading efficiency154,155. This capability to distil information into concise summaries offers the benefit of improved readability and makes information consumption faster and more accessible. Compared with predesigned summary descriptions, the summaries generated by LLMs provide well-organized, concise, context-aware content that aligns with human language processing, which could potentially improve user comprehension154,155. However, current LLMs still face difficulties in interpreting sarcasm, humour and real-world contextual knowledge that is not explicitly stated in the training data, which might lead to misunderstanding. Consequently, assistive wearable devices integrated with LLMs are expected to further optimize WPM metrics and improve the efficiency and accuracy of textual information delivery. These devices could also customize the delivered information according to context. Ultimately, these technologies might reach or exceed the visual upper limit (500 WPM) for information extraction from text.
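The arithmetic behind this expectation can be sketched as follows. The sketch assumes, optimistically, that a summary conveys the same information as the full text, so that the number of source words covered per minute equals the delivery rate divided by the fraction of words retained. The function names are illustrative; the 278 WPM and 500 WPM figures are those quoted in this article.

```python
def effective_wpm(delivery_wpm: float, retained_fraction: float) -> float:
    """Source words covered per minute when only a fraction is delivered.

    Assumes the retained words carry the full meaning of the source text,
    which real summaries only approximate.
    """
    if delivery_wpm <= 0:
        raise ValueError("delivery_wpm must be positive")
    if not 0.0 < retained_fraction <= 1.0:
        raise ValueError("retained_fraction must lie in (0, 1]")
    return delivery_wpm / retained_fraction


def required_retained_fraction(delivery_wpm: float, target_wpm: float) -> float:
    """Largest fraction of words a summary may keep and still hit the target."""
    if delivery_wpm <= 0 or target_wpm <= 0:
        raise ValueError("rates must be positive")
    return min(1.0, delivery_wpm / target_wpm)


if __name__ == "__main__":
    # Audio at 278 WPM with a summary that keeps half of the words.
    print(effective_wpm(278, 0.5))
    # Fraction of words a summary may keep to reach 500 WPM at 278 WPM audio.
    print(round(required_retained_fraction(278, 500), 3))
```

Under these assumptions, audio delivered at 278 WPM would reach the 500 WPM visual upper limit once a summary removes roughly 44% of the source words, provided that comprehension is preserved.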

Another important task for assistive devices is the delivery of information contained in images. Much of the information in digital communications is presented in image form, and blind or partially sighted individuals must rely strongly on image descriptions to access these communications and stay up to date. Approximately 72% of images on mainstream websites are now covered by image descriptions156. In one study, blind and partially sighted users prioritized the description of image elements such as emotion, location, colour, activity and objects13. Additionally, blind and partially sighted users emphasize the importance of including the number of people, their facial expressions and gestures in descriptions of images included in social media11. Although the above studies provide guidelines on how image descriptions should be presented for blind and partially sighted users, they overlook the information preferences of different individuals, who additionally report that one-size-fits-all image descriptions do not meet the accessibility needs of all users, and that contextual information is essential for users to understand the described images97,157. To address these shortcomings, one approach used a multimodal model that integrated textual and visual information from social media to generate more accurate and contextually relevant image descriptions158. However, this approach is limited to images that have accompanying social media posts and does not perform well for describing contextless images or in dealing with text-recognition errors.

The effective delivery of information included in images also faces specific challenges related to computation, communication and hardware. Computational latency slows the processing of complex visual tasks, such as the recognition of multiple objects or analysis of the spatial arrangement of image elements. Slow communication between local devices and the cloud-based servers capable of hosting large-scale LLM and LVLM computational services further contributes to the delay. Hardware constraints, such as the amount of local computational power (which is limited by the central processing unit and graphics processing unit chip speeds), restrict the ability of wearable devices to perform these tasks in real time. These communication and computation bottlenecks impose limitations on the ability of wearable devices to achieve a desirable refresh rate (preferably >120 Hz159).
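To make the refresh-rate constraint concrete, the sketch below converts a target refresh rate into a per-update time budget and compares it with the summed latency of the processing stages. The stage names and their latencies are hypothetical placeholders, not measurements, and the stages are assumed to run strictly one after another with no pipelining.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per update, in milliseconds, at a given refresh rate."""
    if refresh_hz <= 0:
        raise ValueError("refresh_hz must be positive")
    return 1000.0 / refresh_hz


def meets_refresh_target(stage_latencies_ms: dict, refresh_hz: float = 120.0):
    """Return (ok, total_ms) for stages that execute sequentially."""
    total_ms = float(sum(stage_latencies_ms.values()))
    return total_ms <= frame_budget_ms(refresh_hz), total_ms


if __name__ == "__main__":
    # Hypothetical per-update latencies, for illustration only.
    stages = {"capture": 2.0, "network_round_trip": 20.0, "inference": 30.0}
    ok, total_ms = meets_refresh_target(stages, refresh_hz=120.0)
    print(f"budget per update: {frame_budget_ms(120.0):.2f} ms")
    print(f"total latency: {total_ms:.1f} ms, meets target: {ok}")
    print(f"sustainable rate: {1000.0 / total_ms:.1f} Hz")
```

At 120 Hz the budget is about 8.3 ms per update, so in this illustrative case the network round trip alone would exceed it. Pipelined designs relax the throughput limit to the slowest single stage, but the end-to-end delay felt by the user remains the sum of all stages.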

Future wearable assistive devices might benefit from next-generation communication and computing technologies, such as a 6G network, edge computing (the processing and storage of data close to the user rather than in a remote data centre) and AI accelerators. These improvements are essential for providing blind and partially sighted users with a seamless and real-time interactive experience. The ultra-low latency and high bandwidth of 6G wireless networks can facilitate accelerated data transmission to alleviate the communication bottleneck160 and enable real-time processing of image content. Edge computing can offload computational tasks from centralized servers, which minimizes reliance on cloud services and reduces communication delays161. The use of AI-accelerator chips that are specifically designed for wearable devices can improve the efficiency of processes used by LVLMs and other AI models while optimizing the device’s power management, thereby enabling more reliable real-time interactions162. Assistive devices that leverage these technologies could benefit from the enhanced collection and utilization of contextual information to support the real-time analysis of both image content and contextual information from multiple sources.

The visual elements that comprise the foreground and background content in different types of images need to be correctly highlighted by wearable assistive devices (Fig. 5). In scenes without characters, the foreground comprises the designated target and its spatial position relative to other image components, whereas the background encompasses the locational context of the target and any accompanying textual details. Conversely, in event-type images characterized by the presence of characters, the foreground is dominated by the characters and their interactions. In these kinds of images, blind and partially sighted users of wearable assistive devices typically prioritize the characters, which necessitates their detailed description, including aspects such as gender, celebrity status and facial expressions. Background elements in these scenes often include architectural characteristics and any relevant textual information. To provide this information efficiently to users, wearable devices can incorporate information derived from the user’s movements and body position163,164 that can be adapted to the individual user’s behaviours and preferences165. Such wearable assistive devices could enable blind and partially sighted users not only to access the factual narrative of an image but also to understand its spatial layout, the interaction of the elements in the scene and the emotion or atmosphere conveyed. Device adaptability and convenience are assured by making the visual information conveyed by the descriptive text (which can be transmitted via auditory, tactile or multimodal means) both accessible and meaningful.

The design of information delivery methods must also account for the different requirements of people with congenital blindness or who lost their sight during childhood versus those who experienced vision loss later in life. These considerations affect both the usability and functionality of assistive devices, as well as user training and support strategies. For users with congenital blindness, the absence of remembered visual knowledge and the delayed development of verbal and cognitive skills166 mean that visual descriptions are less effective as an information delivery method than multimodal sensory stimulation. In one study, devices that incorporated auditory and tactile signals achieved notable improvements in spatial cognition in users with congenital blindness167. By contrast, assistive devices for use by individuals who experienced vision loss later in life might rely on familiar descriptions and intuitive feedback. For example, voice-controlled virtual assistants (such as Amazon Echo) enable users to perform tasks such as Internet browsing or shopping by using audible versions of familiar text prompts168.

Future wearable assistive devices that can connect to social media and crowdsourcing platforms via application programming interfaces and automatically gather relevant information from multiple sources could improve access to social media for blind and partially sighted individuals. Metadata such as the temporal and spatial details of posted images, as well as user comments, can also be used by LVLMs to infer image themes and provide more comprehensive contextual information. As an extra benefit, the collection and analysis of user preferences and past interactions by LVLMs could provide a customized or personalized experience that prioritizes more relevant information content.

Conclusions and outlook

In this Perspective, we have summarized the challenges faced by blind and partially sighted people in relation to the use of current assistive devices and accessible tools for the delivery of non-visual information. Additionally, we have considered appropriate efficiency metrics for assistive devices in delivering textual information while emphasizing key areas in conveying image-based information. Despite considerable advances in the development of multimodal interaction aids and tools for data visualization, which show promise for addressing these challenges, the performance of these technologies has not yet fully met expectations in terms of efficiency, cognitive load and reliability. We hope that this Perspective will evoke and foster an increased breadth of interest in design concepts for future wearable assistive devices that could avoid these bottlenecks.

We believe that such future assistive devices will greatly enhance the digital information experience of blind and partially sighted users and facilitate their pursuit of education, entertainment and employment. In return, the enhanced productivity of these individuals might help alleviate the productivity deficit caused by global population ageing and increase global resilience to economic slowdowns. We anticipate that these devices will also have a substantial effect on the future care of older people. For example, next-generation assistive devices could support older individuals who have deteriorating vision by providing haptic feedback or audio prompts, thereby improving their ability to access information and enhancing their autonomy and quality of life. In addition to hardware integration and innovative designs, software solutions could leverage existing wearable platforms, such as Ray-Ban Meta AI glasses and Apple’s Vision Pro, to support visual assistance and rehabilitation for blind and partially sighted individuals. These products already incorporate the essential hardware features, such as built-in cameras and auditory or haptic feedback systems. Building on this foundation, software applications could include enhanced image or video processing tailored to the specific visual needs of blind and partially sighted users, including object detection integrated with distance information. Through thoughtful software design and upgrades, these commercial products could be transformed into flexible and accessible assistive devices that support multiple kinds of information interactions.

Future assistive devices should consider the needs of blind and partially sighted individuals across different age groups. For children aged 0–5 years, the integration of tactile and auditory assistive tools with sensory-rich learning materials supports early cognitive and language development8,9. Primary school-age children (6–12 years) benefit from technologies such as tactile displays and audio guides that facilitate access to educational resources, whereas auditory tools promote their participation in sports, reduce isolation and foster cognitive and social growth169. For teenagers (aged 13–18 years), audio-guided skill-training tools and tactile simulators improve information delivery for vocational tasks, which promotes independence and career readiness170. Blind and partially sighted adults require workplace-adapted technologies that also foster social inclusion, including tactile graphic interfaces, real-time audio feedback systems and AI-powered virtual assistants113,171. These tools can streamline access to work-related information, enhance productivity and facilitate communication. Older individuals need assistive devices that feature intuitive interfaces and have a low cognitive load to address age-related impairments in cognitive and motor functioning172,173. For example, the integration of tactile feedback and audio prompts into smart solutions such as voice-activated home systems and touch-based navigation aids can simplify information delivery and enhance the autonomy of older people in activities of daily living.

As the global population ages and technology advances, the market size and demand for assistive wearable devices are expected to grow. Major technology companies have already invested substantial sums in enhancing the accessibility of their wearable products and in driving the development of smarter and more personalized assistive devices. However, the widespread adoption and affordability of these (currently expensive) devices might require various forms of financial support, including government subsidies, insurance coverage and partnerships with non-profit organizations. The exploration of innovative and reliable business models is essential to alleviate the financial burden on blind and partially sighted users. Flexible payment options, such as leasing, instalment plans and crowdfunding, could reduce up-front costs and enable more users to access these devices. Such strategies not only increase device accessibility and market penetration but also provide companies with sustainable financing opportunities. These approaches contribute to improving the accessibility and acceptance of assistive devices.

References

World Health Organization. Blindness and vision impairment. WHO https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment (2023).

Bourne, R. et al. Trends in prevalence of blindness and distance and near vision impairment over 30 years: an analysis for the Global Burden of Disease Study. Lancet Glob. Health 9, e130–e143 (2021).

Kim, N. W., Joyner, S. C., Riegelhuth, A. & Kim, Y. Accessible visualization: design space, opportunities, and challenges. Comput. Graph. Forum 40, 173–188 (2021).

Choi, J., Jung, S., Park, D. G., Choo, J. & Elmqvist, N. Visualizing for the non‐visual: enabling the visually impaired to use visualization. Comput. Graph. Forum 38, 249–260 (2019).

Morrison, J. H. & Baxter, M. G. The ageing cortical synapse: hallmarks and implications for cognitive decline. Nat. Rev. Neurosci. 13, 240–250 (2012).

Decorps, J., Saumet, J. L., Sommer, P., Sigaudo-Roussel, D. & Fromy, B. Effect of ageing on tactile transduction processes. Ageing Res. Rev. 13, 90–99 (2014).

Price, D. et al. Age-related delay in visual and auditory evoked responses is mediated by white- and grey-matter differences. Nat. Commun. 8, 15671 (2017).

Maurer, D., Lewis, T. & Mondloch, C. Missing sights: consequences for visual cognitive development. Trends Cogn. Sci. 9, 144–151 (2005).

Maurer, D., Mondloch, C. J. & Lewis, T. L. Effects of early visual deprivation on perceptual and cognitive development. Prog. Brain Res. 164, 87–104 (2007).

Schaadhardt, A., Hiniker, A. & Wobbrock, J. O. Understanding blind screen-reader users’ experiences of digital artboards. In Proc. 2021 CHI Conference on Human Factors in Computing Systems 1–19 (ACM, 2021).

Salisbury, E., Kamar, E. & Morris, M. Toward scalable social alt text: conversational crowdsourcing as a tool for refining vision-to-language technology for the blind. In Proc. AAAI Conference on Human Computation and Crowdsourcing Vol. 5, 147–156 (AAAI, 2017).

Zong, J. et al. Rich screen reader experiences for accessible data visualization. Comput. Graph. Forum 41, 15–27 (2022).

Morris, M. R., Johnson, J., Bennett, C. L. & Cutrell, E. Rich representations of visual content for screen reader users. In Proc. 2018 CHI Conference on Human Factors in Computing Systems 1–11 (ACM, 2018).

Ates, H. C. et al. End-to-end design of wearable sensors. Nat. Rev. Mater. 7, 887–907 (2022).

Liu, Z. et al. Functionalized fiber-based strain sensors: pathway to next-generation wearable electronics. Nano-Micro Lett. 14, 61 (2022).

Yin, R., Wang, D., Zhao, S., Lou, Z. & Shen, G. Wearable sensors-enabled human–machine interaction systems: from design to application. Adv. Funct. Mater. 31, 2008936 (2021).

Shen, S. et al. Human machine interface with wearable electronics using biodegradable triboelectric films for calligraphy practice and correction. Nano-Micro Lett. 14, 225 (2022).

Lin, M., Hu, H., Zhou, S. & Xu, S. Soft wearable devices for deep-tissue sensing. Nat. Rev. Mater. 7, 850–869 (2022).

Seneviratne, S. et al. A survey of wearable devices and challenges. IEEE Commun. Surv. Tuts 19, 2573–2620 (2017).

Yang, J. C. et al. Electronic skin: recent progress and future prospects for skin-attachable devices for health monitoring, robotics, and prosthetics. Adv. Mater. 31, 1904765 (2019).

Wang, Z., Liu, Y., Zhou, Z., Chen, P. & Peng, H. Towards integrated textile display systems. Nat. Rev. Electr. Eng. 1, 466–477 (2024).

Shi, X. et al. Large-area display textiles integrated with functional systems. Nature 591, 240–245 (2021).

Han, S. et al. Battery-free, wireless sensors for full-body pressure and temperature mapping. Sci. Transl. Med. 10, eaan4950 (2018).

Yang, Y. et al. Artificial intelligence-enabled detection and assessment of Parkinson’s disease using nocturnal breathing signals. Nat. Med. 28, 2207–2215 (2022).

Sardini, E., Serpelloni, M. & Pasqui, V. Wireless wearable T-shirt for posture monitoring during rehabilitation exercises. IEEE Trans. Instrum. Meas. 64, 439–448 (2015).

Malasinghe, L. P., Ramzan, N. & Dahal, K. Remote patient monitoring: a comprehensive study. J. Ambient. Intell. Hum. Comput. 10, 57–76 (2019).

Zu, L. et al. A self-powered early warning glove with integrated elastic-arched triboelectric nanogenerator and flexible printed circuit for real-time safety protection. Adv. Mater. Technol. 7, 2100787 (2022).

Sun, Z., Zhu, M., Shan, X. & Lee, C. Augmented tactile-perception and haptic-feedback rings as human–machine interfaces aiming for immersive interactions. Nat. Commun. 13, 5224 (2022).

Zhu, M., Sun, Z. & Lee, C. Soft modular glove with multimodal sensing and augmented haptic feedback enabled by materials’ multifunctionalities. ACS Nano 16, 14097–14110 (2022).

Zhou, Q., Ji, B., Hu, F., Luo, J. & Zhou, B. Magnetized micropillar-enabled wearable sensors for touchless and intelligent information communication. Nano-Micro Lett. 13, 197 (2021).

Slade, P., Tambe, A. & Kochenderfer, M. J. Multimodal sensing and intuitive steering assistance improve navigation and mobility for people with impaired vision. Sci. Robot. 6, eabg6594 (2021).

Mai, C. et al. Laser sensing and vision sensing smart blind cane: a review. Sensors 23, 869 (2023).

Tapu, R., Mocanu, B. & Zaharia, T. Wearable assistive devices for visually impaired: a state of the art survey. Pattern Recognit. Lett. 137, 37–52 (2020).

Yang, C. et al. LightGuide: directing visually impaired people along a path using light cues. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 5, 1–27 (2021).

Kim, E. H. et al. Interactive skin display with epidermal stimuli electrode. Adv. Sci. 6, 1802351 (2019).

Ehrlich, J. R. et al. Head-mounted display technology for low-vision rehabilitation and vision enhancement. Am. J. Ophthalmol. 176, 26–32 (2017).

Jafri, R. & Ali, S. A. Exploring the potential of eyewear-based wearable display devices for use by the visually impaired. In 2014 3rd International Conference on User Science and Engineering (i-USEr) 119–124 (IEEE, 2014).

Jensen, L. & Konradsen, F. A review of the use of virtual reality head-mounted displays in education and training. Educ. Inf. Technol. 23, 1515–1529 (2018).

Mauricio, C., Domingues, G., Padua, I., Peres, F. & Teixeira, J. in Symp. Virtual and Augmented Reality 203–212 (ACM, 2024).

Chew, Y. C. D. & Walker, B. N. What did you say?: visually impaired students using bonephones in math class. In Proc. 15th International ACM SIGACCESS Conference on Computers and Accessibility 1–2 (ACM, 2013).

Deemer, A. D. et al. Low vision enhancement with head-mounted video display systems: are we there yet? Optom. Vis. Sci. 95, 694–703 (2018).

Htike, H. M., Margrain, T. H., Lai, Y.-K. & Eslambolchilar, P. Ability of head-mounted display technology to improve mobility in people with low vision: a systematic review. Transl. Vis. Sci. Technol. 9, 26 (2020).

Li, Y. et al. A scoping review of assistance and therapy with head-mounted displays for people who are visually impaired. ACM Trans. Access. Comput. 15, 1–28 (2022).

Wang, Y. et al. Multiscale haptic interfaces for metaverse. Device 2, 100326 (2024).

Twyman, M., Mullenbach, J., Shultz, C., Colgate, J. E. & Piper, A. M. Designing wearable haptic information displays for people with vision impairments. In Proc. 9th International Conference on Tangible, Embedded, and Embodied Interaction (TEI) 341–344 (ACM, 2015).

Yang, W. et al. A survey on tactile displays for visually impaired people. IEEE Trans. Haptics 14, 712–721 (2021).

Qu, X. et al. Refreshable braille display system based on triboelectric nanogenerator and dielectric elastomer. Adv. Funct. Mater. 31, 2006612 (2021).

Kim, J. et al. Braille display for portable device using flip-latch structured electromagnetic actuator. IEEE Trans. Haptics 13, 59–65 (2020).

Ray, A., Ghosh, S. & Neogi, B. An overview on tactile display, haptic investigation towards beneficial for blind person. Int. J. Eng. Sci. Technol. Res. 6, 88 (2015).

Mukhiddinov, M. & Kim, S.-Y. A systematic literature review on the automatic creation of tactile graphics for the blind and visually impaired. Processes 9, 1726 (2021).

Holloway, L. et al. Animations at your fingertips: using a refreshable tactile display to convey motion graphics for people who are blind or have low vision. In Proc. 24th International ACM SIGACCESS Conference on Computers and Accessibility 1–16 (ACM, 2022).

Kim, J. et al. Ultrathin quantum dot display integrated with wearable electronics. Adv. Mater. 29, 1700217 (2017).

Luo, Y. et al. Programmable tactile feedback system for blindness assistance based on triboelectric nanogenerator and self-excited electrostatic actuator. Nano Energy 111, 108425 (2023).

Jang, S.-Y. et al. Dynamically reconfigurable shape-morphing and tactile display via hydraulically coupled mergeable and splittable PVC gel actuator. Sci. Adv. 10, eadq2024 (2024).

Zhang, Z. J. & Wobbrock, J. O. A11yBoard: making digital artboards accessible to blind and low-vision users. In Proc. 2023 CHI Conference on Human Factors in Computing Systems 1–17 (ACM, 2023).

Melfi, G., Müller, K., Schwarz, T., Jaworek, G. & Stiefelhagen, R. Understanding what you feel: a mobile audio-tactile system for graphics used at schools with students with visual impairment. In Proc. 2020 CHI Conference on Human Factors in Computing Systems 1–12 (ACM, 2020).

Siu, A. F. et al. In Design Thinking Research: Translation, Prototyping, and Measurement (eds Meinel, C. & Leifer, L.) 167–180 (Springer, 2021).

Lee, J., Herskovitz, J., Peng, Y.-H. & Guo, A. ImageExplorer: multi-layered touch exploration to encourage skepticism towards imperfect AI-generated image captions. In CHI Conference on Human Factors in Computing Systems 1–15 (ACM, 2022).

Liu, H. et al. HIDA: towards holistic indoor understanding for the visually impaired via semantic instance segmentation with a wearable solid-state LiDAR sensor. In 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW) 1780–1790 (IEEE, 2021).

Wang, H.-C. et al. Enabling independent navigation for visually impaired people through a wearable vision-based feedback system. In 2017 IEEE International Conference on Robotics and Automation (ICRA) 6533–6540 (IEEE, 2017).

Chen, Z., Liu, X., Kojima, M., Huang, Q. & Arai, T. A wearable navigation device for visually impaired people based on the real-time semantic visual SLAM system. Sensors 21, 1536 (2021).

Wang, R. et al. Revamp: enhancing accessible information seeking experience of online shopping for blind or low vision users. In Proc. 2021 CHI Conference on Human Factors in Computing Systems 1–14 (ACM, 2021).

Zhang, M. et al. AccessFixer: enhancing GUI accessibility for low vision users with R-GCN model. IEEE Trans. Softw. Eng. 50, 173–189 (2024).

Gleason, C. et al. Twitter A11y: a browser extension to make twitter images accessible. In Proc. 2020 CHI Conference on Human Factors in Computing Systems 1–12 (ACM, 2020).

Sharif, A., Chintalapati, S. S., Wobbrock, J. O. & Reinecke, K. Understanding screen-reader users’ experiences with online data visualizations. In 23rd International ACM SIGACCESS Conference on Computers and Accessibility 1–16 (ACM, 2021).

Billah, S. M., Ashok, V., Porter, D. E. & Ramakrishnan, I. V. Ubiquitous accessibility for people with visual impairments: are we there yet? In Proc. 2017 CHI Conference on Human Factors in Computing Systems 5862–5868 (ACM, 2017).

Thompson, J. R., Martinez, J. J., Sarikaya, A., Cutrell, E. & Lee, B. Chart reader: accessible visualization experiences designed with screen reader users. In Proc. 2023 CHI Conference on Human Factors in Computing Systems 1–18 (ACM, 2023).

Gorniak, J., Kim, Y., Wei, D. & Kim, N. W. VizAbility: enhancing chart accessibility with LLM-based conversational interaction. In Proc. 37th Annual ACM Symposium on User Interface Software and Technology 1–19 (ACM, 2024).

Moured, O. et al. Chart4Blind: an intelligent interface for chart accessibility conversion. In Proc. 29th International Conference on Intelligent User Interfaces 504–514 (ACM, 2024).

Pundlik, S., Shivshanker, P. & Luo, G. Impact of apps as assistive devices for visually impaired persons. Annu. Rev. Vis. Sci. 9, 111–130 (2023).

Wang, Y., Zhao, Y. & Kim, Y.-S. How do low-vision individuals experience information visualization? In Proc. 2024 CHI Conference on Human Factors in Computing Systems 1–15 (ACM, 2024).

Tseng, Y.-Y., Bell, A. & Gurari, D. VizWiz-FewShot: locating objects in images taken by people with visual impairments. In Computer Vision – ECCV 2022 (eds Avidan, S. et al.) Vol. 13668 (Springer, 2022).

Gurari, D., Zhao, Y., Zhang, M. & Bhattacharya, N. Captioning images taken by people who are blind. In Computer Vision – ECCV 2020 (eds Vedaldi, A. et al.) Vol. 12362, 417–434 (Springer International, 2020).

Chen, C., Anjum, S. & Gurari, D. Grounding answers for visual questions asked by visually impaired people. In 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 19076–19085 (IEEE, 2022).

Ishmam, M. F., Shovon, M. S. H., Mridha, M. F. & Dey, N. From image to language: a critical analysis of visual question answering (VQA) approaches, challenges, and opportunities. Inf. Fusion 106, 102270 (2024).

Naik, N., Potts, C. & Kreiss, E. Context-VQA: towards context-aware and purposeful visual question answering. In 2023 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW) 2813–2817 (IEEE, 2023).

Fan, D.-P. et al. Camouflaged object detection. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition 2774–2784 (IEEE, 2020).

Dessì, R. et al. Cross-domain image captioning with discriminative finetuning. In 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition 6935–6944 (IEEE, 2023).

Real, S. & Araujo, A. Navigation systems for the blind and visually impaired: past work, challenges, and open problems. Sensors 19, 3404 (2019).

Caraiman, S. et al. Computer vision for the visually impaired: the sound of vision system. In 2017 IEEE International Conference on Computer Vision Workshops (ICCVW) 1480–1489 (IEEE, 2017).

Mandal, M., Ghadiyaram, D., Gurari, D. & Bovik, A. C. Helping visually impaired people take better quality pictures. IEEE Trans. Image Process. 32, 3873–3884 (2023).

Motta, G. et al. In Mobility of Visually Impaired People: Fundamentals and ICT Assistive Technologies (eds Pissaloux, E. & Velazquez, R.) 469–535 (Springer, 2018).

Rodgers, M. D. & Emerson, R. W. Materials testing in long cane design: sensitivity, flexibility, and transmission of vibration. J. Vis. Impair. Blind. 99, 696–706 (2005).

Liu, H. et al. Toward image-to-tactile cross-modal perception for visually impaired people. IEEE Trans. Autom. Sci. Eng. 18, 521–529 (2021).

Dai, X. et al. A phonic braille recognition system based on a self-powered sensor with self-healing ability, temperature resistance, and stretchability. Mater. Horiz. 9, 2603–2612 (2022).

Liu, W. et al. Enhancing blind–dumb assistance through a self-powered tactile sensor-based braille typing system. Nano Energy 116, 108795 (2023).

Thiele, J. E., Pratte, M. S. & Rouder, J. N. On perfect working-memory performance with large numbers of items. Psychon. Bull. Rev. 18, 958–963 (2011).

Wolfe, J. M. & Horowitz, T. S. Five factors that guide attention in visual search. Nat. Hum. Behav. 1, 0058 (2017).

Mangun, G. R. Neural mechanisms of visual selective attention. Psychophysiology 32, 4–18 (1995).

Li, J., Yan, Z., Shah, A., Lazar, J. & Peng, H. Toucha11y: making inaccessible public touchscreens accessible. In Proc. 2023 CHI Conference on Human Factors in Computing Systems 1–13 (ACM, 2023).

World Wide Web Consortium (W3C). Understanding success criterion 1.1.1—understanding WCAG 2.0. W3C https://www.w3.org/TR/UNDERSTANDING-WCAG20/text-equiv-all.html (2023).

He, X. & Deng, L. Deep learning for image-to-text generation: a technical overview. IEEE Signal. Process. Mag. 34, 109–116 (2017).

Liu, S., Zhu, Z., Ye, N., Guadarrama, S. & Murphy, K. Improved image captioning via policy gradient optimization of SPIDEr. In 2017 IEEE International Conference on Computer Vision (ICCV) 873–881 (IEEE, 2017).

Stefanini, M. et al. From show to tell: a survey on deep learning-based image captioning. IEEE Trans. Pattern Anal. Mach. Intell. 45, 539–559 (2023).

MacLeod, H., Bennett, C. L., Morris, M. R. & Cutrell, E. Understanding blind people’s experiences with computer-generated captions of social media images. In Proc. 2017 CHI Conference on Human Factors in Computing Systems 5988–5999 (ACM, 2017).

Nair, V., Zhu, H. H. & Smith, B. A. ImageAssist: tools for enhancing touchscreen-based image exploration systems for blind and low vision users. In Proc. 2023 CHI Conference on Human Factors in Computing Systems 1–17 (ACM, 2023).

Stangl, A., Morris, M. R. & Gurari, D. ‘Person, shoes, tree. Is the person naked?’ What people with vision impairments want in image descriptions. In Proc. 2020 CHI Conference on Human Factors in Computing Systems 1–13 (ACM, 2020).

Gurari, D. et al. VizWiz grand challenge: answering visual questions from blind people. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 3608–3617 (IEEE, 2018).

Murphy, E., Kuber, R., McAllister, G., Strain, P. & Yu, W. An empirical investigation into the difficulties experienced by visually impaired Internet users. Univers. Access. Inf. Soc. 7, 79–91 (2008).

Ohshiro, T., Angelaki, D. E. & DeAngelis, G. C. A normalization model of multisensory integration. Nat. Neurosci. 14, 775–782 (2011).

Talsma, D., Senkowski, D., Soto-Faraco, S. & Woldorff, M. G. The multifaceted interplay between attention and multisensory integration. Trends Cogn. Sci. 14, 400–410 (2010).

Stein, B. E. & Stanford, T. R. Multisensory integration: current issues from the perspective of the single neuron. Nat. Rev. Neurosci. 9, 255–266 (2008).

McDonald, J. J., Teder-Sälejärvi, W. A. & Ward, L. M. Multisensory integration and crossmodal attention effects in the human brain. Science 292, 1791 (2001).

Shams, L. & Seitz, A. R. Benefits of multisensory learning. Trends Cogn. Sci. 12, 411–417 (2008).

Noppeney, U. Perceptual inference, learning, and attention in a multisensory world. Annu. Rev. Neurosci. 44, 449–473 (2021).

Foxe, J. J. Multisensory integration: frequency tuning of audio–tactile integration. Curr. Biol. 19, R373–R375 (2009).

Vercillo, T. & Gori, M. Attention to sound improves auditory reliability in audio–tactile spatial optimal integration. Front. Integr. Neurosci. 9, 34 (2015).

Wang, J., Wang, S. & Zhang, Y. Artificial intelligence for visually impaired. Displays 77, 102391 (2023).

Fei, N. et al. Towards artificial general intelligence via a multimodal foundation model. Nat. Commun. 13, 3094 (2022).

Mukhiddinov, M. & Cho, J. Smart glass system using deep learning for the blind and visually impaired. Electronics 10, 2756 (2021).

Ahmetovic, D., Kwon, N., Oh, U., Bernareggi, C. & Mascetti, S. Touch screen exploration of visual artwork for blind people. In Proc. Web Conference 2021 (WWW) 2781–2791 (ACM, 2021).

Holloway, L., Marriott, K., Butler, M. & Borning, A. Making sense of art: access for gallery visitors with vision impairments. In Proc. 2019 CHI Conference on Human Factors in Computing Systems 1–12 (ACM, 2019).

Liu, H. et al. Angel’s girl for blind painters: an efficient painting navigation system validated by multimodal evaluation approach. IEEE Trans. Multimed. 25, 2415–2429 (2023).

Bornschein, J., Bornschein, D. & Weber, G. Blind Pictionary: drawing application for blind users. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems 1–4 (ACM, 2018).

Granquist, C. et al. Evaluation and comparison of artificial intelligence vision aids: Orcam MyEye 1 and Seeing AI. J. Vis. Impair. Blind. 115, 277–285 (2021).

Goncu, C. & Marriott, K. In Human–Computer Interaction—INTERACT 2011 (eds Campos, P. et al.) 30–48 (Springer, 2011).

de Jesus Oliveira, V. A., Brayda, L., Nedel, L. & Maciel, A. Designing a vibrotactile head-mounted display for spatial awareness in 3D spaces. IEEE Trans. Vis. Comput. Graph. 23, 1409–1417 (2017).

Peiris, R. L., Peng, W., Chen, Z. & Minamizawa, K. Exploration of cuing methods for localization of spatial cues using thermal haptic feedback on the forehead. In 2017 IEEE World Haptics Conference (WHC) 400–405 (IEEE, 2017).

Tang, Y. et al. Advancing haptic interfaces for immersive experiences in the metaverse. Device 2, 100365 (2024).

Leng, S. et al. Mitigating object hallucinations in large vision-language models through visual contrastive decoding. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 13872–13882 (IEEE, 2024).

Zhou, Y. et al. Analyzing and mitigating object hallucination in large vision-language models. In 12th International Conference on Learning Representations (ICLR, 2024).

Zhang, Y.-F. et al. Debiasing multimodal large language models. Preprint at https://doi.org/10.48550/arXiv.2403.05262 (2024).

Jiang, L., Jung, C., Phutane, M., Stangl, A. & Azenkot, S. ‘It’s kind of context dependent’: Understanding blind and low vision people’s video accessibility preferences across viewing scenarios. In Proc. 2024 CHI Conference on Human Factors in Computing Systems 1–20 (ACM, 2024).