Abstract

Real-time detection and accurate segmentation of chip pads are important tasks to ensure chip alignment and position correction. To address the challenges of small target chip pad detection, segmentation accuracy and model lightweight, this paper proposes a lightweight chip pad instance segmentation algorithm based on an improved YOLOv8-seg, named YOLOv8-seg-CP (chip pad). Firstly, we integrate the next-generation lightweight StarNet into the original backbone network to enhance fine feature capture capabilities while reducing the number of parameters. Then, we construct the C2f-Star module in the neck network, which enhances the feature extraction performance for small chip pad targets. This maintains accuracy, reduces computational load, and improves detection and segmentation speed. On this basis, we introduce a lightweight shared convolution segmentation head (LSCSH), significantly reducing both parameter count and computational load while enhancing segmentation performance. Additionally, we propose a CGCAFusion convolutional attention fusion module. This module uses a content-guided convolutional attention fusion mechanism to dynamically adjust attention weights based on the content of input features, capturing both global and local feature information and enhancing multimodal feature fusion. Experiments on the chip pad dataset demonstrate that our algorithm achieves a detection and segmentation accuracy of 89.8%. The model size, parameters, and FLOPs are 3.7 M, 1.7 M, and 8.2 G respectively, representing reductions of 45.6%, 50%, and 31.7% compared to the baseline model. The FPS is 1399.3, an improvement of 25.8% over the baseline model. The inference time is 0.72ms, which is 0.18ms less than the baseline model. Extensive experimental results on COCO, carparts-seg, and crack-seg datasets further show that the improved YOLOv8n-seg model outperforms many existing advanced methods in terms of performance. This approach holds significant industrial application value for fully automated chip testing and sorting integrated production.

Similar content being viewed by others

Introduction

The chip pad segmentation task is a key link in wafer accurate positioning, alignment, and auxiliary correction. It is one of the key technologies in the field of semiconductor automated manufacturing. Accurate detection and segmentation of chip pads can help correct potential production defects in the fully automatic chip test and sorting production integrated process, ensure the quality and reliability of the chip, and play an important role in the subsequent alignment and correction decision-making process1,2,3.

In the actual chip industrial manufacturing process, chip alignment and correction operations greatly impact the chip yield. In recent years, the rapid advancement of machine vision and deep learning technology has offered new solutions for automating industrial quality inspection in chip manufacturing. These methods are particularly effective in the chip alignment and correction process, enabling real-time detection and segmentation of chip pad defects4. Additionally, they allow for accurate positioning and specific segmentation shapes to correct the alignment of the probe and chip pad5,6. In the actual chip testing and sorting production, the instance segmentation algorithm is used to detect and segment the chip pad in real time. If the chip pad is detected, it means that the chip pad is not aligned, and the probe and pad position are corrected using the segmented pad shape. If the chip pad cannot be detected during the alignment process, the alignment is considered successful7,8,9.

Chip pad image segmentation techniques can be broadly categorized into traditional image segmentation algorithms and deep learning-based instance segmentation algorithms10. Traditional chip image segmentation methods mostly rely on threshold segmentation methods, which screen and assign images to corresponding categories based on their grayscale features. However, this method is inefficient and has poor robustness. Currently, most advanced instance segmentation algorithms integrate deep learning technologies, which meet the high real-time performance and accuracy demands of industrial applications11. Classical two-stage instance segmentation algorithms include Mask R-CNN12 and Cascade Mask-R-CNN13. Chiu et al.14 used Mask R-CNN for wafer defect classification. Wu et al.15 applied Mask R-CNN for the classification, localization, and segmentation of solder joint defects. Despite the high accuracy of R-CNN series algorithms, their complex computations and slow segmentation speeds prevent real-time application. One-stage instance segmentation algorithms include YOLACT16, SOLO series17,18, and YOLO series19,20,21 algorithms. Zeng et al.22 improved small object segmentation accuracy by designing a lightweight YOLACT. Zou et al.23 proposed a lightweight segmentation network based on SOLOv2 for extracting weld seam features. Shinde et al.24 utilized an improved YOLO algorithm for accurate wafer defect localization and classification. Xu et al.25 proposed a chip pad alignment detection algorithm based on improved YOLOv5. Glučina et al.26 used a YOLOv5 segmentation variant for accurate PCB classification and segmentation. Yasir et al.27 applied YOLOv7 for precise position and shape extraction in ship image instance segmentation. The goal of improving the lightweight detection performance of small targets is to reduce the parameters and computational complexity in the network model. Im Choi et al.28 proposed a gradient-based visual detection saliency measurement method to guide channel pruning, which uses fewer parameters to achieve better performance than the original model. Shang et al.29 used channel pruning technology based on the YOLOv5s model to achieve a lightweight coal mine stone recognition and detection model. Although pruning technology can reduce network model parameters, it will cause a significant decrease in accuracy. The YOLO algorithm is one of the most mainstream one-stage instance segmentation algorithms, especially the YOLOv8-seg algorithm30, which stands out for its significant advantages in segmentation accuracy and efficiency. Therefore, this paper selects the YOLOv8-seg network as the baseline for chip pad detection and segmentation, with the aim of further enhancing its accuracy and reducing its parameter size.

To address the challenges of real-time detection and precise segmentation of chip pads, this paper proposes an improved YOLOv8-seg network algorithm. This algorithm improves the accuracy of detecting and segmenting small target chip pads. It also reduces model parameters and computational load, which meets the requirements of automated production in fully automatic chip testing and sorting machines in the industry.

The main contributions and innovations of this work are as follows:

-

1.

Adoption of a new, more lightweight backbone network: We conducted in-depth research on lightweight backbone networks and replaced the original backbone network. This new lightweight network significantly reduces the model’s computational cost and improves inference speed.

-

2.

Construction of a new C2f-Star Block: While enhancing the feature extraction performance of small target chip pads, this reduces model complexity, ensuring dual improvements in accuracy and efficiency, making it suitable for fully automated chip testing and sorting integrated production detection and segmentation tasks.

-

3.

We propose a novel lightweight shared convolution segmentation head: by introducing shared convolution and innovatively incorporating multi-scale perception layers, this approach enhances segmentation performance representation, significantly reduces the model’s computational load, and effectively improves the real-time detection and precise segmentation of chip pads.

-

4.

Introduction of a new attention fusion module: Using a content-guided convolutional attention fusion mechanism, this module dynamically and adaptively adjusts attention weights based on the content of input features, enhancing the ability to capture both global and local feature information and improving multimodal feature fusion effects, which is beneficial for real-time tasks in industrial chip pad detection and segmentation.

Datasets and methods

Datasets and pre-processing

The chip pad dataset used in this study was collected from a semiconductor company in Guangxi, China. Based on the chip pads from actual tests, we constructed an image dataset of chip pads using an industrial camera with a resolution of 6112 × 3440 pixels, capturing images under various lighting conditions. The industrial camera was equipped with a 10 cm focal length, a 200× magnification eyepiece, and a 20-megapixel CMOS sensor. Some samples from the dataset are shown in Fig. 1a–d, where chip pad (rig) requires alignment with the probe, while chip pad (wro) does not require probe alignment.

The collected data underwent filtering and organization, and data augmentation was applied to some images to enhance sample usability. Subsequently, we used the X-Anylabeling31 software to label the collected images of different chip pads and probe samples. The chip pad dataset contains 6017 chip pads (rig), 1151 chip pads (wro) and 3566 probes, with a total of 1006 image samples divided into training set, validation set and test set in a ratio of 7:1.5:1.5. The upper part of Fig. 1e illustrates the target categories, quantities, and the size of bounding boxes used for labeling in the training dataset. The lower part of Fig. 1e shows the distribution of target sizes and the distribution of target locations. The figure indicates that target sizes are uneven, with a large number of small targets, and target locations are concentrated in the center of the images.

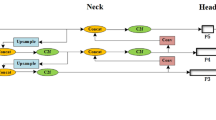

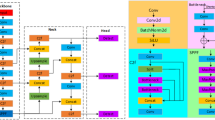

Improved YOLOv8-seg network

To address the issues of non-real-time detection, imprecise segmentation, and excessive parameters and computational load in traditional chip pad detection networks, This paper proposes a novel lightweight instance segmentation model for chip pads, named YOLOv8-seg-CP (chip pad), as illustrated in Fig. 2 (In the figure, P3, P4, and P5 are feature maps of different downsampling scales extracted by Backbone). This model not only improves the accuracy of chip pad and probe detection and segmentation but also significantly reduces the network’s computational load and parameter count while maintaining accuracy. The model includes four key improvements. Firstly, the integration of the next-generation lightweight StarNet into the backbone network enables the network to capture long-range dependencies in chip pad images, achieving high computational efficiency and acquiring more global feature information. Secondly, the construction of the C2f-Star Block in the neck network of YOLOv8n-seg enhances the feature extraction of complex chip pad images, reduces computational load, and improves detection and segmentation speed. Thirdly, the introduction of a novel lightweight segmentation head module (LSCSH) enhances the representation and segmentation capabilities while significantly reducing the parameter count. In addition, we have introduced a CGCAFusion convolutional attention fusion module. This module dynamically builds contextual relationships based on input feature content using a content-guided convolutional attention mechanism. As a result, it effectively captures both global and local information, leading to a significant improvement in detection accuracy and segmentation precision. Finally, the loss functions of the improved model remain consistent with those of the YOLOv8n-seg model. This includes the VFL loss function for classification tasks and the CIOU loss function combined with the DFL loss function for regression tasks.

StarNet

StarNet32 is an efficient and lightweight network designed by Microsoft in 2024. As illustrated in Fig. 3, StarNet introduces a method to simplify network design through star operations, which can achieve efficient feature representation without complex feature fusion or multi-branch structures. By replacing the original backbone network with the StarNet network, the computational load and the number of parameters can be significantly reduced, while maintaining the same number of output feature map channels as in the original convolutional layers.

StarNet adopts a four-level hierarchical architecture, utilizing convolutional layers for downsampling and employing Blocks for feature extraction. To meet efficiency requirements, Batch Normalization has replaced Layer Normalization. It is positioned after the depth-wise convolution, enabling feature fusion during the inference stage. As shown in Fig. 4, the “star operation” is a method that merges features through element-wise multiplication of two linear transformations. The StarConvBN module in StarNet is a form of depth-wise separable convolution. It first applies a 2D convolution with a stride of 1, dilation factor of 1, and a kernel size of 1 to generate feature maps. These feature maps are then added to the module and undergo the “star operation” of element-wise multiplication to obtain the fused features. Finally, batch normalization is applied to the output of the 2D convolution to produce the complete feature representation. The batch normalization weights and biases are initialized to 1 and 0, respectively.

As shown in Fig. 5, the StarBlock first applies a Depth-wise Convolution (DWConv) layer for preliminary feature extraction and channel reorganization. This is followed by two Conv2d layers to expand the number of channels. Next, an element-wise multiplication operation is performed, enabling feature interaction and fusion. The result of the multiplication is then activated using ReLU6. The activated features are then passed through a second DWConv layer to reduce the number of channels, further refining the features to match the input channel count. DropPath regularization is applied to improve the model’s generalization capabilities. Finally, the features obtained from the previous step are combined with the initial input features through a residual connection and feature fusion. Compared to the Bottleneck structure, the StarBlock achieves higher feature extraction capabilities with fewer parameters.

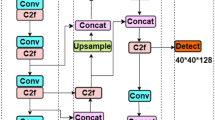

C2f-Star

The C2f-Star module replaces the Bottleneck layers in the original network with StarBlock layers, significantly reducing the redundant computations introduced by standard convolutions in the Bottleneck. This reduction in parameters does not compromise the chip pad feature extraction capability, making the model suitable for deployment on mobile or edge devices. Consequently, this enhancement facilitates real-time detection and precise segmentation of chip pads. The structure of the C2f-Star module is shown in Fig. 6. First, the input passes through a convolution layer (Conv) to extract initial features. After the Split operation, the output of the convolution layer is divided into multiple branches and sent to subsequent processing modules. The input features are then processed by the first StarBlock module, followed by further refinement in the second StarBlock module. StarBlock is an improved structure that replaces the traditional Bottleneck module to reduce redundant computations and enhance feature extraction efficiency. The output from the StarBlock and the features from other branches are concatenated (Concat). This fusion of features from different paths integrates multiple scales of information, boosting the model’s feature representation capability. Finally, the concatenated features pass through another convolution layer to generate the final output feature map. Compared to the original neck convolution, C2f-Star reduces computational complexity while improving feature extraction.

LSCSH

The original segmentation head of YOLOv8-seg has several limitations. First, the original segmentation head contains a large number of parameters, accounting for one-fifth of the total computation of the algorithm. Each of the three segmentation heads in the original network requires two 3 × 3 convolutions and one 1 × 1 convolution to extract image feature information. This complex structure significantly increases both the parameter count and the computational load of the model. Second, the original segmentation head module uses a traditional single-scale segmentation structure, which is ineffective at handling multi-scale targets. It segments from only one scale of the feature map, ignoring contributions from other scales and failing to address the issue of inconsistent target scales for each segmentation head. Third, the original segmentation head struggles to achieve fine-grained segmentation for small targets.

To address these three key issues, we propose a novel segmentation head structure called LSCSH (Lightweight Shared Convolution Segmentation Head). We introduced GroupNorm convolution in this head structure, which has been proved in the FOCS33 paper to improve the segmentation head localization and classification performance greatly. The structure is illustrated in Fig. 7. The core idea of this design is to replace the two conventional convolutions used in the three segmentation heads with a shared Group Normalization convolution (as indicated by the green and yellow parts in the figure). Additionally, we introduce an unshared 1 × 1 shared convolution, a 3 × 3 shared convolution, and a mask convolution at the P3, P4, and P5 levels of the feature pyramid, respectively (as indicated by the white parts in the figure). This allows for a lightweight segmentation head that improves fine-grained segmentation while reducing parameter weights. Furthermore, to address the issue of inconsistent target scales segmented by each head, a scale layer is introduced to adjust feature scaling. Through this new segmentation head structure, we can significantly reduce the number of parameters and computational load while maintaining accuracy, and endow the segmentation head with stronger multi-scale perception capabilities. This makes it highly suitable for deployment on resource-constrained edge devices.

CGCAFusion

Multimodal learning and attention mechanisms are among the most prominent research areas in contemporary deep learning. Integrating attention fusion into small object recognition tasks offers significant innovation. As a component of multimodal fusion, attention fusion constructs connections between different modalities through attention mechanisms, thereby enhancing the model’s ability to handle complex tasks. Introducing a multimodal attention mechanism network during the feature fusion stage allows the model to focus more on critical feature areas. There are several mainstream attention mechanisms, including channel attention mechanisms like SE34 and CA35, spatial attention mechanisms like SGE36, coordinate attention mechanisms like ELA37, sparse attention mechanisms like BRA38, and hybrid attention mechanisms like CBAM39, CPCA40, MLCA41, and EMA42. However, these attention mechanisms are not optimized for fusing modal features.

Due to the high number and clutter of small objects such as chip pads and probes, precise segmentation is challenging. To enhance the model’s ability to extract and fuse features from multiple chip pads and probes, we propose CGCAFusion. It utilizes a content-guided convolutional attention fusion mechanism. This mechanism dynamically adjusts attention weights based on the content of the input features, capturing long-range dependencies and enhancing the modeling capabilities of both global and local feature information, thereby achieving effective multimodal feature fusion. As illustrated in Fig. 8, the structure of CGCAFusion involves weighting the input features, which are then processed through the CGA43 content-guided module and the CAFM44 convolutional attention fusion for feature extraction. Following this, a convolutional transformation adjusts the channel numbers to enhance the model’s expressive power, resulting in the final output of fused features. The CGA (Content-Guided Attention) module assigns unique spatial features to each input channel in a coarse-to-fine manner, helping the model focus on key regions within each channel. It combines channel and spatial attention weights, emphasizing the most important information during feature encoding to ensure effective information exchange. The CAFM (Convolution and Attention Fusion Module) is a simplified design, inspired by transformer-based45 methods known for extracting global features and capturing long-range dependencies. The global branch uses self-attention to capture comprehensive chip pad information, while the local branch focuses on extracting local features to reduce noise. While transformer-based methods offer strong global feature modeling, they come with high computational complexity in high-resolution tasks, which hampers real-time processing. In contrast, CGCAFusion simplifies capturing both global and local information, maintaining efficiency and lightweight computation. This balance between local and global feature integration makes it well-suited for real-time chip pad detection and segmentation tasks.

As can be seen from Fig. 8, for the given two input tensors\(F \in {R^{C \times H \times W}}\), where C represents the channel dimension, \(H \times W\) indicates spatial resolution, CGCAFusion Output in sequence \({F^{\prime }}\), \({M_{CAFM}}\), \({F^{\prime \prime }},\) \({M_{CGA}},\) \({F^{\prime \prime \prime }},\) \({M_{Fusion}}\) and \({F_{out}}\). The overall attention fusion process can be described as follows: First, the corresponding elements of the two input tensors are added together. The resulting initial features are then processed by the CAFM module, which captures relationships between different channel features to facilitate feature fusion. Next, these features are input into the CGA module to learn spatial feature fusion, enabling the network to focus on important regions within the image. This is achieved by applying the \(Sigmoid\) activation function to each spatial attention map to obtain the spatial fusion weights for each depth convolution. The channel-level and spatial-level fusion results are then weighted and combined using the Fusion module, enhancing the network’s ability to extract and represent fused attention features. Finally, a convolutional layer adjusts the channels of the fused features, generating the final attention-fused features tailored to the requirements of downstream tasks. The fused result \({F_{out}},\) effectively balances local and global feature channel information. It reduces noise and redundant information in the features, enhancing the model’s robustness. In Fig. 8, there are three key operations: \({M_{CAFM}},\) \({M_{CGA}},\) and \({M_{Fusion}}.\) For step \({M_{CAFM}},\) the initial input feature \({F^{\prime }}\) is first received. It is processed through a 3D convolutional layer to generate queries, keys, and values, and further refined by a depthwise separable convolution. The module then splits the queries, keys, and values into multiple attention heads and uses the self-attention mechanism to capture global features. The attention weights are calculated by normalizing the queries and keys, then multiplied with the values to obtain the global attention output. At the same time, local convolution enhances the representation of local features. Finally, a weighted fusion of local and global attention features results in the output feature \({F^{\prime \prime }},\) which integrates both global and local information to meet the needs of context-aware tasks. For \({M_{CGA}},\) the input features \({F^\prime }\) and \({F^{\prime \prime }}\) are first received, and channel attention and spatial attention are applied to extract important information from the initial features. By combining the results of both attentions, a fused feature map is generated. Next, pixel attention calculates pixel-level attention weights based on the previously fused features. Finally, these weights dynamically adjust the contributions of the two input features, and the output is further refined through a convolutional layer. This process effectively integrates information at the channel, spatial, and pixel levels, enhancing the feature representation capacity.

where \({M_{CAFM}}\)is the convolutional attention fusion module, \({M_{CGA}}\) is the content-guided attention module, \(Sigmoid\) is the activation function, \(Conv\) is a 1 × 1 convolution, which is mainly implemented by a 1 × 1 2D convolution layer, \({M_{Fusion}}\) is the fusion module, and its structure is shown in Fig. 9.

For\({M_{Fusion}}\), Fig. 9 illustrates the details of the proposed Fusion hybrid integration structure. The core component utilizes CAFM to dynamically adjust spatial weights. The low-dimensional and high-dimensional input features are weighted and summed before being processed by the CAFM to compute the fusion weights, which are then combined using a weighted summation method. A skip connection layer is subsequently added to incorporate the features after convolutional attention fusion, enhancing the fusion capability of local and global spatial features while mitigating the gradient vanishing problem. Finally, the convolutional fusion features are projected through a 1 × 1 convolutional layer to obtain the final convolutional attention fusion features \({F_{fusion}}\). By integrating global and local feature information, the model achieves more precise feature fusion and enhancement. It combines the convolution and self-attention mechanisms of the CAFM module to capture multi-scale features. At the same time, the pixel attention module adjusts the feature weights to ensure the model focuses on important regions of the image. This feature fusion significantly improves the model’s performance in real-time detection and segmentation tasks.

Experiments and results

Experimental environment and training strategies

The experimental setup for the YOLOv8-seg algorithm in this study is composed of the components listed in Table 1. This includes the hardware platform and the deep learning framework employed in the experiments.

This study uses the YOLOv8n-seg model as the baseline network for improved training. The hyperparameter settings of the training process are outlined in Table 2.

Performance comparison of different models

The improved algorithm in this paper is compared with most of the current mainstream instance segmentation models: Mask-RCNN, YOLOv5n-seg, YOLOv7-seg, YOLOv8n-seg, YOLOv8s-seg, YOLOv8x-seg, YOLACT, SOLOv2, CondInst46, BoxInst47, ConvNeXt-V248 and YOLOv9c-seg49. The model performance is evaluated using main indicators such as detection average precision mAP(Box), segmentation average precision mAP(Mask), floating-point operations per second (FLOPs), parameters, FPS (Frames Per Second), model size, and inference time, as shown in Table 3.

Firstly, the improved model achieved an average detection and segmentation accuracy of 89.8%, which is 1.1% higher in mAP(Box) and 1.5% higher in mAP(Mask) compared to the original YOLOv8n-seg algorithm. This indicates that our algorithm outperforms most existing mainstream algorithms in both detection and segmentation accuracy. For individual target class recognition accuracy, the improved model achieved 91.8% for chip pads (rig), 96.9% for chip pads (wro), and 80.7% for probes, surpassing other algorithms and matching the latest YOLOv9c-seg algorithms. This demonstrates significant improvements in recognition accuracy and robustness across different target categories. Secondly, the improved model achieves a size of 3.5 MB, FLOPs of 8.2G, with 1.7 M parameters, an FPS of 1399.3, and an inference time of 0.72 ms, outperforming the latest lightweight algorithms such as YOLOv5n-seg. These enhancements make the model more suitable for deployment in automated industrial chip testing and sorting processes. Compared to the aforementioned algorithms, the proposed method demonstrates superior performance in terms of mAP (Box), mAP (Mask), parameter count, FPS, model size, and inference time. Considering all the key metrics, the proposed algorithm balances accuracy and real-time performance, making the model more lightweight and effective for real-time chip pad detection and precise segmentation tasks. In order to more intuitively compare the performance of these models, scatter plots are used for visualization, as shown in Figs. 10 and 11. As shown in Fig. 10, the improved model (highlighted in the green square) outperforms other algorithms in terms of accuracy and FPS. As shown in Fig. 11, the improved model outperforms other algorithms in terms of accuracy and parameter performance.

The performance of the algorithm has been experimentally validated, and the final detection and segmentation results are shown in Fig. 12. The figure shows that the improved model detects more chip pads and probes than the baseline model. It also achieves higher detection and segmentation accuracy. Red boxes mark chip pads needing probe alignment (rig), pink boxes show pads that don’t require alignment (wro), and orange boxes highlight the detected probes. Under identical conditions, the improved model boosts chip pad recognition accuracy and increases the number of identified probes and chip pads. This confirms the superior performance of our improved model. To further verify the effectiveness, Grad-CAM50 was used for heatmap visualization of the results. As shown in Fig. 13, the red areas represent the network’s focus, with deeper colors indicating higher attention. Compared to the baseline model, the improved model shows deeper attention to chip pads and detects more chip pads and probes. This indicates the improved model’s higher effectiveness and superior performance. It also strikes a better balance between accuracy and speed, highlighting its advantages in model size, precision, and processing speed.

Backbone comparison experiment

In Table 4, the performance of StarNet is compared to GhostNet51, FasterNet52, and MobileNetv453. The results indicate that using the StarNet backbone module achieves the highest recognition accuracy, with mAP50 (Box) increasing by 0.7% and mAP (Mask) increasing by 1%. Both accuracy are much higher than GhostNet, and it is lighter and better than FasterNet and MobileNet4 in terms of parameters. The other indicators in the comprehensive table show that the use of the StarNet module has good performance in parameters, FLOPS, FPS, and model size while ensuring high accuracy.

Comparison experiment on different convolutions in neck

The C2f-Star module aims to leverage the benefits of StarNet and C2f to be more lightweight while maintaining original convolution accuracy. Table 5 shows the performance comparison experiment between C2f-RetBlock54, C2f-PKI55, C2f-fadc56 and C2f-Star. The main indicators in the table show that the C2f-Star module not only has the highest accuracy but also outperforms other modules in terms of parameters, model size, FLOPs, and speed.

Comparison experiment on segment head

LSCSH combines the advantages of GroupNormalization and shared convolution, improving the fine-grained segmentation performance while being more lightweight. Table 6 is a performance comparison experiment between EfficientHead57, LADH58 and LSCSH. It can be seen that the detection and segmentation accuracy are the highest when the LSCSH module is used, and it is also lighter, with mAP50 (Box) increased by 1.2%, mAP50 (Mask) increased by 1.4%, parameters, model size, and FLOPs reduced by 26.48%, 26.48%, and 16.7%, respectively, and FPS increased by 17.2%.

Comparison experiment on attention mechanisms

Table 7 selects mAP(Box) and mAP(Mask) as the main evaluation indicators to compare the attention method proposed in this article with the current mainstream attention method. It can be seen from a large number of experimental results that the mAP (Box) of the original baseline is 88.7%, and the mAP (Mask) is 88.3%. After implementing the CCGAusion attention fusion method, the mAP50 (Box) reaches 90.3%, and the mAP50 (Mask) reaches 90.2%. Compared with other attention mechanism modules, the average accuracy of detection and segmentation of our proposed attention method is the highest. Significant accuracy improvements can be achieved in small target detection and segmentation tasks.

Ablation experiment

To verify whether the improved module of YOLOv8-seg-CP is effective, this article conducted an ablation experiment on the chip pad data set. The experimental results are shown in Table 8. The improved algorithm uses a more efficient network structure to enhance the YOLOv8n-seg architecture, thereby improving recognition accuracy while reducing model parameters and computational complexity to increase inference speed. The improved algorithm adds StartNet, C2f-Star and LSCSH modules respectively to improve the accuracy of the algorithm while significantly reducing the parameters and computational complexity of the model. The last added CGCAFusion attention fusion mechanism effectively improves the accuracy of detection and segmentation. Compared with the YOLOv8n-seg algorithm, the final improved model not only improves in accuracy, but also reduces the model size, parameters and FLOPs by 45.6%, 50% and 31.7% respectively, and increases the FPS by 25.8%, which effectively reduces the model’s performance at the edge. The difficulty and cost of on-device deployment while significantly improving accuracy to meet real-time requirements.

Generalization experiment

To evaluate the generalization ability of the improved YOLOv8-seg model, this paper compares the improved method with the current mainstream instance segmentation model on the crack-seg, carparts-seg and coco datasets. The experimental results are shown in Tables 9, 10 and 11.

In Table 9, the improved model achieved an mAP(Box) of 82.3% and an mAP(Mask) of 70.6% on the crack-seg dataset. This is 1.8% higher than the original YOLOv8n-seg mAP(Box) and 4.2% higher than the mAP(Mask). Additionally, it is 0.7% higher than the latest YOLOv9c-seg mAP(Box) and 4.5% higher than the mAP(Mask). These improvements greatly enhance the accuracy of detection and segmentation. The improved model has 15% fewer parameters and 12% less model size than YOLOv5n-seg, with an FPS of 1143.9 and an inference time of only 0.87ms, less than other models. Overall, the method in this paper achieves excellent detection and segmentation accuracy with fewer parameters.

Table 10 shows that the improved model achieves an mAP(Box) of 66.4% and an mAP(Mask) of 67.7% on the carparts-seg dataset. These values are 1.6% and 1.2% higher than those of the original YOLOv8n-seg, respectively. Although the accuracy is slightly lower than that of the latest YOLOv9c-seg algorithm, the improved model size is 3.7 M, the parameters are 1.7 M, the FPS is 1144.7, and the inference time is 0.87ms. The overall performance is better than other mainstream algorithms.

It can be seen from Table 11 that the mAP (Box) and mAP (Mask) of the improved model on the coco dataset are 46.4% and 43.7% respectively, higher than YOLOv5n seg and YOLOv8n seg. Although the accuracy of the improved model is lower than YOLOv5s seg, YOLOv8s seg, and YOLOv9c seg, it is better in model size, parameters, FLOPs, reasoning time, and FPS performance, and is more suitable for deployment on edge computing devices.

Discussion

It has been proven that instance segmentation algorithms based on deep learning can effectively achieve high accuracy in detection and segmentation in various tasks, making it a popular choice for machine learning researchers. However, the complexity and computational requirements of most deep neural networks make it challenging to deploy in resource-constrained real-world industrial applications. In view of this, it is critical to consider using deep neural network models with fewer parameters, which may not have high accuracy but are more suitable for industrial deployment. Although more complex neural networks still have the potential to improve accuracy, it is necessary to ensure a balance between real-time performance and accuracy in industrial chip testing and sorting production tasks.

As shown in Fig. 14, this paper uses receptive field64 visualization to verify the effect of the improved model. The larger the proportion of the green part, the stronger the effective receptive field and the more global information captured. Therefore, the proposed improved model is better than the original YOLOv8n-seg. The improved model has obvious advantages over some current mainstream instance segmentation and lightweight methods. It performs well in evaluation indicators such as mAP, number of parameters, FLOPs, FPS, and model size. Despite occasional misdetections in dim and blurry conditions, the algorithm achieves an impressive mAP of 89.8%, with a lightweight model size of 3.7 M, 1.7 M parameters, and 8.2G FLOPs. It also delivers a remarkable inference speed of 1399.3 frames per second with a processing time of just 0.72ms. These results demonstrate the algorithm’s strong capability for real-time detection and efficient segmentation of industrial chip pads.

Conclusion

This paper proposes a lightweight chip pad detection and segmentation network based on improved YOLOv8-seg, which solves the problem of chip pad alignment detection in the actual industrial fully automatic chip test sorting machine, and its performance is better than the current mainstream one-stage instance segmentation method. In the proposed method, first, a new generation of lightweight StarNet backbone network is used to improve the detection and segmentation accuracy of the model and reduce the model calculation amount. Second, C2f-Star Block is constructed in the neck network to enhance the feature extraction performance of small targets of chip pads, while further reducing the computational load. Third, a lightweight shared convolutional segmentation head module LSCSH is also proposed, which greatly reduces the number of parameters and computation while maintaining accuracy and segmentation speed. Fourth, in order to improve the accuracy of chip pad detection and segmentation, we propose a CGCAFusion convolutional attention fusion module. Experiments show that the improved network has the advantages of small parameters, low computational load, fast inference time, and high detection and segmentation accuracy, which meets the integrated task of fully automatic chip test sorting in the actual industry. Compared with the existing mainstream models, this method improves the detection and segmentation accuracy while reducing the requirements for platform computing and storage capacity. By deploying the trained network model on the edge computing device, the real-time alignment detection task of chip pads in the fully automatic chip testing and sorting machine is realized. Future work will focus on improving the real-time detection efficiency of chip pads in complex scenes with dim light and unclear color features, and enhancing the production efficiency of fully automatic chip testing and sorting machines.

Data availability

The datasets used and/or analysed during the current study available from the corresponding author on reasonable request.

References

Kim, J. New wafer alignment process using multiple vision method for industrial manufacturing. Electronics 7(3), 39 (2018).

Chen, R., Fennell, B. & Baldwin, D. F. Flip chip self-alignment mechanism and modeling. In Proceedings International Symposium on Advanced Packaging Materials Processes, Properties and Interfaces (Cat. No. 00TH8507), 158–164 (IEEE,. 2000).

Wu, Z. et al. A novel self-feedback intelligent vision measure for fast and accurate alignment in flip-chip packaging. IEEE Trans. Industr. Inf. 16(3), 1776–1787 (2019).

Ren, Z., Fang, F., Yan, N. & Wu, Y. State of the art in defect detection based on machine vision. Int. J. Precis. Eng. Manuf. Green Technol. 9(2), 661–691 (2022).

Liu, W., Yang, X., Yang, X. & Gao, H. A novel industrial chip parameters identification method based on cascaded region segmentation for surface-mount equipment. IEEE Trans. Industr. Electron. 69(5), 5247–5256 (2021).

Wang, F. et al. An improved adaptive genetic algorithm for image segmentation and vision alignment used in microelectronic bonding. IEEE/ASME Trans. Mechatron. 19(3), 916–923 (2013).

Cui, Y. et al. An automatic channel test scheme for multi-chip stacked package with inductively coupled interconnection. In 2023 IEEE Electrical Design of Advanced Packaging and Systems (EDAPS), 1–3 (IEEE, 2023).

Chen, S. H. & Tsai, C. C. SMD LED chips defect detection using a YOLOv3-dense model. Adv. Eng. Inform. 47, 101255 (2021).

Nag, S., Makwana, D., Mittal, S. & Mohan, C. K. WaferSegClassNet-A light-weight network for classification and segmentation of semiconductor wafer defects. Comput. Ind. 142, 103720 (2022).

Gu, W., Bai, S. & Kong, L. A review on 2D instance segmentation based on deep neural networks. Image Vis. Comput. 120, 104401 (2022).

Minaee, S. et al. Image segmentation using deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 44(7), 3523–3542 (2021).

He, K., Gkioxari, G., Dollár, P. & Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, 2961–2969 (2017).

Cai, Z. & Vasconcelos, N. Cascade R-CNN: High quality object detection and instance segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 43(5), 1483–1498 (2019).

Chiu, M. C. & Chen, T. M. Applying data augmentation and mask R-CNN-based instance segmentation method for mixed-type wafer maps defect patterns classification. IEEE Trans. Semicond. Manuf. 34(4), 455–463 (2021).

Wu, H., Gao, W. & Xu, X. Solder joint recognition using mask R-CNN method. IEEE Trans. Compon. Packag. Manuf. Technol. 10(3), 525–530 (2019).

Bolya, D., Zhou, C., Xiao, F. & Lee, Y. J. Yolact: Real-time instance segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 9157–9166 (2019).

Wang, X., Kong, T., Shen, C., Jiang, Y. & Li, L. Solo: Segmenting objects by locations. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XVIII 16, 649–665 (Springer, 2020).

Wang, X., Zhang, R., Kong, T., Li, L. & Shen, C. Solov2: Dynamic and fast instance segmentation. Adv. Neural. Inf. Process. Syst. 33, 17721–17732 (2020).

Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 779–788 (2016).

Ultralytics. https://github.com/ultralytics/yolov5.

Wang, C. Y., Bochkovskiy, A. & Liao, H. Y. M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 7464–7475 (2023).

Zeng, J., Ouyang, H., Liu, M., Leng, L. U. & Fu, X. Multi-scale YOLACT for instance segmentation. J. King Saud Univ. Comput. Inf. Sci. 34(10), 9419–9427 (2022).

Zou, Y. & Zeng, G. Light-weight segmentation network based on SOLOv2 for weld seam feature extraction. Measurement 208, 112492 (2023).

Shinde, P. P., Pai, P. P. & Adiga, S. P. Wafer defect localization and classification using deep learning techniques. IEEE Access 10, 39969–39974 (2022).

Wang, J. et al. YOLO-Xray: A bubble defect detection algorithm for chip X-ray images based on improved YOLOv5. Electronics 12(14), 3060 (2023).

Glučina, M., Anđelić, N., Lorencin, I. & Car, Z. Detection and classification of printed circuit boards using YOLO algorithm. Electronics 12(3), 667 (2023).

Yasir, M. et al. Instance segmentation ship detection based on improved Yolov7 using complex background SAR images. Front. Mar. Sci. 10, 1113669 (2023).

Im Choi, J. & Tian, Q. Visual-saliency-guided channel pruning for deep visual detectors in autonomous driving. In 2023 IEEE Intelligent Vehicles Symposium (IV), 1–6 (IEEE, 2023).

Shang, D., Lv, Z., Gao, Z. & Li, Y. Detection of coal gangue by YOLO deep learning method based on channel pruning. Int. J. Coal Prep. Util., 1–13 (2024).

Ultralytics. https://github.com/ultralytics/ultralytics.

CVHub520. https://github.com/CVHub520/X-AnyLabeling.

Ma, X., Dai, X., Bai, Y., Wang, Y. & Fu, Y. Rewrite the stars. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 5694–5703 (2024).

Tian, Z., Shen, C., Chen, H. & He, T. FCOS: A simple and strong anchor-free object detector. IEEE Trans. Pattern Anal. Mach. Intell. 44(4), 1922–1933 (2020).

Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 7132–7141 (2018).

Hou, Q., Zhou, D. & Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 13713–13722 (2021).

Li, X., Hu, X. & Yang, J. Spatial group-wise enhance: Improving semantic feature learning in convolutional networks. arXiv preprint arXiv:1905.09646 (2019).

Xu, W. & Wan, Y. ELA: Efficient local attention for deep convolutional neural networks. arXiv preprint arXiv:2403.01123 (2024).

Zhu, L., Wang, X., Ke, Z., Zhang, W. & Lau, R. W. Biformer: Vision transformer with bi-level routing attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 10323–10333 (2023).

Woo, S., Park, J., Lee, J. Y. & Kweon, I. S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), 3–19 (2018).

Huang, H., Chen, Z., Zou, Y., Lu, M. & Chen, C. Channel prior convolutional attention for medical image segmentation. arXiv Preprint arXiv:2306.05196 (2023).

Wan, D. et al. Mixed local channel attention for object detection. Eng. Appl. Artif. Intell. 123, 106442 (2023).

Ouyang, D. et al. Efficient multi-scale attention module with cross-spatial learning. In ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 1–5 (IEEE, 2023).

Chen, Z., He, Z. & Lu, Z. M. DEA-Net: Single image dehazing based on detail-enhanced convolution and content-guided attention. IEEE Trans. Image Process. (2024).

Hu, S., Gao, F., Zhou, X., Dong, J. & Du, Q. Hybrid convolutional and attention network for hyperspectral image denoising. IEEE Geosci. Remote Sens. Lett. (2024).

Vaswani, A. Attention is all you need. Adv. Neural Inf. Process. Syst. (2017).

Tian, Z., Shen, C. & Chen, H. Conditional convolutions for instance segmentation. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part I 16, 282–298 (Springer, 2020).

Tian, Z., Shen, C., Wang, X. & Chen, H. Boxinst: High-performance instance segmentation with box annotations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 5443–5452 (2021).

Woo, S. et al. Convnext v2: Co-designing and scaling convnets with masked autoencoders. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 16133–16142 (2023).

Wang, C. Y., Yeh, I. H. & Liao, H. Y. M. Yolov9: Learning what you want to learn using programmable gradient information. arXiv Preprint arXiv:2402.13616 (2024).

Selvaraju, R. R. et al. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, 618–626 (2017).

Han, K. et al. Ghostnet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1580–1589 (2020).

Chen, J. et al. Run, don’t walk: Chasing higher FLOPS for faster neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 12021–12031 (2023).

Qin, D. et al. MobileNetV4-Universal Models for the Mobile Ecosystem. arXiv preprint arXiv:2404.10518 (2024).

Fan, Q., Huang, H., Chen, M., Liu, H. & He, R. Rmt: Retentive networks meet vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 5641–5651 (2024).

Cai, X. et al. Poly kernel inception network for remote sensing detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 27706–27716 (2024).

Wu, L., Lin, H., Gao, Z., Zhao, G. & Li, S. Z. A teacher-free graph knowledge distillation framework with dual self-distillation. IEEE Trans. Knowl. Data Eng. (2024).

Li, C. et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv Preprint arXiv:2209.02976 (2022).

Zhang, J., Chen, Z., Yan, G., Wang, Y. & Hu, B. Faster and Lightweight: An improved YOLOv5 object detector for remote sensing images. Remote Sens. 15(20), 4974 (2023).

Yang, L., Zhang, R. Y., Li, L. & Xie, X. Simam: A simple, parameter-free attention module for convolutional neural networks. In International Conference on Machine Learning, 11863-11874 (PMLR, 2021).

Misra, D., Nalamada, T., Arasanipalai, A. U. & Hou, Q. Rotate to attend: Convolutional triplet attention module. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 3139–3148 (2021).

Li, Y. et al. Large selective kernel network for remote sensing object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 16794–16805 (2023).

Lee, Y. & Park, J. Centermask: Real-time anchor-free instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 13906–13915 (2020).

Lau, K. W., Po, L. M. & Rehman, Y. A. U. Large separable kernel attention: Rethinking the large kernel attention design in cnn. Expert Syst. Appl. 236, 121352 (2024).

Ding, X., Zhang, X., Han, J. & Ding, G. Scaling up your kernels to 31×31: Revisiting large kernel design in CNNs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 11963–11975 (2022).

Acknowledgements

We acknowledge support from Guangxi Innovation Driven Development Project Guike AA21077015, National Natural Science Foundation of China Grants 12162005 and Innovation Project of Guangxi Graduate Education (YCSW2024230).

Author information

Authors and Affiliations

Contributions

Y.Z. and Z.Z. performed the analysis.Z.Z. validated the analysis and drafted the manuscript. Y.Z. reviewed the manuscript. Z.Z. designed the research. Y.T. reviewed the manuscript. C.Z. reviewed the manuscript. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, Z., Zou, Y., Tan, Y. et al. YOLOv8-seg-CP: a lightweight instance segmentation algorithm for chip pad based on improved YOLOv8-seg model. Sci Rep 14, 27716 (2024). https://doi.org/10.1038/s41598-024-78578-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-78578-x

Keywords

This article is cited by

-

Review of solder joint vision inspection for industrial applications

The International Journal of Advanced Manufacturing Technology (2025)