Abstract

Swarm Intelligence-based metaheuristic algorithms are widely applied to global optimization and engineering design problems. However, these algorithms often suffer from two main drawbacks: susceptibility to the local optima in large search space and slow convergence rate. To address these issues, this paper develops a novel cooperative metaheuristic algorithm (CMA), which is inspired by heterosis theory. Firstly, simulating hybrid rice optimization algorithm (HRO) constucted based on heterosis theory, the population is sorted by fitness and divided into three subpopulations, corresponding to the maintainer, restorer, and sterile line in HRO, respectively, which engage in cooperative evolution. Subsequently, in each subpopulation, a novel three-phase local optima avoidance technique-Search-Escape-Synchronize (SES) is introduced. In the search phase, the well-established Particle Swarm Optimization algorithm (PSO) is used for global exploration. During the escape phase, escape energy is dynamically calculated for each agent. If it exceeds a threshold, a large-scale Lévy flight jump is performed; otherwise, PSO continues to conduct the local search. In the synchronize phase, the best solutions from subpopulations are shared through an elite-based strategy, while the classical Ant Colony Optimization algorithm is employed to perform fine-tuned local optimization near the shared optimal solutions. This process accelerates convergence, maintains population diversity, and ensures a balanced transition between global exploration and local exploitation. To validate the effectiveness of CMA, this study evaluates the algorithm using 26 well-known benchmark functions and 5 real-world engineering problems. Experimental results demonstrate that CMA outperforms the 10 state-of-the-art algorithms evaluated in the study, which is a very promising for engineering optimization problem solving.

Similar content being viewed by others

Introduction

In recent years, numerous optimization challenges have emerged across various areas including engineering, medicine, and computer science1. This has brought about a pressing need for effective and reliable optimization algorithms. It is difficult to build mathematical models for complex systems. Even upon the successful creation of these models, they are often hindered by high costs and long solution time2. Recently, algorithms inspired by nature for solving optimization problems have become increasingly popular. As traditional optimization techniques falter against intricate combinatorial and nonlinear obstacles, the demand for innovative solutions has been underscored. Metaheuristic optimization algorithms are extensively used because of the benefits listed below: (i) ease of implementation based on simple concepts, (ii) no need for gradient information, (iii) capability to avoid the local optima, and (iv) flexibility to address diverse optimization tasks without needing specific modifications3. Unlike traditional algorithms, metaheuristics could investigate a vast number of potential solutions, frequently identifying superior solutions with lower computational effort. The recent emphasis on the cost-effectiveness and high efficiency of metaheuristics has significantly boosted their popularity and garnered considerable interest from researchers. Many metaheuristics draw inspiration from natural phenomena, such as animal behavior and physical processes4,5.

Common metaheuristic algorithms (MAs) include the Genetic Algorithm (GA)6 and Particle Swarm Optimization algorithm (PSO)7. Other examples include the Cooperative Co-evolution Algorithm (CCEA)8, Ant Colony Optimization Algorithm (ACO)9, hybrid rice optimization algorithm (HRO)10, the Cheetah Optimizer (CO)11, Hippopotamus optimization algorithm (HOA)12, Mother optimization algorithm (MOA)13, Walrus Optimization Algorithm (WaOA)14, Differential Evolution (DE)15, Butterfly Optimization Algorithm (BOA)16, Grey Wolf Optimizer (GW)17, Simulated Annealing (SA)18, Gravitational Search Algorithm (GSA)19, Aladdin’s Forty Thieves Algorithm (AFTA)20, and Archimedes Optimization Algorithm (AOA)21. These algorithms have been applied in various optimization domains, such as minimizing manufacturing defects, maximizing profits/investment portfolios, minimizing business losses, and maximizing productivity22. Additionally, Agrawal et al.23 and Ezugwu et al.24 have reported over 300 metaheuristic optimization algorithms, including the invention of new algorithms, enhancements of existing ones, and the development of hybrid approaches. The main objective of these algorithms is to identify the global optimum, or the best solution, within the search space. MAs function in two stages: exploration and exploitation25. During the exploration phase, the global optimum is searched throughout the entire search space. In contrast, the exploitation phase involves optimization within previously identified promising subspaces to converge to the optimal solution.

It has been highlighted by Morales-Castañeda et al.26 that most MAs face two main issues: early convergence and getting stuck in the local optima. To address these challenges, various techniques have been proposed by scholars. Additionally, it has been noted by Houssein et al.27 that a lack of smooth transition between exploration and exploitation phases creates a gap between these stages, posing a challenge to maintaining algorithmic balance and achieving the optimal performance. To overcome these shortcomings, hybrid algorithms have been developed. These algorithms combine multiple MAs, making the weaknesses of each base algorithm be complemented by the others, thereby enhancing overall performance28. For instance, Salgotra et al.29 proposed a multi-hybrid algorithm named DHPN, which combines the essential features of the Dwarf Mongoose Algorithm (DMA), Cuckoo Search (CS), Prairie Dog Optimizer (PDO), Honey Badger Algorithm (HBA), GWO, and Naked Mole Rat Algorithm (NMRA). Additionally, they optimized the parameter settings for these algorithms to enhance their performance. Qiao et al.30 developed an advanced PSO called NDWPSO, which employs multiple hybrid strategies to significantly improve the efficiency of solving classical engineering problems. Xu et al.31 introduced an improved Butterfly Optimization Algorithm integrated with Black Widow Optimization (BWO-BOA), successfully tackling the challenges of feature selection in network intrusion detection. PSO was combined with the GA by Xue et al.32 to optimize a set of 26 benchmark functions. A hybrid algorithm (PSOGSA) that integrates PSO and the Gravitational Search Algorithm (GSA) was proposed by Mirjalili et al.33 for solving optimization problems. A crossover mechanism combining Simulated Annealing (SA) with Moth Flame Optimization (MFO) was introduced by Xu et al.34 to enhance the optimization algorithm for feature selection problems.

According to the “No Free Lunch” (NFL) theorem35, no universal optimization algorithm could achieve the optimal results for every problem. While existing hybrid algorithms have somewhat alleviated issues such as early convergence and entrapment in local optima in MAs, they still face challenges like integration difficulties, complex parameter tuning, and the need for improved adaptability and robustness. Therefore, more new hybird techniques are required. HRO, introduced by Ye et al.10, is a population-based metaheuristic inspired by the breeding process of Chinese hybrid rice. In essence, hybrid rice is based on the theory of heterosis. The heterosis theory, which suggests hybrids outperform their parents, has brought about its application in various problems, such as the 0-1 knapsack problem36, feature selection37, and intrusion detection38. Additionally, HRO has shown improved performance when combined with other algorithms. For example, Ye et al.39 merged ACO with HRO to develop a high-dimensional feature selection algorithm. Therefore, drawing inspiration from the co-evolutionary concepts of HRO, a new framewok for combining different MAs based on HRO is investigated in the paper, which is used to address engineering and optimization problems. It is noted that among many MAs, the convergence of PSO and ACO has been demonstrated to a certain extent in theory, and their strong performance has been further validated through experimental results. PSO and ACO are one of the most roubst algorithms in solving practical engineering optimization problems. As a result, the paper chooses to use these two classical algorithms to testify the effectiveness of the proposed hybrid framework.

This paper aims to achieve two primary objectives: (1) to develop a novel Cooperative Metaheuristic Algorithm (CMA) inspired by Heterosis theory, designed to balance exploration and exploitation through cooperative evolution, improving performance in global optimization and engineering problems, and (2) to rigorously evaluate the proposed CMA by conducting comparative experiments, benchmarking it against state-of-the-art algorithms across a wide range of benchmark functions and real-world engineering challenges. Specifically, first, the population is divided into three subpopulations based on the fitness ranking of individuals, categorized as the optimal, suboptimal, and worst subpopulations. These subpopulations play the role of the maintainer, restorer, and sterile line in HRO, respectively. Second, a novel local optima avoidance technique-Search-Escape-Synchronize (SES) is introduced into each subpopulation, SES consists of three key phases. In the search phase, PSO is utilized for rapid global exploration. In the escape phase, the Lévy flight strategy is employed, and the escape energy of each agent is dynamically calculated to determine whether a large-scale jump is necessary to avoid entrapment in local optima. In the synchronize phase, an elite-based strategy is employed to facilitate information exchange between the optimal solutions of subpopulations, while ACO is applied to perform fine-tuned local optimization near these optimal solutions. This promotes information flow, accelerates convergence, maintains population diversity, and ensures a smooth balance and transition between exploration and exploitation. To verify the effectiveness of CMA, the algorithm is evaluated using 26 well-known benchmark functions and 5 practical engineering challenges. Experimental results demonstrate that CMA outperforms 10 state-of-the-art algorithms in terms of convergence speed and solution quality.

The main contributions of the paper are as follows:

-

This study, inspired by heterosis theory, integrates the classical and well-established PSO and ACO, along with Lévy flight and elite-based strategies, to construct the SES, which is then integrated into the hybird ramework originating from HRO. The algorithm balances global exploration and local exploitation through cooperative evolution, enhancing performance in global optimization and engineering design problems.

-

An innovative SES strategy is proposed in this study, which follows a three-phase process. In the search phase, PSO enables rapid global exploration. In the escape phase, escape energy is dynamically calculated, and if it exceeds a threshold, a Lévy flight is performed to avoid the local optima; otherwise, PSO continues to conduct the local search. In the synchronize phase, subpopulations share optimal solutions using elite-based strategy, and ACO refines the search near these solutions, accelerating convergence and maintaining diversity.

-

CMA is evaluated on 26 prominent benchmark functions and 5 real-world engineering challenges. Results indicate that CMA exceeds the performance of 10 advanced algorithms, including PSOBOA, HPSOBOA, PS-BES, BES, and others, in terms of convergence speed and solution quality.

The remaining sections of this paper are organized as follows: “Related work” reviews relevant literature. “Preliminaries” provides an overview of the fundamental algorithms used in the study. “The proposed cooperative metaheuristic algorithm” describes the cooperative metaheuristic algorithm introduced in this paper. In “Experimental result and analysis”, presents the application of the proposed CMA to 26 benchmark functions and 5 real-world engineering design problems, and compares its performance with other metaheuristic optimization algorithms “Conclusions” summarizes the key findings and suggests directions for future research.

Related work

Meta-heuristic algorithm (MA)

MAs are rule-based approaches developed to find the optimal solutions among numerous candidates for specific problems40. In the past twenty years, these algorithms have been widely applied to optimize complex real-world issues across diverse domains such as engineering, economics, health sciences, and finance. Their popularity stems from their simplicity in implementation and their capacity to converge to the global optima rather than local ones, offering greater efficiency compared to traditional algorithms.

MAs might be classified into four primary types: evolutionary algorithms, swarm intelligence algorithms, physics-inspired algorithms, and algorithms based on human behavior. Evolutionary-based algorithms include GA, CCEA, and HRO. Swarm-based algorithms, inspired by the social behaviors of populations such as wolves, insects, birds, and fish, include ACO, PSO, GWO, BOA, and Harris Hawk Optimization Algorithm (HHO)41. GSA, SA, and Collective Predator Algorithm (CPA)42are based on physical, chemical, and mathematical principles, like Atomic Search Optimization Algorithm (ASO)43 and AOA. Human behavior-based algorithms simulate human strategies to solve complex problems, including the Teaching-Learning-Based Optimization Algorithm (TLBO)44, Harmony Search Algorithm (HS)45, and Poor and Rich Optimization Algorithm (PRO)46.

Various MAs have been utilized to tackle optimization challenges. For example, Zhang et al.47 utilized PSO to find the optimal concrete mix. Karimi et al.48 introduced a semi-supervised feature selection algorithm using ACO. Liu et al.49 framed the Multiple Autonomous Underwater Vehicles problem as a dynamic multi-objective optimization challenge and introduced a cooperative coevolutionary algorithm to provide a range of high-quality solutions for decision-makers. Yassami and Ashtari50 introduced a novel hybrid optimization algorithm called the Dynamic Hybrid Optimization Algorithm (DHOA). This algorithm leverages the combined strengths of PSO, HHO, and GA to achieve superior and more precise results in a shorter time frame. Özbay51 proposed an improved chaotic map-based Sea Horse Optimization algorithm for solving global optimization and engineering problems.

Hybrid metaheuristic algorithms

In recent years, hybrid MAs have been widely used in diverse fields such as structural modeling, computer science, global optimization, environmental science, biomedical engineering, wireless sensor networks, engineering design, and energy efficiency50. The primary benefit of hybrid optimization techniques over single algorithms is their ability to closely approximate the global optimum (maximization or minimization )52. For instance, an enhanced ACO combined with a GA was proposed by Chen et al. for feature extraction53. Garg et al.54 combined the GSA with a traditional GA, resulting in a hybrid GSA-GA algorithm for constrained optimization problems. Tam et al.55 introduced a novel hybrid GA-ACO-PSO algorithm to address various engineering design challenges.

CCEA8, an evolution-based metaheuristic, tackles complex optimization problems through a modular design of multiple subpopulations. This approach decomposes a complex problem into several subproblems, which are evolved independently before being coordinated to yield an optimized overall solution. Various CCEA variants have been developed to address different optimization tasks. For example, Zhou et al.56 introduced a multi-strategy competition-cooperation coevolutionary algorithm (MSCOEA), which effectively balances uniformity and convergence in multi-objective optimization by using adaptive random competition and neighborhood crossover. Zhao et al.57 combined CCEA with a hybrid MA, designing a multi-population cooperative coevolution framework with a two-stage orthogonal learning (OL) mechanism to improve the whale optimization algorithm (WOA), termed MCCWOA. Despite their strengths, these improved CCEA algorithms face significant challenges, including complex parameter settings, high tuning difficulty, inconsistent subpopulation convergence rates, and limited global search capability.

PSO, a swarm intelligence-based metaheuristic, excels at solving optimization tasks with various constraints and objectives58. Known for its rapid convergence, strong parallel computing capability, easy parameter adjustment, and simplicity, PSO has drawn considerable research interest. However, PSO often suffers from the local optima and insufficient exploitation. Numerous PSO variants have been proposed to overcome these issues. For example, Liu et al.59 introduced intensity learning PSO to optimize multi-objective problems in cooperative heterogeneous multi-robot systems, guiding particles through intensity learning techniques to enhance convergence. Kwakye et al.60 proposed the particle swarm-guided bald eagle search algorithm (PS-BES), which uses particle swarm velocities to guide the bald eagle search, ensuring a smooth transition from exploration to exploitation and effectively solving global optimization and feature selection problems. However, these algorithms still have limited local refinement capabilities.

ACO, another swarm intelligence-based MA, is renowned for its fine-tuned search capabilities and effectiveness in global optimization and feature selection problems61,62. However, ACO has drawbacks such as slow convergence, complex implementation, high computational cost, and issues balancing exploration and exploitation, often leading to premature convergence to suboptimal solutions and lacking diversity and global search capability. To address these challenges, various ACO variants have been developed. For example, ACO-based algorithms for feature selection (FS) exhibit great potential with regard to their problem-oriented search operators and discrete graph representation63. Liu et al.64 proposed a hybrid BSO-ACO algorithm that combines Brain Storm Optimization (BSO), ACO, and neighborhood search (2-opt, relocation, and exchange) to solve the dynamic vehicle routing problem with time windows. Additionally, ACO has been adapted for global optimization and feature selection, with Zhou et al.65 proposing a random-following strategy to enhance communication among ant search agents, demonstrating strong global optimization performance and high-dimensional feature selection capability. Despite the strengths of ACO-based algorithms, challenges such as slow convergence, difficulty in handling large-scale datasets, lack of robustness considerations, and limited global vision for ants persist.

In summary, the extensive applications and advancements in MAs and their hybrid variants, as explored in the related works, highlight their effectiveness in solving complex optimization problems across diverse domains. These studies, particularly in the development of CCEA and hybrid algorithms, provide a solid foundation for further exploration of more specialized and adaptive algorithms. The limitations identified in the literature, such as premature convergence and insufficient global search capabilities in MAs like PSO and ACO, guide the motivation for studying improved optimization algorithms. In the following section, we build upon these foundations by introducing the HRO, designed to address some of the existing challenges, such as balancing exploration and exploitation while maintaining computational efficiency.

Preliminaries

Hybrid rice optimization algorithm

HRO10 is an advanced Evolutionary-based algorithm known for its robust search capabilities and computational efficiency. During each iteration, the population is ordered based on fitness values. The sorted population \(X = (X_1, ..., X_i, ..., X_n)\) is then divided into three segments: the maintainer group \(\{X_1, X_2, ..., X_a\} \ (a = \lfloor n/3 \rfloor )\), the restorer group \(\{X_{a+1}, X_{a+2}, ..., X_{2a}\}\), and the sterile group \(\{X_{2a+1}, X_{2a+2}, ..., X_n\}\). This algorithm allocates the top third of individuals with the best fitness values to the maintainer group, the bottom third with the lowest fitness values to the sterile group, and the middle third to the restorer group. The relationship of the three-line hybrid rice is shown in Figure 1.

(i) Hybridization: This stage focuses on renewing the sterile line by creating new individuals through the crossbreeding of selected members from both the sterile and maintainer groups. The hybridization is computed according to Eq. (1).

Here, \(X_s^k(t)\) and \(X_m^k(t)\) represent the \(k\)-th genes of randomly selected individuals from the sterile and maintainer lines, respectively. \(r_1\) is a random variable ranging from 0 to 1.

(ii) Selfing: Individuals in the restorer line move towards the global optimum through self-crossing, as calculated by Eq. (2).

Where \(x_i^k(t)\) and \(x_j^k(t)\) represent the \(k\)-th genes of the \(i\)-th and \(j\)-th individuals in the restorer line, respectively. \(x_{\text {best}}^k\) represents the \(k\)-th gene of the globally best solution, and \(r_2\) is a random variable within the range [0, 1].

In the stages mentioned above, updating \(X_i(t + 1)\) involves comparing the fitness values of \({\hat{X}}_i(t)\) and \(X_i(t)\), and it is computed using Eq. (3).

In HRO, the number of self-crossings (SC) tracks how frequently an individual in the restorer line remains unchanged. Once the number of SC reaches the threshold (\(SC_{\text {max}}\)), the selfing phase transitions to a renewal phase for that individual.

(iii) Renewal: The genetic information of the individual in the restorer line is refreshed, and the SC count is reset to zero, as computed by Eq. (4).

Here, \(R_{\text {max}}^k\) and \(R_{\text {min}}^k\) represent the upper and lower bounds of the \(k\)-th dimension, and \(r_3\) is a random variable ranging from 0 to 1.

Cooperative co-evolutionary algorithm

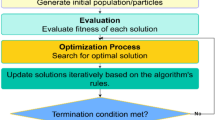

The evolution of life on Earth has been a lengthy process, moving from simple to complex forms and from lower to higher levels. Darwin’s theory of evolution highlights natural selection and survival of the fittest but mainly considers competition within a population, ignoring interspecies interactions. In real ecosystems, organisms engage in various relationships, such as cooperation, competition, predation, and parasitism. To address these complex interactions, the concept of coevolution emerged. Ehrlich and Rave first introduced the term coevolution in 1964 to describe the mutual evolution of plants and herbivorous insects8. Jazen later defined it more precisely: the evolution of traits in one species in response to traits of another species, and vice versa. This definition requires specificity and reciprocity, indicating that any evolutionary change in one trait is driven by another, with both traits evolving. Coevolution emphasizes the interconnectedness of individuals, where changes in one affect others. Throughout evolutionary history, organisms have coevolved through mutual adaptation and interaction. Coevolutionary algorithms, inspired by biological coevolution theory, involve the simultaneous evolution of several interdependent populations. The basic framework of coevolutionary theory is illustrated in Fig. 2.

CCEA is classified into Competitive Coevolutionary Algorithms (CompCEA)66 and Cooperative Coevolutionary Algorithms (CoopCEA)67 based on their relationship characteristics. In CompCEA, an individual’s fitness is determined by the number and strength of opponents it defeats in competition. The interactions between competing individuals mean that progress by one can threaten the survival of others. In CoopCEA, fitness is determined by cooperative performance, using a divide-and-conquer approach to optimize subproblems, which reduces runtime through parallel computation. Cooperative individuals positively influence each other, and progress by one supports the survival of others. The classic steps of coevolutionary algorithms involve problem decomposition, where high-dimensional problems are divided into smaller subproblems; subproblem optimization, where each subproblem is independently optimized; and cooperative integration, where subproblems adapt to each other and coevolve. Coevolutionary algorithms account for interactions between populations and their environment, enhancing adaptability. Compared to general evolutionary algorithms, they offer strong robustness and high adaptability, making them a significant research focus in evolutionary computation.

Particle swarm optimization algorithm

PSO is a MA that draws inspiration from swarm intelligence, mimicking the behavior of bird flocks as they search for resources in an \(m\)-dimensional space. The key features of PSO are the positions and velocities of the particles, which are utilized to find the optimal solution. Initially, the positions and velocities of each potential solution (known as particles) are randomly assigned within the search space.

Within PSO, particle movement is influenced by the global best position and the local best position. The global best position represents the best ___location identified by the entire swarm up to the current point, while the local best position signifies the best ___location discovered by an individual particle. Two significant factors, the social factor and the cognitive factor, assist particles in adjusting their trajectories during the search process. This combination of social and cognitive influences effectively steers the algorithm towards the global target. The mathematical expressions for updating particles’ velocities and positions in the search space are given by Eqs. (5–6).

Here, \(V_{i}^{t+1}\) and \(V_{i}^{t}\) represent the velocity of the \(i\)-th particle in the next and current iterations, respectively, which signifies the rate of change in the position of the particle. The inertia weight \(w\) regulates this rate of change. The coefficients \(c_{1}\) and \(c_{2}\), typically set to constants (equal to 2), represent cognitive and social factors. \(r_{1}\) and \(r_{2}\) are random numbers in the range (0,1). The position vector \(X_{i}^{t+1}\) denotes the ___location of the \(i\)-th particle in the (\(t+1\)) and \(t\) iterations. \(P_{Best}^{i}\) refers to the best position of the \(i\)-th particle in the current iteration, while \(G_{Best}\) is the global best position of the entire swarm. The cognitive component in Eqs. (5)–(6) enables each particle to remember its previous best position when no improvement is found at its current position, prompting the particle to move toward that previously identified the optimal position. The social component continually guides particles to learn from the swarm, steering them toward the best positions found within the community.

Existing literature develops \(w\) as a constant, random, or time-varying value depending on the algorithm’s objectives, such as rapid convergence, low error, or consistent average error. One of the most commonly used inertia weights is the linearly decreasing inertia weight, as shown in Eq. (7).

In this context, \(w(t)\) denotes the inertia weight at iteration \(t\), \(t_{\max }\) represents the maximum number of iterations, and \(w_{\max }\) and \(w_{\min }\) are the upper and lower bounds of the inertia weight set during the algorithm’s initialization. Based on extensive experimentation, researchers have recommended using \(w_{\min } = 0.2\) and \(w_{\max } = 0.9\). This paper also adopts this linearly decreasing inertia weight scheme.

Ant colony optimization algorithm

ACO is a metaheuristic inspired by swarm intelligence, initially proposed by Marco Dorigo in 1992. This algorithm takes inspiration from the pheromone-based communication of the ants during their food search and is mainly applied to combinatorial optimization problems like the Traveling Salesman Problem (TSP) and path planning. In nature, ants leave pheromone trails while foraging, and other ants probabilistically choose paths, preferring those with higher pheromone levels. This behavior eventually brings about the emergence of optimal or near-optimal paths. The ACO algorithm simulates this process by allowing artificial ants to explore the solution space, depositing pheromones, and iteratively identifying the best solution.

Ants update pheromone concentrations based on the quality of the paths they traverse. Pheromone levels on superior paths increase, while those on inferior paths gradually evaporate (decrease). The pheromone update is calculated using Eq. (8).

Here, \(\tau _{ij}(t)\) represents the pheromone level on the path between \(i\) and \(j\) at time \(t\), while \(\rho\) represents the pheromone evaporation rate. \(\Delta \tau _{ij}^{k}(t)\) indicates the quantity of pheromone added by the \(k\)-th ant to the path from \(i\) to \(j\).

Each ant selects its next move based on the pheromone concentration and a heuristic function (typically the inverse of the path length). The selection probability is computed using Eq. (9).

Here, \(\tau _{ij}(t)\) represents the pheromone level on the path from \(i\) to \(j\), and \(\eta _{ij}\) denotes the heuristic function, typically the inverse of the path length. The parameters \(\alpha\) and \(\beta\) control the influence of the pheromone trail and the heuristic information, respectively.

The ACO mimics ant foraging behavior, integrating pheromone updates and path selection strategies to address complex combinatorial optimization problems effectively, and it has widespread applications.

The proposed cooperative metaheuristic algorithm

Metaheuristic algorithms have garnered significant attention for their superior performance in solving optimization tasks68. This study aims to develop an efficient and robust hybrid MA by integrating SES strategy with PSO, ACO, and Lévy flight, inspired by the cooperative evolution concept of the HRO. The population is divided into three subpopulations for cooperative evolution, each executing specific optimization strategies. The specific implementation details are as follows.

Lévy flight

Lévy flight (LF)69, introduced by mathematician Paul Lévy in 1937, is a mathematical model often used in optimization algorithms. It features a random walk where each step follows a probability density function, allowing for large steps alongside smaller ones. This mechanism enables the algorithm to explore the search space more extensively, avoiding entrapment in the local optima and increasing exploration diversity. By incorporating long-distance jumps, LF enhances the algorithm’s ability to escape from suboptimal solutions. The distribution used for selecting step lengths in LF is defined by Eq. (10).

The step size \(t\) is given by Eqs. (11)–(12).

Here, \(\mu\) and \(\vartheta\) are sampled from normal distributions, where \(\mu \sim {\mathcal {N}}(0, \phi _{\mu }^2)\) and \(\vartheta \sim {\mathcal {N}}(0, 1)\). In this paper, \(\beta\) is set to 1.5.

Elite-based strategy

The elite-based strategy plays a crucial role in evolutionary algorithms by retaining the best-performing individual (solution) in each generation and directly passing it to the next, ensuring that high-quality solutions are not lost during genetic operations such as crossover or mutation. This method accelerates convergence and improves solution quality, making it a common practice in optimization algorithms like GA70, PSO71, and ACO72. The fundamental concept is to preserve the individual with the best fitness value (for minimization problems, the lowest) in the current generation, facilitating continuous enhancement of solution quality. This process can be mathematically expressed as by Eq. (13).

Where \(E_{t+1}\) is the elite individual retained for the next generation, \(\text {Fitness}(Sol_i)\) represents the fitness value of the \(i\)-th solution, \(N\) is the total number of individuals in the population.

In each generation, the elite individual with the lowest fitness is retained to avoid losing the optimal solutions and steer population evolution. This method speeds up convergence, enhances population quality, and facilitates finding better global solutions across generations.

Search-escape-synchronize (SES)

The SES strategy draws its inspiration from natural co-evolution and biological escape mechanisms, coupled with physical energy dynamics. SES uses a three-phase process that balances exploration and exploitation in metaheuristic optimization, helping prevent premature convergence and accelerating the overall search. This strategy is rooted in heterosis theory, wherein different subpopulations cooperate and evolve together to enhance search capabilities. SES integrates well-known optimization techniques such as PSO, LF, and ACO to produce a hybrid cooperative framework.

(1) Search phase: In this phase, PSO is utilized for global exploration. Particles update their velocities and positions based on both their individual best positions (\(P_{\text {best}}\)) and the global best position (\(G_{\text {best}}\)), thereby covering a wide solution space. The velocity is updated using Eq.(14).

Where \(\omega\) is the inertia weight, \(c_1, c_2\) are learning coefficients, and \(r_1, r_2\) are random values. Particle positions are updated as Eq. (15).

Where \(x_i^{t+1}\) is the position of particle i at iteration \(t+1\), \(x_i^t\) is its position at iteration t, and \(v_i^{t+1}\) is the updated velocity at iteration \(t+1\).

(2) Escape phase: In the escape phase, dynamic escape energy is introduced to determine whether a particle should perform a large-scale jump using LF. Escape energy \(E_i\) is calculated based on the fitness difference between the current particle and the global best particle using Eq. (16).

Where t denotes the current iteration and \(N_{\text {iter}}\) is the total number of iterations. \(\text {fitness}(x_{\text {best}})\) refers to the fitness value of the global best solution, and \(\text {fitness}(x_i)\) indicates the fitness value of the current particle \(x_i\). If \(E_i\) exceeds a threshold, the particle performs a Lévy flight, with its new position calculated as Eq. (17).

Where \(x_i^{t+1}\) represents the position of particle i at iteration \(t+1\), \(x_i^t\) is the position of particle i at iteration t, \(\lambda\) is the step size, and L(s) follows a Lévy distribution, which governs the step length.

(3) Synchronize phase: In the synchronization phase, subpopulations exchange their best solutions through an elite-based strategy using Eq. (13). In addition, ACO is applied to fine-tune the search near the shared optimal solutions. The pheromone update in ACO is governed by Eq. (18).

Where \(\tau _{ij}^{t+1}\) is the updated pheromone level on path ij at iteration \(t+1\), \(\rho\) is the pheromone evaporation rate, and \(\Delta \tau _{ij}\) represents the amount of pheromone deposited on path ij by the elite solutions.

The SES strategy integrates global exploration and local optimization techniques through a three-phase process: Search, Escape, and Synchronize. Initially, PSO is employed for global exploration to identify potential optimal solutions. Then, dynamic escape energy and Lévy flight are used to avoid the local optima. Finally, the best solutions are shared through an elite-based strategy, and ACO is applied for fine-tuned local optimization, which accelerates convergence and improves solution quality.

Implementation of CMA

The implementation process of CMA begins with the initialization of the population, which consists of N possible solutions, such as agents, particles, or ants, depending on the dimensions of the problem. During initialization, the starting positions of the search agents are randomly assigned according to Eq. (19).

Where \(Sol(i, j)\) represents the position of the \(i\)-th agent in the \(j\)-th dimension, \({Lb}_j\) denotes the lower bound of the \(j\)-th dimension, \({Ub}_j\) denotes the upper bound of the \(j\)-th dimension, and \({rand}()\) is a random number in the range \([0, 1]\).

Next, the fitness is evaluated, and the current best solution is found by calculating the fitness value of each individual using the objective function \(f_{{obj}}\) as shown in Eq. (20).

Where \({Fitness}(i)\) denotes the fitness value of the \(i\)-th individual, \(f_{{obj}}\) represents the objective function used to assess individual quality, and \({Sol}(i, :)\) indicates the position vector of the \(i\)-th individual in the solution space.

After evaluating the fitness of all individuals in the population, the individual with the lowest (or highest, depending on the objective) fitness value is chosen as the current best solution. The best solution is identified using the formula provided in Eq. (21).

Where \(f_{{min}}\) is the minimum fitness value in the current population, and \(I\) is the index of the individual with the best fitness value.

Subsequently, the population is sorted by fitness and divided into the optimal, suboptimal, and worst subpopulations. The SES strategy is then applied within each subpopulation using Eqs. (14)–(18). Specifically, in the search phase, global exploration is performed using Eqs. (14)–(15) to update velocity and position. Then, in the escape phase, escape energy is dynamically calculated using Eq. (16); if it exceeds a threshold (adjustable, set to 1 in this paper), the LF strategy is applied via Eqs. (10)–(12) to escape the local optima, followed by position updates using Eq. (17). Otherwise, PSO continues to conduct the local search. Finally, in the synchronize phase, the elite-based strategy is employed using Eq. (13) to retain the best solutions from each subpopulation and exchange information between subpopulations. Meanwhile, Eq. (18) is employed for fine-tuned local optimization around the best solutions using ACO.

Finally, cooperative evolution involves exchanging information between subpopulations. This critical part of the algorithm uses a fixed exchange frequency to share the global best solutions among subpopulations, enhancing search capabilities. The information exchange formula is given by Eq. (22).

Where \(\text {Sol}\) is the solution matrix, \(start\) and \(end\) denote the index range of the current subpopulation, and \(other\_start\) and \(other\_end\) denote the index range of another subpopulation.

CMA is inspired by the principles of cooperative evolution from HRO. It incorporates a SES strategy, which integrates PSO, ACO, and LF strategy within the HRO structure. The population is divided into three distinct subpopulations. In each subpopulation, different optimization strategies are executed across three phases to promote cooperative evolution. This approach balances exploration and exploitation effectively, enhancing the efficiency of CMA in complex optimization tasks. The detailed development process of CMA is illustrated in Fig. 3 and explained in Algorithm 1.

Experimental result and analysis

To validate the effectiveness and robustness of the proposed algorithm, 26 standard benchmark test functions are employed. Furthermore, to assess its practical applicability, the algorithm is tested on 5 real-world optimization engineering problems. Detailed descriptions of these benchmark test functions, parameter settings, and performance evaluation metrics are provided below. The performance is analyzed and compared with several well-known metaheuristic algorithms.

Experimental setup

All tests were conducted on a machine running Windows 10, with 8 GB RAM and an Intel(R) Core i5-10500 processor (3.10 GHz). MATLAB 2022b was used to program the proposed CMA and other metaheuristic algorithms.

Experiment I: global optimization with a set of 26 benchmark mathematical functions

Benchmark functions

In this study, 26 widely-used benchmark functions73,74,75 in the optimization field are selected to evaluate the performance of the proposed algorithm in a variety of optimization scenarios. The dimension of all standard test questions is set to 30, and the functions are categorized into two main groups:

-

Unimodal Functions: The first 15 benchmark functions (F1–F15) are unimodal, meaning they have a single global optimum. These functions are used to test the convergence speed and accuracy of the algorithms. Since they lack the local optima, they provide a straightforward landscape for assessing the efficiency of the algorithm in reaching the global optimum. Functions such as the Sphere and Rosenbrock functions fall into this category and are commonly used to evaluate how quickly an algorithm can converge in a smooth, simple search space.

-

Multimodal Functions: The remaining functions (F16–F26) are multimodal, containing multiple local optima. These functions are designed to test the ability of the algorithm to avoid premature convergence by escaping local optima and finding the global optimum. Multimodal functions pose a more challenging landscape, where exploration and global search capability are crucial. Examples include the Rastrigin and Ackley functions, which are often used to evaluate the robustness of the algorithm in complex search spaces with many misleading local minima.

By using these 26 benchmark functions, which include both unimodal and multimodal landscapes, we ensure a comprehensive assessment of the algorithm’s performance. The test set includes simple problems that assess convergence efficiency, as well as more complex multimodal problems that challenge the algorithm’s global exploration capabilities. Table 1 provides detailed information on each of the 26 benchmark functions, offering a diverse set of response surfaces for thorough performance evaluation.

The performance evaluation metrics

To evaluate the performance of the proposed CMA, the following evaluation criteria are used.

-

Average: The average fitness value M produced by the algorithm after multiple iterations, computed as shown in Eq. (23).

$$\begin{aligned} \text {Avg} = \frac{\sum _{i=1}^{M} (f_i)}{M}. \end{aligned}$$(23) -

Standard deviation (Std): the variability in the values of the objective function obtained from running the algorithm M times. The standard deviation is calculated by Eq. (24).

$$\begin{aligned} \text {Std} = \sqrt{\frac{1}{M-1} \sum _{i=1}^{M} (f_i - \text {Mean})^2}. \end{aligned}$$(24) -

Best: the lowest fitness value achieved after running the algorithm M times, expressed as Eq. (25).

$$\begin{aligned} \text {Best} = \min _{1 \le i \le M} f_i. \end{aligned}$$(25)

Where \(f_i\) represents the best fitness value achieved in run i.

Comparison with other algorithms and parameter setting

The setup of the hybrid algorithm and its comparison with other algorithms are summarized in Table 2. Common parameters, such as initial settings, were chosen based on previous studies to ensure a fair comparison, while other parameters were optimized experimentally. It’s important to adjust literature-based parameters to achieve reasonable performance. The competing algorithms include PSO76, ACO77, BOA78, GWO79, WOA80, DE81, and BES82. Hybrid MAs such as PSOBOA83 and HPSOBOA47, as well as the PSO variant PS-BES60 with time-varying acceleration coefficients, are also included. The stopping criterion for the objective function evaluation is based on the maximum number of iterations, as detailed in Table 4.

In this study,the choice of PSO30,60, ACO84,85, and DE86,87 as comparison algorithms is based on their widespread recognition and demonstrated effectiveness in solving global optimization problems. While the convergence of these algorithms has been theoretically validated to a certain extent, their strong experimental performance has further solidified their status as foundational metaheuristics. Many newly designed algorithms are indeed variations or hybridizations of these algorithms, making them representative of the state-of-the-art algorithms in the field. Therefore, comparing our algorithm with these well-established algorithms provides a solid benchmark for evaluating the performance and robustness of our algorithm.

Furthermore, the setting of parameters for PSO and ACO within the proposed algorithm was based on well-established default values drawn from extensive literature research. Specifically, the \(\omega\), \(c_1\), and \(c_2\) of PSO60,76,88, along with the \(\rho\), \(\beta\), and \(\alpha\) of ACO, were chosen according to best practices applied in similar optimization problems. These parameter settings have been shown in multiple studies to perform effectively in solving global optimization problems77,85,89. Therefore, in this work, we conducted empirical testing to fine-tune the parameters, ensuring robustness and efficiency in the specific application. The final optimal parameter combinations are presented in Table 2.

Numerical outcomes and statistical evaluation of experiment I

The performance of the proposed algorithm is evaluated against various well-known and recent algorithms, including hybrid MAs. The symbols ‘\(+\)’, ‘\(=\)’, and ‘−’ denote that the proposed algorithm outperforms, matches, or underperforms compared to the other algorithms, respectively.

Table 2 presents a comparison of the average fitness and standard deviation of the proposed algorithm with other existing algorithms across benchmark functions. Notably, the proposed algorithm exhibits the best average fitness in 18 of the 26 benchmark functions (F1-F3, F5, F7-F10, F12, F14, F15, F17, F19, F22-F26), surpassing 10 other algorithms. Specifically, the CMA achieves the best average fitness and standard deviation of 0.0000E+00 on functions F1-F3, F7, F8, F14, F15, F17, F19, F24, and F26, demonstrating high stability and efficiency. CMA ranks second in average fitness for functions F4 and F13, but ranks lower for functions F11, F16, F18, and F20, placing 8th, 5th, 6th, and 7th, respectively.

Table 3 compares the best fitness values of the proposed algorithm with those of other existing algorithms on benchmark functions. The proposed algorithm achieves the best fitness values in 19 out of 26 benchmark functions (F1-F5, F7-F9, F11, F13-F20, F24-F26), surpassing the other 10 algorithms. Specifically, CMA achieves the best fitness value of 0.0000E+00 on 17 functions (F1-F4, F7-F9, F11, F13-F17, F19, F20, F24-F26). CMA ranks 4th, 3rd, 4th, and 3rd for the best fitness values in functions F6, F10, F12, and F23, respectively, and ranks 5th and 7th for functions F21 and F22.

Although CMA performs well on most benchmark functions, its weaker performance on some functions reveals certain limitations. For example, in F11 (unimodal), the algorithm might overly prioritize global exploration, which results in inadequate local exploitation and slower convergence. This imbalance hinders the algorithm from efficiently achieving the global optimum in simpler unimodal problems.

In more complex multimodal functions like F16, F18, and F20, CMA’s global search capability seems restricted. While Lévy flights are used to avoid local optima, they may not be effective enough to handle the numerous local minima in these complex functions. Moreover, in functions like F21, F22, and F23, which have even more complex search spaces filled with many local optima, a lack of diversity and insufficient exploration further hinder CMA’s ability to find the global optimum.

These findings suggest that the balance between exploration and exploitation in CMA still needs improvement. Future work should aim at enhancing global exploration techniques, particularly for multimodal problems, while integrating adaptive strategies that dynamically adjust this balance throughout the optimization process. Such refinements could strengthen CMA’s performance across a broader range of optimization challenges.

Results of the Wilcoxon nonparametric test

To derive meaningful statistical conclusions, the widely known Wilcoxon nonparametric test90 was utilized to evaluate the performance of CMA against other algorithms. Table 5 displays the Wilcoxon signed-rank test outcomes for 26 renowned benchmark functions at an alpha level of 0.05, with the average objective value of each function serving as the sample for testing. In Table 5, “Better”, “Equal”, and “Worse” represent the number of test functions where CMA performs better, equally, or worse compared to the control algorithm. The P-value indicates the level of significance, and when it is below 0.05, it suggests a significant difference between the two algorthms. The symbols “+” and “\(\approx\)” indicate that CMA’s performance is better than or equal to the control algorithm, respectively.

The results presented in Table 5 indicate that CMA frequently achieves a higher number of “Better” outcomes across the 26 benchmark functions. For instance, CMA demonstrates superior performance over PSO in 25 cases, with one “Equal” and zero “Worse” outcomes. This implies that CMA outperforms PSO in 25 functions, achieves equivalent results in one function, and does not perform worse in any of the functions. Similar trends can be observed in comparisons with other algorithms, where CMA consistently has a greater number of “Better” symbols, with all P-values being less than 0.05. These findings statistically affirm that CMA is superior to the other algorithms tested.

Moreover, when analyzing both the P-values and the effect sizes derived from the Wilcoxon signed-rank test, CMA exhibits significant performance advantages over algorithms such as PSO, ACO, BES, and DE. Specifically, the effect sizes are 0.85749, 0.8642, 0.6621, and 0.87416, respectively, all of which fall within the large effect size range (defined as absolute values greater than 0.8). This demonstrates substantial practical performance improvements. Similarly, when compared with BOA, PS-BES, PSOBOA, and HPSOBOA, the effect sizes are 0.57032, 0.69152, 0.72771, and 0.56, respectively, which fall within the moderate effect size range (absolute values between 0.5 and 0.8), indicating a noticeable but smaller advantage. Overall, these results highlight that CMA not only exhibits statistical significance in most comparisons but also delivers meaningful practical performance gains, especially in cases where the effect size is large, underscoring CMA’s superior performance.

Convergence analysis

Figure 4 shows the convergence performance of CMA compared to other algorithms over 500 iterations. The plot displays the best fitness values achieved at each iteration on the vertical axis, with iterations on the horizontal axis. CMA consistently converges faster and more effectively in 20 out of 26 test functions (F1-F4, F8, F9, F11-F21, F24-F26), outperforming other algorithms including PS-BES. CMA demonstrates strong global optimization capabilities, while other algorithms show superior performance in fewer cases. For function F6, CMA effectively escapes local optima through exploration and random jumps, as depicted in Figure 4.

Exploitation analysis

Single-peak benchmark functions (F1–F15), containing only one minimum, are ideal for evaluating the exploitation capabilities of algorithms. Figure 4 shows that for functions F1–F4, F8–F11, and F13–F15, CMA reaches the theoretical optimal solution within about 50 iterations. Table 2 indicates that the average fitness and standard deviation values for these functions are often 0.0000E+00, reflecting CMA’s high precision and stability. These results clearly demonstrate that CMA performs better than most compared algorithms.

Exploration analysis

Benchmark functions F16–F26 encompass multimodal problems. As shown in Fig. 4, CMA achieves the theoretical optimal solution within approximately 50 iterations for functions F1–F4, F8–F11, F13, and F14. For functions F16–F19, F21, F22, and F24–F26, CMA also reaches the theoretical optimal solution within about 50 iterations. Table 3 displays that most algorithms could find the theoretical optimum. Furthermore, Table 2 highlights the superior average fitness and standard deviation of CMA compared to most other algorithms, demonstrating its robust and stable exploration performance.

Analysis of computational expenses

Recent research emphasizes the development of algorithms that address complex optimization problems with efficiency in computational time91. The ’Big O’ notation92 is employed to evaluate CMA’s execution time for a given problem, taking into account factors such as iteration count, problem dimension, and objective function evaluations. The overall time complexity of CMA is determined by several key operations. Initially, solutions are generated for each individual in the population, and parameters for PSO, ACO, and LF are initialized. The complexity of this initialization step is proportional to the population size n and the problem dimensionality \(\text {dim}\), resulting in \(O(n \cdot \text {dim})\). During each iteration, fitness evaluations are performed, with the time complexity depending on the complexity of the objective function f, yielding \(O(n \cdot f)\). The population is then sorted by fitness, requiring \(O(n \log n)\) time. Both global and local searches, including position updates and potential jumps, are carried out primarily through PSO, with a complexity of \(O(n \cdot \text {dim})\).

In the synchronization phase, elite solutions are shared using an elite strategy, and search is conducted by ACO. The time complexity for this phase depends on the number of ants, \(\text {numAnts}\), and the problem dimensionality, giving \(O(\text {numAnts} \cdot \text {dim})\). The overall time complexity of the algorithm is obtained by multiplying the number of iterations \(N_{\text {iter}}\) by the cumulative complexity of all these operations. Thus, the total time complexity can be expressed as:

Here, n represents the population size, \(\text {dim}\) is the problem’s dimensionality, f is the complexity of the objective function, \(N_{\text {iter}}\) is the number of iterations, and \(\text {numAnts}\) is the number of ants used in the search stage of ACO. This formula shows how the execution time of the CMA algorithm scales with respect to these parameters.

Experiment II: CMA for solving engineering optimization problems

In this section, CMA is utilized to identify the optimal solutions for engineering optimization challenges involving multiple equality and inequality constraints. For all design problems, CMA was tested with a population of 30 and up to 500 iterations over 30 runs. It was compared with 10 algorithms, including PSO, ACO, BES, DE, BOA, GWO, WOA, PS-BES, PSOBOA, and HPSOBOA.

Welded beam design problem

In Welded Beam Design Problem93, the goal is to minimize the production cost of a welded beam while satisfying specific constraints. The cost function and the seven associated constraint functions are detailed in Eqs. (27)–(28). Figure 5 illustrates the welded beam problem, which involves four decision variables: weld thickness (\(h\)), length of the welded bar (\(l\)), height of the bar (\(t\)), and thickness of the bar (\(b\)).

Figure 5 depicts the welded beam problem, which includes four decision variables: \(h\) representing weld thickness, \(l\) denoting the length of the welded bar, \(t\) indicating the height of the bar, and \(b\) for the bar’s thickness. The mathematical formulation is described as in Eqs. (27)–(28).

Where,

Where, \(P = 6000\, \text {lb}, \quad L = 14\, \text {in}, \quad E = 30 \times 10^6\,\) \(\text {psi}, \quad G = 12 \times 10^6\, \text {psi}, \tau _{\text {max}} = 13,600\,\) \(\text {psi}, \quad \sigma (x) = 30,000\, \text {psi}, \quad \delta (x) = 0.25\, \text {in}\).

This problem has been approached with several algorithms. The results and statistical analysis are shown in Tables 6 and 7. According to Tables 6, CMA, PS-BES, PSOBOA, and HPSOBOA all achieve near-optimal solutions, with the best cost value being 1.6072. According to Table 7, CMA’s average minimum cost (Mean) is 1.6808, ranking first among the 10 compared algorithms.

Figure 6 illustrates the convergence curve and constraint values generated by CMA, while Fig. 7 compares CMA’s convergence performance with that of other algorithms. The results show that the structure identified by CMA differs significantly from those produced by other algorithms, successfully achieving the lowest fabrication cost.

Speed reducer design problem

Figure 8 presents the schematic of the speed reducer design problem94. The objective is to find the optimal parameters that minimize the weight of the speed reducer. These parameters include face width \(b\), teeth module \(m\), number of pinion teeth \(z\), length of shaft 1 between bearings \(l_1\), length of shaft 2 between bearings \(l_2\), diameter of shaft 1 \(d_1\), and diameter of shaft 2 \(d_2\). Therefore, the variable vector for this problem is \(x = (x_1, x_2, x_3, x_4, x_5, x_6, x_7) = (b, m, z, l_1, l_2, d_1, d_2)\). The speed reducer design problem is represented as shown in Eq. (29).

This problem has been addressed by various evolutionary algorithms. Tables 8 and 9 present the best and the statistical results for the speed reducer design problem obtained by different algorithms. According to Table 8, CMA achieves optimal values of \(x_1 = 3.5000\), \(x_2 = 0.7000\), \(x_3 = 17.0000\), \(x_4 = 7.3050\), \(x_5 = 7.7158\), \(x_6 = 3.3505\), \(x_7 = 5.2867\), and a minimal reducer weight of 2994.4513. As shown in Table 9, CMA’s optimal value is 2994.4826, the median is 2994.7119, the mean is 2998.4532, and the worst value is 3007.4677, all of which rank first among the 10 compared algorithms. It consistently outperforms other algorithms in most metrics, showing its efficiency for engineering design.

Figure 9 displays the convergence curve and constraint values obtained from the CMA, whereas Fig. 10 compares the convergence performance of CMA with that of other algorithms. It indicates that the structure determined by CMA is markedly different from those derived by alternative algorithms, ultimately achieving the lowest fabrication cost.

Tension/compression spring design problem

Figure 11 depicts the spring design problem95, where the main objective is to minimize the total weight of the tension/compression spring. This problem involves three design parameters: \(d\) for wire diameter, \(D\) for the mean coil diameter, and \(N\) for the number of active coils. The objective function and constraints for the pressure vessel problem are detailed in Eqs. (30)–(31).

In the spring design problem, various MAs have been used. CMA was compared with several algorithms, as shown in Table 10, where CMA achieved optimal results with \(d = 0.0511\), \(D = 0.3432\), \(N = 11.4547\), and the lowest cost of 0.012660. Table 11 provides a statistical comparison, indicating that CMA has the lowest average cost of 0.013517 and a standard deviation of 0.0014673, outperforming other algorithms.

Figure 12 displays the convergence curve and constraint values obtained from the CMA, whereas Figure 13 compares the convergence performance of CMA with that of other algorithms. The findings indicate that the structure determined by CMA is markedly different from those derived by alternative algorithms, ultimately achieving the lowest fabrication cost.

Pressure vessel design problem

Figure 14 illustrates the pressure vessel design problem96, aimed at minimizing production costs while adhering to constraints for synthesis, welding, and material expenses. This problem involves four design variables: shell thickness (\(T_s\)), head thickness (\(T_h\)), inner radius (\(R\)), and cylinder length (\(L\)). The cost function and the four constraints are outlined in Eqs. (32)–(33).

.

In the pressure vessel design problem, various metaheuristic optimization algorithms have been explored in the literature. CMA was compared with ten other algorithms. Table 12 presents the optimal cost values obtained by these algorithms. According to Table 12, CMA, DE, PS-BES, PSOBOA, and HPSOBOA all achieved the lowest cost of 6059.7143, with CMA having \(T_s = 12.7283\), \(T_h = 6.5224\), \(R = 42.0984\), and \(L = 176.6366\). Additionally, Table 13 shows the statistical comparison of CMA with other algorithms. CMA’s optimal value is 6059.7143, the median is 6095.9742, the mean is 6346.7459, and the worst value is 7276.59, all ranking first. The standard deviation of 350.5653 ranks second, indicating that CMA performs better than most other algorithms across multiple metrics.

Figure 15 presents the convergence curve and constraint values generated by CMA, while Fig. 16 contrasts CMA’s convergence performance with that of other algorithms. The results suggest that the structure identified by CMA significantly differs from those produced by other algorithms, resulting in the lowest fabrication cost.

Three-bar truss design problem

Figure 17 presents the schematic diagram of the three-bar truss design problem97, which seeks to minimize volume while adhering to stress constraints on each member of the truss. This problem, defined by the variable vector \(x = (A_1, A_2)\), can be expressed as Eq. (34).

Table 14 shows the optimal results for the three-bar truss design problem using various algorithms. CMA achieves near-optimal solutions, with \(A_1 = 0.7888\) and \(A_2 = 0.4078\), and it attains the best cost of 263.8957 among all compared algorithms. Table 15 provides a detailed statistical comparison, where CMA’s optimal value is 263.8957, the median is 263.8957, the mean is 263.8958, and the worst value is 263.8959, all ranking first.

Figure 18 presents the convergence curve and constraint values provided by CMA, while Fig. 19 compares the convergence performance of CMA with other algorithms. The results indicate that the structure identified by CMA differs significantly from those obtained by other algorithms, and it successfully achieves the lowest fabrication cost.

All in all, experimental results on five real-world engineering optimization problems demonstrate that CMA outperforms other algorithms, confirming its effectiveness and competitiveness in engineering design optimization problems.

Conclusions

In this paper, an innovative CMA, inspired by the heterosis theory, is introduced to tackle global optimization and complex engineering design problems. The CMA is built upon the theory of heterosis, specifically, it has taken advantage of the cooperative evolution concept of HRO, dividing the population into three distinct subgroups according to fitness levels for collaborative evolution. Moreover, a three-phase SES strategy is developed for each subgroup. In the search phase, the widely-used PSO is utilized for global exploration. In the escape phase, escape energy is dynamically calculated, and if it exceeds a threshold, Lévy flights are employed for jumping out the local optima. Otherwise, PSO continues to focus on local search. During the synchronization phase, an elite-based strategy preserves the best solution from each subgroup in every iteration, while optimal solution information is exchanged among subgroups. Meanwhile, ACO, with provable convergence, is introduced to refine the local search near the optimal solution, ensuring thorough exploration and enhancing both population stability and diversity. This algorithm fosters a smooth balance between exploration and exploitation. The effectiveness of CMA is demonstrated through rigorous testing on 26 standard benchmark functions, outperforming ten advanced MAs. Furthermore, CMA exhibited strong performance in five real-world engineering design problems, including welded beam design, speed reducer design, tension/compression spring design, three-bar truss design, and pressure vessel design.

By leveraging the heterosis theory, this paper not only demonstrates the effectiveness of CMA in solving complex optimization problems but also highlights the broader significance of this theory in the design of hybrid optimization algorithms. Therefore, we will further explore the integration of CMA with other algorithms in the future, such as combining it with the Whale Optimization Algorithm (WOA). WOA’s spiral and encircling mechanisms will be employed to enhance CMA’s ability to escape the local optima and to provide more diverse exploration of the solution space. Additionally, we will develop a binary version of CMA for high-dimensional feature selection and apply CMA for deep neural networks, further enhancing its performance and adaptability in complex optimization tasks.

Data availability

The datasets used and/or analyzed during the current study are publicly available and can be accessed without any restrictions. If needed, they can also be obtained by contacting the corresponding author.

References

Abualigah, L., Diabat, A., Mirjalili, S., Abd Elaziz, M. & Gandomi, A. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 376, 113609 (2021).

Altunbey Özbay, F. & Alatas, B. Review of social-based artificial intelligence optimization algorithms for social network analysis. Int. J. Pure Appl. Sci. 1, 33–52 (2015).

Altunbey Özbay, F. & Özbay, E. Performance analysis of seagull optimization algorithm for constrained engineering design problems. J. Eng. Sci. Adiyaman Univ. 15, 469–485 (2021).

Abualigah, L., Elaziz, M., Sumari, P., Geem, Z. & Gandomi, A. Reptile search algorithm (rsa): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 191, 116158. https://doi.org/10.1016/j.eswa.2021.116158 (2022).

Ting, T., Yang, X., Cheng, S. & Huang, K. Hybrid metaheuristic algorithms: Past, present, and future. Stud. Comput. Intell. 585, 1–20 (2015).

Acampora, G., Chiatto, A. & Vitiello, A. Genetic algorithms as classical optimizer for the quantum approximate optimization algorithm. Appl. Soft Comput. 142, 110296 (2023).

Yang, Q. et al. Random contrastive interaction for particle swarm optimization in high-dimensional environment. IEEE Transactions on Evolutionary Computation (2023).

Zhou, X. et al. Multi-strategy competitive-cooperative co-evolutionary algorithm and its application. Inf. Sci. 635, 328–344 (2023).

Yang, Q. et al. Random contrastive interaction for particle swarm optimization in high-dimensional environment. IEEE Transactions on Evolutionary Computation (2023).

Ye, Z., Ma, L. & Chen, H. A hybrid rice optimization algorithm. In 2016 11th International Conference on Computer Science & Education (ICCSE). 169–174 (IEEE, 2016).

Akbari, M. A., Zare, M., Azizipanah-Abarghooee, R., Mirjalili, S. & Deriche, M. The cheetah optimizer: A nature-inspired metaheuristic algorithm for large-scale optimization problems. Sci. Rep. 12, 10953 (2022).

Amiri, M. H., Mehrabi Hashjin, N., Montazeri, M., Mirjalili, S. & Khodadadi, N. Hippopotamus optimization algorithm: A novel nature-inspired optimization algorithm. Sci. Rep. 14, 5032 (2024).

Matoušová, I., Trojovský, P., Dehghani, M., Trojovská, E. & Kostra, J. Mother optimization algorithm: A new human-based metaheuristic approach for solving engineering optimization. Sci. Rep. 13, 10312 (2023).

Trojovský, P. & Dehghani, M. A new bio-inspired metaheuristic algorithm for solving optimization problems based on walruses behavior. Sci. Rep. 13, 8775 (2023).

Li, C., Sun, G., Deng, L., Qiao, L. & Yang, G. A population state evaluation-based improvement framework for differential evolution. Inf. Sci. 629, 15–38 (2023).

Mazaheri, H., Goli, S. & Nourollah, A. Path planning in three-dimensional space based on butterfly optimization algorithm. Sci. Rep. 14, 2332 (2024).

Aguila-Leon, J., Vargas-Salgado, C., Chiñas-Palacios, C. & Díaz-Bello, D. Solar photovoltaic maximum power point tracking controller optimization using grey wolf optimizer: A performance comparison between bio-inspired and traditional algorithms. Expert Syst. Appl. 211, 118700 (2023).

Liu, Y. et al. Simulated annealing-based dynamic step shuffled frog leaping algorithm: Optimal performance design and feature selection. Neurocomputing 503, 325–362 (2022).

Wang, M., Wan, Y., Ye, Z., Gao, X. & Lai, X. A band selection method for airborne hyperspectral image based on chaotic binary coded gravitational search algorithm. Neurocomputing 273, 57–67 (2018).

Braik, M., Ryalat, M. & Al-Zoubi, H. A novel meta-heuristic algorithm for solving numerical optimization problems: Ali baba and the forty thieves. Neural Comput. Appl. 34, 409–455 (2022).

Hashim, F., Hussain, K., Houssein, E., Mabrouk, M. & Al-Atabany, W. Archimedes optimization algorithm: A new metaheuristic algorithm for solving optimization problems. Appl. Intell. 51, 1531–1551 (2021).

Alonso, G., del Valle, E. & Ramirez, J. Optimization methods. Desalin. Nucl. Power Plants 1, 1–20 (2020).

Arora, J., Agrawal, U., Tiwari, P., Gupta, D. & Khanna, A. Ensemble Feature Selection Method Based on Recently Developed Nature-inspired Algorithms. Vol. 1087. 451–467 (Springer Singapore, 2020).

Ezugwu, A. et al. Metaheuristics: A comprehensive overview and classification along with bibliometric analysis. Artif. Intell. Rev. 54, 4237–4316 (2021).

Hussain, K., Salleh, M., Cheng, S. & Shi, Y. On the exploration and exploitation in popular swarm-based metaheuristic algorithms. Neural Comput. Appl. 31, 6865–6889 (2019).

Morales-Castañeda, B., Zaldívar, D., Cuevas, E., Fausto, F. & Rodríguez, A. A better balance in metaheuristic algorithms: Does it exist?. Swarm Evol. Comput. 54, 100671 (2020).

Houssein, E., Hassan, M., Kamel, S., Hussain, K. & Hashim, F. Modified lévy flight distribution algorithm for global optimization and parameters estimation of modified three-diode photovoltaic model. Appl. Intell. 53, 11799–11819 (2023).

Osei-kwakye, J., Han, F., Amponsah, A., Ling, Q.-H. & Abeo, T. A diversity enhanced hybrid particle swarm optimization and crow search algorithm for feature selection. Appl. Intell. 1, 119017 (2023).

Salgotra, R., Lamba, A. K., Talwar, D., Gulati, D. & Gandomi, A. H. A hybrid swarm intelligence algorithm for region-based image fusion. Sci. Rep. 14, 13723 (2024).

Qiao, J. et al. A hybrid particle swarm optimization algorithm for solving engineering problem. Sci. Rep. 14, 8357 (2024).

Xu, H., Lu, Y. & Guo, Q. Application of improved butterfly optimization algorithm combined with black widow optimization in feature selection of network intrusion detection. Electronics 11, 3531 (2022).

Xue, Y., Aouari, A., Mansour, R. & Su, S. A hybrid algorithm based on PSO and GA for feature selection. J. Cyber Secur. 3, 117–124 (2021).

Mirjalili, S. & Hashim, S. A new hybrid psogsa algorithm for function optimization. In Proceedings of the 2010 International Conference on Computer and Information Application (ICCIA 2010). 374–377 (Tianjin, 2010).

Xu, Y. et al. Mfeature: Towards high performance evolutionary tools for feature selection. Expert Syst. Appl. 186, 115655 (2021).

Wolpert, D. & Macready, W. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1, 67–82 (1997).

Shu, Z. et al. A modified hybrid rice optimization algorithm for solving 0–1 knapsack problem. Appl. Intell. 52, 5751–5769 (2022).

Ye, Z. et al. A band selection approach for hyperspectral image based on a modified hybrid rice optimization algorithm. Symmetry 14, 1293 (2022).

Ye, Z., Luo, J., Zhou, W., Wang, M. & He, Q. An ensemble framework with improved hybrid breeding optimization-based feature selection for intrusion detection. Future Generation Computer Systems (2023).

Ye, Z. et al. High-dimensional feature selection based on improved binary ant colony optimization combined with hybrid rice optimization algorithm. Int. J. Intell. Syst. 2023, 1444938 (2023).

Subrata, P. 100 Optimization techniques (Google Books, 2023).

Heidari, A. et al. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 97, 849–872 (2019).

Tu, J., Chen, H., Wang, M. & Gandomi, A. The colony predation algorithm. J. Bionic Eng. 18, 784–793 (2021).

Zhao, W., Wang, L. & Zhang, Z. Atom search optimization and its application to solve a hydrogeologic parameter estimation problem. Knowl. Based Syst. 163, 283–311 (2019).

Yu, X. & Zhang, W. A teaching-learning-based optimization algorithm with reinforcement learning to address wind farm layout optimization problem. Appl. Soft Comput. 151, 111135 (2024).

Qin, F., Zain, A. & Zhou, K.-Q. Harmony search algorithm and related variants: A systematic review. Swarm Evol. Comput. 74, 101126 (2022).

Samareh Moosavi, S. & Bardsiri, V. Poor and rich optimization algorithm: A new human-based and multi populations algorithm. Eng. Appl. Artif. Intell. 86, 103224 (2019).

Zhang, J., Huang, Y., Wang, Y. & Ma, G. Multi-objective optimization of concrete mixture proportions using machine learning and metaheuristic algorithms. Constr. Build. Mater. 253, 119208 (2020).

Karimi, F., Dowlatshahi, M. & Hashemi, A. Semiaco: A semi-supervised feature selection based on ant colony optimization. Expert Syst. Appl. 214, 119130 (2023).

Liu, X., Fang, Y., Zhan, Z. & Zhang, J. A cooperative evolutionary computation algorithm for dynamic multiobjective multi-auv path planning. IEEE Trans. Ind. Inform. 20, 669–680 (2023).

Yassami, M. & Ashtari, P. A novel hybrid optimization algorithm: Dynamic hybrid optimization algorithm. Multimed. Tools Appl. 82, 435–454 (2023).

Özbay, F. A modified seahorse optimization algorithm based on chaotic maps for solving global optimization and engineering problems. Eng. Sci. Technol. Int. J. 41, 101408 (2023).

Elsayad, A., Nassef, A. & Al-Dhaifallah, M. Bayesian optimization of multiclass svm for efficient diagnosis of erythemato-squamous diseases. Biomed. Signal Process. Control 71, 103223 (2022).

Chen, X. & Dai, Y. Research on an improved ant colony algorithm fusion with genetic algorithm for route planning. In Proceedings of the 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference. 1–6 (Chongqing, 2020).

Garg, H. A hybrid GSA-GA algorithm for constrained optimization problems. Inf. Sci. 478, 499–523 (2019).

Tam, J., Ong, Z., Ismail, Z., Ang, B. & Khoo, S. A new hybrid GA-ACO-PSO algorithm for solving various engineering design problems. Int. J. Comput. Math. 96, 883–919 (2018).

Zhou, X., Cai, X., Zhang, H., Ma, Y. & Liu, Z. Multi-strategy competitive-cooperative co-evolutionary algorithm and its application. Inf. Sci. 635, 328–344 (2023).

Zhao, F. et al. A multipopulation cooperative coevolutionary whale optimization algorithm with a two-stage orthogonal learning mechanism. Knowl. Based Syst. 246, 108664 (2022).

Akhand, M., Rahman, M. & Siddique, N. Advances on Particle Swarm Optimization in Solving Discrete Optimization Problems. 69–92 (Springer, 2023).

Liu, X., Fang, Y., Zhan, Z. & Zhang, J. Strength learning particle swarm optimization for multiobjective multirobot task scheduling. IEEE Trans. Syst. Man Cybern. Syst. 53, 4052–4063 (2023).

Kwakye, B. et al. Particle guided metaheuristic algorithm for global optimization and feature selection problems. Expert Syst. Appl. 248, 123362 (2024).

Liu, J. et al. Multisurrogate-assisted ant colony optimization for expensive optimization problems with continuous and categorical variables. IEEE Trans. Cybern. 52, 11348–11361 (2021).

Wang, Z., Gao, S., Zhou, M., Li, Y. & Tang, K. Information-theory-based nondominated sorting ant colony optimization for multiobjective feature selection in classification. IEEE Trans. Cybern. 53, 5276–5289 (2022).

Nemati, S., Basiri, M., Ghasem-Aghaee, N. & Aghdam, M. A novel ACO-GA hybrid algorithm for feature selection in protein function prediction. Expert Syst. Appl. 36, 12086–12094 (2009).

Liu, M. et al. A hybrid BSO-ACO for dynamic vehicle routing problem on real-world road networks. IEEE Access 10, 118302–118312 (2022).

Zhou, X. et al. Random following ant colony optimization: Continuous and binary variants for global optimization and feature selection. Appl. Soft Comput. 144, 110513 (2023).

Miikkulainen, R. & Stanley, K. Competitive coevolution through evolutionary complexification. J. Artif. Intell. Res. 21, 63–100 (2004).

Tan, K., Yang, Y. & Goh, C. A distributed cooperative coevolutionary algorithm for multiobjective optimization. IEEE Trans. Evol. Comput. 10, 527–549 (2006).

Raj, K. H., Sharma, R. S., Mishra, G. S., Dua, A. & Patvardhan, C. An evolutionary computational technique for constrained optimisation in engineering design. J. Inst. Eng. (India) Part MC Mech. Eng. Div. 86, 121–128 (2005).

Charin, C., Ishak, D., Mohd Zainuri, M., Ismail, B. & Mohd Jamil, M. A hybrid of bio-inspired algorithm based on levy flight and particle swarm optimizations for photovoltaic system under partial shading conditions. Sol. Energy 217, 364–377 (2021).

Contaldi, C., Vafaee, F. & Nelson, P. C. Bayesian network hybrid learning using an elite-guided genetic algorithm. Artif. Intell. Rev. 52, 245–272 (2019).

Moazen, H., Molaei, S., Farzinvash, L. & Sabaei, M. PSO-ELPM: PSO with elite learning, enhanced parameter updating, and exponential mutation operator. Inf. Sci. 628, 70–91 (2023).

Elgamal, Z. M. et al. Improved equilibrium optimization algorithm using elite opposition-based learning and new local search strategy for feature selection in medical datasets. Computation 9, 68 (2021).

Jamil, M. & Yang, X.-S. A literature survey of benchmark functions for global optimisation problems. Int. J. Math. Model. Numer. Optim. 4, 150–194 (2013).

Zhan, Z.-H., Shi, L., Tan, K. C. & Zhang, J. A survey on evolutionary computation for complex continuous optimization. Artif. Intell. Rev. 55, 59–110 (2022).

Kwakye, B. D., Li, Y., Mohamed, H. H., Baidoo, E. & Asenso, T. Q. Particle guided metaheuristic algorithm for global optimization and feature selection problems. Exp. Syst. Appl. 248, 123362 (2024).

Mazhoud, I., Hadj-Hamou, K., Bigeon, J. & Joyeux, P. Particle swarm optimization for solving engineering problems: a new constraint-handling mechanism. Eng. Appl. Artif. Intell. 26, 1263–1273 (2013).

Liao, T., Socha, K., de Oca, M. A. M., Stützle, T. & Dorigo, M. Ant colony optimization for mixed-variable optimization problems. IEEE Trans. Evolut. Comput. 18, 503–518 (2013).

Arora, S. & Singh, S. Butterfly optimization algorithm: A novel approach for global optimization. Soft Comput. 23, 715–734 (2019).