Abstract

The vanishing point (VP) is particularly important road information, which provides an important judgment criterion for the autonomous driving system. Existing vanishing point detection methods lack speed and accuracy when dealing with real road environments. This paper proposes a fast vanishing point detection method based on row space features. By analyzing the row space features, clustering candidates for similar vanishing points in the row space are performed, and then motion vectors are screened for the vanishing points in the candidate lines. The experimental results show that the average error of the normalized Euclidean distance is 0.0023716 in driving scenes under various lighting conditions. The unique candidate row space greatly reduces the amount of calculation, making the real-time FPS up to 86. It can be concluded that the fast vanishing point detection proposed in this paper would be suitable for high-speed driving scenarios.

Similar content being viewed by others

Introduction

In a 3D scene, a set of parallel lines is perspective projected in the 2D image plane of the camera and forms a set of lines in the image plane that intersect at a point, which is called the vanishing point (VP)1. The greater the number of parallel lines in the 3D space, the greater the number of vanishing points (VPs) in the corresponding image. VPs can provide a large amount of information existing in the image, so it is involved in many application fields, such as camera localization2, camera distortion correction3, viewpoint ___location recognition4, Lane Departure Warning (LDW)5, and Simultaneous Localization and Mapping (SLAM)6. Vanishing points are also used in autonomous driving, and among the many VPs presented in each frame of a driving video, the VPs that require the most attention are the intersections of lanes or road boundaries. VPs provide important information about ADAS and autonomous vehicle driving scenarios. Therefore, the detection of VPs has always been an indispensable factor in autonomous driving. For example, self-driving cars can provide lane departure warnings by identifying drivable areas based on the VPs ___location. Then, the instantaneous moving direction of the vehicle is judged to assist in determining the position of the lane line. Vanishing point detection techniques for clearly marked structured roads have been widely explored. But vanishing points on unstructured roads are still not marked, which also proves to be a challenging problem7.

Existing road VPs detection methods are mainly divided into two categories, traditional edge-based methods, and deep learning-based methods. Traditional edge-based methods in structured and unstructured road VPs detection methods mainly rely on texture features existing in images, with two disadvantages: (1) The detection of edge features is easily disturbed by external conditions, such as uneven illumination, image resolution, and other factors, which can lead to the low accuracy and unstable in many road conditions. (2) The traditional texture detection methods are generally based on a voting mechanism. Due to the increase in the amount of calculation, it cannot meet the real-time conditions required by automatic driving. There have been many strategies using deep learning (DL) to detect VPs. Convolutional Neural Network (CNN) has achieved good results in image classification, image recognition and so on. CNN was used to train the VPs detector in Ref.8,9,10. Regressive ResNet-34 was also used as a vanishing point detector in Ref.11. A combination of features from a modified HrNet12, plus heatmap regression was applied for VPs detection. Most methods based on deep learning only focus on structured roads, which can be used to detect VPs in restricted driving situations. Furthermore, general supervised learning methods are data-driven learning methods, and large training datasets with precise labels do not exist in real-world autonomous driving scenarios. It can be seen from Ref.8,9,10,54,55 that it is still difficult to achieve VPs detection suitable for real-world complex driving scenarios due to limited training datasets.

The motion-based detection methods13,14,15,16, this method usually needs to find line segment features from road information, use the intersection of line segments as candidate VPs, and then usually use the threshold method or voting method to filter. There are some shortcomings in these processes, such as the limitation of VPs voting, and a large amount of computation caused by too many motion vectors. In this paper, we propose a fast VPs detection method based on motion-constrained cluster segmentation under row-space features, which can increase detection accuracy and overcome these limitations. Specifically, image features are first extracted and line segments are screened as potential line segments to generate VPs, filter VPs using optimized similarity clustering in line space features, and then use motion vector-based constraints on the extracted points to estimate the most accurate VPs. The innovations of this study are as follows:

-

(1)

A processing method under row space features is proposed, which can effectively reduce the global motion vector and reduce the amount of computation.

-

(2)

An optimized clustering method is proposed for VPs detection.

-

(3)

A motion vector detection linking the upper and lower frames is proposed.

-

(4)

The proposed vanishing point detection method clusters candidate VPs in the image by line-space features, and then screens out the wrong VPs by upper and lower frame motion detection. High accuracy and extremely fast speed are achieved in real-world driving scenarios.

Related work

A lot of excellent work has been done on VPs detection. The current mainstream detection technology is based on image feature extraction, using edges and lines to find candidate VPs in the image, and then using voting methods to determine. Texture-based detection17,18,19, filtering noise by directional filters or steerable filters, followed by edge detection, such as Gabor filters20, steerable filter banks21. Since the boundaries are somewhat curved, the actual VP cannot be determined, resulting in false detections. Luton et al.22 used the Hough transform to detect line segments and determined VP through the intersection of the synthesized line segments. McLean et al.23 generated gradient directions by clustering line structures in images, which were validated using two grayscale images. Collins et al.25 and Shufelt24 used a unique spatial structure to address false edges that have occurred due to orientation constraints. Rother26 used the idea of constructing the environment to detect the correct VP in order to avoid errors caused by camera parameters. Cantoni et al.27 investigated two methods, by changing the method of line segment detection in images, and by continuous analysis to detect VPs. In particular, deep learning-based methods have recently become very popular for detecting VPs28,29, but most current road datasets have little information about vanishing points. This limits deep learning methods that require a large number of training images.

Existing line segment detection methods can be roughly divided into two categories: (1) Hough transform48,49,50; Hough transform is an ingenious feature extraction technique that transforms the global pattern detection problem in the image ___domain into an efficient peak detection problem in the parameter space. Generally, standard Hough-based line segment detectors first obtain a binary edge map from the input image, use edge operators such as the Canny operator, and then apply Hough transform to the extracted edge map to find all candidate lines, according to a pre-defined gap and length standard, cut it into line segments. (2) Perceptual grouping51,52,53. The perceptual grouping looks at the detection problem from a different perspective. Methods in this class describe line segments as connected regions where sufficiently similar and collinear components exist. The key idea is to generate chains of pixels in a given edge map, extract straight line segments and arcs by traversing these chains and then estimate the area using a validation method. For the first time, a line segment gradient orientation detection is proposed, where a line segment is detected as a rectilinear image region where internal pixels roughly share the same orientation (similarity). This method outperforms the Hough transform in terms of both accuracy and false positives but requires empirical and manual tuning of a range of parameters.

In motion-based vanishing point detection methods13,14,15,16, corner points are usually taken as the start and end points of motion vectors. At this time, the motion vector can be regarded as a line segment in the video frame. Corner detectors are usually used to detect corners in a video frame, then these corners are used as the starting point, and then the second frame is tracked and used as the endpoint. The start and end points are determined, and the motion vector establishes the connection between the two frames. However, the number of computations increases due to the constant repetition of corner detection and tracking. In addition, the detection of corner points is also unstable. Because the interval between two video frames is short, the motion vector formed by the start and end points will not be very long. The main purpose of image segmentation is to label foreground objects and backgrounds. If objects and backgrounds are properly labeled, key edge information can be obtained by retrieving object contours, which is beneficial for locating vanishing lines and vanishing points. After identifying the vanishing point, the image segmentation also marks the regions of the object and background. This is critical for generating correct depth maps. Clustering algorithms are the most common methods for dealing with image segmentation problems32,33,34,35. However, it is not easy to correctly label the object and background areas of any image. The image is reproduced by applying the fuzzy c-means algorithm (FCM)36 to group the entire pixels according to RGB values. If the object and background are not correctly classified, it means that an accurate initial number of clusters has not been determined, which forms the difficulty of image segmentation. Different initial cluster numbers will produce different segmentation results.

The method used in the current paper is based on line segment detection, it performs vanishing point estimation for clustering under line space features, and then uses a motion-based method to eliminate and limit false vanishing points.

Vanishing point detection strategy

Vanishing points exist in the intersection of long parallel lines in the image, and lane lines contain many candidate vanishing points. This paper adopts a special spatial feature and improves the clustering strategy, using the similarity theory, the similarity between vanishing points to identify the actual vanishing points, including three steps.

-

(1)

After the line segment is collected, we delete redundant line segments, including short lines, horizontal lines, vertical lines, etc., to reduce the computational cost.

-

(2)

Using the spatial features after row segmentation, we perform clustering similarity matching on the candidate vanishing points in the space to optimize the identification of the main vanishing points and perform bubble sorting between row vectors, and the sorting is based on the space of each row. The size of the inner vanishing point cluster similarity; to reduce extreme cases, such as the row that happens to be on the row split, we will extract the top two rows of the ranking as candidate rows.

-

(3)

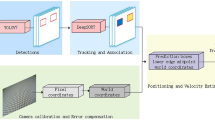

Constrain the motion vectors of the upper and lower frames on the vanishing points in the candidate row and eliminate the noisy candidate points. Figure 1 represents the various stages of the method.

Line detection and corner collection

Image features contain many noisy edges, so detected line segments cannot all be used as feature line segments for estimating vanishing points. It is necessary to filter the parallel line segments on the edge features of the image and delete the redundant line segments. Figure 2 shows the identification of suitable candidate lines. As shown in Fig. 2(a), the Line Segment Detector (LSD) algorithm37 is used to collect the line segments contained in the image. In the image, vertical and horizontal lines usually do not belong to the characteristic line segment of the vanishing point, because the point where their line segments meet is often at the edge of the image, so we remove them. As shown in Fig. 2(b), we deleted redundant line segments, and also deleted the shorter lines generated by the distortion, and kept the longer line segments.

Since vanishing points exist at the intersections of feature line segments in the image, the number of feature line segments determines the computation time to find vanishing points. And reducing computation time is also one of the reasons why we filter redundant line segments that are not related to vanishing points. Pass the filtered feature line segments, preserve their directions, and extend their lengths, and utilize the Shi-Tomasi corner detector30 to find corners efficiently. All collected corners are taken as an initial set, denoted as Ft0 = {Ft0(1), Ft0(2),… Ft0(n),}, where n is the number of corners, as shown in Fig. 3.

Clustering under row partitioning

The task of dividing data into groups can make objects in a cluster similar in a suitable way.38 proposed an idea, transforming the classification knowledge "{Image: Label}" into a pairwise relationship "{(Image A, Image B): Similarity Label}", where similarity represents whether the label of Image A and Image B is the same or not.

Drawing on this idea, the images with the set of corners are clustered, and the task is to filter the vanishing points in each row. This is done by assigning the instance ID of the vanishing point pixel as a unique index to all vanishing point pixels in row space. The indices are integers i, 1 ≤ i ≤ n + 1, where n is the number of vanishing point instances in the scene, add an extra 1 for the best cluster point for the cluster in each row. Create a function f that assigns an index yi = f (xi) to pixel xi, where yi ∈ Z and i is the index of the vanishing point pixel in the image. The synthetic label of all pixels in the image, i.e., Y = { yi }∀ i , should satisfy the relation G. For any two pixels xi, xj, G(xi, xj) ∈ {0, 1} is defined as Eq. (1):

G is a more accurate representation of the actual clustering target. From Eq. (1), G can be directly reconstructed from a given vanishing point. Specifically, if two pixels belong to the same instance, then each output node should not be mapped to a fixed class but should be guided by pairwise similarity. If there are similar pairs, their cluster distribution should be similar and vice versa. The distance between two distributions can be evaluated by pairwise KL-Divergence. Given a pair of pixels xi and xj, their corresponding output distributions are expressed as Xi = f (xi) = [ti,1..ti,n] and Xj = f(xj) = [tj,1..tj,n], where n is the number of vanishing point indices used for marking. The cost of similar pairs is described as Eq. (2):

The cost ζ (xi, xj)+ is symmetric w.r.t. xi, xj, where Xi* and Xj* are alternately assumed to be constant.

If xi, xj come from different pairs, the formula shown in Eq. (3) can be used to describe the output distribution of different instances.

Margin σ was set to 2. A criterion is established to evaluate the G function and its suitability. By comparing the form, ζ (xi, xj) can be described by Eq. (4), where the integer 1 is used to denote a similarity pair.

Some problems occur during instance segmentation. Since the vanishing point of the instance is very sparse in the background, and some vanishing points will just be stuck at the edge of the line space feature, where is the vanishing point pixel on the boundary. Although it still belongs to the vanishing point, it is easy to be ignored. According to the resolution of different pictures, it is horizontally divided to form a row space. The first row is selected from the place where the lane lines start in the image as the bottom, and if the image resolution changes, the rows are divided by the principle of equal distribution of resolution. Figure 4 is divided into 13 rows according to the total number of pixels, and each row is clustered. This change not only reduces the difficulty of each row clustering but also improves the computational efficiency. A basic VP cluster point is formed in each row, and the similarity between each instance and the cluster point is analyzed by selecting different vanishing points in the same row. By comparing the output distribution with the cluster points, they can be divided into similar pairs and dissimilar pairs on this basis.

After clustering filtering for each row, the number of candidate vanishing points in each row is compared by bubble sorting. To solve the problem of candidate vanishing points on the boundary, the first two rows will be selected as candidate rows for the next step of motion vector screening. The first two rows here are the first two rows of space after bubble sorting. Since it is impossible to determine whether the VP of the current frame is on the boundary of the row partition, the space of the first two rows of the ranking is chosen to reduce the error arising from this situation. We use purple circles to mark the cases where there are vanishing points on the boundary in Fig. 4.

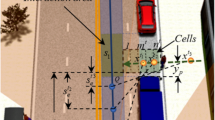

Selection of motion vectors

Our proposed detection method takes a given set of clustered corner points Gt(0) as the starting pixel coordinates of motion vectors bit. Starting from the first frame given at time t0, use the Kanade-Lucas optical flow method31 to track each of the initial sets over successive frames t(0+L) (L = 1, 2, 3, …) point. Points not tracked in the initial set in the current frame t(0+L) are removed from the set Gt(0+L-1). Otherwise, update the continuous tracking points into the tracking set Kt(0+L). The corresponding tracking points in the upper and lower frames, Gt(0+L)[i] and Kt(0+L+1)[i], indicate that their pixel coordinates are Ut (0+L) (xi, yi) and Vt (0+L+1) (xi, yi).

At the same time, the left side of the pixel corresponding to Gt(0+L+1)[i] is represented as Ut(0+L+1)(xi, yi). At this time, the three coordinates are shown in Fig. 5.

Suppose Ut(0+L)(xi, yi) represents the coordinate of the i-th candidate vanishing point after the first frame clustering screening, Vt(0+L+1)(xi, yi) represents the coordinate of the i-th candidate vanishing point tracked by the optical flow method in the second frame, and Ut(0+L+1)(xi, yi) represents the coordinate of the i-th candidate vanishing point after the clustering screening of the second frame.

In Fig. 5, the black line represents the motion vector Z(i) obtained by the optical flow method, and the red line represents the motion vector C(i) obtained by cluster screening, and the angle between the two lines is θ(i).

when the vector C(i) is closer to Z(i), the angle θ(i) is smaller, and it is used as a metric to establish a piecewise function. When the actual angle θ(i) is smaller than our set angle threshold, we can set the corner of the upper and lower frames of C(i) as the vanishing point; otherwise, the index i is removed from the set of upper and lower frames.

By this method, the corner points that are misjudged by clustering can be effectively eliminated, and the number of candidate point sets can be continuously limited, and only two candidate rows are used to screen motion vectors, which greatly improves the performance.

Experiments

In this paper, the widely used datasets are deployed for experiments and analysis, and the experimental results are obtained. Section 1 is an introduction to the experimental setup. Section "Related work" presents the comparison of detection results and the analysis of the proposed method.

Experimental setup

All experiments were configured on a standard 12th Gen Intel(R) Core (TM) i7-12700H 2.70 GHz computer with 16 GB RAM, implemented via python. Because there are very few structured road datasets with vanishing point labels, some public datasets of unstructured roads are added for experiments on environmental changes such as color and background, AVA Landscape39, SIFT flow dataset40, all meet the requirements. The AVA dataset, containing approximately 250,000 images, uses 674 images with Vanishing point tags. the SIFT flow dataset consists of 102,206 frames from 731 videos, mostly from street scenes. Ten videos about streets were randomly selected with a total of 1398 frames. 2047 images are transformed for night scenes using CycleGAN. To simulate the detection of actual road information, we join the Jiqing Expressway dataset41, which contains various road conditions in the expressway. The dataset contains 32 videos, and each video consists of 5393 frames. This dataset annotates the coordinates of the lane lines, but they are not vanishing point coordinates, so this paper will calculate the intersection of the lanes and use it as the vanishing point ground truth.

We refer to the proposed method as LCS for short. In the comparative experiments of the LCS method, 200 corner points (nt0 = 200) are detected and tracked in the initial frame. Whenever the number of detected corners is lower than 40, in order to guarantee the number of corners, an additional 100 corners in a new frame are added to the set Ft(0+L). In the stable motion vector detection step, when the angle between two consecutive frames is less than a threshold, a tracking point is reserved.

To adjust the parameters required for the experiment, including the Gaussian filter s in line segment detection, quantization error specification q, and clustering σ, the most suitable parameters are determined by using the clustering score as a consideration index. The line segment sampling rate is related to the detected line segment quality and the number of short line segments (redundant), and the clustering parameter sigma is related to the actual clustering effect. The Normalized Mutual Information (NMI), Adjusted Rand index (ARI), and adjusted Mutual Information (AMI) are used for reference.

The clustering parameter sigma is σ, which can be set to 1 or 2. α = 1 is used to represent the default parameter set for line segment detection, and α = 2 is used to represent the adjusted parameter set with the least redundant line segments, where s = 0.1, q = 1.4. It can be seen from Table 1 that when using the line segment detection parameter set we adjusted and the clustering parameter σ is 2, the three clustering indicators all reach the best.

Performance evaluation

The LCS method proposed in this paper is compared with 6 existing vanishing point detection methods of different types. They are the edge-based method42, motion-based Road vanishing point detection R-VP43, as well as the classic methods of Kong (Gabor)44 and yang45, the deep learning-based CNN method HrNet12, and the disappearance of MST clustering point detection method Hwang47.

To evaluate the accuracy of the vanishing point detection method, the normalized Euclidean distance proposed in46 is adopted, where the Euclidean distance between the detected vanishing point and the ground truth determined by the diagonal of the input image line lengths is normalized as follows:

where Ud(xi, yi) and Ug(x, y) are the coordinates of the detected vanishing point and ground truth, respectively. DiagLen is the diagonal length of each image. The value of Dnorm depends on whether the position of the vanishing point is close to the position of the ground truth, when it is 0, it proves that the vanishing point coincides with the ground truth.

For each image, calculate the normalized Euclidean distance between the detected ground truth and the vanishing point ___location. When the detection distance does not exceed the threshold, the detected vanishing point is considered as a correct detection. The performance of different methods can be obtained by changing the threshold from 0 to 0.1 as used in45, as shown in Fig. 6.

In Fig. 6, the abscissa represents the normalized distance from the ground truth, and the ordinate represents the percentage of correct predictions. The curves show that our proposed vanishing point detection method is better. Compared with the four vanishing point detection algorithms in the figure, our method exhibits the highest predicted probability in [0, 0.1), and when the normalized Euclidean distance threshold reference is 0.05, the detection accuracy is 4.4% better than the motion-based R-VP method, 5.4% better than the convolution-based HrNet method, 16.3% better than Kong (Gabor) method, and 21.4% better than Yang's method.

The accuracy of the proposed method and the other 4 methods are shown in Table 2, which shows the average value of the normalization error and provides the processing time in milliseconds for comparing the computational complexity. To evaluate the effectiveness of normalization error, all experiments are carried out in the same environment.

As shown in Table 2 the comparison of edge-based methods with motion-based R-VP, Gabor-based Kong, and convolutional neural network-based HrNet with the proposed LCS is carried out. LCS vanishing point detection applies both clustering and motion vectors, with the highest accuracy (minimum mean error). The proposed method also has excellent processing time. Among them, the FPS measurement can reach 86 when the resolution is less than HD (1280 × 720). The proposed method achieves an average distance error of 0.0023716 (5.06 pixels).

As shown in Table 3, the performance of the threshold method and the perpendicular method under three light environments is compared. The data for bright days and tunnels are extracted from the Jiqing Expressway dataset and evaluated by the average error. Since nighttime videos are not included in the real dataset, additional tests are performed on nighttime scenes. As shown in Table 3, under three different lighting conditions, the metric error using the angle threshold is smaller than that of the vertical distance, and the error of the threshold method is very similar. This demonstrates the generalizability of the proposed method.

Ablation experiments are performed on the proposed method, and we use three different line segment detection methods, including Hough line segment detection, LSD, and Canny edge detector. At the same time, vanishing point detection based on the MST clustering method Hwang47 is added for clustering comparison. In order to compare the clustering effect, a certain proportion of unstructured road AVA landscape dataset and other datasets are added as support. For experimental accuracy, we use mean squared error (MSE) for evaluation. MSE is the mean of the mean distance of the vanishing point from the ground truth.

As shown in Table 4, the vanishing point detection method using similarity clustering based on LSD detection and optimization is better than other methods. The Hough transform has high time and space complexity, and only straight lines can be determined during the detection process; furthermore, the orientation and length information of the line segment is lost. The Canny edge detector extracts gradient features, but sometimes there are high gradient features that affect cluster convergence and find the best vanishing point because they usually contain a lot of noise. Table 4 shows that the method using LSD edge detector and similarity clustering outperforms other combinations of edge detection and clustering methods.

To verify that the proposed algorithm can adapt to the requirements of autonomous driving, we use a real car model (BYD Qin) provided by Autocore.ai. The onboard computer was modified to combine AGX Xavier with the car, and the algorithm was transplanted into the real car for real-time testing on the test road in Lishui District, Nanjing City, Jiangsu Province, China. Figure 7 shows the results of driving under different road conditions using our method, which gives an example of vanishing point detection.

Some examples of the proposed method are shown in Fig. 7, and the examples show that the method works well in various road conditions, but the tunnel situation suffers from the phenomenon of vanishing point drift. Note that the vanishing point in the road condition of the tunnel in Fig. 7 is marked in red, indicating that the test failed. The analysis is that due to the large light source difference in the tunnel, the cluster similarity cannot be correctly matched. The remaining seven regular road conditions were observed that the detected vanishing points overlapped visually with the intersection of the road boundary, proving the accuracy of the proposed method. The robustness and generalization of the proposed method can be seen in the actual measurement of seven conventional road conditions.

The biggest role of the vanishing point is to delineate the drivable area for the automatic driving system. There are three types of curves, the first one is a regular curve as in Fig. 8(a). The second type is the premise of excluding the traffic rules, when the curve is driven, the area centered on the vehicle is drivable. At this time there is no restriction on the driveable area, so the vanishing point will exist directly in front of the front of car when the curve scenario is as in Fig. 8b. Another situation is when in a large intersection, as in Fig. 8c, the vanishing point will be in the closest road position to the direction of vehicle travel.

In general, from observations on structured road datasets (Nanjing Road Survey and Jiqing Expressway datasets) and unstructured road datasets (AVA landscape and SIFT flow datasets, etc.), the method can effectively detect vehicles When to drive the vanishing point normally; however, when encountering a tunnel, the vanishing point sometimes cannot be detected correctly.

Conclusion

In this paper, we investigate a VP detection method based on similarity clustering and motion constraints under row space features. Filter out redundant lines to collect corner points, perform similarity clustering in the row space, and only limit the motion vectors of the corner points that meet the conditions in the candidate row, which greatly reduces the amount of calculation. The FPS detection rate can reach 86, which can fully meet the requirements of real-time detection of autonomous driving.

The method has been verified by experiments and can quickly find the vanishing point even when there is no prior information on scene and camera parameters in the environment, and there are problems such as partial occlusion, background clutter, viewpoint changes, and lighting effects. Situations that cannot be detected correctly are only for the tunnel situation that occurs on structured roads. This shows that the proposed method is both robust and accurate. From the computational complexity point of view, the proposed approach can be implemented in real-time, enabling fast detection while ensuring accuracy. Our method plays an important role in drivable area detection, improving the accuracy of lane line detection, and autonomous driving applications.

Future work will explore how to overcome tunnel conditions and identify drivable areas with vanishing points, assisting lane line detection for area detection.

Data availability

All data generated or analyzed during this study are included in this published article (and its Supplementary Information files).

References

Hearn, D. & Baker, M. P. Chapter 12: Three-Dimensional Viewing. In Computer Graphics C Version 2nd edn (ed. Sufi, G.) 446–447 (Pearson, 1997).

Zhang, G. et al. On-orbit space camera self-calibration based on the orthogonal vanishing points obtained from solar panels. Meas. Sci. Technol. 29(6), 065013 (2018).

Zhu, Z., Liu, Q., Wang, X., Pei, S. & Zhou, F. Distortion correction method of a zoom lens based on the vanishing point geometric constraint. Meas. Sci. Technol. 30(10), 105402 (2019).

Pei, L. et al. Ivpr: An instant visual place recognition approach based on structural lines in manhattan world. IEEE Trans. Instrum. Measur. 5, 85 (2019).

Yoo, J. H., Lee, S.-W., Park, S.-K. & Kim, D. H. A robust lane detection method based on vanishing point estimation using the relevance of line segments. IEEE Trans. Intell. Transp. Syst. 18(12), 3254–3266 (2017).

Ji, Y., Yamashita, A. & Asama, H. Rgb-d slam using vanishing pointand door plate information in corridor environment. Intel. Serv. Robot. 8(2), 105–114 (2015).

Haris, M., Shakhnarovich, G. & Ukita, N. Deep back-projection networks for super-resolution. In Proceedings of the IEEE conference on computer vision and pattern recognition 1664–1673 (2018).

Chang, C.-K., Jiaping, Z. & Laurent, I. DeepVP: Deep learning for vanishing point detection on 1 million street view images. In 2018 IEEE International Conference on Robotics and Automation (ICRA) 1–8 (2018).

Xingxin, L., Zhu, L., Yu, Z., & Wang, Y. Adaptive auxiliary input extraction based on vanishing point detection for distant object detection in high-resolution railway scene. In 2019 14th IEEE International Conference on Electronic Measurement & Instruments (ICEMI) 522–527 (2019).

Zeng, Z., Mengyang, Wu., Zeng, W. & Chi-Wing, Fu. Deep recognition of vanishing-point-constrained building planes in urban street views. IEEE Trans. Image Process. 29, 5912–5923 (2020).

Choi, H.-S., Keunhoi, A. & Myung-joo, K. Regression with residual neural network for vanishing point detection. Image Vis. Comput. 91, 58 (2019).

Liu, Y.-B., Zeng, M. & Meng, Q.-H. Unstructured road vanishing point detection using convolutional neural networks and heatmap regression. IEEE Trans. Instrum. Meas. 70, 1–8 (2021).

Yu, Z. & Lidong, Z. Roust vanishing point detection based on the combination of edge and optical flow. In 2019 4th Asia-Pacific Conference on Intelligent Robot Systems (ACIRS) 184–188 (2019).

Yu, Z., Zhu, L. & Guoyu, Lu. An improved phase correlation method for stop detection of autonomous driving. IEEE Access 8, 77972–77986 (2020).

Jang, J., Jo, Y., Shin, M. & Paik, J. Camera orientation estimation using motion-based vanishing point detection for advanced driver-assistance systems. IEEE Trans. Intell. Transp. Syst. 22, 6286–6296 (2021).

Jo, Y., Jinbeum, J., & Joonki, P. Camera orientation estimation using motion based vanishing point detection for automatic driving assistance system. In 2018 IEEE International Conference on Consumer Electronics (ICCE) 1–2 (2018).

Stentiford, F. Attention-based vanishing point detection. In 2006 International Conference on Image Processing 417–420 (2006).

Barat, M., Saeid, S., Alireza, M. & Hossein, N.-p. Texture-orientation based vanishing point detection using genetic algorithm. In 6th International Symposium on Telecommunications (IST) 806–810 (2012).

Rasmussen, C. Texture-based vanishing point voting for road shape estimation. In BMVC (2004).

Cheng, H., Nanning, Z., Chong, S., & van de Huub, W. Vanishing point and gabor feature based multi-resolution on-road vehicle detection. ISNN (2006).

Nieto, M. & Salgado, L. Real-time vanishing point estimation in road sequences using adaptive steerable filter banks. In Advanced Concepts for Intelligent Vision Systems (Lecture Notes in Computer Science). Springer 840–848 (2007). https://doi.org/10.1007/978-3-540-74607-2_76.

Lutton, E., Maiître, H. & Lopez-Krahe, J. Contribution to the determination of vanishing points using Hough transform. IEEE Trans. Pattern Anal. Mach. Intell. 16(4), 430–438. https://doi.org/10.1109/34.277598 (1994).

McLean, G. F. & Kotturi, D. Vanishing point detection by line clustering. IEEE Trans. Pattern Anal. Mach. Intell. 17(11), 1090–1095. https://doi.org/10.1109/34.473236 (1995).

Shufelt, J. A. Performance evaluation and analysis of vanishing point detection techniques. IEEE Trans. Pattern Anal. Mach. Intell. 21, 282–288 (1999).

Collins, R. T. & Weiss, R. S. Vanishing point calculation as a statistical inference on the unit sphere. In Proceedings of ICCV 400–403 (1990). https://doi.org/10.1109/ICCV.1990.139560.

Rother, C. A new approach for vanishing point detection in architectural environments. BMVC 5, 147 (2000).

Cantoni, V., Lombardi, L., Porta., M. & Sicard, N. Vanishing point detection: Representation analysis and new approaches. In Proceedings of CIAP 90–94 (2001).

Borji, A. Vanishing point detection with convolutional neural networks. ArXiv:abs/1609.00967 (2016).

Chang, C.-K., Zhao, J., & Itti, L. DeepVP: Deep learning for vanishing point detection on 1 million street view images. In Proceedings of ICRA 1–8 (2018).

Shi, J. & Carlo, T. Good features to track. In 1994 Proceedings of IEEE Conference on Computer Vision and Pattern Recognition 593–600 (1994).

Lucas, B. D. & Takeo, K. An iterative image registration technique with an application to stereo vision. In IJCAI (1981).

Masoud, H., Jalili, S. & Hasheminejad, S. M. H. Dynamic clustering using combinatorial particle swarm optimization. Appl. Intell. https://doi.org/10.1007/s10489-012-0373-9 (2012).

Pappas, T. N. An adaptive clustering algorithm for image segmentation. In [1988 Proceedings] Second International Conference on Computer Vision 310–315 (1988).

Mújica-Vargas, D., Gallegos-Funes, F. J., Rosales-Silva, A. J. & de Jesús, R. J. Robust C-prototypes algorithms for color image segmentation. EURASIP J. Image Video Process. https://doi.org/10.1186/1687-5281-2013-63 (2013).

Ray, S. & Turi, R. H., Determination of number of clusters in K-means clustering and application in colour image segmentation. In International Conference on Advances in Pattern Recognition and Digital Techniques 2729 (1999).

Bezdek, J. C. Pattern recognition with fuzzy objective function algorithms. In Advanced Applications in Pattern Recognition (1981).

von Gioi, R. G., Jérémie, J., Jean-Michel, M. & Gregory, R. LSD: A fast line segment detector with a false detection control. IEEE Trans. Pattern Anal. Mach. Intell. 32, 722–732 (2010).

Hsu, Y.-C., Zhaoyang, L. & Zsolt, K. Learning to cluster in order to Transfer across domains and tasks. ArXiv:abs/1711.10125 (2018).

Murray, N., Marchesotti, L., & Perronnin, F. AVA: A large-scale database for aesthetic visual analysis. In Proceedings of CVPR. Washington, DC, USA: IEEE Computer Society 2408–2415 (2012).

Liu, C., Yuen, J. & Torralba, A. Nonparametric scene parsing via label transfer. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2368–2382 (2011).

Jiqing Expressway Dataset. https://github.com/vonsj0210/Multi-Lane-Detection-Dataset-with-Ground-Truth. (Accessed on 8 February 2021)

Moon, Y. Y., Zong, W. G. & Gi-Tae, H. Vanishing point detection for self-driving car using harmony search algorithm. Swarm Evol. Comput. 41, 111–119 (2018).

Khac, C. N., Yeon-seok, C., Ju, H. P. & Ho-Youl, J. A robust road vanishing point detection adapted to the real-world driving scenes. Sensors (Basel, Switzerland) 21, 58 (2021).

Kong, H., Audibert, J.-Y. & Ponce, J. General road detection from a single image. IEEE Trans. Image Process. 19(8), 2211–2220 (2010).

Yang, W., Fang, B. & Tang, Y. Y. Fast and accurate vanishing point detection and its application in inverse perspective mapping of structured road. IEEE Trans. Syst. Man Cybern. Syst. 48(5), 755–766 (2016).

Ding, W. & Li, Y. Efficient vanishing point detection method in complex urban road environments. IET Comput. Vis. 9, 549–558 (2015).

Hwang, H. J., Gangjoon, Y. & Sang, M. Y. Optimized clustering scheme-based robust vanishing point detection. IEEE Trans. Intell. Transp. Syst 21, 199–208 (2020).

Richard, O. D. & Peter, E. H. Use of the hough transformation to detect lines and curves in pictures. Commun. ACM 15(1), 11–15 (1972).

Matas, J., Galambos, C. & Kittler, J. Robust detection of lines using the progressive probabilistic hough transform. Comput. Vis. Image Underst. 78(1), 119–137 (2000).

Furukawa, Y. & Shinagawa, Y. Accurate and robust line segment extraction by analyzing distribution around peaks in hough space. Comput. Vis. Image Underst. 92(1), 1–25 (2003).

James, H. E. & Richard, M. G. Ecological statistics of gestalt laws for the perceptual organization of contours. J. Vis. 2(4), 5–5 (2002).

Stephen, M. S. & Michael, B. J. Susan—a new approach to low level image processing. Int. J. Comput. Vis. 23(1), 45–78 (1997).

Xiaohu, L., Jian, Y., Kai, L., & Li, L. Cannylines: A parameter-free line segment detector. In 2015 IEEE International Conference on Image Processing (ICIP) 507–511. IEEE (2015).

Lin, Y. et al. Deep vanishing point detection: Geometric priors make dataset variations vanish. IEEE/CVF Conf. Comput. Vis. Pattern Recogn. 2022, 6093–6103 (2022).

Chen, G., Kai, C., Lijun, Z., Liming, Z. & Alois, K. VCANet: Vanishing-point-guided context-aware network for small road object detection. Autom. Innov. 2, 89 (2021).

Author information

Authors and Affiliations

Contributions

Credit contribution statement: Q.Y. Conceptualization, Data curation, Investigation, Methodology, Validation, Visualization, Writing, Data curation, Project administration, Resources, Supervision – original draft Y.M. Supervision, Validation, Writing – review & editing L.L. Supervision, Writing – review & editing Y.G. Investigation J.T. Investigation Z.H Investigation R.J. Investigation All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, Q., Ma, Y., Li, L. et al. A fast vanishing point detection method based on row space features suitable for real driving scenarios. Sci Rep 13, 3088 (2023). https://doi.org/10.1038/s41598-023-30152-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-30152-7